27 Unique Dev Challenges: A Recent Study Explored the Top Challenges Faced by LLM Developers

I came across a recent research paper that analysed questions posted on the OpenAI developer forum.

Introduction

Specifically, the study involves crawling and analysing 29,057 relevant questions from a popular OpenAI developer forum. The researchers first examine the popularity and difficulty of these questions. Following this, they manually analyse 2,364 sampled questions.

Quick Overview

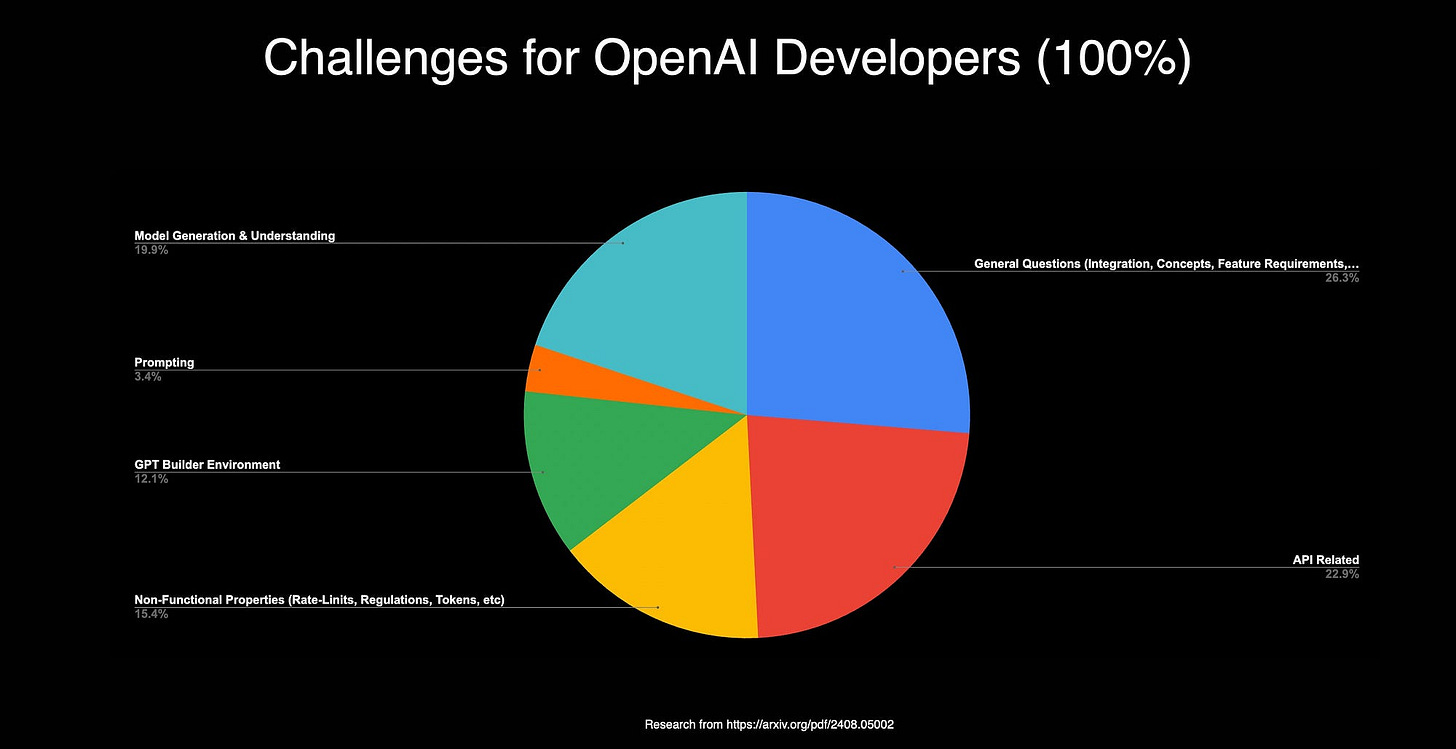

The accompanying chart, compiled from the study, highlights six overarching areas. API-related queries were particularly prevalent, largely due to the complexity involved in configuring the API for specific interactions.

Additionally, a significant number of general questions focused on integrating LLMs with existing business systems.

The largest share of the total challenges, 26.3%, falls under the category of General Questions. Within this category, the subcategory Integration with Custom Applications represents the largest proportion, accounting for 64.6% of General Questions.
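Note that the 64.6% is a share of General Questions, not of all challenges. A quick calculation makes the overall figure concrete; it assumes both percentages are taken over the same underlying sample, which the summary here does not state explicitly.

```python
# Shares as reported in the study summary.
general_questions_share = 0.263     # General Questions, as a fraction of all challenges
integration_within_general = 0.646  # Integration with Custom Applications, within General Questions

# Share of all challenges attributable to integration questions.
integration_share_of_total = general_questions_share * integration_within_general

print(f"{integration_share_of_total:.1%}")  # prints 17.0%
```

In other words, roughly one in six of all the challenges concerns integrating LLMs into custom applications.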

Research Taxonomy

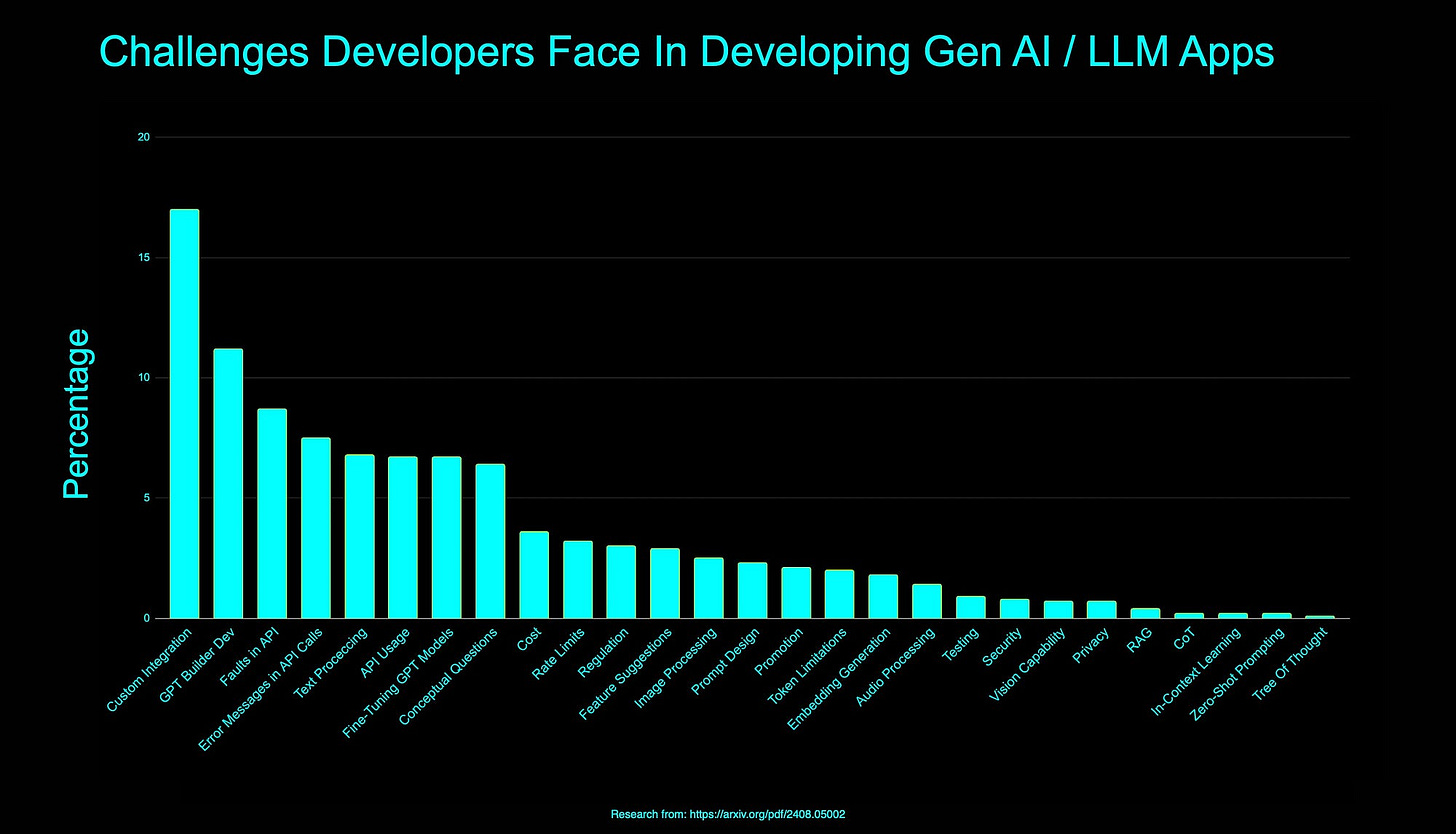

As the accompanying figure shows, a manual analysis of 2,364 sampled questions led to a taxonomy of challenges with 27 distinct categories, such as Prompt Design, Integration with Custom Applications, and Token Limitation.

Based on this taxonomy, the study also summarizes key findings and provides actionable implications for LLM stakeholders, including developers and providers.

Error Messages In API Calls

This subcategory covers the various error messages developers encounter when working with LLM APIs.

For example, developers might face request errors and data value capacity limit errors during API calls for image editing.

Additionally, issues related to the OpenAI server, such as gateway timeout errors, may arise. This subcategory represents 7.5% of the total challenges identified.
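Server-side failures such as gateway timeouts are often transient, so a common mitigation is to retry with exponential backoff. The following is a minimal sketch, not something taken from the study: `TransientAPIError` and `call_with_retries` are hypothetical names, and `call` stands for whatever function actually issues the API request.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical stand-in for a retryable failure such as a gateway timeout."""


def call_with_retries(call, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run `call()`, retrying on TransientAPIError with exponential backoff.

    The base delay doubles after each failed attempt, and random jitter is
    added so that many clients do not all retry at the same instant.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
```

Request errors caused by malformed input are a different matter: retrying the same request is unlikely to help, so only errors known to be transient should be mapped to the retryable exception.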

The Six Challenges of Working With LLMs

Automating Task Processing: LLMs can automate tasks like text generation and image recognition, unlike traditional software that requires manual coding.

Dealing with Uncertainty: LLMs produce variable and sometimes unpredictable outputs, requiring developers to manage this uncertainty.

Handling Large-Scale Datasets: Developing LLMs involves managing large datasets, necessitating expertise in data preprocessing and resource efficiency.

Data Privacy and Security: LLMs require extensive data for training, raising concerns about ensuring user data privacy and security.

Performance Optimisation: Optimising LLM performance, particularly in output accuracy, differs from traditional software optimisation.

Interpreting Model Outputs: Understanding and ensuring the reliability of LLM outputs can be complex and context-dependent.

The study's results show that 54% of the forum questions have fewer than three replies, suggesting that they are typically challenging to address.

Developer Pain

The introduction of GPT-3.5 and ChatGPT in November 2022, followed by GPT-4 in March 2023, accelerated the growth of the LLM developer community, markedly increasing the number of posts and users on the OpenAI developer forum.

The challenges faced by LLM developers are multifaceted and diverse, encompassing 6 categories and 27 distinct subcategories.

Challenges such as API call costs, rate limitations, and token constraints are closely associated with the development and use of LLM services.

Developers frequently raise concerns about API call costs, which are affected by the choice of model and the number of tokens used in each request.
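To illustrate how model choice and token counts drive cost, here is a rough sketch of a per-request estimate. The model names and prices are placeholders invented for the example, and the assumption that input and output tokens are priced separately per 1,000 tokens should be checked against the provider's current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Price per 1,000 tokens in USD. The values used below are placeholders."""
    input_per_1k: float
    output_per_1k: float


# Hypothetical models and prices, for illustration only.
PRICING = {
    "small-model": ModelPricing(input_per_1k=0.0005, output_per_1k=0.0015),
    "large-model": ModelPricing(input_per_1k=0.01, output_per_1k=0.03),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its token counts."""
    price = PRICING[model]
    input_cost = (input_tokens / 1000) * price.input_per_1k
    output_cost = (output_tokens / 1000) * price.output_per_1k
    return input_cost + output_cost


# Compare the same request across the two placeholder models.
for name in PRICING:
    print(name, round(estimate_cost(name, input_tokens=1200, output_tokens=300), 6))
```

Running the same token counts through several models like this gives a quick sense of the cost-performance trade-off before committing to one.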

Rate limitations, designed to ensure service stability, require developers to understand and manage their API call frequencies. Token limitations present additional hurdles, particularly when handling large datasets or extensive context.
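One way to manage call frequency on the client side is a sliding-window limiter that delays a request when too many have been sent recently. This is a simplified, single-threaded sketch; `SlidingWindowLimiter` is a hypothetical helper, and the actual limits to configure depend on the provider and account tier.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` within any `period` seconds (client side)."""

    def __init__(self, max_calls, period, clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.sleep = sleep
        self.sent = deque()  # timestamps of recent calls, oldest first

    def acquire(self):
        """Block until another call fits in the window, then record it."""
        now = self.clock()
        # Forget calls that have already left the window.
        while self.sent and now - self.sent[0] >= self.period:
            self.sent.popleft()
        if len(self.sent) >= self.max_calls:
            # Window is full: wait until the oldest recorded call expires.
            self.sleep(self.period - (now - self.sent[0]))
            now = self.clock()
            self.sent.popleft()
        self.sent.append(now)
```

Calling `limiter.acquire()` immediately before each API request keeps the client under the configured rate without needing to react to rejected calls.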

Therefore, LLM providers should develop tools to help developers accurately calculate and manage token usage, including cost optimisation strategies tailored to various models and scenarios. Detailed guidelines on model selection, including cost-performance trade-offs, will aid developers in making informed decisions within their budget constraints.

Additionally, safety and privacy concerns are critical when using AI services. Developers must ensure their applications adhere to safety standards and protect user privacy.

OpenAI should continue to promote its free moderation API, which helps reduce unsafe content in completions by automatically flagging and filtering potentially harmful outputs.
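The flag-and-filter pattern can be sketched as a small gate around the model's output. To keep the example self-contained it does not call any real API: `is_flagged` is a hypothetical callable that, in practice, would wrap the provider's moderation endpoint, and `toy_moderation` is only a stand-in so the sketch runs.

```python
from typing import Callable

FALLBACK_MESSAGE = "Sorry, I can't help with that."


def safe_reply(completion: str, is_flagged: Callable[[str], bool]) -> str:
    """Return the completion only if the moderation check does not flag it."""
    if is_flagged(completion):
        return FALLBACK_MESSAGE
    return completion


def toy_moderation(text: str) -> bool:
    """Toy stand-in for a real moderation call; matches a placeholder term."""
    blocked_terms = {"example-banned-term"}
    lowered = text.lower()
    return any(term in lowered for term in blocked_terms)


print(safe_reply("Here is a harmless answer.", toy_moderation))
```

Passing the check in as a parameter keeps the gate independent of any particular moderation service and makes it easy to test.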

Human review of outputs is essential in high-stakes domains and code generation to account for system limitations and verify content correctness.

Limiting user input text and restricting the number of output tokens can also help mitigate prompt injection risks and misuse, and these measures should be integrated into best practices for safe AI deployment.
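As a sketch of what these two limits might look like in code, the helper below trims user input to a fixed length and caps the number of output tokens in the request. The limits are arbitrary example values, `build_request` is a hypothetical helper, and the payload field names are modelled on common chat-style APIs, so they should be checked against the provider's documentation.

```python
MAX_INPUT_CHARS = 2000   # assumed cap on user-supplied text
MAX_OUTPUT_TOKENS = 256  # assumed cap on generated tokens


def build_request(user_text: str, system_prompt: str) -> dict:
    """Build a chat-style request payload with bounded input and output."""
    trimmed = user_text.strip()[:MAX_INPUT_CHARS]
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": trimmed},
        ],
        "max_tokens": MAX_OUTPUT_TOKENS,
    }


request = build_request("  What is a token?  ", "You are a concise assistant.")
print(request["messages"][1]["content"])
```

Bounding both sides narrows the room an attacker has to work with, but it does not remove the risk on its own, so it complements rather than replaces moderation and human review.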

In Conclusion

Examining relevant posts on the OpenAI developer forum makes it evident that LLM development is rapidly gaining traction, with developers encountering more complex issues than in traditional software development.

The study aims to analyse the underlying challenges reflected in these posts, investigating trends and problem difficulty levels on the LLM developer forum.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.