5 Things NLU Engines Are Really Good At

While experimenting with the predictive and classification capabilities of Large Language Models (LLMs), I realised how effective NLU engines have become.

Granted, with LLMs the hype and general focus are on generative rather than predictive capabilities. But predictive capabilities are immensely important, and trickier to get right.

Chatbots have faced the rigours of production implementations and customer-facing conversations for a while now, with NLU engines classifying user utterances in real time (intent detection) while simultaneously extracting entities.

Here are five areas in which NLU Engines are exceptionally well optimised…

1️⃣ Data Entry Is Easy

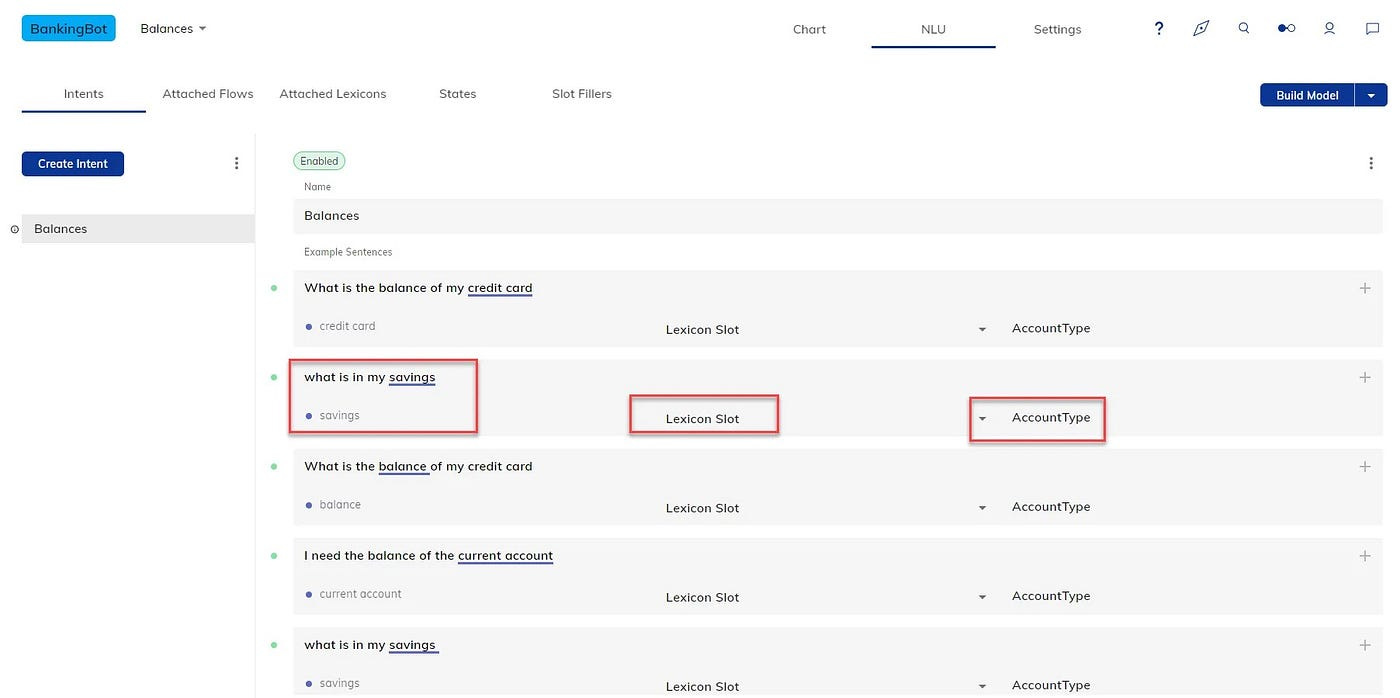

Most chatbot development frameworks offer a no-code GUI for NLU data entry. Data can be entered with a point-and-click approach, with no need to format it into a set JSON or CSV structure and import it, only to be met by a slew of exceptions or errors.

Due to the lightweight nature of NLU Engine data exports, migration between chatbot frameworks and NLU engines is not daunting at all.
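As a rough illustration of why lightweight exports keep migration manageable, the sketch below assumes a hypothetical in-memory format (intent name mapped to example utterances) and round-trips it through JSON and CSV using only the Python standard library. The field names `text` and `intent` are assumptions; every framework defines its own schema, so a real migration would map these fields onto that schema.

```python
import csv
import io
import json

# Hypothetical NLU training data: intent name -> example utterances.
# Real frameworks each use their own schema; this is only an illustration.
training_data = {
    "check_balance": ["what is my balance", "how much money do I have"],
    "transfer_funds": ["send 50 dollars to Anna", "transfer money to savings"],
}


def to_json(data: dict[str, list[str]]) -> str:
    """Serialise as a flat list of {"text", "intent"} records."""
    records = [
        {"text": text, "intent": intent}
        for intent, examples in data.items()
        for text in examples
    ]
    return json.dumps(records, indent=2)


def to_csv(data: dict[str, list[str]]) -> str:
    """Serialise as a two-column CSV with a text,intent header."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["text", "intent"])
    for intent, examples in data.items():
        for text in examples:
            writer.writerow([text, intent])
    return buffer.getvalue()


def from_csv(text: str) -> dict[str, list[str]]:
    """Rebuild the intent -> examples mapping from the CSV export."""
    data: dict[str, list[str]] = {}
    for row in csv.DictReader(io.StringIO(text)):
        data.setdefault(row["intent"], []).append(row["text"])
    return data


if __name__ == "__main__":
    exported = to_csv(training_data)
    print(exported)
    # The round trip loses nothing, which is what makes migration cheap.
    assert from_csv(exported) == training_data
```

Because each record is just an utterance and a label, moving between formats is mostly a matter of renaming fields.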

2️⃣ Intents Are Flexible

Intents are really just classes. Data classification, and with it the need to assign text to one or more classes (labels, or intents), will never go away.
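To show that an intent is simply a label that text can be assigned to, possibly alongside other labels, here is a deliberately naive multi-label classifier. It scores an utterance against hypothetical example utterances per intent using word overlap (Jaccard similarity) and returns every intent above an assumed threshold. Production NLU engines use trained models rather than word overlap; this is only a sketch of the class-assignment idea.

```python
import re

# Hypothetical intents: each one is just a label with example utterances.
INTENTS = {
    "check_balance": ["what is my balance", "how much money is in my account"],
    "transfer_funds": ["transfer money to my savings", "send money to a friend"],
    "greet": ["hello", "hi there", "good morning"],
}


def tokens(text: str) -> set[str]:
    """Lower-cased word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def score(utterance: str, examples: list[str]) -> float:
    """Best Jaccard overlap between the utterance and any training example."""
    u = tokens(utterance)
    best = 0.0
    for example in examples:
        e = tokens(example)
        if u | e:
            best = max(best, len(u & e) / len(u | e))
    return best


def classify(utterance: str, threshold: float = 0.25) -> list[str]:
    """Return every intent whose score clears the (assumed) threshold,
    highest-scoring first; an empty list means no intent matched."""
    scored = {intent: score(utterance, ex) for intent, ex in INTENTS.items()}
    return sorted(
        (intent for intent, s in scored.items() if s >= threshold),
        key=lambda intent: -scored[intent],
    )
```

For example, `classify("hi there, what is my balance")` assigns both `check_balance` and `greet`, while an unrelated utterance such as `"what's the weather"` gets no intent at all, which a bot could treat as out of scope.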

Below is a list of recent advancements in terms of intents:

3️⃣ Entities Have Evolved

While intents can be seen as verbs, entities can be seen as nouns. Accurate entity detection plays a crucial role in not re-prompting the user, since it allows entities to be gathered from highly unstructured input on the first pass.

Read more here:

The Emergence Of Entity Structures In Chatbots

And Why It Is Important For Capturing Unstructured Data Accurately & Efficiency
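The sketch below illustrates first-pass entity capture for a hypothetical flight-booking intent: it pulls as many entities as it can from a single utterance and reports only the slots that still need a prompt. The slot names and regular expressions are assumptions chosen for brevity; NLU engines typically rely on trained entity extractors, which cope with far messier input than these patterns.

```python
import re

# Hypothetical slots for a flight-booking intent, each with a simple pattern.
# The city patterns expect a capitalised name after a lowercase "from"/"to".
SLOT_PATTERNS = {
    "origin": re.compile(r"\bfrom\s+([A-Z][a-z]+)"),
    "destination": re.compile(r"\bto\s+([A-Z][a-z]+)"),
    "date": re.compile(
        r"\b(monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b",
        re.IGNORECASE,
    ),
}


def extract_entities(utterance: str) -> dict[str, str]:
    """Collect every slot value the patterns can find in one pass."""
    found: dict[str, str] = {}
    for slot, pattern in SLOT_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            found[slot] = match.group(1)
    return found


def slots_to_prompt(utterance: str) -> list[str]:
    """Only the slots still missing after the first pass need a re-prompt."""
    found = extract_entities(utterance)
    return [slot for slot in SLOT_PATTERNS if slot not in found]
```

For "Book me a flight to Paris", only `origin` and `date` remain, so the bot re-prompts for those two rather than asking for all three again.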

4️⃣ Training Time

Recently I trained a multi-label model using Google Cloud Vertex AI. Training turned out to take quite long: for 11,947 training data items, the model training time was 4 hours and 48 minutes.

Chatbot development frameworks have done significant work in shortening NLU model training time, with Rasa, for example, having implemented incremental training.

5️⃣ Easy & Local Installations

NLU engines are lightweight, often open-source, and can easily be installed in any environment, even on workstations. The open-source Rasa NLU API is easy to configure and train, and there are other easily accessible NLU tools to get started with.

To mention a few:

If you are looking to get into natural language understanding, there are easy and accessible ways of doing so while coming to grips with the underlying principles, without having to resort to expensive compute, LLMs or highly technical ML pipelines.
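Because the open-source Rasa stack mentioned above can run on a workstation, a locally trained model can be queried over HTTP. The sketch assumes a Rasa server started with its HTTP API enabled (for example `rasa run --enable-api`) on the default port 5005, and posts one utterance to the `/model/parse` endpoint; confirm the endpoint and response shape against the Rasa HTTP API documentation for your version. Only the Python standard library is used, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: a local Rasa server with its HTTP API enabled on port 5005.
RASA_PARSE_URL = "http://localhost:5005/model/parse"


def build_parse_request(text: str, url: str = RASA_PARSE_URL) -> urllib.request.Request:
    """Build a POST request asking the NLU model to parse one utterance."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse(text: str, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON reply.
    Requires the local server to be running; raises URLError otherwise."""
    with urllib.request.urlopen(build_parse_request(text), timeout=timeout) as response:
        return json.load(response)
```

With a server running, `parse("I want to check my balance")` should return a dictionary whose `intent` and `entities` fields hold the predicted intent and any extracted entities.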


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.