A Comprehensive Survey of Large Language Models (LLMs)

This is probably the most comprehensive survey of all things related to LLMs.

As the LLM and Conversational AI landscape expands in terms of products, vendors and functionality, it becomes near impossible to keep track of all market dimensions in detail.

Key Points From The Study

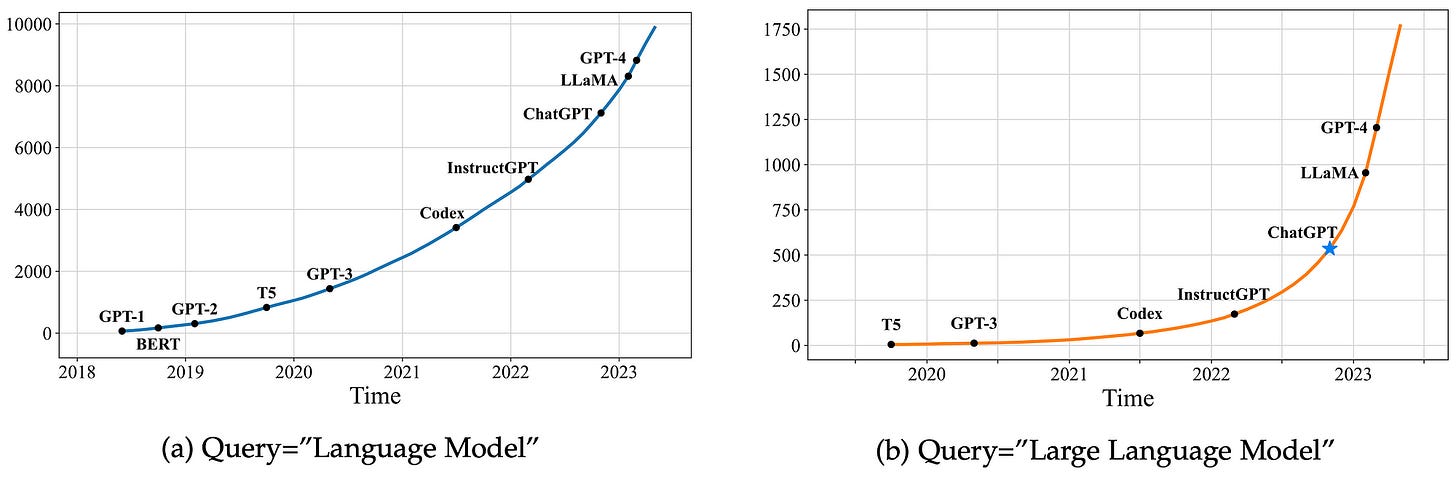

The average number of published arXiv papers containing “large language model” in the title or abstract went from 0.40 per day to 8.58 per day.

Despite the progress and impact of LLMs, the underlying principles of LLMs are still not well explored.

Open-Sourced LLMs acts as an enabler for LLM expansion, customisation and growth. Meta’s contribution should not be underestimated.

The research work conducted by leveraging LLaMA has been significant. A large number of researchers have extended LLaMA models by either instruction tuning or continual pre-training.

Three Emergent Abilities for LLMs are In-Context Learning (ICL), Instruction Following & Step-By-Step Reasoning (CoT).

Key Practices related to LLMs are: Scaling, Training, Ability Eliciting, Alignment Tuning, Tools.

Adaption Of LLMs include: Instruction Tuning, Alignment Tuning, Memory-Efficient Model Adaptation, etc.

Three main approaches to Prompt Engineering are: In-Context Learning, Chain-Of-Thought, Planning.

ICL Prompt Engineering Implementations: KATE, EPR, SG-ICL, APE, Structured Prompting, GlobalE & LocalE.

CoT Prompt Engineering Implementations: Complex CoT, Auto-CoT, Selection-Inference, Self-consistency, DIVERSE, Rationale-augmented ensembles.

Planning Prompt Engineering Implementations: Least-to-most prompting, DECOMP, PS, Faithful CoT, PAL, HuggingGPT, AdaPlanner, TIP, RAP, ChatCoT, ReAct, Reflexion, Tree of Thoughts.

Research Activity

The two graphs below show the cumulative numbers of arXiv papers that contain the keyphrases “language model” (since June 2018) and “large language model” (since October 2019), respectively. The growth since 2019 in papers published related to LLMs is staggering.

Emergent Abilities of LLMs

The study identifies three typical emergent abilities for LLMs which is very insightful…

In-Context Learning (ICL)

The concept of In-Context Learning (ICL) was formally introduced by GPT-3. The assumption of ICL is that if the LLM has been provided with a prompt holding one or more task demonstrations, the model is more likely to generate a correct answer.

Among the GPT- series models, the 175B GPT-3 model exhibited a strong ICL ability in general.

Instruction Following

By fine-tuning with a mixture of multi-task datasets formatted via natural language descriptions (called instruction tuning), LLMs are shown to perform well on unseen tasks that are also described in the form of instructions.

With instruction tuning, LLMs are enabled to follow the task instructions for new tasks without using explicit examples, thus having an improved generalisation ability.

Step-by-step Reasoning

With the Chain-Of-Thought (CoT) prompting strategy , LLMs can solve complex tasks by utilising the prompting techniques that involves intermediate reasoning steps for deriving the final answer.

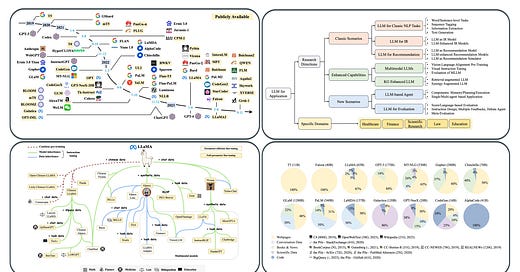

LLM Landscape Development

The image above is a timeline of existing LLMs which have a size greater than 10B. The timeline is established according to the model release dates. The models marked with a yellow background, are all publicly available.

The image above shows an evolutionary graph of some of the research work conducted on LLaMA.

The collection of LLaMA models were introduced by Meta AI in February 2023. Consisting of four sizes (7B, 13B, 30B and 65B). Since then, LLaMA has attracted extensive attention from both research and industry communities.

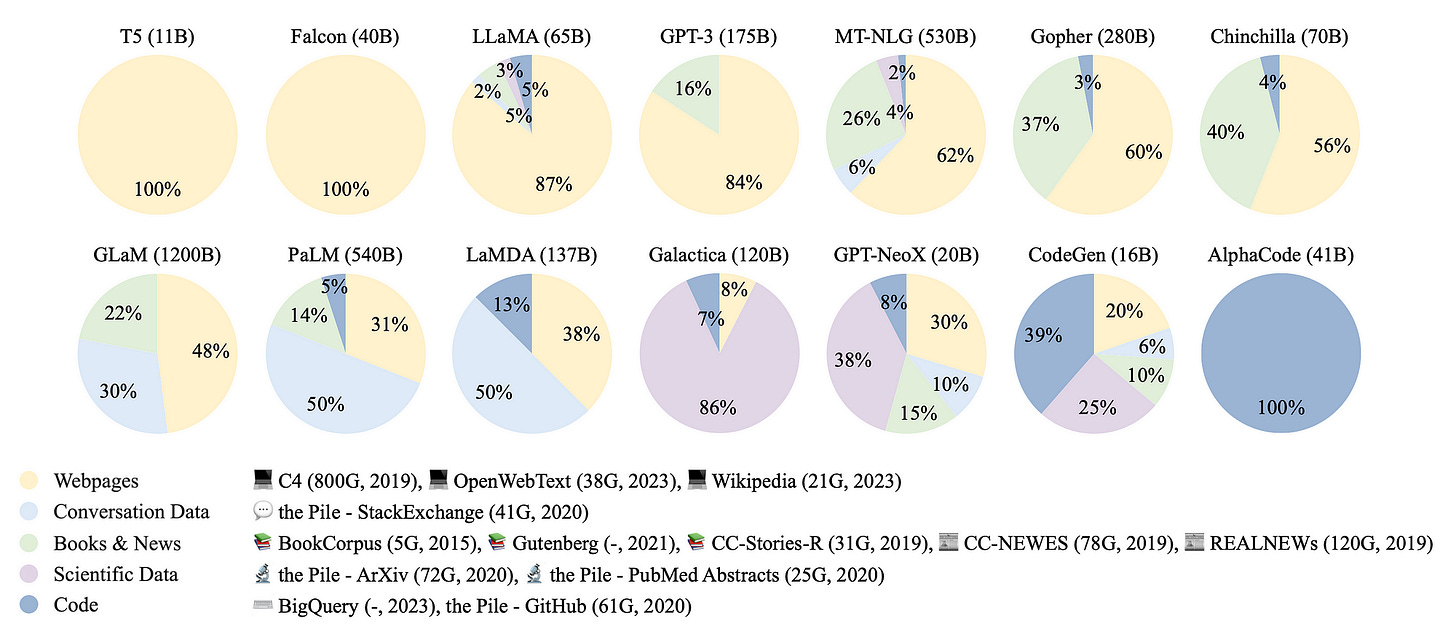

Training Data Sources

The image below shows the ratios of various data sources in the pre-training data for existing LLMs.

The data sources of pre-training corpus can be broadly categorised into two types:

General Data and

Specialised Data

General data, such as webpages, books, and conversational text, is utilised by most LLMs due to its large, diverse, and accessible nature, which can enhance the language modelling and generalisation abilities of LLMs.

In light of the impressive generalisation capabilities exhibited by LLMs, there are also studies that extend their pre-training corpus to more Specialised datasets, such as multilingual data, scientific data, and code.

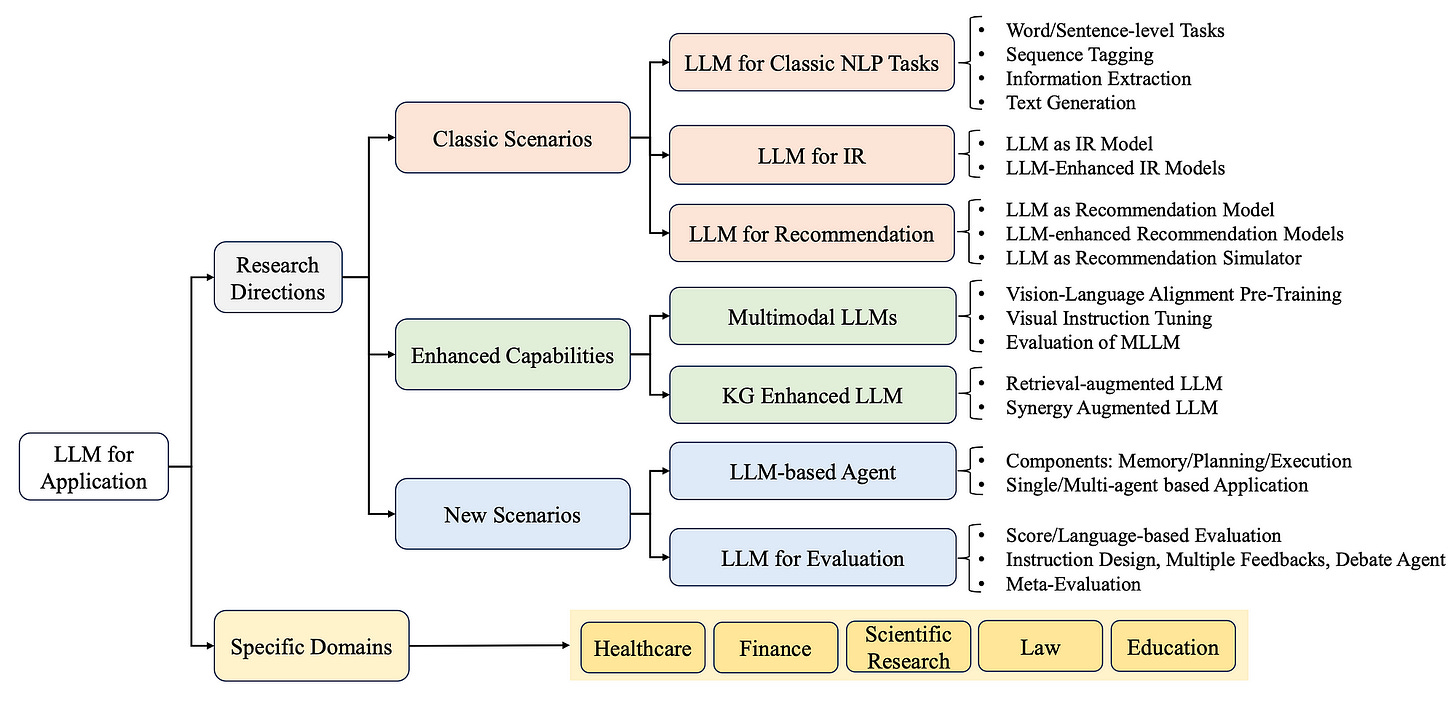

Below, the applications of LLMs in representative research directions and downstream domains.

Finally

In summary, the evolution of large language models marks a significant progression in natural language processing. And from early rule-based dialog management to the emergence of powerful neural networks like GPT-3. Combine this with the power of Natural Language Generation (NLG).

The narrative of large language models is one of continuous refinement, innovation, and the integration into existing technological landscapes.

⭐️ Follow me on LinkedIn for updates on Large Language Models ⭐️

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.