A New Prompt Engineering Technique Has Been Introduced Called Step-Back Prompting

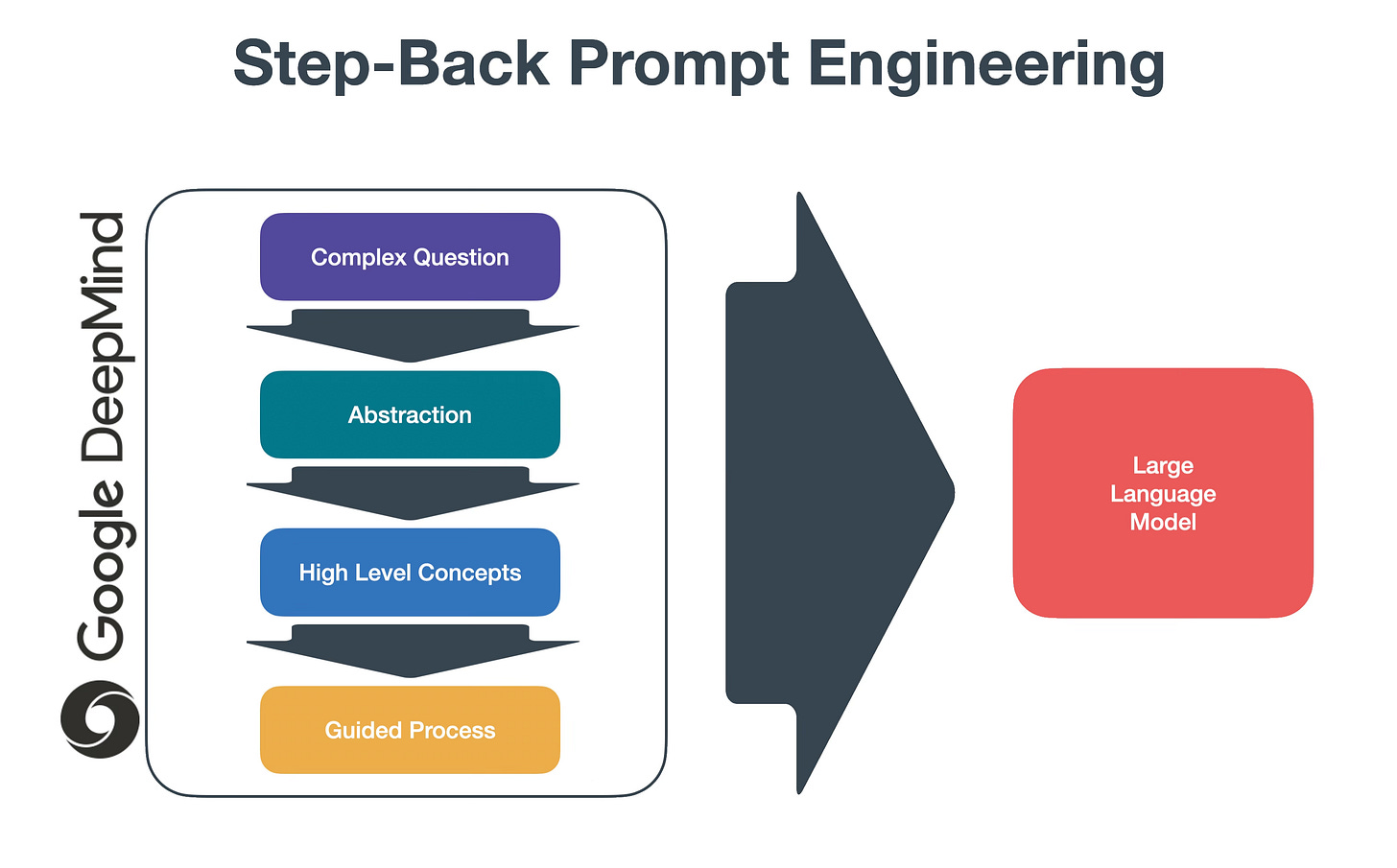

Step-Back Prompting is a prompting technique that enables LLMs to perform abstraction: deriving high-level concepts and first principles from which accurate answers can then be reached.

Step-Back Prompting (STP) interacts with a single LLM only, in an iterative process.

As you will see later in this article, STP can also be used in conjunction with RAG, where it corrects a share of the errors RAG makes on its own.

As seen in the image below, STP is a more technical prompt engineering approach: the original question is first distilled into a step-back question, and the answer to that step-back question is then used to produce the final answer.

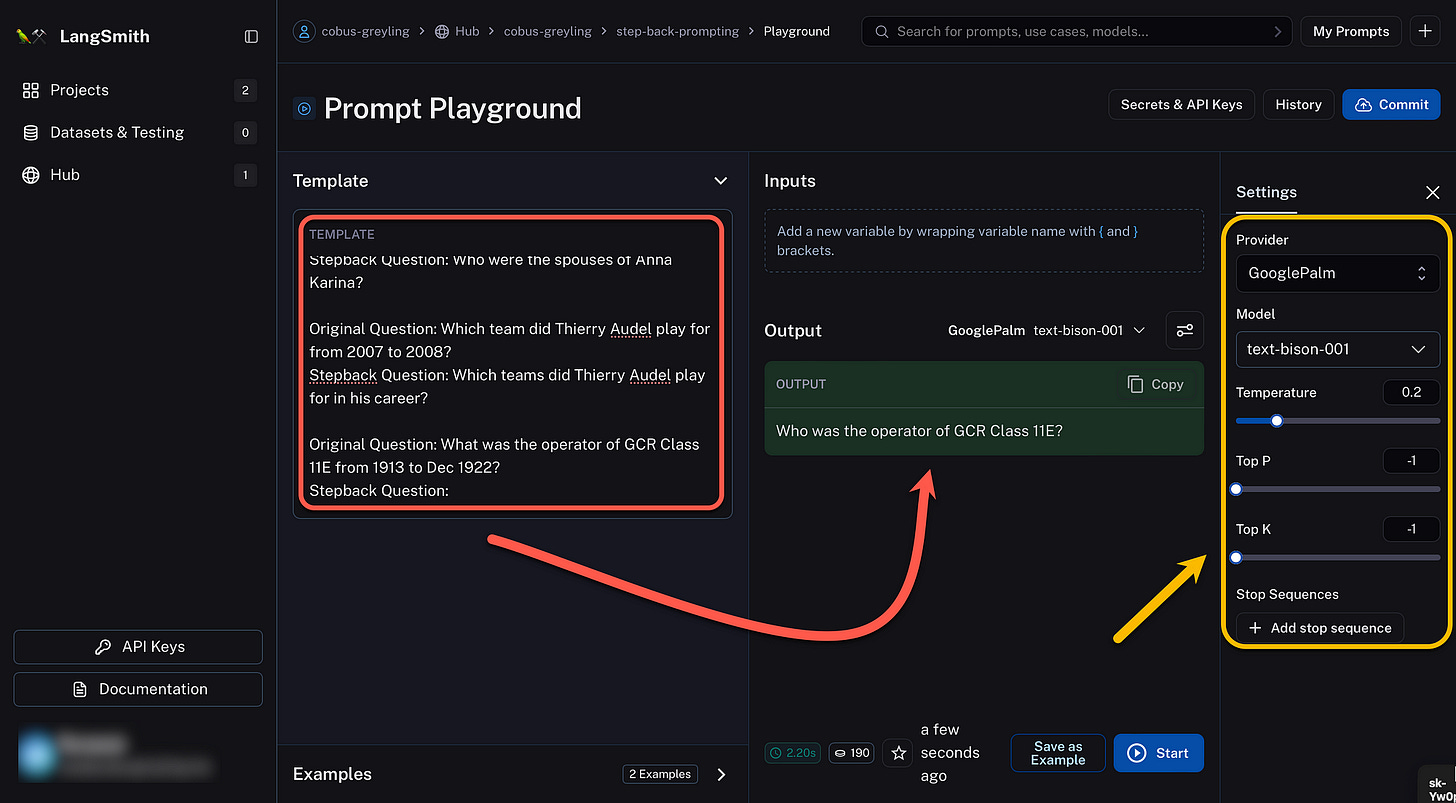
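The two stages (abstraction, then reasoning) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `LLM` stands for any function that takes a prompt string and returns a completion, and the prompt wording and the names `step_back_prompting` and `fake_llm` are hypothetical, not code from the paper or from LangSmith.

```python
from typing import Callable

# Hypothetical: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


def step_back_prompting(question: str, llm: LLM) -> str:
    """Two-stage Step-Back Prompting sketch using a single LLM."""
    # Stage 1 (abstraction): ask the LLM for a more generic step-back question.
    step_back_question = llm(
        "Paraphrase the question into a more generic step-back question, "
        f"which is easier to answer.\nOriginal Question: {question}\n"
        "Stepback Question:"
    ).strip()

    # Answer the step-back question to surface high-level concepts/principles.
    step_back_answer = llm(step_back_question).strip()

    # Stage 2 (reasoning): ground the final answer in the step-back answer.
    final_prompt = (
        f"Original Question: {question}\n"
        f"Stepback Question: {step_back_question}\n"
        f"Principles: {step_back_answer}\n"
        "Final Answer:"
    )
    return llm(final_prompt).strip()


if __name__ == "__main__":
    # Hypothetical stand-in LLM returning canned text, so the flow runs offline.
    def fake_llm(prompt: str) -> str:
        if prompt.endswith("Stepback Question:"):
            return "Who were the spouses of Anna Karina?"
        if prompt.endswith("Final Answer:"):
            return "(final answer grounded in the principles)"
        return "(list of spouses)"

    print(step_back_prompting(
        "Who was the spouse of Anna Karina from 1968 to 1974?", fake_llm))
```

Note that the same `llm` callable is used for all three calls, which mirrors the single-LLM, iterative nature of STP; swapping `fake_llm` for a real model client is left to whichever framework you use.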

In the example below, which I executed using LangSmith, a prompt instructs the LLM on how to generate a step-back question:

You are an expert at world knowledge.

Your task is to step back and paraphrase a question to a more generic step-back question, which is easier to answer.

Here are a few examples:

Original Question: Which position did Knox Cunningham hold from May 1955 to Apr 1956?

Stepback Question: Which positions has Knox Cunningham held in his career?

Original Question: Who was the spouse of Anna Karina from 1968 to 1974?

Stepback Question: Who were the spouses of Anna Karina?

Original Question: Which team did Thierry Audel play for from 2007 to 2008?

Stepback Question: Which teams did Thierry Audel play for in his career?

Here the step-back question is generated via LangSmith, making use of GooglePalm text-bison-001.
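The few-shot prompt above can also be assembled programmatically, which makes it easy to swap in new examples. The following is a minimal sketch using plain string formatting; the names `EXAMPLES`, `INSTRUCTIONS` and `build_step_back_prompt` are hypothetical and not part of LangSmith, and in practice a framework's own prompt-template classes would usually fill this role.

```python
from typing import Sequence, Tuple

# (original question, step-back question) pairs taken from the prompt above.
EXAMPLES = [
    ("Which position did Knox Cunningham hold from May 1955 to Apr 1956?",
     "Which positions has Knox Cunningham held in his career?"),
    ("Who was the spouse of Anna Karina from 1968 to 1974?",
     "Who were the spouses of Anna Karina?"),
    ("Which team did Thierry Audel play for from 2007 to 2008?",
     "Which teams did Thierry Audel play for in his career?"),
]

INSTRUCTIONS = (
    "You are an expert at world knowledge. "
    "Your task is to step back and paraphrase a question to a more generic "
    "step-back question, which is easier to answer. "
    "Here are a few examples:"
)


def build_step_back_prompt(
    question: str, examples: Sequence[Tuple[str, str]] = EXAMPLES
) -> str:
    """Return a few-shot prompt that ends where the model should continue."""
    lines = [INSTRUCTIONS, ""]
    for original, step_back in examples:
        lines.append(f"Original Question: {original}")
        lines.append(f"Stepback Question: {step_back}")
        lines.append("")
    # The new question goes last; the model completes the final line.
    lines.append(f"Original Question: {question}")
    lines.append("Stepback Question:")
    return "\n".join(lines)


print(build_step_back_prompt("Who was the spouse of Anna Karina in 1970?"))
```

Ending the prompt on an open "Stepback Question:" line lets the model's completion be used directly as the step-back question in the next call.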

I get the sense that this technique is too complex for a static prompt or prompt-template approach, and would work better in an autonomous agent implementation.

As we have seen with most published prompting techniques, Large Language Models (LLMs) need guidance when a query demands intricate, multi-step reasoning, and decomposition is a key component of solving complex requests.

A process of supervision with step-by-step verification is a promising remedy for improving the correctness of intermediate reasoning steps.

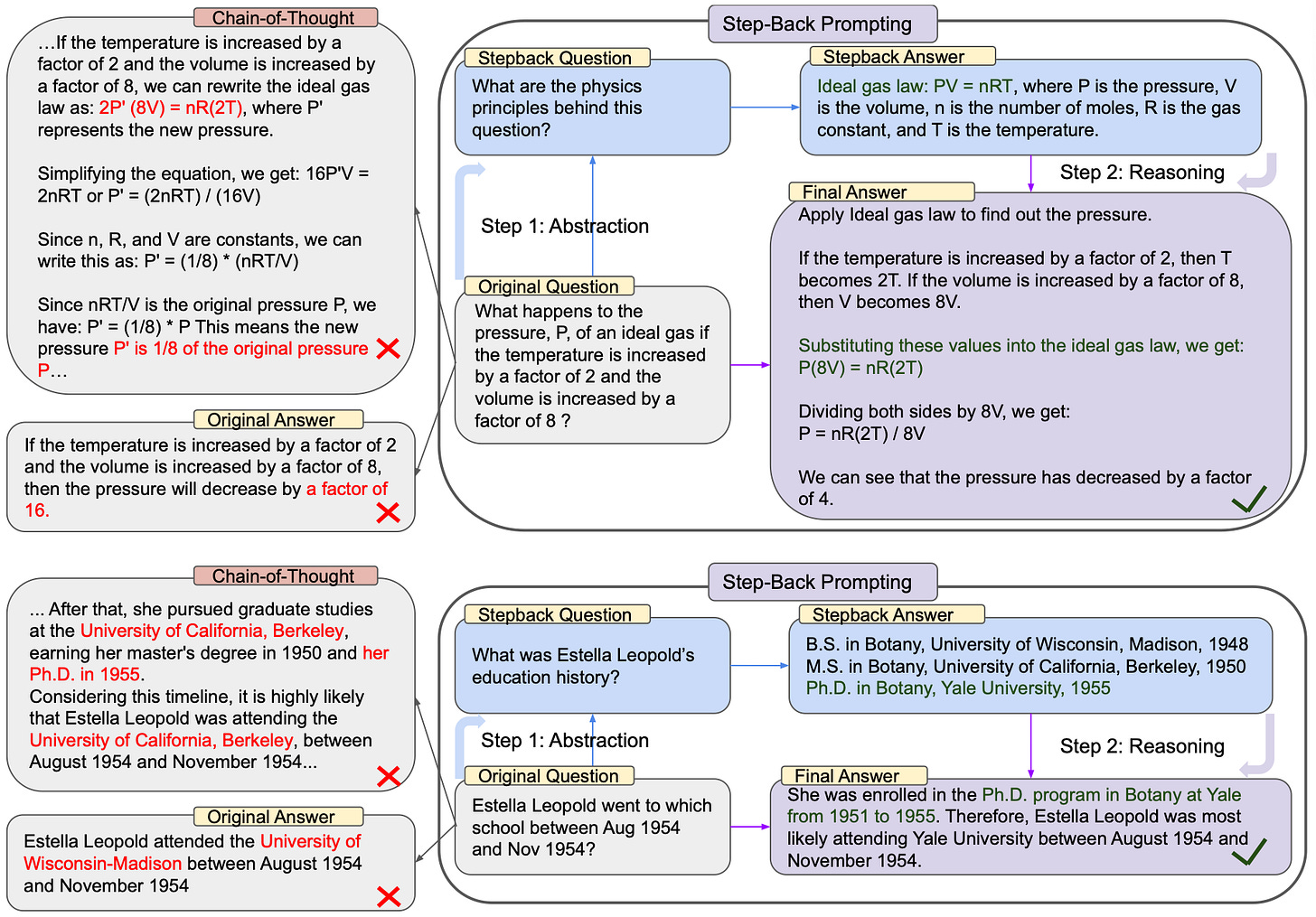

The best-known prompting technique when it comes to decomposition is chain-of-thought (CoT) reasoning. In this study, Step-Back Prompting is compared with CoT prompting.

The text below shows a complete example of STP: the original question, the step-back question, the principles, and the prompt from which the LLM generates the final answer.

Original Question: "Potassium-40 is a minor isotope found in naturally occurring potassium. It is radioactive and can be detected on simple radiation counters.

How many protons, neutrons, and electrons does potassium-40 have when it is part of K2SO4?

Choose an option from the list below:

0) 21 neutrons, 19 protons, 18 electrons

1) 20 neutrons, 19 protons, 19 electrons

2) 21 neutrons, 19 protons, 19 electrons

3) 19 neutrons, 19 protons, 19 electrons"

Stepback Question: "What are the chemistry principles behind this question?"

Principles:

"Atomic number: The atomic number of an element is the number of protons in the nucleus of an atom of that element."

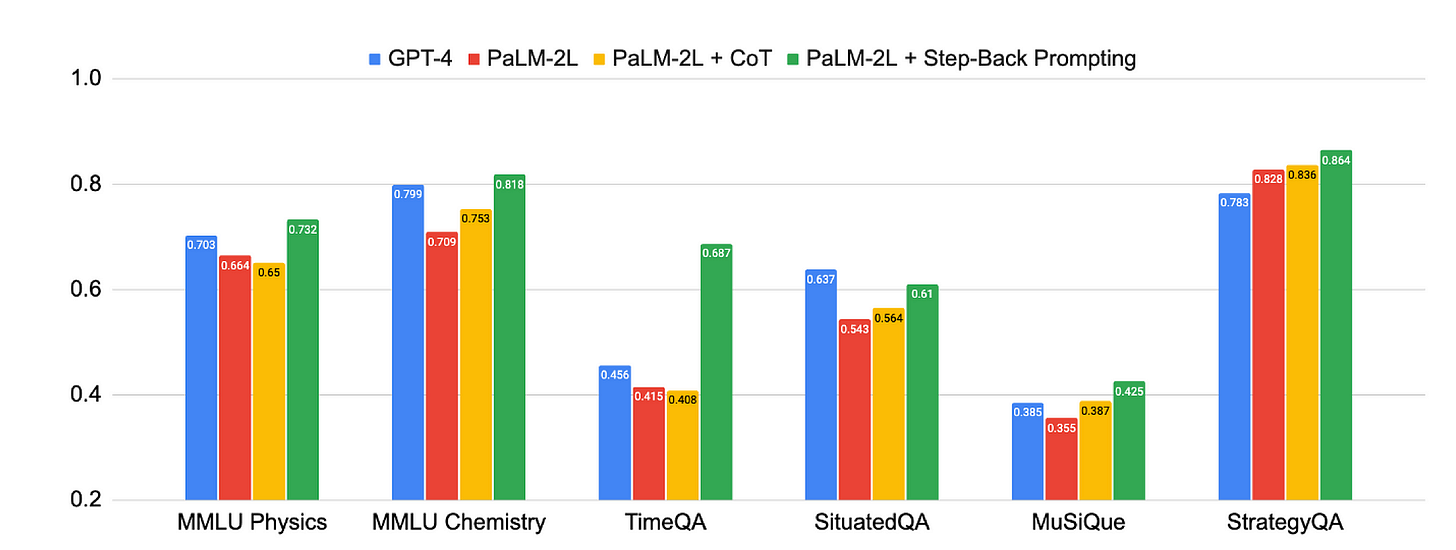

Final Answer:This chart shows the strong performance of Step-Back Prompting which follows an abstraction and reasoning scheme. Evidently this approach leads to significant improvements in a wide range of more complex tasks.
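Returning to the potassium-40 example: once the step-back stage has surfaced the governing principle, the question reduces to simple arithmetic. The hand check below uses the atomic-number principle quoted in the example plus two standard chemistry facts the excerpt does not list (mass number = protons + neutrons, and potassium is present as the K+ ion in K2SO4). It is an illustrative check, not output produced by the LLM.

```python
# Standard facts used below (the last two are not stated in the excerpt):
ATOMIC_NUMBER_K = 19  # potassium's atomic number (the quoted principle)
MASS_NUMBER = 40      # the "40" in potassium-40
ION_CHARGE = +1       # potassium is present as the K+ ion in K2SO4

protons = ATOMIC_NUMBER_K           # protons = atomic number
neutrons = MASS_NUMBER - protons    # neutrons = mass number - protons
electrons = protons - ION_CHARGE    # a +1 cation has lost one electron

# Answer options from the question, as (neutrons, protons, electrons).
options = {
    0: (21, 19, 18),
    1: (20, 19, 19),
    2: (21, 19, 19),
    3: (19, 19, 19),
}

answer = next(k for k, v in options.items()
              if v == (neutrons, protons, electrons))
print(f"Option {answer}: {neutrons} neutrons, {protons} protons, "
      f"{electrons} electrons")
# prints "Option 0: 21 neutrons, 19 protons, 18 electrons"
```

The electron count is the step that trips up a direct answer: option 2 matches the neutral atom, while the question asks about potassium as part of K2SO4.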

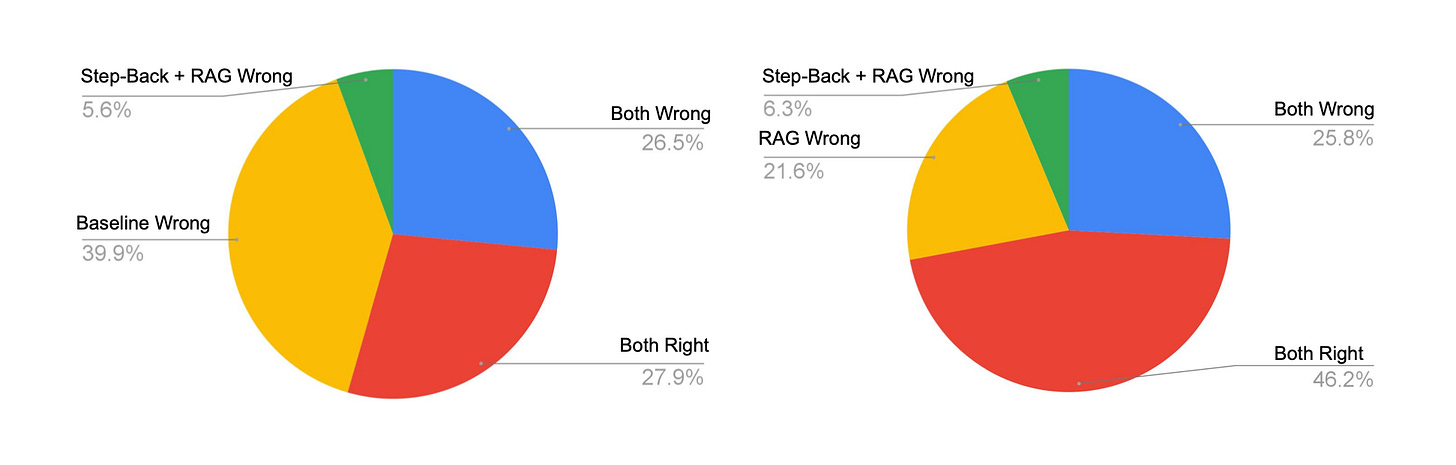

The chart below analyses the predictions of Step-Back Prompting combined with RAG on the TimeQA dataset.

On the left: Step-Back + RAG vs. baseline predictions.

On the right: Step-Back + RAG vs. RAG predictions.

Step-Back Prompting fixed 39.9% of the predictions where the baseline prediction was wrong, while introducing 5.6% new errors.

Step-Back Prompting + RAG fixes 21.6% of the errors coming from RAG, while introducing 6.3% new errors.
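Figures like these come from a paired error analysis: each test question is bucketed by whether the baseline and the Step-Back variant each answered it correctly. The sketch below shows one way to tally such buckets. The bucket names, the function name `paired_error_analysis`, and the choice of denominator (all evaluated questions) are assumptions, since the article does not state exactly how the percentages were normalised, and the toy data is made up purely for illustration.

```python
from collections import Counter
from typing import Dict, Sequence


def paired_error_analysis(baseline_correct: Sequence[bool],
                          variant_correct: Sequence[bool]) -> Dict[str, float]:
    """Bucket paired predictions; return each bucket as a % of all questions.

    Assumption: percentages are taken over all evaluated questions.
    """
    if len(baseline_correct) != len(variant_correct):
        raise ValueError("prediction lists must be the same length")
    total = len(baseline_correct)
    if total == 0:
        raise ValueError("need at least one prediction")
    buckets = Counter()
    for base_ok, var_ok in zip(baseline_correct, variant_correct):
        if base_ok and var_ok:
            buckets["both right"] += 1
        elif var_ok:       # baseline wrong, variant right: a fix
            buckets["fixed"] += 1
        elif base_ok:      # baseline right, variant wrong: a new error
            buckets["introduced"] += 1
        else:
            buckets["both wrong"] += 1
    return {name: 100.0 * buckets[name] / total
            for name in ("both right", "fixed", "introduced", "both wrong")}


# Hypothetical toy data for illustration only (not TimeQA results).
baseline = [True, False, False, True, False, True, False, False, True, True]
variant = [True, True, True, False, False, True, True, False, True, True]
print(paired_error_analysis(baseline, variant))
```

On this toy data, the variant fixes three questions the baseline got wrong and breaks one it got right; reporting both numbers, as the chart does, shows the net gain rather than just the headline improvement.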

The purpose of abstraction is not to be vague, but to create a new semantic level in which one can be absolutely precise. — Edsger W. Dijkstra

This study again illustrates the versatility of Large Language Models, and how new ways of interacting with them can be invented to leverage them even further.

This technique also shows the limits of static prompting, and makes clear that as complexity grows, more augmented approaches such as prompt chaining and autonomous agents need to be employed.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.