Adding A Moderation Layer To Your OpenAI Implementations

If you don’t, you are jeopardising any production implementations you might have.

The moderation endpoint is free to use for monitoring inputs and outputs of OpenAI API calls; this excludes third-party traffic.

If OpenAI find that a product or usage does not adhere to their policies:

OpenAI may ask API users to make necessary changes.

Repeated or serious violations can lead to further action from OpenAI and even termination of your account.

This is crucial for any commercial or product-related implementation: failure to moderate usage can lead to suspension of OpenAI services, which in turn impedes the product or service being delivered.

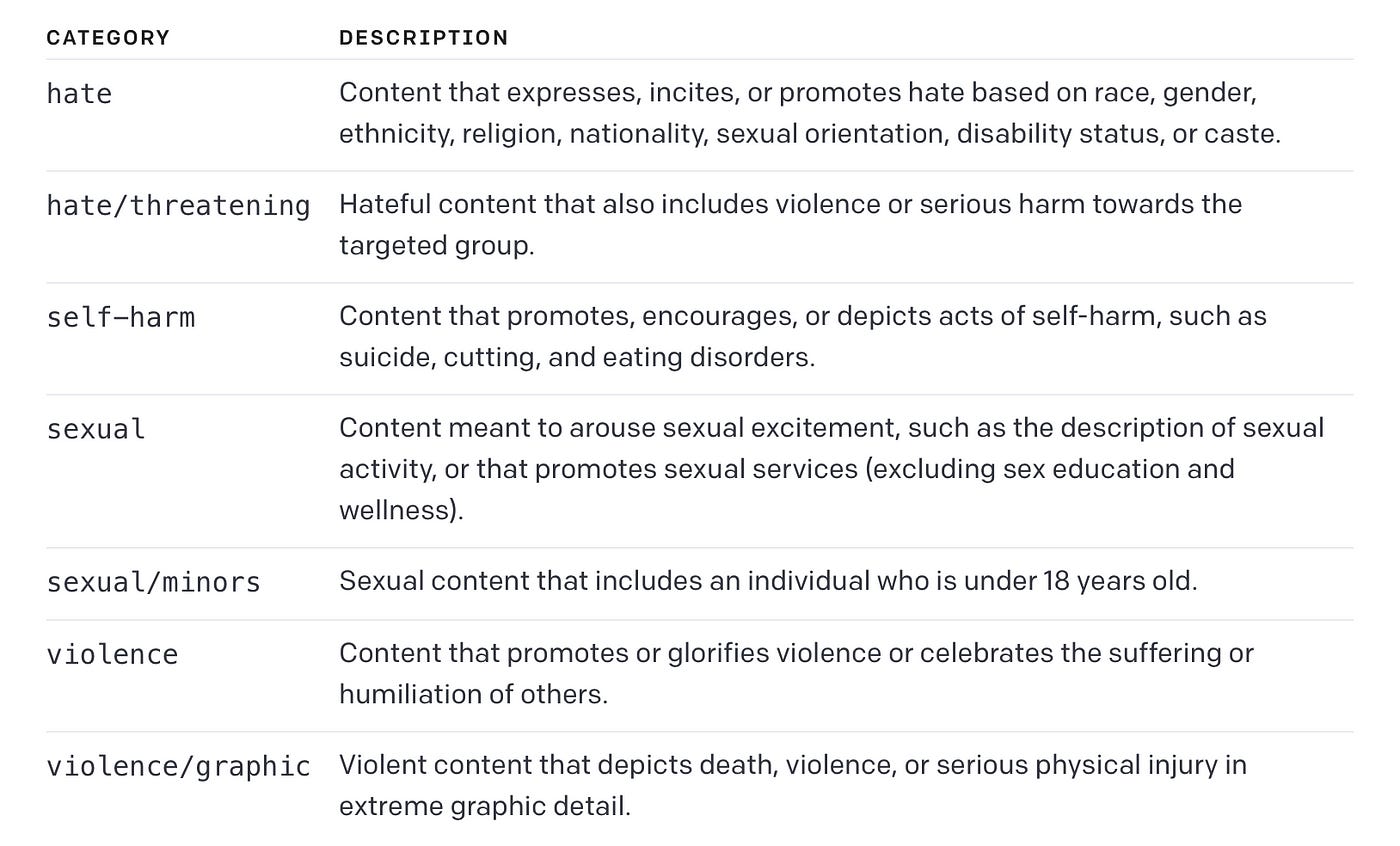

OpenAI has made a moderation endpoint available free of charge so that users can check OpenAI API input and output for hate, threatening hate, self-harm, sexual content (including sexual content involving minors), violence and graphic violence.
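A moderation layer of the kind this article argues for can be sketched as a wrapper that checks the user's input before it reaches the model, and checks the model's output before it reaches the user. This is a minimal sketch under stated assumptions: `moderate` and `complete` are hypothetical callables you would wire to the moderation endpoint and to your own completion call, `moderate` is assumed to return a dict shaped like one entry of the endpoint's `results` list, and the refusal messages are placeholders.

```python
from typing import Any, Callable, Dict

# Hypothetical signatures: wire these to the moderation endpoint and your completion call.
ModerateFn = Callable[[str], Dict[str, Any]]
CompleteFn = Callable[[str], str]

BLOCKED_INPUT_MESSAGE = "Sorry, I can't help with that request."  # placeholder text
BLOCKED_OUTPUT_MESSAGE = "Sorry, I can't share that response."  # placeholder text


def moderated_completion(prompt: str, complete: CompleteFn, moderate: ModerateFn) -> str:
    """Moderate the input, call the model only if it passes, then moderate the output."""
    # 1. Check the user's input before spending a completion call on it.
    if moderate(prompt)["flagged"]:
        return BLOCKED_INPUT_MESSAGE

    # 2. The input passed, so generate the model's reply.
    reply = complete(prompt)

    # 3. Check the model's output before returning it to the user.
    if moderate(reply)["flagged"]:
        return BLOCKED_OUTPUT_MESSAGE
    return reply


# Usage with stand-in functions (no network calls):
fake_moderate = lambda text: {"flagged": "harm" in text}
echo = lambda text: "You said: " + text
print(moderated_completion("hello", echo, fake_moderate))  # You said: hello
```

Checking both directions costs two moderation calls per turn, which is the trade-off the closing section of this article raises.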

The seven categories monitored are hate, hate/threatening, self-harm, sexual, sexual/minors, violence and violence/graphic.

OpenAI states that the moderation endpoint’s underlying model will be updated on an ongoing basis.

Custom user-defined policies that rely on category_scores may therefore need recalibration over time.
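To illustrate what a custom policy built on category_scores might look like, the sketch below flags any category whose score meets a per-category threshold. The threshold values and the function name are hypothetical, and thresholds like these are exactly what would need recalibrating when the underlying model changes. The sample scores are the ones returned for the self-harm test later in this article.

```python
from typing import Dict, List, Optional

# Hypothetical thresholds: tune these against your own traffic, and re-tune
# them whenever OpenAI updates the moderation model.
DEFAULT_THRESHOLD = 0.5
CUSTOM_THRESHOLDS: Dict[str, float] = {
    "violence": 0.01,  # stricter than the default for a hypothetical product
}


def custom_flags(
    category_scores: Dict[str, float],
    thresholds: Optional[Dict[str, float]] = None,
    default: float = DEFAULT_THRESHOLD,
) -> List[str]:
    """Return the categories whose score meets or exceeds its threshold."""
    if thresholds is None:
        thresholds = CUSTOM_THRESHOLDS
    return [
        category
        for category, score in category_scores.items()
        if score >= thresholds.get(category, default)
    ]


# category_scores from the self-harm test in this article
scores = {
    "hate": 5.714087455999106e-05,
    "hate/threatening": 2.554639308982587e-07,
    "self-harm": 0.9999761581420898,
    "sexual": 2.3994387447601184e-05,
    "sexual/minors": 1.6004908331979095e-07,
    "violence": 0.027929997071623802,
    "violence/graphic": 4.723879101220518e-06,
}
print(custom_flags(scores))  # ['self-harm', 'violence']
```

Because OpenAI states the scores are not probabilities, a threshold here is simply a cut-off on the model's confidence scale, not a percentage risk.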

A current impediment is that support for non-English languages is limited.

We want everyone to use our tools safely and responsibly. That’s why we’ve created usage policies that apply to all users of OpenAI’s models, tools, and services. By following them, you’ll ensure that our technology is used for good.

~ OpenAI

OpenAI is continually working to improve the accuracy of the classifier, and states that it is especially focused on improving the classifications for hate, self-harm and violence/graphic.

Below is an example of calling the moderation endpoint from Python, with the input text "Sample text goes here".

```
pip install "openai<1.0"
```

```python
import os

import openai

# Legacy (pre-1.0) openai SDK interface; openai.Moderation was removed in 1.0.
# Read the key from the environment rather than hard-coding it.
openai.api_key = os.getenv("OPENAI_API_KEY")

response = openai.Moderation.create(
    input="Sample text goes here"
)

output = response["results"][0]

print(output)
```

The output is shown below, with the seven categories and the score for each. The response has three sections: the categories, the category scores and whether the text was flagged at all.

```json
{
  "categories": {
    "hate": false,
    "hate/threatening": false,
    "self-harm": false,
    "sexual": false,
    "sexual/minors": false,
    "violence": false,
    "violence/graphic": false
  },
  "category_scores": {
    "hate": 4.921302206639666e-06,
    "hate/threatening": 1.0990176546599173e-09,
    "self-harm": 8.864341261016762e-09,
    "sexual": 2.6443567548994906e-05,
    "sexual/minors": 2.4819328814373876e-07,
    "violence": 2.1955165721010417e-05,
    "violence/graphic": 5.248724392004078e-06
  },
  "flagged": false
}
```

A second test uses text expressing self-harm, "I hate myself and want to do harm to myself":

```python
response = openai.Moderation.create(
    input="I hate myself and want to do harm to myself"
)

output = response["results"][0]

print(output)
```

You can see that the self-harm flag is set to true, with a self-harm category score of almost 1 (0.99998). The other category scores stay low, the highest being violence at roughly 0.028. Overall, the utterance is flagged as true.

```json
{
  "categories": {
    "hate": false,
    "hate/threatening": false,
    "self-harm": true,
    "sexual": false,
    "sexual/minors": false,
    "violence": false,
    "violence/graphic": false
  },
  "category_scores": {
    "hate": 5.714087455999106e-05,
    "hate/threatening": 2.554639308982587e-07,
    "self-harm": 0.9999761581420898,
    "sexual": 2.3994387447601184e-05,
    "sexual/minors": 1.6004908331979095e-07,
    "violence": 0.027929997071623802,
    "violence/graphic": 4.723879101220518e-06
  },
  "flagged": true
}
```

The three sections as described by OpenAI:

flagged: Set to `true` if the model classifies the content as violating OpenAI's usage policies, `false` otherwise.

categories: Contains a dictionary of per-category binary usage policies violation flags. For each category, the value is `true` if the model flags the corresponding category as violated, `false` otherwise.

category_scores: Contains a dictionary of per-category raw scores output by the model, denoting the model's confidence that the input violates the OpenAI's policy for the category. The value is between 0 and 1, where higher values denote higher confidence. The scores should not be interpreted as probabilities.
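The sketch below reads all three sections from a single result and condenses them into the fields a moderation layer typically acts on. It assumes the result is a plain dict shaped like the JSON above (the legacy openai.Moderation.create response behaves like a dict; newer SDK versions return typed objects, so convert first). The function name and the summary fields are hypothetical.

```python
from typing import Any, Dict


def summarise_moderation(result: Dict[str, Any]) -> Dict[str, Any]:
    """Condense one moderation result into its verdict, violated categories and top score."""
    # categories: per-category boolean violation flags
    violated = [name for name, hit in result["categories"].items() if hit]
    # category_scores: raw confidence scores between 0 and 1 (not probabilities)
    scores = result["category_scores"]
    top_category = max(scores, key=scores.get)
    return {
        "flagged": result["flagged"],  # overall verdict
        "violated": violated,
        "top_category": top_category,
        "top_score": scores[top_category],
    }


# The self-harm result from the second test in this article
result = {
    "categories": {
        "hate": False,
        "hate/threatening": False,
        "self-harm": True,
        "sexual": False,
        "sexual/minors": False,
        "violence": False,
        "violence/graphic": False,
    },
    "category_scores": {
        "hate": 5.714087455999106e-05,
        "hate/threatening": 2.554639308982587e-07,
        "self-harm": 0.9999761581420898,
        "sexual": 2.3994387447601184e-05,
        "sexual/minors": 1.6004908331979095e-07,
        "violence": 0.027929997071623802,
        "violence/graphic": 4.723879101220518e-06,
    },
    "flagged": True,
}
print(summarise_moderation(result))
```

Keeping the top-scoring category alongside the binary flags makes it easier to log near misses that were not flagged but may still warrant review.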

In Closing

Any production implementation needs to exercise due diligence in ensuring that input and output are moderated carefully and persistently. That said, it might not be practical to moderate both the API input and output of every single dialogue turn.

I am curious to know how companies with production OpenAI implementations are addressing moderation.

Do they follow a spot-check approach?

Or are conversation summaries subjected to moderation with an eye on efficiency?
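One way to make the spot-check option concrete is to sample a fraction of dialogue turns and send the sampled turns to the moderation endpoint in a single request, since its input parameter also accepts a list of strings and returns one result per string. In this sketch the sample rate is a hypothetical setting and moderate_batch is a hypothetical callable standing in for that batched call; a summary-based approach would instead pass one summary string per conversation.

```python
import random
from typing import Any, Callable, Dict, List, Optional

# Hypothetical callable: takes a list of texts and returns one result dict per text
# (for example, a wrapper around the moderation endpoint with a list passed as input).
ModerateBatchFn = Callable[[List[str]], List[Dict[str, Any]]]


def spot_check(
    turns: List[str],
    moderate_batch: ModerateBatchFn,
    sample_rate: float = 0.2,  # hypothetical: moderate roughly 1 in 5 turns
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Moderate a random sample of turns in one call; return indices of flagged turns."""
    if rng is None:
        rng = random.Random()
    # Decide independently for each turn whether it is sampled.
    sampled = [i for i in range(len(turns)) if rng.random() < sample_rate]
    if not sampled:
        return []
    # One request covering all sampled turns instead of one request per turn.
    results = moderate_batch([turns[i] for i in sampled])
    return [i for i, res in zip(sampled, results) if res["flagged"]]


# Usage with a stand-in moderator and sample_rate=1.0 (check every turn):
fake_batch = lambda texts: [{"flagged": "harm" in t} for t in texts]
turns = ["hello", "I want to harm myself", "thanks"]
print(spot_check(turns, fake_batch, sample_rate=1.0))  # [1]
```

Sampling lowers cost and latency but will miss violations in unsampled turns, so the rate is a risk decision rather than a purely technical one.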


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language, ranging from LLMs, Chatbots, Voicebots and Development Frameworks to Data-Centric latent spaces and more.