Agentic RAG: Context-Augmented OpenAI Agents

LlamaIndex has coined the phrase Agentic RAG…Agentic RAG can best be described as adding autonomous agent features to a RAG implementation.

Introduction

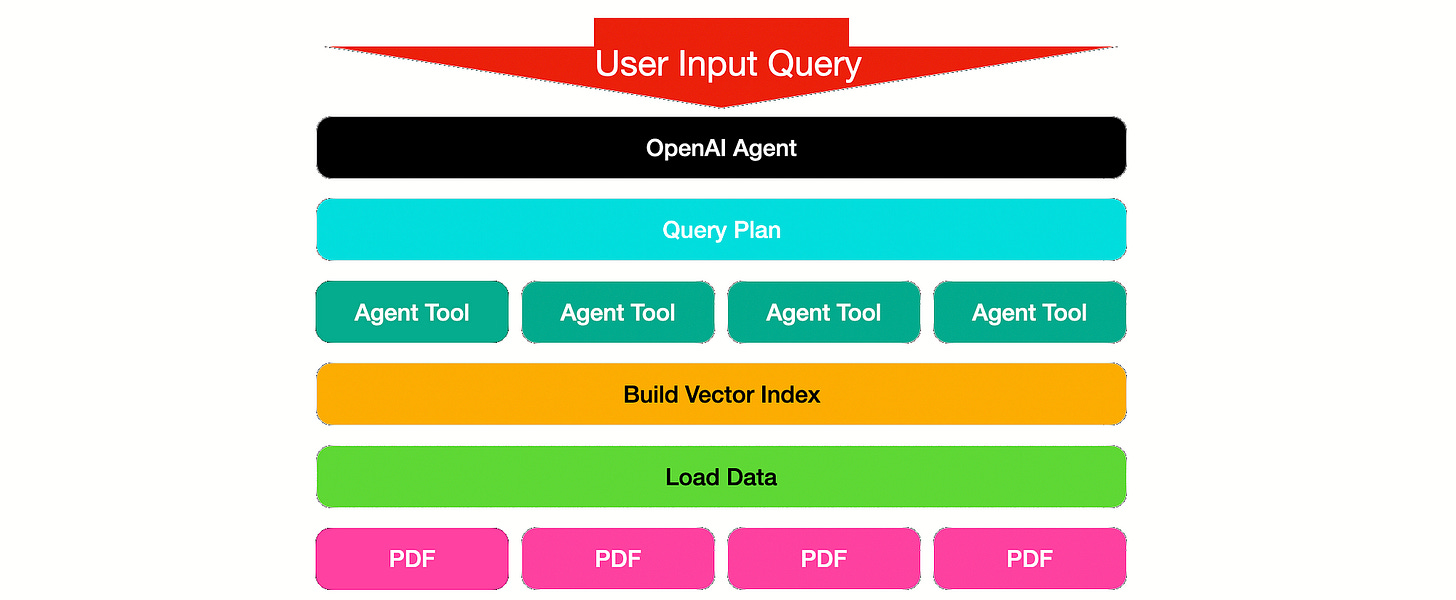

As seen in the image below, multiple documents are loaded and each associated with an agent tool, or which can be referred to as a sub-agent.

Each sub-agent has a description which helps the meta-agent to decide which tool to employ when.

More On OpenAI Function Calling

To be clear, the chat completion API does not call any function but the model does generate the JSON which can be used to call a function from your code.

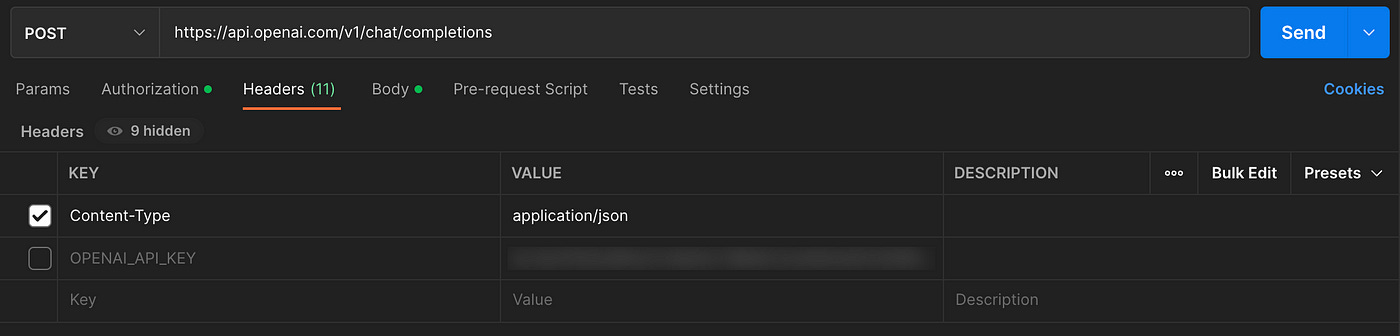

Consider the following working API call:

An API call is submitted to https://api.openai.com/v1/chat/completions as seen below, with the OPEN_API_KEY defined in the header.

Below is the JSON document sent to the model. The aim of this call is to generate a JSON file which can be used to send to an API which sends emails.

You can see the name is send_email. And three parameters are defined, to_address , subject and body , which is the email body. The chatbot user request is: Send Cobus from kore ai an email asking for the monthly report?

{

"model": "gpt-3.5-turbo-0613",

"messages": [

{"role": "user", "content": "Send Cobus from kore ai an email asking for the monthly report?"}

],

"functions": [

{

"name": "send_email",

"description": "Please send an email.",

"parameters": {

"type": "object",

"properties": {

"to_address": {

"type": "string",

"description": "To address for email"

},

"subject": {

"type": "string",

"description": "subject of the email"

},

"body": {

"type": "string",

"description": "Body of the email"

}

}

}

}

]

}Below is the JSON generated by the model, in this case the model gpt-3.5-turbo-0613 is used for the completion.

{

"id": "chatcmpl-7R3k9pN6lLXCmNMiLNoaotNAg86Qg",

"object": "chat.completion",

"created": 1686683601,

"model": "gpt-3.5-turbo-0613",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": null,

"function_call": {

"name": "send_email",

"arguments": "{\n \"to_address\": \"cobus@kore.ai\",\n \"subject\": \"Request for Monthly Report\",\n \"body\": \"Dear Cobus,\\n\\nI hope this email finds you well. I would like to kindly request the monthly report for the current month. Could you please provide me with the report by the end of the week?\\n\\nThank you in advance for your assistance!\\n\\nBest regards,\\n[Your Name]\"\n}"

}

},

"finish_reason": "function_call"

}

],

"usage": {

"prompt_tokens": 86,

"completion_tokens": 99,

"total_tokens": 185

}

}This is a big leap in the right direction, with the Large Language Model not only structuring output into natural conversational language but structuring it.

Back To Agentic RAG by LlamaIndex

This implementation from LlamaIndex combines OpenAI Agent functionality, with Function Calling and RAG. I also like the way LlamaIndex speaks about contextual-augmentation which is actually a more accurate term to use for leveraging in-context learning.

The LlamaIndex ContextRetrieverOpenAIAgent implementation creates an agent on top of OpenAI’s function API and store/index an arbitrary number of tools.

LlamaIndex’s indexing and retrieval modules help to remove the complexity of having too many functions to fit in the prompt.

Just to be clear, this does not leverage the OpenAI Assistant functionality in anyway. So when the code runs an assistant is not created.

You will need to add your OpenAI API key to run this agent.

%pip install llama-index-agent-openai-legacy

!pip install llama-index

import os

import openai

os.environ["OPENAI_API_KEY"] = "< Your OpenAI API Key Goes Here>"Apart from adding your OpenAI API key, the notebook provided from LlamaIndex runs perfectly.

Tools, Also Known As Sub-Agents

Notice how the sub-agents or agent tools are defined below, with a name, and a description which helps the meta-agent to decide which tool to use when.

query_engine_tools = [

QueryEngineTool(

query_engine=march_engine,

metadata=ToolMetadata(

name="uber_march_10q",

description=(

"Provides information about Uber 10Q filings for March 2022. "

"Use a detailed plain text question as input to the tool."

),

),

),

QueryEngineTool(

query_engine=june_engine,

metadata=ToolMetadata(

name="uber_june_10q",

description=(

"Provides information about Uber financials for June 2021. "

"Use a detailed plain text question as input to the tool."

),

),

),

QueryEngineTool(

query_engine=sept_engine,

metadata=ToolMetadata(

name="uber_sept_10q",

description=(

"Provides information about Uber financials for Sept 2021. "

"Use a detailed plain text question as input to the tool."

),

),

),

]Complex Queries

Within the Agent code, the following abbreviations are defined…You can think of these abbreviation as shorthand which can be used to make for more efficient queries.

"Abbreviation: X = Revenue",

"Abbreviation: YZ = Risk Factors",

"Abbreviation: Z = Costs",Consider below how a question can be posed to the agent while using the shorthand:

response = context_agent.chat("What is the YZ of March 2022?")But I’m sure you see what the challenge is here, the query only spans over one month and hence one document…what if we want to calculate the revenue minus the cost for over all three months/documents?

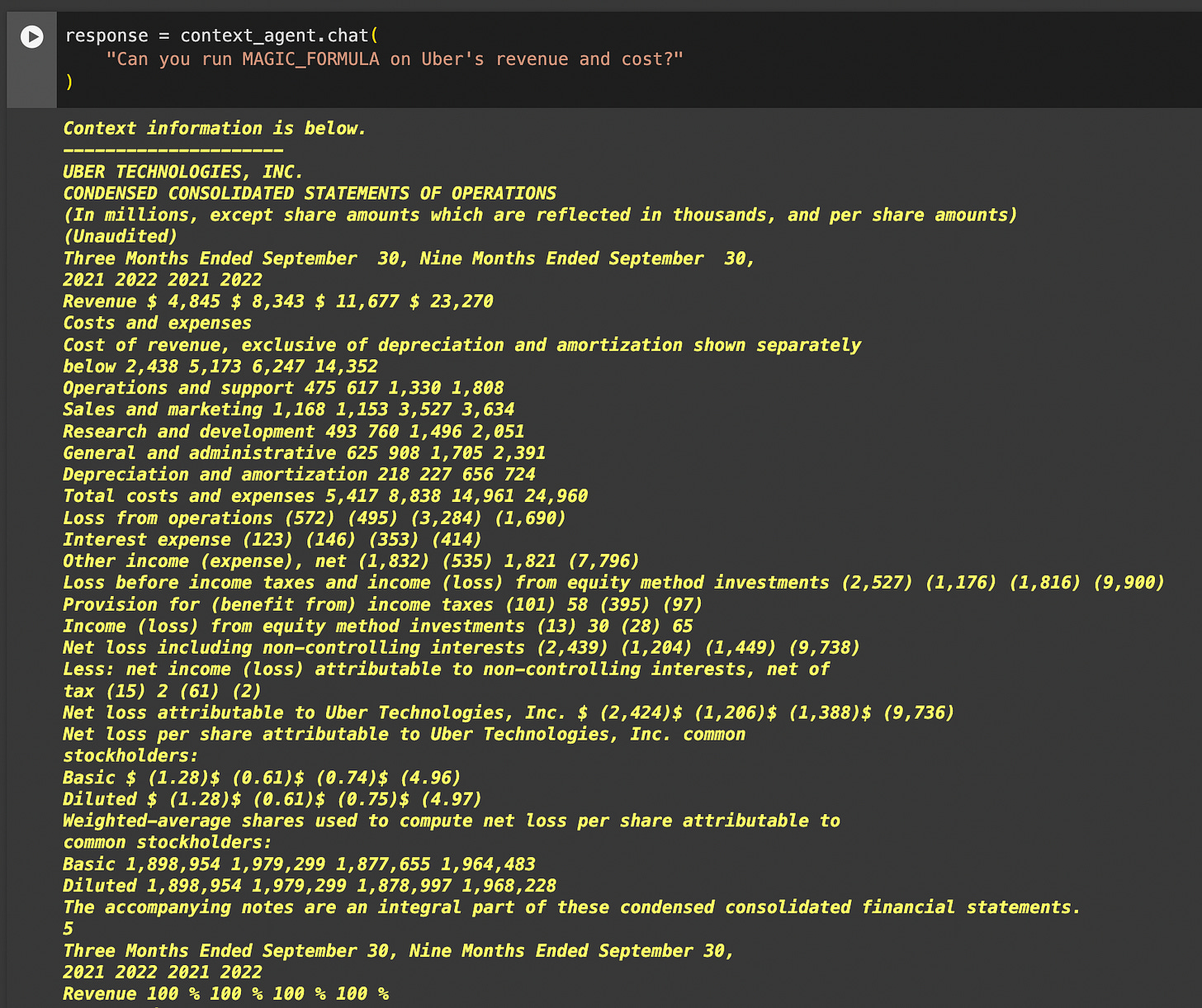

response = context_agent.chat(

"Can you run MAGIC_FORMULA on Uber's revenue and cost?"

)In the image below, you can see how the agent goes about calculating the total revenue minus the cost.

The contextual information contributes to the agent’s output being explainable and inspectable.

And below the final response from the agent…and even-though the response is explainable and inspectable, it would be an interesting exercise to humanly inspect the uploaded PDFs and see if the derived answers are aligned with the agent’s response.

Calling function:

magic_formula with args:

{"revenue":23400,"cost":24960}

Got output: -1560I took the agent’s output and asked ChatGPT to write a narrative around the agent’s output and the result is below…

In the realm of financial analysis, there’s a potent tool known as the “Magic Formula” designed to assess the performance of companies based on specific financial metrics. Considering the data provided for Uber’s revenue and costs, let’s execute the Magic Formula and decipher its implications.

Running the Magic Formula on Uber’s revenue of $23,400 million and costs of $24,960 million yields an intriguing result. The output indicates a negative value of $1,560 million. This negative figure suggests that Uber’s financial performance, as evaluated by the Magic Formula, falls below expectations.

However, it’s crucial to delve deeper into the underlying factors contributing to this outcome. Analyzing Uber’s revenue and cost structures in more detail could unveil insights into areas of strength and weakness within the company’s operations. Furthermore, exploring trends over time and comparing with industry benchmarks may provide valuable context for interpreting the Magic Formula’s assessment.

As we navigate the complexities of financial analysis, the Magic Formula serves as a guiding light, illuminating potential areas for further investigation and strategic decision-making. ~ ChatGPT

In Conclusion

I have written previously about the phenomenon of different approaches and technologies use to be disparate, and now we are seeing a convergence of technologies.

This article, the combination of autonomous agents, tools, RAG and OpenAI Function calling are all combined into one LLM implementation.

This will be combined with Multi-LLM orchestration and using specific LLMs in specific instances.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.