A Notebook, Function Calling, Agentic Workflows & RAG

I used a sophisticated AI research API to analyse emerging trends and identify which are rising and which are declining.

Surprisingly, interest in AI Agents is waning, while two areas are surging:

Advanced research and reasoning capabilities, and

Agentic Workflows.

Agentic Workflows bridge the gap between traditional RPA — which is often brittle, inflexible, and rigidly predetermined — and full-fledged AI Agents, where the degree of autonomy can feel overwhelming.

Agentic Workflows strike an ideal balance:

They provide a foundational template for applications that enables agency within defined boundaries, along with the adaptability and autonomy that traditional RPA sorely lacks.

Of course, all of this remains theoretical for me until I can implement it in the simplest form possible.

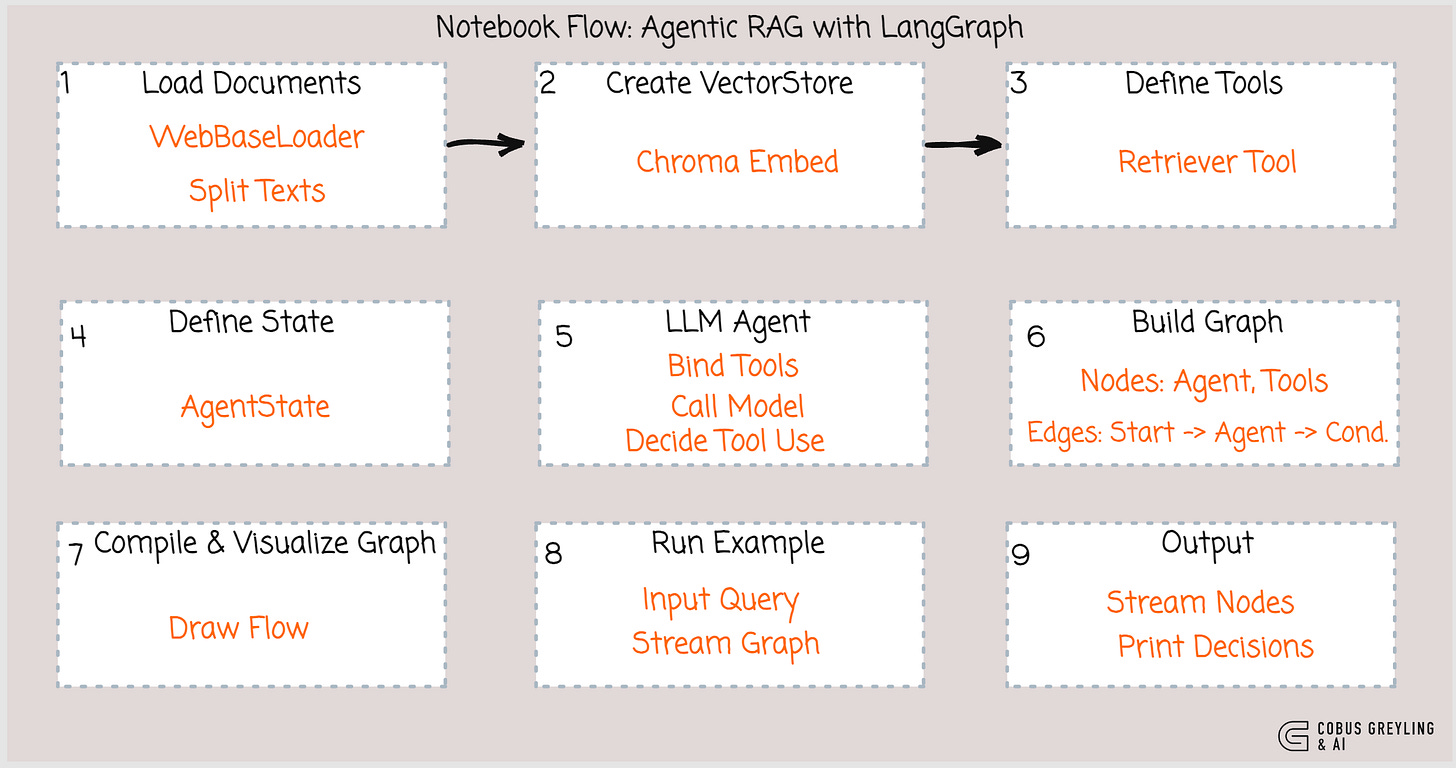

So I found this excellent LangChain notebook that illustrates, in straightforward terms, how the content of a few URLs is indexed in Chroma and how the RAG lookup is configured as a function call.

This setup allows the LLM to introduce a layer of autonomy into the agentic workflow by deciding whether or not to invoke the RAG function.

You can easily envision extending this process to include multiple tools and functions at the disposal of the Agentic Workflow.
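The notebook gives the model a single retriever tool, but the same pattern extends to several. Below is a minimal, dependency-free sketch of the execution side, assuming a simple dict-based message format: a registry maps each tool name the model might emit to a Python function, and an executor runs every requested call and returns a result tagged with the call's id (similar in spirit to what the notebook's ToolNode does). Both tools and the message layout are hypothetical stand-ins, not LangChain's API.

```python
from typing import Callable, Dict, List


# Hypothetical stand-in for the notebook's Chroma-backed retriever tool.
def retrieve_blog_posts(query: str) -> str:
    corpus = {
        "memory": "Short-term memory as in-context learning; long-term memory as an external vector store.",
        "planning": "Task decomposition and self-reflection.",
    }
    hits = [text for key, text in corpus.items() if key in query.lower()]
    return "\n".join(hits) or "No matching passages."


# Hypothetical second tool, showing how the registry grows to more functions.
def count_words(text: str) -> str:
    return str(len(text.split()))


# Registry: the tool name the model emits maps to the Python function to run.
TOOLS: Dict[str, Callable[..., str]] = {
    "retrieve_blog_posts": retrieve_blog_posts,
    "count_words": count_words,
}


def execute_tool_calls(tool_calls: List[dict]) -> List[dict]:
    """Run each requested tool and return one tool-result message per call."""
    results = []
    for call in tool_calls:
        func = TOOLS.get(call["name"])
        if func is None:
            content = f"Error: unknown tool {call['name']!r}"
        else:
            content = func(**call["args"])
        results.append({
            "role": "tool",
            "name": call["name"],
            "tool_call_id": call["id"],  # links the result back to the request
            "content": content,
        })
    return results


calls = [
    {"name": "retrieve_blog_posts", "args": {"query": "agent memory types"}, "id": "call_1"},
    {"name": "count_words", "args": {"text": "agentic workflows with RAG"}, "id": "call_2"},
]
for message in execute_tool_calls(calls):
    print(message["name"], "->", message["content"])
```

Adding a capability then means registering one more function and describing it to the model; the routing logic itself does not change.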

Personally, I believe function calling is underappreciated or not fully understood, but it is a truly effective way to build resilience while maintaining a controlled level of agency.

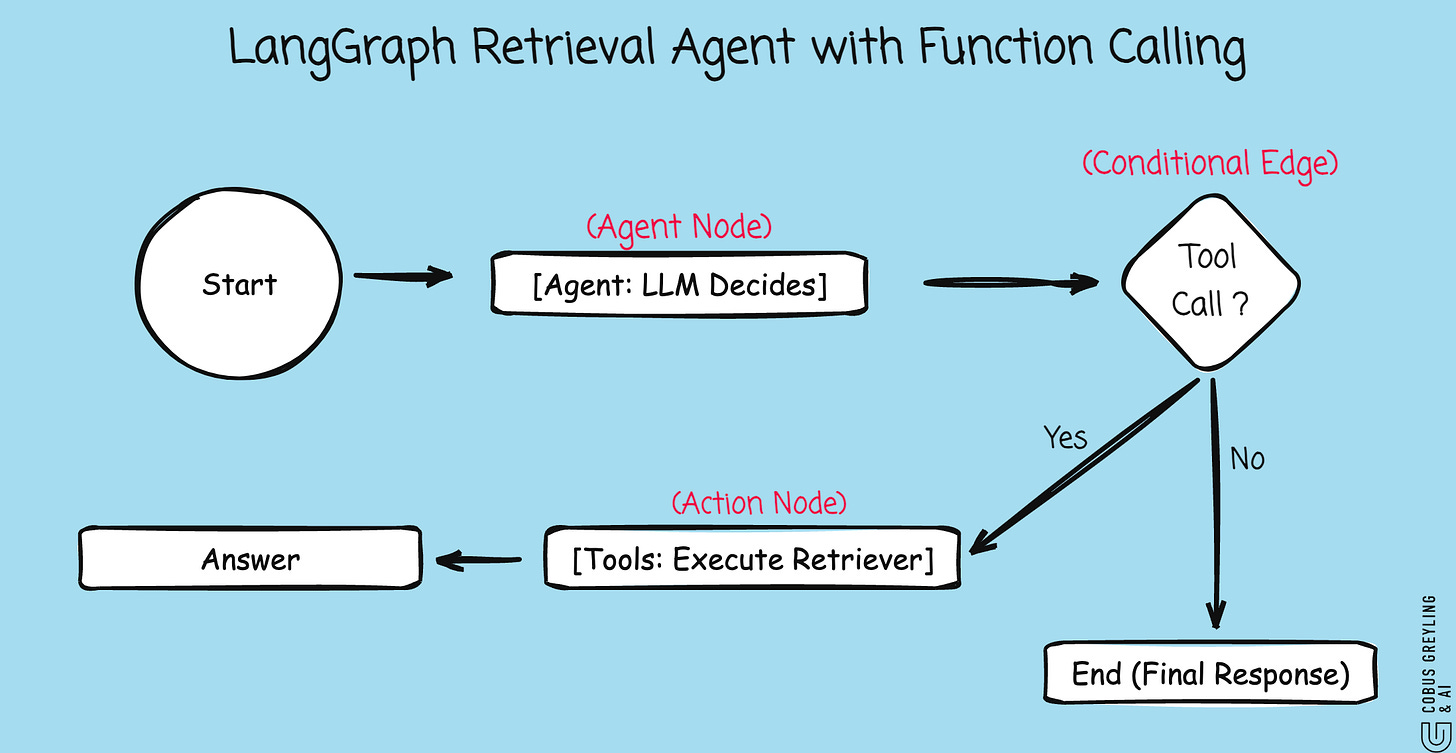

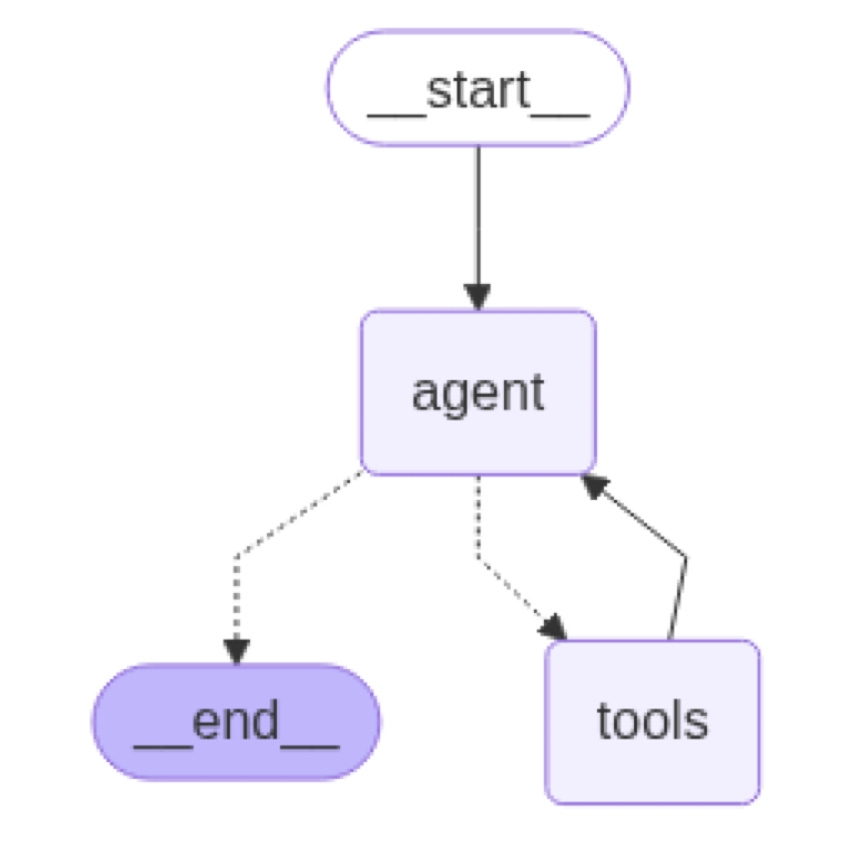

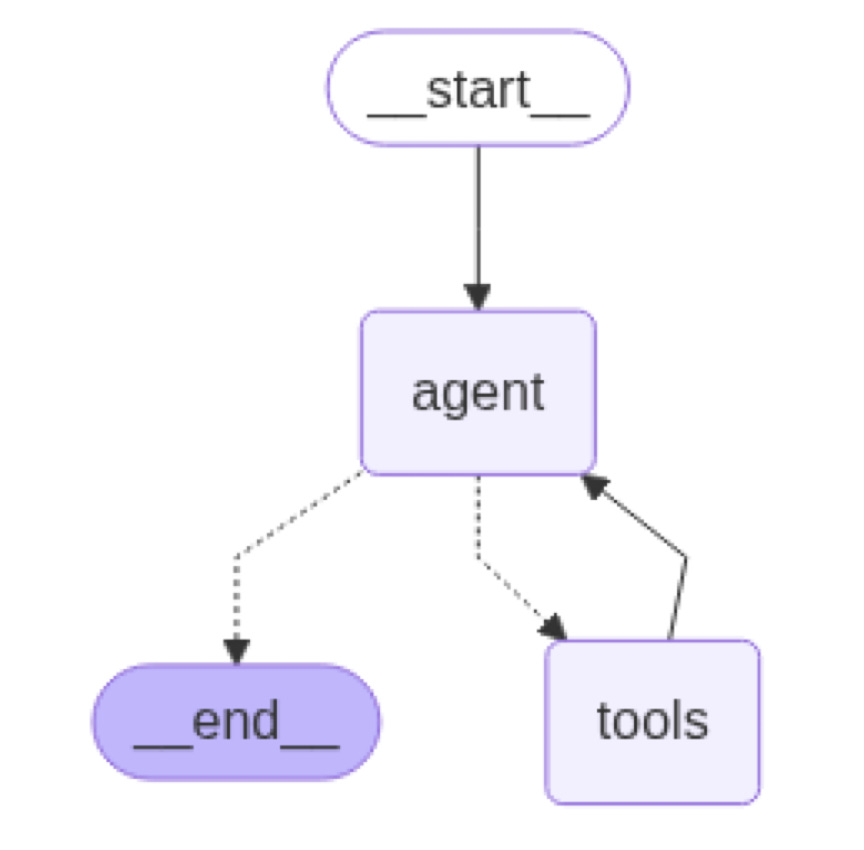

The LangGraph portion of the code is a clear example of an agentic workflow.

I’ll break this down step by step, including what makes it agentic and how the structured flow contributes to that classification.

What Is an Agentic Workflow?

An agentic workflow in AI refers to a dynamic, iterative process where one or more AI Agents (typically powered by LLMs) make autonomous decisions, such as reasoning, planning, and acting (calling tools or routing paths).

Unlike rigid, linear pipelines, these workflows allow for flexibility, looping, and conditional branching based on the agent’s outputs.

They often involve orchestration frameworks to manage state, persistence, and multi-step interactions, enabling more collaborative and refinable AI behaviours.

Agentic workflows offer a balanced level of autonomy — more adaptable than traditional RPA but less unconstrained than full AI agents, which can exhibit overwhelming independence without defined boundaries.

How LangGraph Fits into Agentic Workflows

LangGraph is specifically designed as a stateful orchestration framework for building and deploying such workflows, particularly those involving AI Agents.

It represents processes as graphs, where nodes (such as an LLM agent or a tool executor) and edges (including conditional routing) define the flow.

This graph-based structure supports features like persistence, streaming, and debugging, making it ideal for agentic applications.

LangGraph is commonly used to create multi-agent or single-agent setups where the LLM can act as a “router” to decide next steps dynamically.
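The node-and-edge idea can be illustrated without any framework. The sketch below assumes only the standard library: a hypothetical MiniGraph class (my own illustration, not LangGraph's API) runs nodes over a shared message list, follows plain edges, and lets a router function pick the next node after the agent. The agent function is a rule-based stand-in for the LLM's tool-calling decision, so both the agent, tools, agent loop and the direct-answer path can be seen end to end.

```python
from typing import Callable, Dict, List, Tuple

# Same sentinel strings LangGraph uses for its START and END constants.
START, END = "__start__", "__end__"


class MiniGraph:
    """Hypothetical, minimal imitation of a stateful graph; not the LangGraph API."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[dict], dict]] = {}
        self.edges: Dict[str, str] = {}
        self.routers: Dict[str, Callable[[dict], str]] = {}

    def add_node(self, name: str, fn: Callable[[dict], dict]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def add_conditional_edge(self, src: str, router: Callable[[dict], str]) -> None:
        self.routers[src] = router

    def run(self, state: dict, max_steps: int = 10) -> Tuple[dict, List[str]]:
        path: List[str] = []
        current = self.edges[START]
        for _ in range(max_steps):
            path.append(current)
            update = self.nodes[current](state)
            # Append the node's new messages to the shared state.
            state = {"messages": state["messages"] + update["messages"]}
            router = self.routers.get(current)
            current = router(state) if router else self.edges[current]
            if current == END:
                return state, path
        raise RuntimeError("step limit reached before END")


def agent(state: dict) -> dict:
    """Rule-based stand-in for the LLM's decision; a real agent calls the model."""
    last = state["messages"][-1]
    if last["role"] == "tool":
        return {"messages": [{"role": "ai", "content": "Answer using: " + last["content"], "tool_calls": []}]}
    if "blog" in last["content"].lower():
        call = {"name": "retrieve_blog_posts", "args": {"query": last["content"]}, "id": "call_1"}
        return {"messages": [{"role": "ai", "content": "", "tool_calls": [call]}]}
    return {"messages": [{"role": "ai", "content": "Answered from the model's own knowledge.", "tool_calls": []}]}


def tools(state: dict) -> dict:
    """Stand-in tool node: returns a canned passage for the requested call."""
    call = state["messages"][-1]["tool_calls"][0]
    return {"messages": [{"role": "tool", "content": "passage about agent memory", "tool_call_id": call["id"]}]}


def route(state: dict) -> str:
    """Go to 'tools' if the last AI message requested a tool, otherwise finish."""
    return "tools" if state["messages"][-1].get("tool_calls") else END


graph = MiniGraph()
graph.add_node("agent", agent)
graph.add_node("tools", tools)
graph.add_edge(START, "agent")
graph.add_conditional_edge("agent", route)
graph.add_edge("tools", "agent")

for question in ["What types of agent memory does the blog describe?", "What is the weather in SF?"]:
    final_state, path = graph.run({"messages": [{"role": "human", "content": question}]})
    print(path, "->", final_state["messages"][-1]["content"])
```

Running it yields the path ['agent', 'tools', 'agent'] for the blog question and ['agent'] for the weather question, the same two-branch behaviour a conditional edge gives a real LangGraph workflow. The max_steps guard is a design choice of this sketch to stop a runaway loop.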

Why This Specific Code Is an Example

The Flow Aspect

The code uses LangGraph to define a directed graph with nodes for the agent (LLM invocation) and tools (retriever execution), connected by edges.

The conditional edge creates a branching flow: if the LLM outputs tool calls, the graph routes to the tools node; otherwise, it ends.

This structured flow is what turns a simple LLM call into a workflow — it’s not just sequential but adaptive and stateful (tracking messages across steps).
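In the notebook, the state's messages field is annotated with LangGraph's add_messages reducer, which is what lets the message list accumulate across steps instead of being overwritten by each node. My understanding is that it appends new messages and replaces an existing message that carries the same ID. The sketch below is a simplified, hypothetical re-implementation over plain dicts (append_messages is my own name, not a LangGraph function), and it skips details such as assigning IDs to messages that lack one.

```python
from typing import List


def append_messages(current: List[dict], update: List[dict]) -> List[dict]:
    """Simplified message reducer: append new messages, replace ones whose id already exists."""
    merged = list(current)  # copy so the caller's list is not mutated
    position = {message["id"]: i for i, message in enumerate(merged)}
    for message in update:
        if message["id"] in position:
            merged[position[message["id"]]] = message  # same id: overwrite in place
        else:
            position[message["id"]] = len(merged)
            merged.append(message)  # new id: append at the end
    return merged


history = [{"id": "h1", "role": "human", "content": "What are the types of agent memory?"}]
history = append_messages(history, [{"id": "a1", "role": "ai", "content": "", "tool_calls": ["retrieve_blog_posts"]}])
history = append_messages(history, [{"id": "t1", "role": "tool", "content": "Sensory, short-term, long-term"}])
history = append_messages(history, [{"id": "a2", "role": "ai", "content": "Three types: sensory, short-term and long-term."}])
print([m["role"] for m in history])  # ['human', 'ai', 'tool', 'ai']
```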

The Agentic Element

The LLM here functions as an agent that decides the path through function calling (tool invocation).

The LLM is bound to the tools (via bind_tools), and in the call_model function it evaluates the state (messages) to either:

— Call the retrieval tool (when information is missing, routing to further actions).

— Respond directly (no tool calls, routing to completion).

In the second case, the model relies on its default behaviour; for instance, if you ask “What is the weather in SF?”, the blog-post retriever is irrelevant, so the model responds directly without calling it.

This decision-making mimics agentic behaviour.

The LLM reasons step by step (guided by the system prompt) and autonomously chooses its path, enabling iterative refinement (retrieve context, then generate a final answer).

The presence of a graph-based “flow” orchestrated by LangGraph, combined with the LLM’s role as a decision-making agent, makes this a textbook example of an agentic workflow.

It’s a simple single-agent setup but scalable to more complex multi-agent scenarios.

If you’re building on this, LangGraph tutorials often extend it to include memory, planning, or multiple agents for even more advanced agentic patterns.

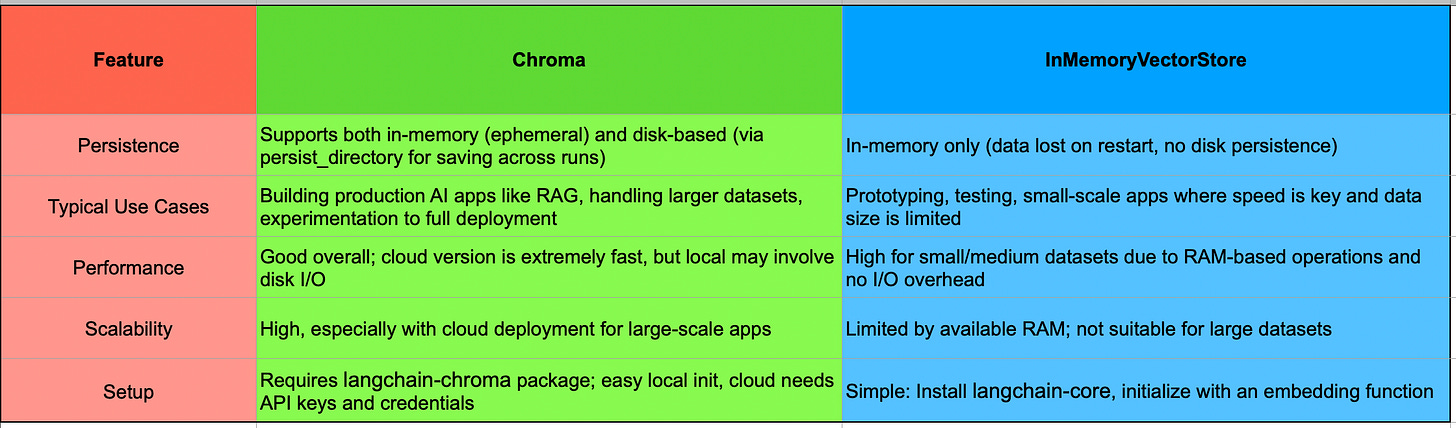

Data Store

The notebook uses a vector store (specifically, Chroma) to store document embeddings for retrieval.
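Chroma handles the real embedding and similarity search. As a dependency-free illustration of the pipeline the notebook builds (split documents into overlapping chunks, embed each chunk, return the nearest chunks for a query), here is a toy sketch. It is not how Chroma or OpenAI embeddings work internally: the bag-of-words "embedding", the cosine scoring, whitespace tokens in place of tiktoken tokens, and all names are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def split_with_overlap(tokens: List[str], chunk_size: int, overlap: int) -> List[List[str]]:
    """Sliding window: each chunk shares `overlap` tokens with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real store would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps (text, vector) pairs and returns the k most similar texts."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, Counter]] = []

    def add_texts(self, texts: List[str]) -> None:
        for text in texts:
            self.entries.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(query_vector, entry[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


document = (
    "Short-term memory is in-context learning. Long-term memory is an external "
    "vector store. Planning involves task decomposition and self-reflection."
)
# Whitespace tokens stand in for the tiktoken tokens the notebook's splitter counts.
chunks = [" ".join(c) for c in split_with_overlap(document.split(), chunk_size=8, overlap=4)]

store = ToyVectorStore()
store.add_texts(chunks)
print(store.search("long-term memory vector store", k=1))
# ['learning. Long-term memory is an external vector store.']
```

The overlap is why a phrase cut at one chunk boundary still appears whole in the neighbouring chunk; the notebook applies the same idea with chunk_size=100 and chunk_overlap=50.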

For example, code snippets from related LangGraph RAG tutorials and examples show:

vectorstore = Chroma.from_documents(
    documents=doc_splits,
    embedding=OpenAIEmbeddings(),
)

Function Calling As A Router

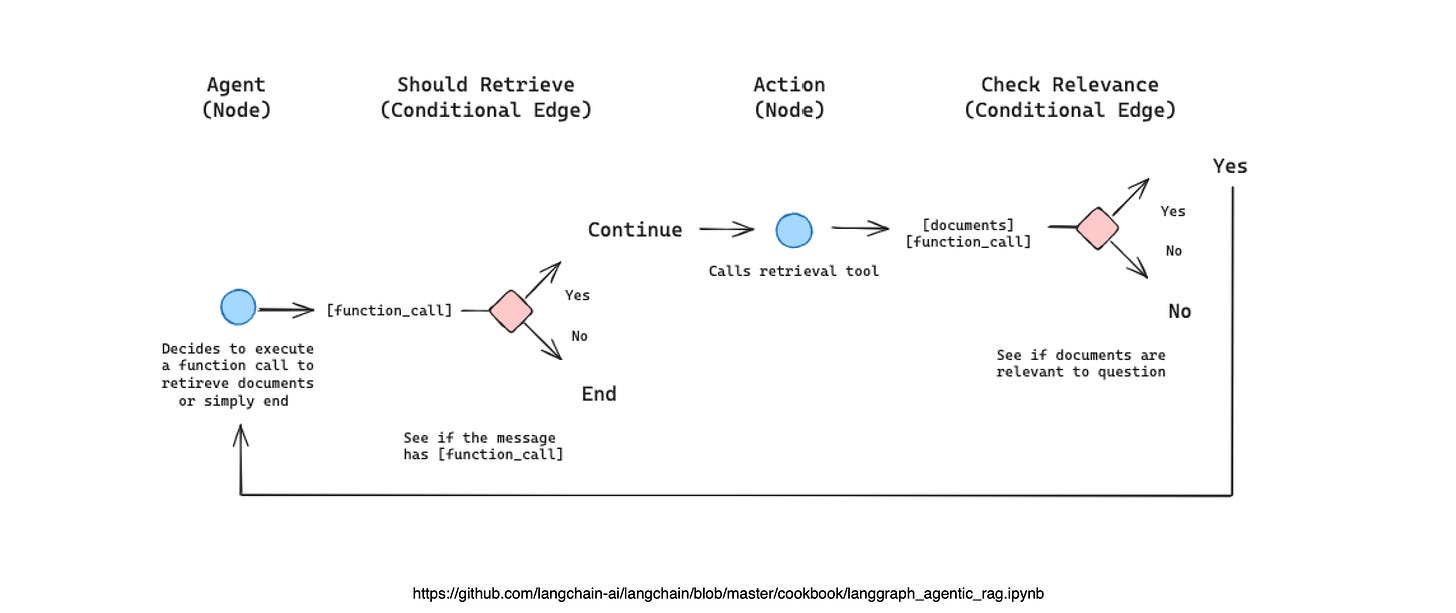

The code effectively illustrates the LLM’s role in function calling (tool invocation) as a decision point or routing mechanism within the LangGraph workflow.

Key Illustration of Routing via Function Calling

LLM as Router

The LLM is bound to tools. When invoked in the call_model function, it processes the input messages and decides whether to:

Respond directly (no tool use, routing to workflow end).

Invoke a tool via function calling (outputting tool_calls in its response, routing to the “tools” node).

This decision is implicit in the model’s output: if the response includes tool_calls (a list of tool invocations), the graph routes to the tools node; otherwise, it concludes.
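The notebook's output shows what the model actually returns when it chooses the tool branch: under additional_kwargs, each call carries a function name plus its arguments as a JSON string, while the message also exposes a parsed tool_calls list with name, args, id and type. A minimal sketch of that parse-then-route step in plain Python, using the payload from that output; normalise_tool_calls and route are hypothetical helpers, not LangChain functions, and the routing rule only approximates the tools_condition behaviour described above.

```python
import json
from typing import List


def normalise_tool_calls(raw_calls: List[dict]) -> List[dict]:
    """Convert OpenAI-style raw tool calls into name/args/id/type dicts."""
    parsed = []
    for call in raw_calls:
        fn = call["function"]
        parsed.append({
            "name": fn["name"],
            "args": json.loads(fn["arguments"] or "{}"),  # arguments arrive as a JSON string
            "id": call["id"],
            "type": "tool_call",
        })
    return parsed


def route(tool_calls: List[dict]) -> str:
    """Any tool call -> 'tools'; otherwise '__end__' (the value of LangGraph's END)."""
    return "tools" if tool_calls else "__end__"


# Raw payload copied from the notebook's first 'agent' output.
raw = [{
    "index": 0,
    "id": "call_Vw9cswL1anFHbjKpbC9gPB1N",
    "function": {"arguments": '{"query":"agent memory types"}', "name": "retrieve_blog_posts"},
    "type": "function",
}]
calls = normalise_tool_calls(raw)
print(calls[0]["args"])  # {'query': 'agent memory types'}
print(route(calls))      # tools
print(route([]))         # __end__
```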

Hence, this setup showcases an agentic workflow with RAG, where the model dynamically routes based on needs (retrieve if info is missing, respond otherwise), enhancing flexibility over static pipelines.

Working Notebook

# Install required packages
!pip install -U --quiet langchain-community tiktoken langchain-openai langchainhub chromadb langchain langgraph langchain-text-splitters

import getpass
import os

# Set OpenAI API key
if "OPENAI_API_KEY" not in os.environ:
    os.environ["OPENAI_API_KEY"] = getpass.getpass("OPENAI_API_KEY:")

# Optional: set up LangSmith for tracing (uncomment if needed)
# if "LANGCHAIN_API_KEY" not in os.environ:
#     os.environ["LANGCHAIN_API_KEY"] = getpass.getpass("LANGCHAIN_API_KEY:")
# os.environ["LANGCHAIN_TRACING_V2"] = "true"
# os.environ["LANGCHAIN_PROJECT"] = "Agentic RAG"

# Load documents and create vectorstore
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

urls = [
    "https://lilianweng.github.io/posts/2023-06-23-agent/",
    "https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/",
    "https://lilianweng.github.io/posts/2023-10-25-adv-attack-llm/",
]

docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=100, chunk_overlap=50
)
doc_splits = text_splitter.split_documents(docs_list)

vectorstore = Chroma.from_documents(
    documents=doc_splits,
    collection_name="rag-chroma",
    embedding=OpenAIEmbeddings(),
)
retriever = vectorstore.as_retriever()

# Create retriever tool
from langchain.tools.retriever import create_retriever_tool

retriever_tool = create_retriever_tool(
    retriever,
    "retrieve_blog_posts",
    "Search and return information about Lilian Weng blog posts on LLM agents, prompt engineering, and adversarial attacks on LLMs.",
)
tools = [retriever_tool]

# Agent state
from typing import Sequence
from typing_extensions import Annotated, TypedDict
from langchain_core.messages import BaseMessage
from langgraph.graph.message import add_messages

class AgentState(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]

# Call model (highlight: this is where the agent decides to use the tool or not via function calling)
from langchain_core.messages import AIMessage, HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# Model for the agent; gpt-4o-mini supports tool (function) calling
llm = ChatOpenAI(model="gpt-4o-mini", streaming=True)

# Bind tools so the model can emit tool calls
llm = llm.bind_tools(tools)

# Define a system prompt to guide the agent
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are a helpful assistant that answers questions using the provided tools when necessary. Think step-by-step."),
        MessagesPlaceholder(variable_name="messages"),
    ]
)

def call_model(state: AgentState):
    # Invoke the chain with the messages
    chain = prompt | llm
    response = chain.invoke({"messages": state["messages"]})

    # Print the LLM's decision on tool use
    if response.tool_calls:
        tool_names = [tool_call["name"] for tool_call in response.tool_calls]
        print(f"LLM decided to use tool(s): {', '.join(tool_names)}")
    else:
        print("LLM decided not to use any tool.")

    # Return the response; the add_messages reducer appends it to the state's messages
    return {"messages": [response]}

# Tool execution
from langgraph.prebuilt import ToolNode

tool_node = ToolNode(tools)

# Define the graph
from langgraph.graph import END, StateGraph, START
from langgraph.prebuilt import tools_condition

workflow = StateGraph(AgentState)
workflow.add_node("agent", call_model)
workflow.add_node("tools", tool_node)
workflow.add_edge(START, "agent")

# Highlight: tool selection - the conditional edge decides whether a tool is needed based on the agent's response
# If the agent message has tool_calls, it goes to "tools"; otherwise, to END
workflow.add_conditional_edges(
    "agent",
    tools_condition,
    {"tools": "tools", END: END},
)
workflow.add_edge("tools", "agent")

graph = workflow.compile()

# Print out the graph
from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))

# Example run to illustrate
import pprint

inputs = {
    "messages": [
        HumanMessage(
            content="What are the types of agent memory based on Lilian Weng's blog post?"
        )
    ]
}

for output in graph.stream(inputs):
    for key, value in output.items():
        pprint.pprint(f"Output from node '{key}':")
        pprint.pprint("---")
        pprint.pprint(value, indent=2, width=80, depth=None)
    pprint.pprint("\n---\n")

Print out of the graph

Output

Step 1: LLM Decision on Tool Use

LLM decided to use tool(s): retrieve_blog_posts

Output from Node ‘agent’

{

'messages': [

AIMessage(

content='',

additional_kwargs={

'tool_calls': [

{

'index': 0,

'id': 'call_Vw9cswL1anFHbjKpbC9gPB1N',

'function': {

'arguments': '{"query":"agent memory types"}',

'name': 'retrieve_blog_posts'

},

'type': 'function'

}

]

},

response_metadata={

'finish_reason': 'tool_calls',

'model_name': 'gpt-4o-mini-2024-07-18',

'system_fingerprint': 'fp_560af6e559',

'service_tier': 'default'

},

id='run--26f85d0e-5f70-4df5-a46a-231775f6c08d-0',

tool_calls=[

{

'name': 'retrieve_blog_posts',

'args': {'query': 'agent memory types'},

'id': 'call_Vw9cswL1anFHbjKpbC9gPB1N',

'type': 'tool_call'

}

]

)

]

}

Output from Node ‘tools’

{

'messages': [

ToolMessage(

content='Memory stream: is a long-term memory module (external database) that records a comprehensive list of agents’ experience in natural language.\n\nEach element is an observation, an event directly provided by the agent.\n- Inter-agent communication can trigger new natural language statements.\n\n\nRetrieval model: surfaces the context to inform the agent’s behavior, according to relevance, recency and importance.\n\nThe design of generative agents combines LLM with memory, planning and reflection mechanisms to enable agents to behave conditioned on past experience, as well as to interact with other agents.\n\nCategorization of human memory.\n\nWe can roughly consider the following mappings:\n\nSensory memory as learning embedding representations for raw inputs, including text, image or other modalities;\nShort-term memory as in-context learning. It is short and finite, as it is restricted by the finite context window length of Transformer.\nLong-term memory as the external vector store that the agent can attend to at query time, accessible via fast retrieval.\n\nTable of Contents\n\n\n\nAgent System Overview\n\nComponent One: Planning\n\nTask Decomposition\n\nSelf-Reflection\n\n\nComponent Two: Memory\n\nTypes of Memory\n\nMaximum Inner Product Search (MIPS)\n\n\nComponent Three: Tool Use\n\nCase Studies\n\nScientific Discovery Agent\n\nGenerative Agents Simulation\n\nProof-of-Concept Examples\n\n\nChallenges\n\nCitation\n\nReferences',

name='retrieve_blog_posts',

id='1f7c3034-8de4-4031-9429-35d2941edba9',

tool_call_id='call_Vw9cswL1anFHbjKpbC9gPB1N'

)

]

}

Step 2: LLM Decision on Tool Use

LLM decided not to use any tool.

Output from Node ‘agent’

{

'messages': [

AIMessage(

content="According to Lilian Weng's blog post, the types of agent memory can be categorized as follows:\n\n1. **Memory Stream**: \n - This serves as a long-term memory module (external database) that records a comprehensive list of the agent's experiences in natural language. Each entry represents an observation or event directly provided by the agent. Inter-agent communication can trigger new natural language statements.\n\n2. **Retrieval Model**: \n - This model surfaces the context that informs the agent's behavior based on relevance, recency, and importance. It helps agents behave conditioned on past experiences and interact with other agents.\n\n3. **Categorization of Human Memory**: \n - This can be roughly mapped as:\n - **Sensory Memory**: Involves learning embedding representations for raw inputs, such as text and images.\n - **Short-term Memory**: Represents in-context learning; it is short and finite due to the limitations of the Transformer’s context window length.\n - **Long-term Memory**: Comprises an external vector store that the agent can access via fast retrieval at query times.\n\nThese memory types facilitate various functionalities and behaviors in agent systems.",

additional_kwargs={},

response_metadata={

'finish_reason': 'stop',

'model_name': 'gpt-4o-mini-2024-07-18',

'system_fingerprint': 'fp_560af6e559',

'service_tier': 'default'

},

id='run--143b529d-6abb-409d-bda7-3c6e1eaef1a9-0'

)

]

}

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.

langchain/cookbook/langgraph_agentic_rag.ipynb at master · langchain-ai/langchain (github.com)

When to Use Functions, a Multi-Tool AI Agent, or Multiple Agents (cobusgreyling.medium.com)