AI Agent Computer Interface (ACI)

The Agent-Computer Interface (ACI) bridges the gap between traditional desktop environments designed for human users and software agents, enabling more efficient interaction at the GUI level.

With the advancements in Claude 3.5 Sonnet, language models have evolved into AI agents capable of autonomous actions such as browsing the web, running computations, controlling software, and completing real-world tasks.

This marks a significant shift from passive text completion to proactive problem-solving and task execution. Claude 3.5 Sonnet does not just respond; it actively interacts with external tools and environments. AI becomes more than a conversational partner: it is an operational agent within digital ecosystems.

Introduction

While human users intuitively respond to visual changes, AI agents built on multimodal large language models (MLLMs) struggle to interpret detailed feedback and execute precise actions, because they operate in slower, discrete cycles and lack an internal coordinate system.

The ACI addresses this by combining image and accessibility tree inputs for better perception and grounding.
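
To make the pairing of image and accessibility tree inputs more concrete, here is a minimal Python sketch. Every name in it (`UIElement`, `Observation`, `ground`) is a hypothetical illustration and not taken from any actual ACI implementation. It assumes the accessibility tree has already been flattened into a list of elements with pixel bounding boxes. The point it tries to show is grounding: the model picks an element by ID, and the tree supplies the coordinates the model lacks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UIElement:
    """One node from a (hypothetical) flattened accessibility tree."""
    element_id: int
    role: str
    name: str
    bbox: tuple  # (left, top, width, height) in pixels


@dataclass(frozen=True)
class Observation:
    """What the agent perceives at one step: pixels plus structure."""
    screenshot_png: bytes
    elements: tuple

    def describe(self) -> str:
        """Render the tree as text the model can read alongside the image."""
        return "\n".join(
            f"[{e.element_id}] {e.role} '{e.name}'" for e in self.elements
        )


def ground(obs: Observation, element_id: int) -> tuple:
    """Map an element ID chosen by the model to a click point (bbox centre)."""
    for e in obs.elements:
        if e.element_id == element_id:
            left, top, width, height = e.bbox
            return (left + width // 2, top + height // 2)
    raise KeyError(f"no element with id {element_id}")


obs = Observation(
    screenshot_png=b"",  # placeholder; a real agent would attach image bytes
    elements=(
        UIElement(1, "button", "Save", (100, 200, 80, 40)),
        UIElement(2, "textbox", "File name", (100, 120, 300, 30)),
    ),
)
print(obs.describe())
print(ground(obs, 1))  # (140, 220)
```

With this shape, the model never has to guess pixel positions from the screenshot alone; it refers to `[1]` or `[2]`, and the interface resolves that reference.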

It also constrains the action space for safety and precision, allowing agents to perform discrete actions with immediate feedback, improving their ability to interact with complex interfaces reliably.
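
A constrained action space with immediate feedback could look roughly like the sketch below. This is an assumption-laden toy, not how any specific ACI is built: the three action types, the `parse_action` validator, and the dictionary-based UI state are all hypothetical names invented for illustration. The idea is that anything outside the allowed set is rejected before it can touch the environment, and every accepted action returns a short feedback message right away.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ActionType(Enum):
    """The only actions the agent may take (hypothetical set)."""
    CLICK = "click"
    TYPE = "type"
    DONE = "done"


@dataclass(frozen=True)
class Action:
    kind: ActionType
    element_id: Optional[int] = None
    text: Optional[str] = None


def parse_action(raw: dict, known_ids: set) -> Action:
    """Validate a model-proposed action; reject anything outside the space."""
    try:
        kind = ActionType(raw.get("action"))
    except ValueError:
        raise ValueError(f"action not allowed: {raw.get('action')!r}") from None
    element_id = raw.get("element_id")
    if kind in (ActionType.CLICK, ActionType.TYPE) and element_id not in known_ids:
        raise ValueError(f"unknown element id: {element_id!r}")
    if kind is ActionType.TYPE and not isinstance(raw.get("text"), str):
        raise ValueError("type action needs a 'text' string")
    return Action(kind, element_id, raw.get("text"))


def step(state: dict, action: Action) -> str:
    """Apply one discrete action to a toy UI state and return feedback text."""
    if action.kind is ActionType.CLICK:
        state["focused"] = action.element_id
        return f"clicked element {action.element_id}"
    if action.kind is ActionType.TYPE:
        state["fields"][action.element_id] = action.text
        return f"typed {action.text!r} into element {action.element_id}"
    return "task marked as done"


state = {"focused": None, "fields": {}}
known_ids = {1, 2}
proposed = [
    {"action": "click", "element_id": 2},
    {"action": "type", "element_id": 2, "text": "report.txt"},
    {"action": "delete_all_files"},  # outside the action space
]
feedback_log = []
for raw in proposed:
    try:
        feedback_log.append(step(state, parse_action(raw, known_ids)))
    except ValueError as err:
        feedback_log.append(f"rejected: {err}")
print("\n".join(feedback_log))
```

The first two proposals are applied one at a time, each with its own feedback line, while the third is rejected without changing the state.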

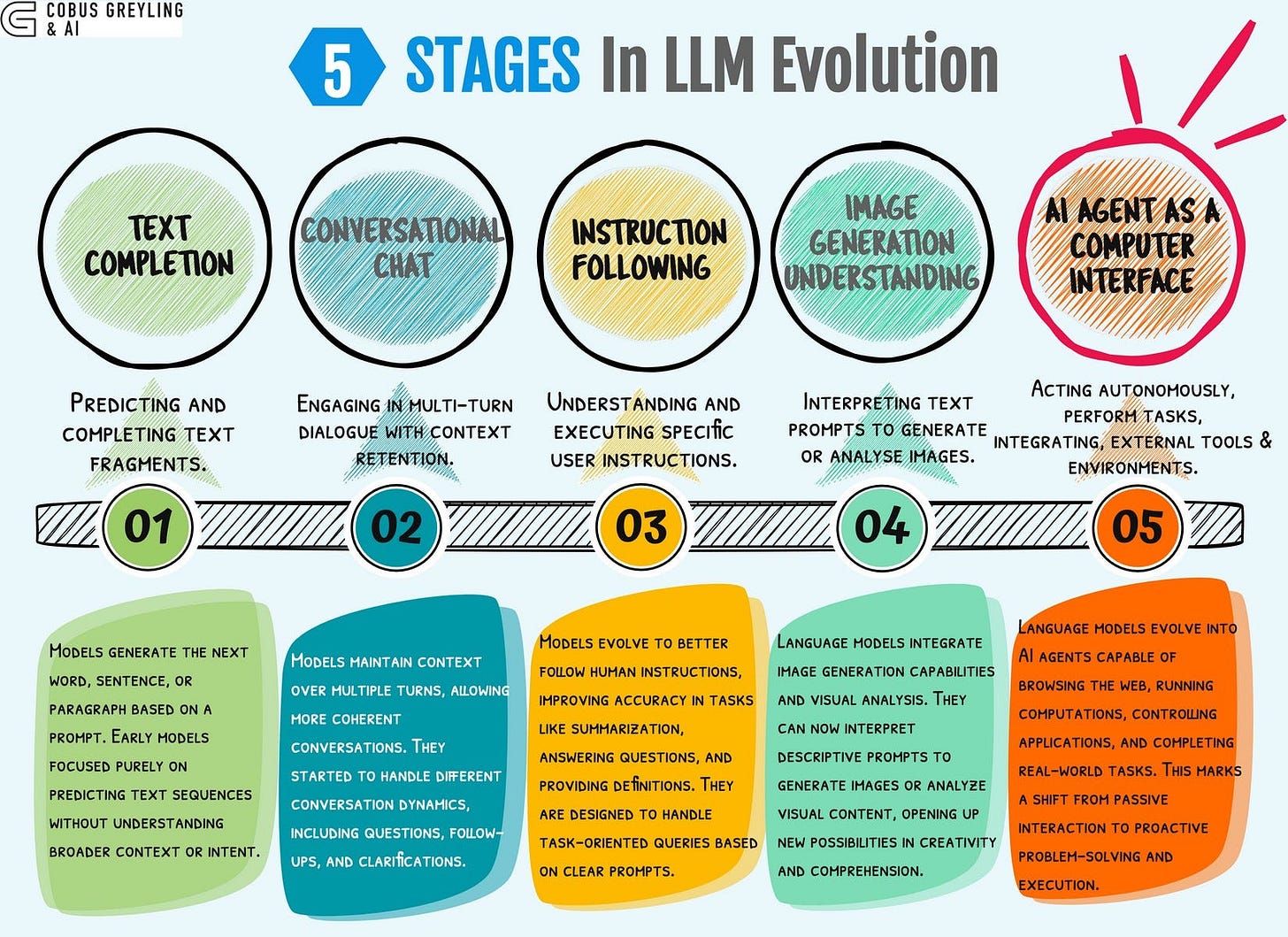

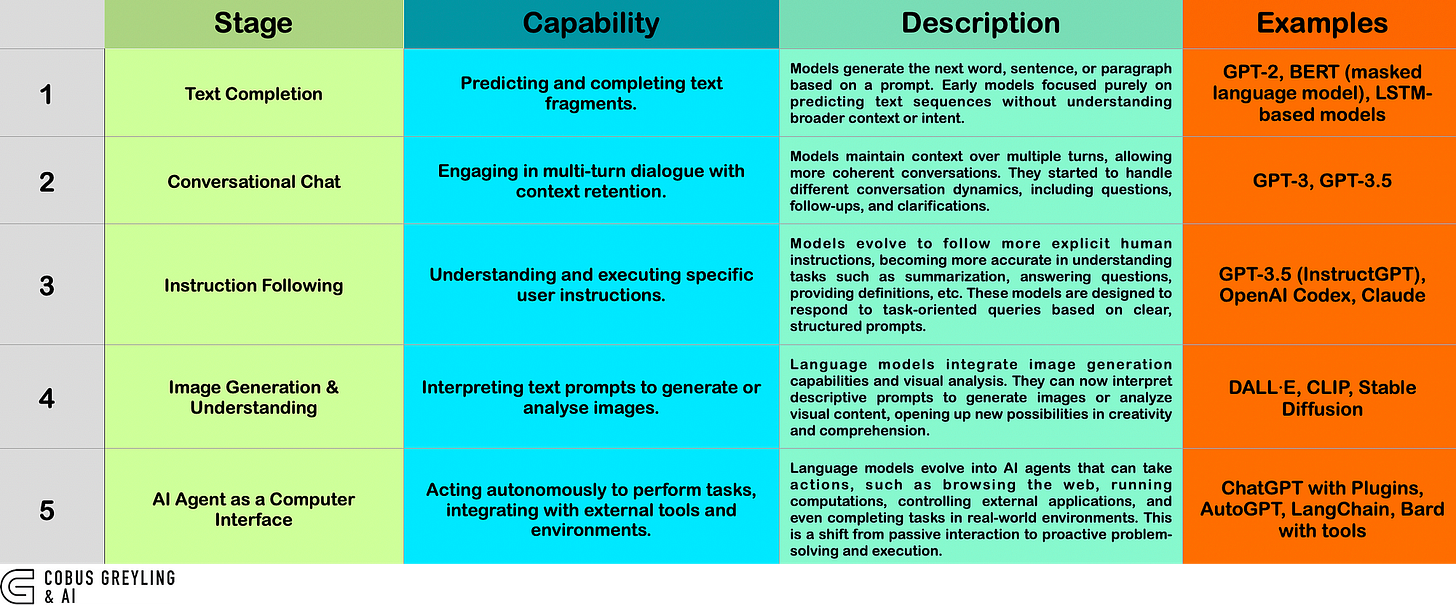

5 Stages in LLM Evolution

Completion: Early models focused on predicting sequences of text.

Chat: Evolved to hold multi-turn conversations while retaining context.

Instruction Following: Better alignment with user commands and goal-driven queries.

Image Capabilities: Text-to-image models expanded the input/output scope beyond text.

AI Agent Interface: Current phase where language models can act autonomously, integrating multiple tools and acting as an interface to perform complex tasks.

Claude 3.5 Sonnet

After reading Anthropic’s blog on Claude’s ability to use software like a human, I found the implications of this advancement really exciting.

Claude’s capacity to navigate graphical user interfaces (GUIs) and perform tasks traditionally done by humans marks a big leap in AI’s practical utility.

What stood out to me was the emphasis on safety, particularly how Anthropic addresses risks like prompt injection attacks, ensuring more reliable and secure AI.

I also appreciate the focus on improving speed and accuracy, which will be critical for making AI more effective in dynamic environments.

This development opens the door to more seamless human-AI collaboration, especially in complex tasks that require precision.

The blog also touched on how Claude’s evolving interaction capabilities will be instrumental in transforming the way AI agents work with software.

I think this step forward could significantly impact fields like automation, making AI not just a tool but an active, reliable agent in everyday tasks.

The AI Agent implementation described in the GitHub repository demonstrates how to enable an AI model to interact with software applications effectively.

It showcases a computer use demo that allows the AI to perform tasks like browsing the web and executing commands, highlighting a shift from merely responding to inquiries to actively completing tasks.

This approach aims to improve human-computer interaction by making AI agents more capable and responsive in various environments.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.