AI Agent Memory

Memory is the Cornerstone of True Intelligence

AI Agents and Agentic Systems are evolving rapidly, and the spotlight keeps shifting: from raw reasoning power to tool use, and now squarely onto memory.

Suddenly, everyone is talking about memory, and for good reason: memory is the foundation of genuine intelligence.

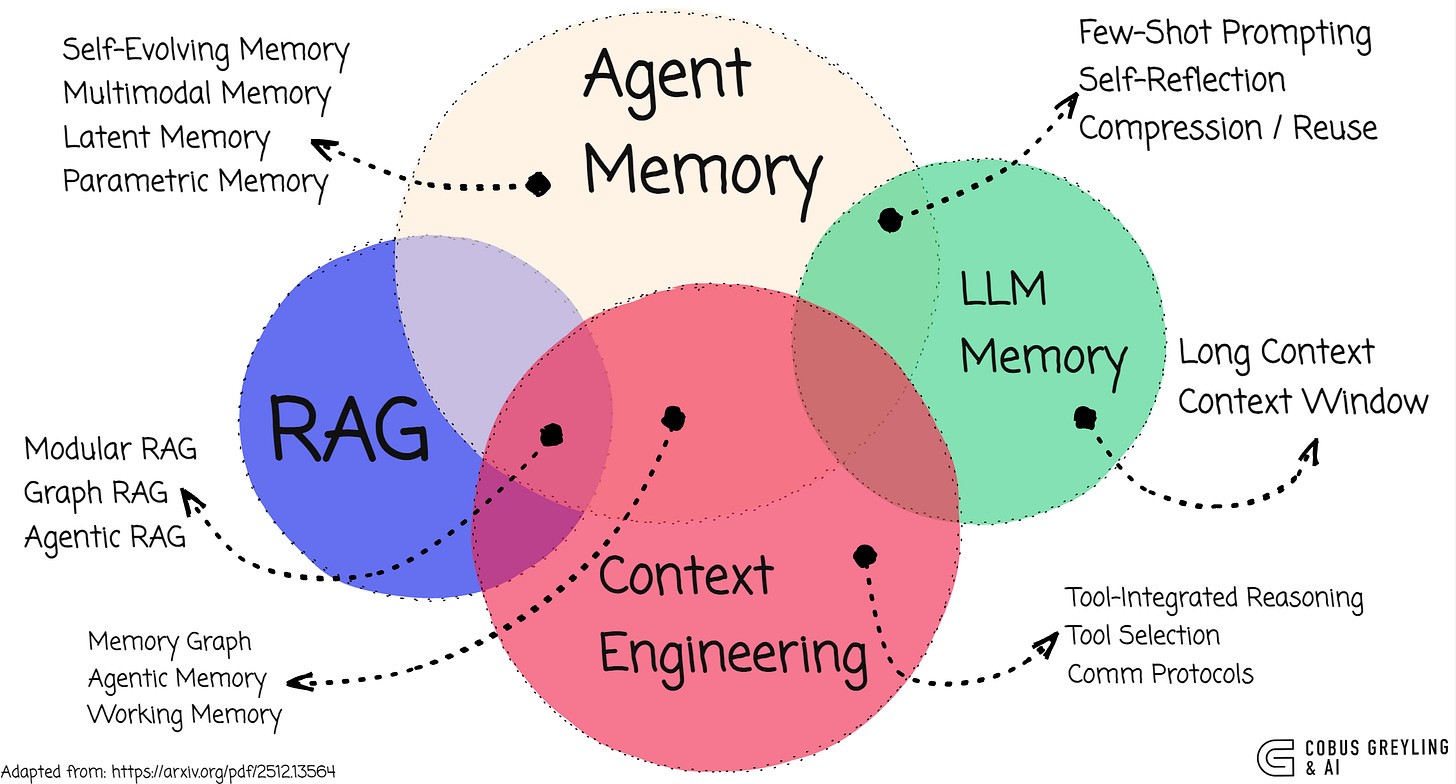

Yet memory in AI Agents is deeply intertwined with context.

Without context, even the most sophisticated conversation becomes meaningless.

Why Memory

Human memory is profoundly personal.

It spans years, devices, apps, emails, photos, messages and fleeting thoughts (immediate context).

It’s fragmented, time-distributed and intimately tied to our identity.

This makes it powerful — but also fragile.

Security and privacy risks are enormous; a single leak can be devastating.

This is precisely why closed ecosystems such as Apple's and Microsoft's hold a massive advantage. They already control the devices, apps, cloud storage and data flows where personal memory lives.

Embedding intelligent memory into an environment they already secure and manage is far easier (and safer) than trying to retrofit it into an open, fragmented world.

Natural Language Demands Rich Context

AI Agents represent the most natural interface we’ve ever built: conversation.

We speak to them in natural language, just as we do with other people.

And human conversation is irreducibly contextual. We constantly retrieve prior shared history to make sense of the present. And as humans, when context is missing, we ask clarifying questions or skilfully fill in the blanks — often with remarkable accuracy.

Today’s AI models still struggle with this. When context is incomplete, they tend to hallucinate — confidently inventing details to patch the gaps.

Reliable, persistent memory is the antidote.
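To make the contrast concrete, here is a minimal Python sketch of that behaviour. It assumes a hypothetical `MemoryStore` of remembered user facts and a hypothetical `respond` helper: when the needed detail is in memory, the agent uses it; when it is not, the agent asks a clarifying question instead of inventing a value.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryStore:
    # Hypothetical store of facts the agent has remembered about the user.
    facts: dict[str, str] = field(default_factory=dict)

    def recall(self, key: str) -> Optional[str]:
        return self.facts.get(key)


def respond(memory: MemoryStore, slot: str, template: str) -> str:
    """Fill a response slot from memory, or ask instead of guessing."""
    value = memory.recall(slot)
    if value is None:
        # Context is missing: ask a clarifying question rather than invent a value.
        return f"Before I continue, could you tell me your {slot.replace('_', ' ')}?"
    return template.format(value)


memory = MemoryStore({"home_city": "Cape Town"})
print(respond(memory, "home_city", "Here is the weather for {}."))
print(respond(memory, "preferred_airline", "Booking you on {}."))
```

A real agent would retrieve memories by relevance rather than by an exact dictionary key, but the design choice carries over: a memory miss is treated as a signal to ask, not to guess.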

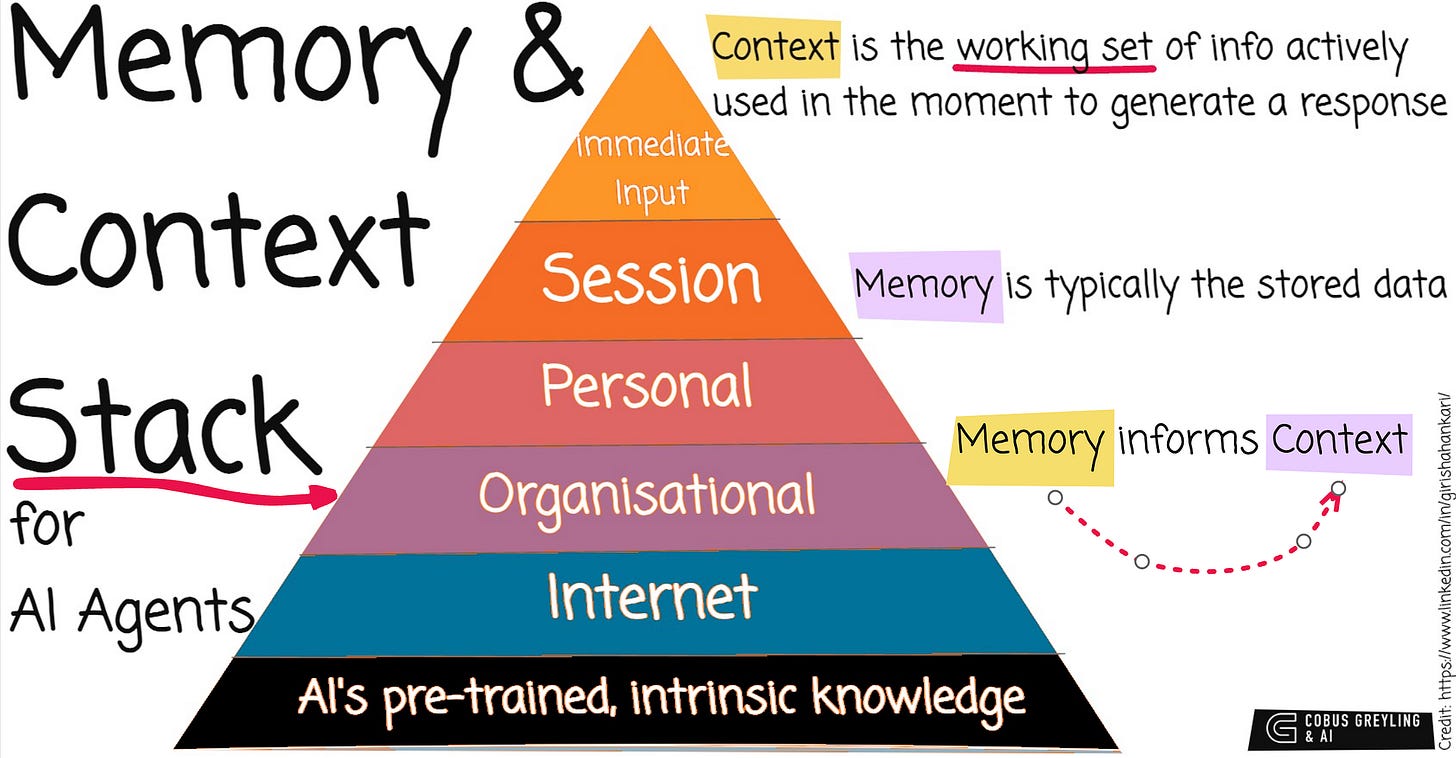

Memory vs. Context — There is a Subtle but Crucial Distinction

Every conversation requires context. That context is constructed from retrieved memory.

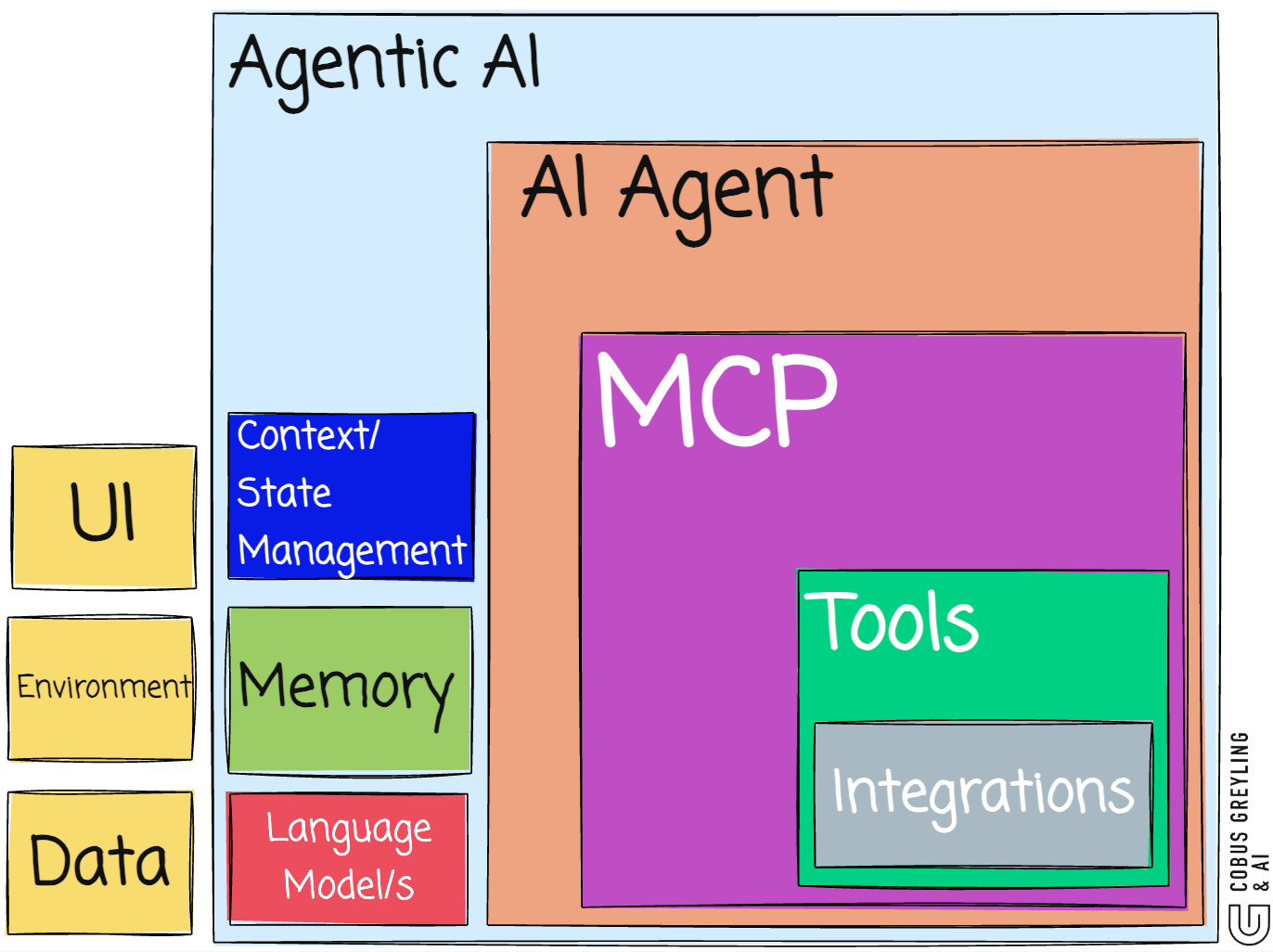

Memory lives at different layers in an AI Agent system — short-term working memory, long-term episodic recall, external knowledge bases, tool outputs, user history, and more.

The AI Agent must intelligently decide when and from where to pull the right pieces to inform the current context.
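One way to picture that decision is a context builder that always keeps recent working memory and then pulls only the most relevant items from the longer-term layers. The sketch below is illustrative, not a reference design. The `MemoryItem` type, the `build_context` function and the word-overlap scoring are all hypothetical. Word overlap stands in for the embedding similarity a production retriever would more likely use.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    layer: str  # hypothetical layer label, e.g. "episodic", "knowledge", "tool", "user"
    text: str


def overlap_score(query: str, text: str) -> int:
    # Crude relevance signal: number of lowercase words shared with the query.
    # Stand-in for the embedding similarity a real retriever would more likely use.
    return len(set(query.lower().split()) & set(text.lower().split()))


def build_context(query: str, working: list[str],
                  long_term: list[MemoryItem], k: int = 2) -> list[str]:
    """Keep all recent working memory, then add up to k relevant long-term items."""
    # sorted() is stable (even with reverse=True), so ties keep their original order.
    ranked = sorted(long_term, key=lambda m: overlap_score(query, m.text), reverse=True)
    retrieved = [m for m in ranked[:k] if overlap_score(query, m.text) > 0]
    return working + [f"[{m.layer}] {m.text}" for m in retrieved]


working = ["user: can you rebook my flight?"]
long_term = [
    MemoryItem("user", "prefers aisle seats on flight bookings"),
    MemoryItem("episodic", "last week the user asked about hotel prices"),
    MemoryItem("tool", "calendar shows a meeting in london on friday"),
]

context = build_context("rebook my flight to london", working, long_term, k=2)
for line in context:
    print(line)
```

Here the user preference and the calendar tool output both make it into the context, while the unrelated episodic memory about hotel prices is left out.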

The Evolution of Context

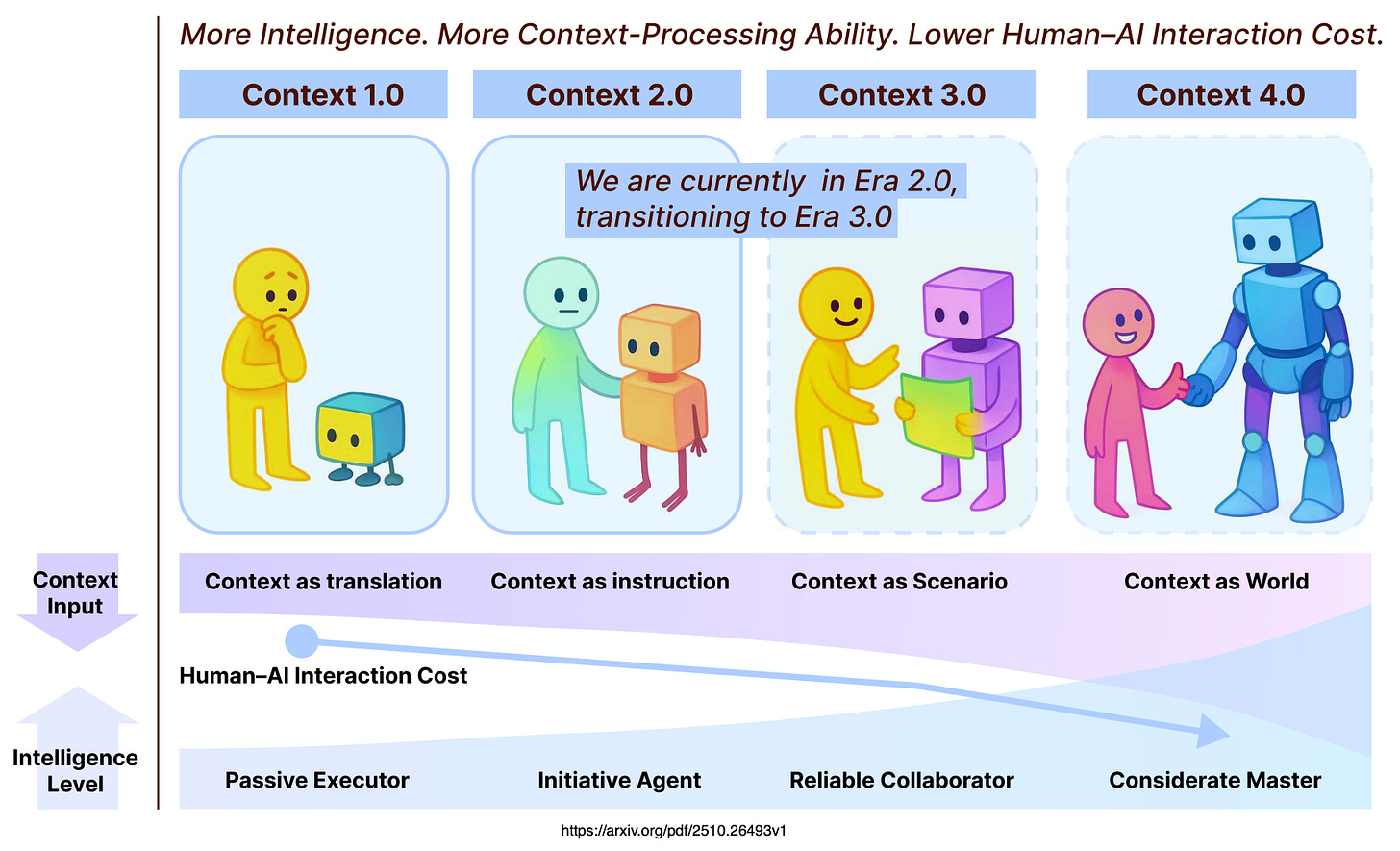

A recent study (arXiv 2510.26493) proposes a framework showing how context itself is evolving through four distinct eras:

Context 1.0: Context as Translation (Pre-2020)

AI as a Passive Executor. Humans had to translate intent into rigid commands, menus, or structured inputs.

Context was minimal and explicit.

Context 2.0: Context as Instruction (2020–Present)

AI as an Initiative Agent. LLMs understand natural language and reason over ambiguity, but still depend heavily on detailed prompts and instructions. We’re here now — and already transitioning forward.

Context 3.0: Context as Scenario (Near Future)

AI as a Reliable Collaborator. Near-human intelligence enables deep understanding of complex, high-entropy scenarios — emotions, social dynamics, unspoken goals. Collaboration feels seamless and peer-like.

Context 4.0: Context as World (Far Future)

AI as a Considerate Master. Superintelligent systems perceive the full world of context, anticipate unstated needs, and proactively shape interactions.

Roles reverse: AI becomes the guide.

This progression reveals a clear trend: as AI handles ever-richer contexts, human effort plummets, interaction becomes frictionless, and agents shift from tools to true partners.

The Sticky Future of Memory

Robust, secure memory will create the stickiness that model providers and platforms desperately want.

Users won’t switch away from an AI Agent that truly remembers their preferences, history, goals, and quirks — especially if that memory feels safe and private.

We’re also likely to see an entirely new category emerge: dedicated memory applications and systems — specialised stores, retrieval engines and privacy-first memory layers designed specifically for AI Agents.
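As a rough illustration of what "privacy-first" might mean at the storage level, the Python sketch below scopes every read and write to a single user and supports outright deletion. `ScopedMemory` and its methods are hypothetical names. A real memory layer would likely also need encryption, access control and audit logging, which are left out here.

```python
from collections import defaultdict


class ScopedMemory:
    """Hypothetical privacy-first memory layer: every read and write is scoped to one user."""

    def __init__(self) -> None:
        self._store: dict[str, list[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> None:
        self._store[user_id].append(fact)

    def recall(self, user_id: str) -> list[str]:
        # Only this user's namespace is visible; return a copy so callers cannot mutate it.
        return list(self._store.get(user_id, []))

    def forget(self, user_id: str) -> None:
        # User-initiated deletion removes the entire namespace.
        self._store.pop(user_id, None)


mem = ScopedMemory()
mem.remember("alice", "prefers morning meetings")
mem.remember("bob", "vegetarian")
print(mem.recall("alice"))  # ['prefers morning meetings']
mem.forget("alice")
print(mem.recall("alice"))  # []
```

Scoping by user at the storage layer means a retrieval bug in the agent cannot surface one person's memories in another person's conversation.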

In the end, the winners in the agent era won’t just be the smartest models.

They’ll be the ones that remember best — and most responsibly.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.

Reference study:

https://arxiv.org/abs/2512.13564
