AI Focussed on Collaboration, Not Completion

AI Agents: We Are Too Fixated on Completion & Not on Collaboration

In Short

Chatbots were very collaborative, not by choice but by necessity.

The development frameworks and technical affordances we have today did not exist a few years ago, when chatbots became highly popular.

Chatbots worked best when user input was short and not too detailed. Single-intent utterances with two or three entities worked best.

The process was very collaborative, because the chatbot worked best when the conversation unfolded step by step between the user and the conversational UI.

With GenAI, we went to the other extreme.

Users can give all their context in one go, with multiple intents and entities (nouns), and the LLM-based UI can go away and quickly come back with an answer.

But that answer is often inaccurate with respect to the user's unexpressed or implicit intent.

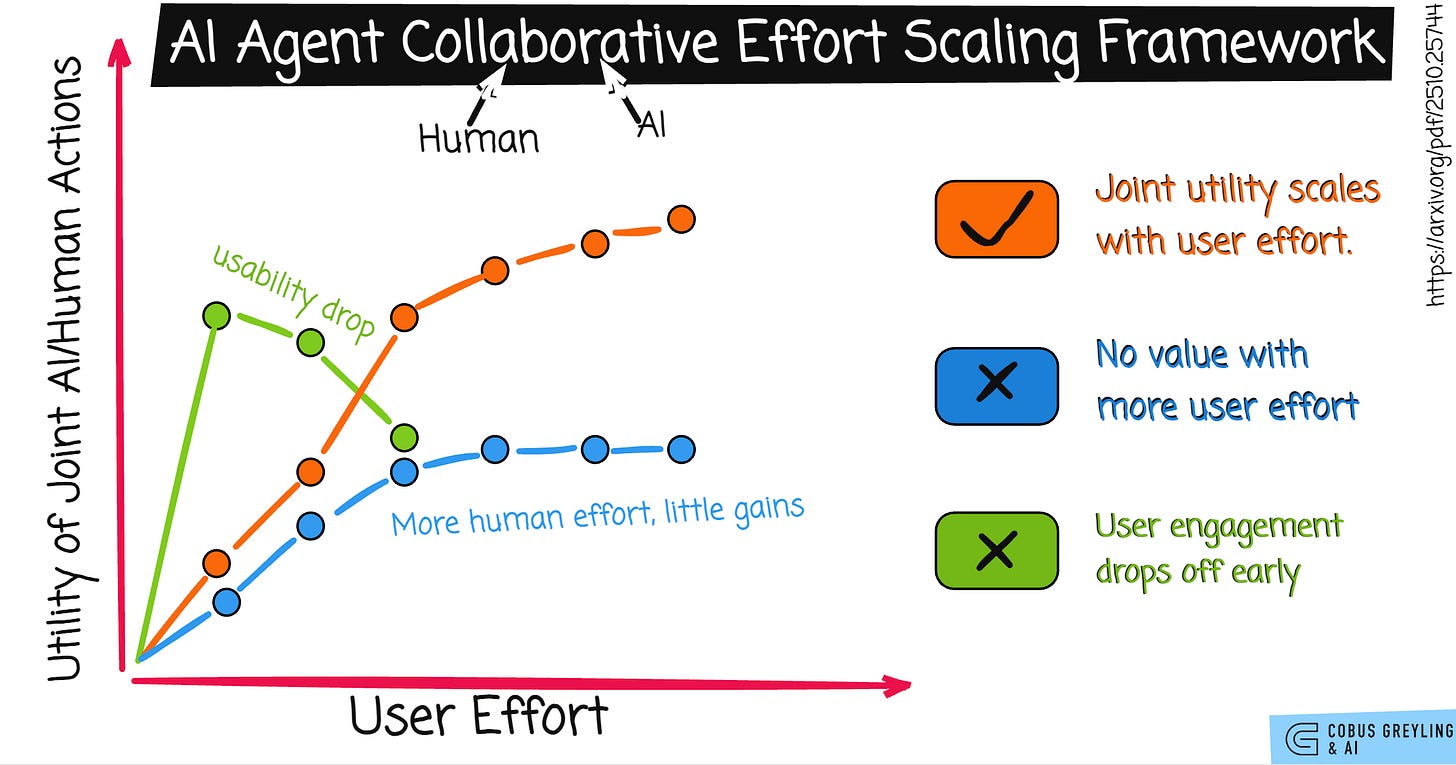

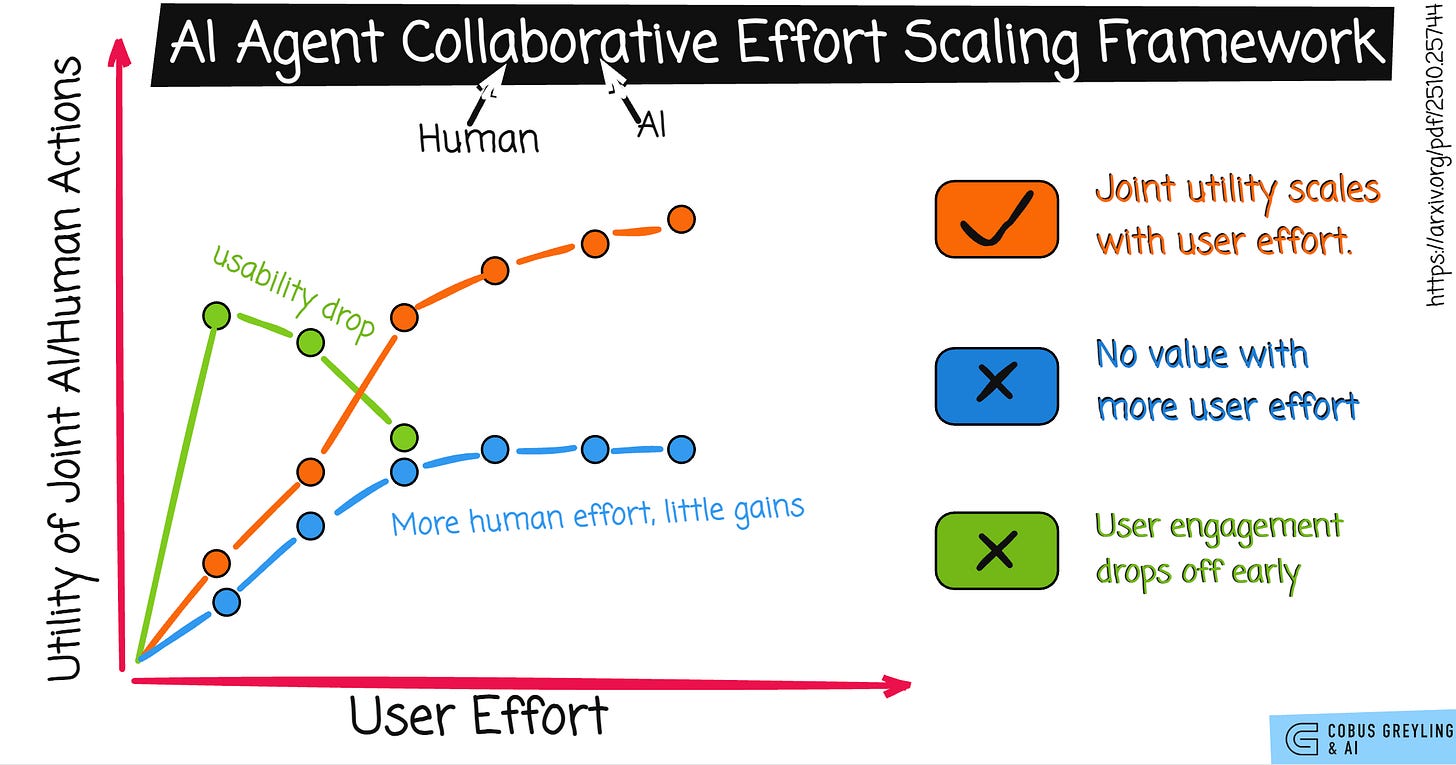

Frameworks like collaborative effort scaling make it possible to quantify how human collaboration boosts the joint utility of Generative AI (GenAI) Agents.

By tracking how performance rises with user effort on complex, evolving tasks, we can design agents that engage users dynamically when doing so creates value, fostering sustainable interactions.

At the same time, agents can default to autonomous completion for straightforward, low-effort scenarios where added human input yields diminishing or no returns.
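As a rough illustration of that trade-off, the sketch below decides whether an agent should ask the user for another turn or finish on its own. It assumes the agent can estimate its expected utility with and without one more user turn, plus a cost for the user's effort. `TaskEstimate`, `should_engage_user` and all the numbers are hypothetical; this is not the study's method.

```python
from dataclasses import dataclass


@dataclass
class TaskEstimate:
    """Hypothetical estimates an agent might hold before deciding how to proceed."""
    utility_autonomous: float  # expected quality (0..1) if the agent finishes alone
    utility_with_turn: float   # expected quality (0..1) after one more user turn
    effort_cost: float         # assumed cost of one user turn, in the same units


def should_engage_user(est: TaskEstimate) -> bool:
    """Ask the user only when the expected gain from one more turn outweighs its cost."""
    marginal_gain = est.utility_with_turn - est.utility_autonomous
    return marginal_gain > est.effort_cost


# A simple, well-specified request: extra input barely helps, so complete autonomously.
simple = TaskEstimate(utility_autonomous=0.90, utility_with_turn=0.92, effort_cost=0.05)
# A complex, underspecified request: a clarifying turn is worth the user's effort.
complex_task = TaskEstimate(utility_autonomous=0.40, utility_with_turn=0.70, effort_cost=0.05)

print(should_engage_user(simple))        # False
print(should_engage_user(complex_task))  # True
```

In a real agent the hard part is producing those estimates; the decision rule itself stays this simple.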

More Detail

I generally find Generative AI UIs too verbose and too fixated on reaching a conclusion, instead of collaborating.

With the advent of AI Agents as advanced conversational UIs, most, if not all, conversation design practices were ditched. But many of these conversation design principles are now making a comeback.

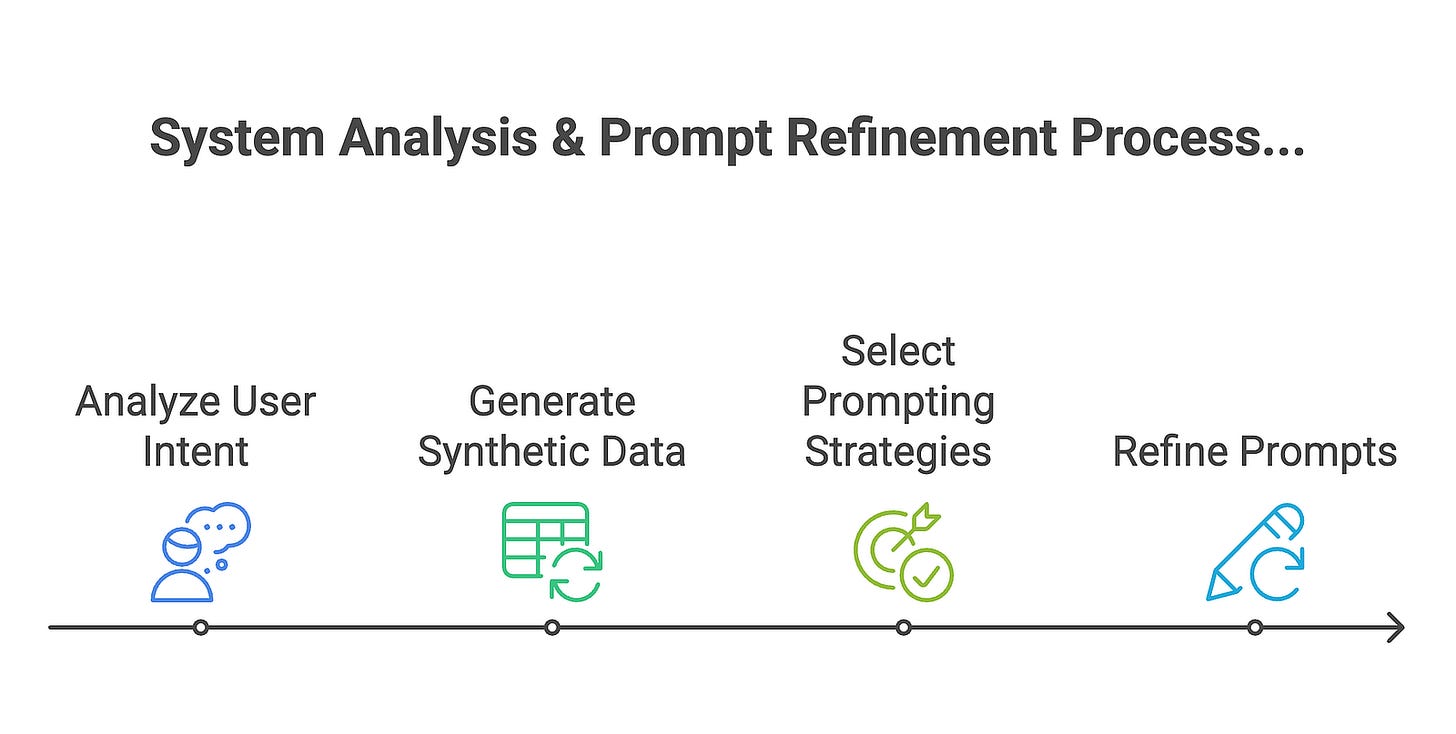

For instance, intents were the backbone of traditional chatbots and have been declared dead a number of times. However, in agentic workflows, intents have made a comeback as a way to classify what the user wants.

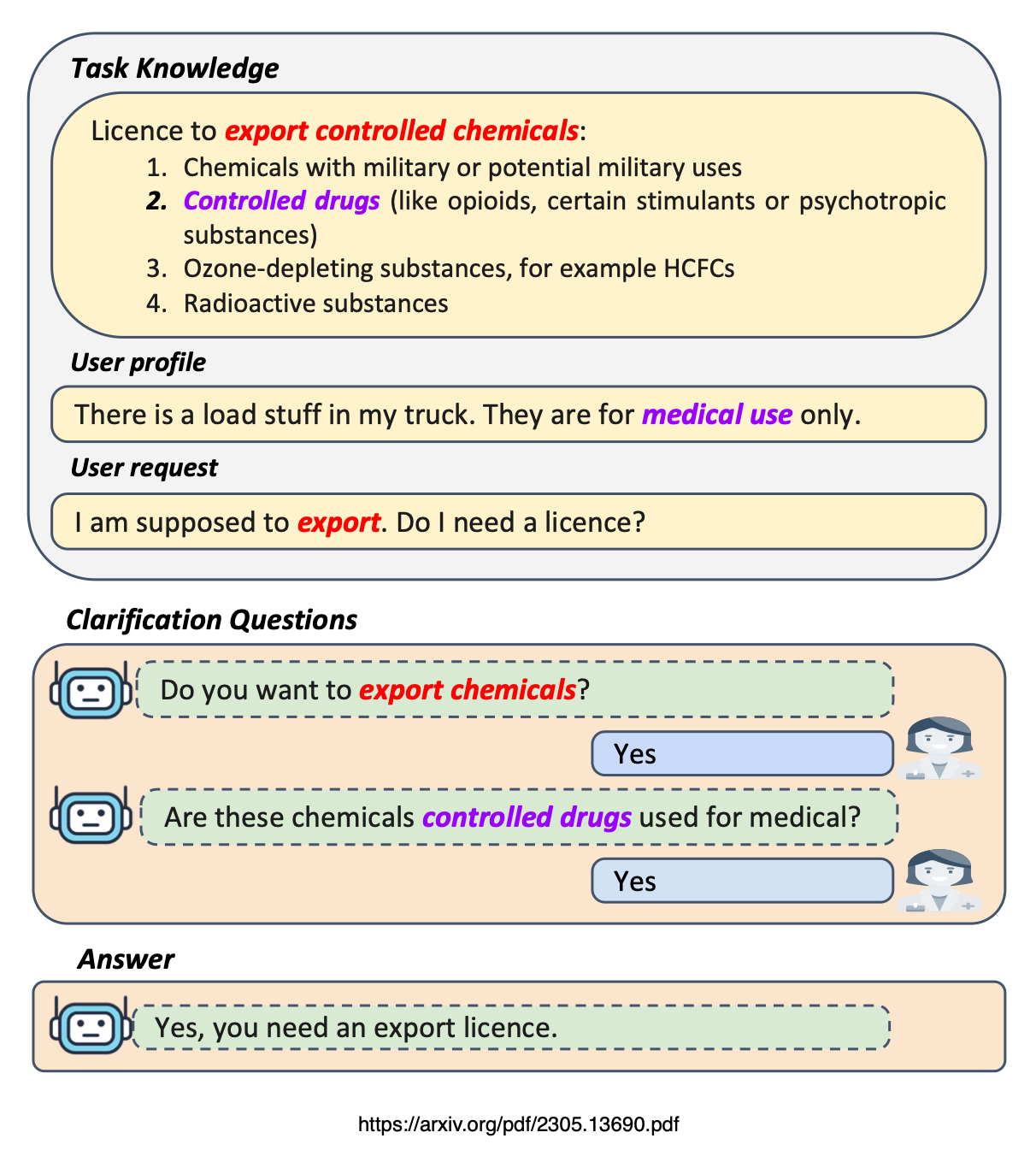
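A minimal sketch of intent classification as a routing step in an agentic workflow. The intent names, keywords and the `route` function are hypothetical, and keyword overlap stands in for the LLM prompt or trained classifier a production agent would more likely use. Unlike a classic single-intent chatbot, it returns every matching intent.

```python
# Hypothetical intents and trigger keywords; a real agent would typically use an
# LLM prompt or a trained classifier instead of keyword overlap.
INTENT_KEYWORDS = {
    "book_hotel": {"hotel", "room", "stay", "accommodation"},
    "find_activity": {"museum", "tour", "activity", "things", "sightseeing"},
    "check_budget": {"budget", "cost", "price", "cheap", "cheaper"},
}


def classify_intents(utterance: str) -> list[str]:
    """Return every intent whose keywords appear in the utterance (multi-intent aware)."""
    words = set(utterance.lower().replace(",", " ").replace("?", " ").split())
    return [intent for intent, keys in INTENT_KEYWORDS.items() if words & keys]


def route(utterance: str) -> str:
    """Pick a (hypothetical) sub-agent, or fall back to asking the user to clarify."""
    intents = classify_intents(utterance)
    if not intents:
        return "clarify"  # no recognised intent: ask the user what they mean
    return intents[0] if len(intents) == 1 else "multi:" + "+".join(intents)


print(route("Find me a cheap hotel in Paris"))  # multi:book_hotel+check_budget
print(route("Any museum tour tomorrow?"))       # find_activity
print(route("Hello there"))                     # clarify
```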

Disambiguation is another conversation design principle that has come back to help us.

Recently, OpenAI revealed how its deep research API and ChatGPT work under the hood. A big part of the underlying orchestration was clarifying and establishing the user's intended context.
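The clarification step can be approximated as a check for missing details before any expensive work starts. This is not OpenAI's implementation; the required slots, questions and helper names below are assumptions made for illustration.

```python
# Hypothetical required details for a research-style request; the slot names
# are illustrative and not taken from any OpenAI API.
REQUIRED_SLOTS = {
    "topic": "What topic should I research?",
    "depth": "Do you want a quick overview or an in-depth report?",
    "audience": "Who is the report for (e.g. engineers, executives)?",
}


def clarifying_questions(request: dict) -> list[str]:
    """Return one question for every required detail the user has not supplied yet."""
    return [question for slot, question in REQUIRED_SLOTS.items() if not request.get(slot)]


def handle(request: dict) -> str:
    questions = clarifying_questions(request)
    if questions:
        # Establish the user's intended context before doing the work.
        return "Before I start: " + " ".join(questions)
    return f"Researching '{request['topic']}' ({request['depth']}) for {request['audience']}."


print(handle({"topic": "AI agent evaluation"}))
print(handle({"topic": "AI agent evaluation", "depth": "in-depth", "audience": "engineers"}))
```

The first call returns two clarifying questions; only the second, fully specified request proceeds.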

Due to the lack of technical affordances when building chatbots not too long ago, the conversation between the chatbot / conversational UI and the user had to be highly iterative, based on a multi-turn conversation.

Users were also not able to submit much context or be too verbose; the ideal was for users to use as few words as possible, because too many intents and entities created confusion.

Then, with generative AI, the more verbose users were, the better. Users could supply rich context in the form of intents and entities, and the AI would do the rest and reach a final conclusion.

Seemingly an ideal situation: verbose, detailed user input leading to a final answer from the AI.

According to a recent study, AI Agents and the way they are evaluated remain centred on one-shot task completion, failing to account for the inherently iterative and collaborative nature of many real-world problems.

I saw this when getting Grok to write a software application for me. It is really hard to be explicit about every aspect of the application up front.

Having an interactive and collaborative conversation to reach the user’s goal turns out not to be a bad thing after all…

Using language models, especially for coding, has shown us that human goals are often underspecified and evolve over time.

So the study argues for a shift from building and assessing task-completion AI Agents to developing collaborative AI Agents, assessed not only by the quality of their final outputs but by how well they engage with and enhance human effort throughout the problem-solving process.

To measure this, the study proposes collaborative effort scaling, a framework that captures how an agent's utility grows with increasing user involvement.

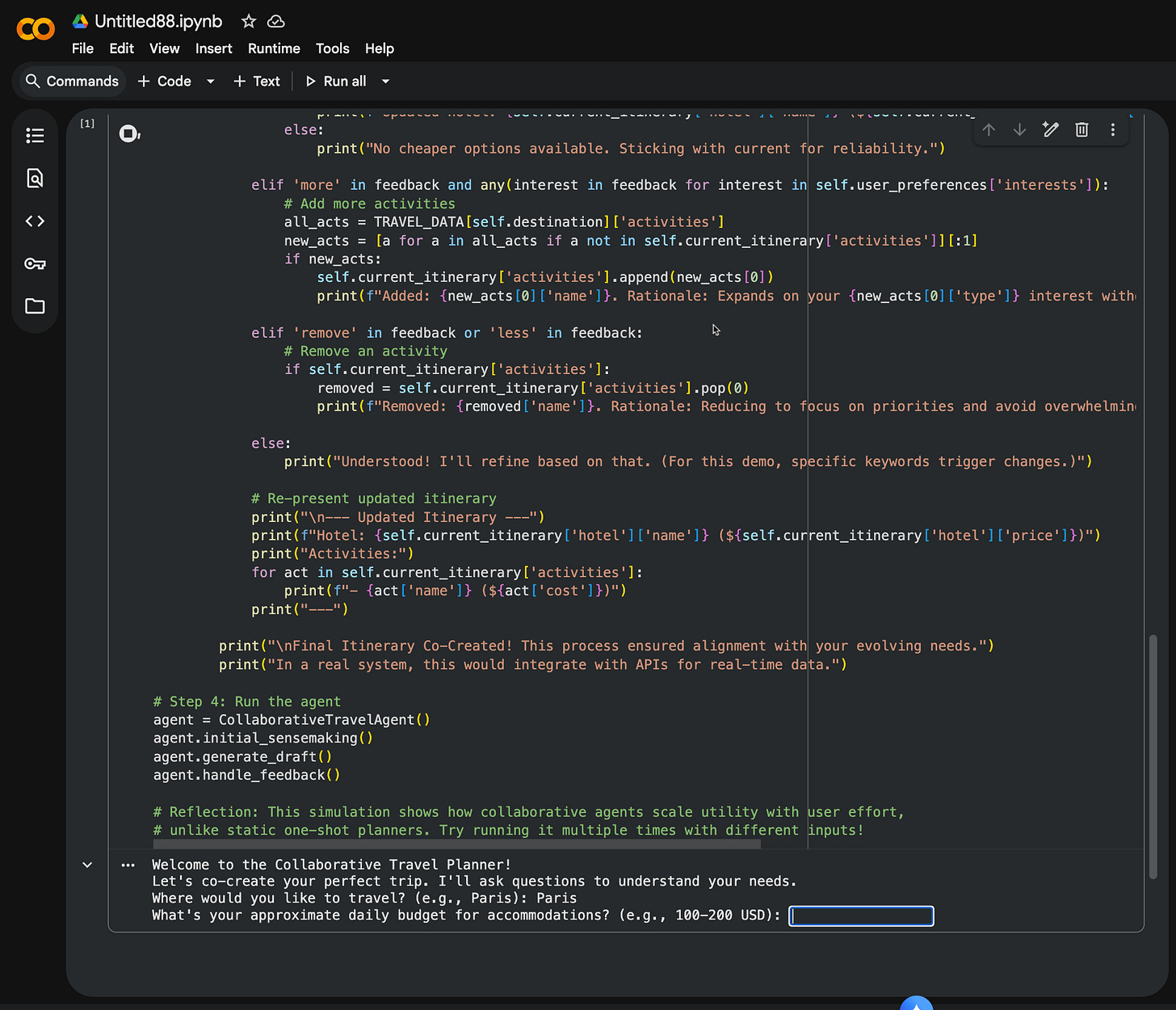
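One way to picture collaborative effort scaling is to group sessions by how much effort the user invested and watch how average utility changes. The sketch below assumes effort is counted in user turns and utility is a 0..1 task score; the session data is made up, and the study's actual effort and utility measures may differ.

```python
from collections import defaultdict
from statistics import mean

# Toy, made-up session logs: (number of user turns, final task score in 0..1).
# These numbers are illustrative only and are not results from the study.
SESSIONS = [(0, 0.40), (0, 0.50), (1, 0.60), (1, 0.70),
            (2, 0.75), (2, 0.85), (3, 0.82), (3, 0.86)]


def effort_curve(sessions):
    """Average utility at each level of user effort (here: number of user turns)."""
    by_effort = defaultdict(list)
    for turns, score in sessions:
        by_effort[turns].append(score)
    return {turns: mean(scores) for turns, scores in sorted(by_effort.items())}


def marginal_gains(curve):
    """Utility gained by each additional turn; values near zero mean diminishing returns."""
    levels = sorted(curve)
    return {b: curve[b] - curve[a] for a, b in zip(levels, levels[1:])}


curve = effort_curve(SESSIONS)
for turns, gain in marginal_gains(curve).items():
    print(f"turn {turns}: utility {curve[turns]:.2f}, gain {gain:+.2f}")
```

With these toy numbers the gain per extra turn shrinks (roughly +0.20, +0.15, +0.04), which is the kind of diminishing-returns point at which an agent could stop asking and simply complete the task.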

The code below is a working notebook showing a collaborative travel planning AI Agent.

It illustrates the collaborative way of working proposed in the research discussed above.

Completion ≠ Collaboration.

It demonstrates an iterative, user-engaged AI Agent for travel planning that does the following:

Supports iterative sense-making through clarifying questions.

Explains rationales behind recommendations.

Responds constructively to evolving feedback.

Maintains reliability by avoiding low-quality or inconsistent content.

No external libraries are needed beyond the Python standard library. You can run the cells sequentially in Colab.

```python
import re

# Step 1: Define travel data (simplified for illustration)
TRAVEL_DATA = {
    'paris': {
        'hotels': [
            {'name': 'Luxury Hotel A', 'price': 300, 'location': 'City Center', 'rating': 4.8, 'amenities': ['Spa', 'Rooftop View']},
            {'name': 'Budget Hotel B', 'price': 150, 'location': 'Near Metro', 'rating': 4.2, 'amenities': ['Free WiFi', 'Breakfast']},
            {'name': 'Mid-Range Hotel C', 'price': 200, 'location': 'Historic District', 'rating': 4.5, 'amenities': ['Gym', 'Pet-Friendly']},
        ],
        'activities': [
            {'name': 'Eiffel Tower', 'cost': 25, 'duration': '2 hours', 'type': 'Sightseeing', 'crowd_level': 'High'},
            {'name': 'Louvre Museum', 'cost': 17, 'duration': '3 hours', 'type': 'Cultural', 'crowd_level': 'Medium'},
            {'name': 'Seine River Cruise', 'cost': 15, 'duration': '1 hour', 'type': 'Relaxation', 'crowd_level': 'Low'},
        ],
    },
    # Add more destinations as needed
}

# Explicit order for crowd levels (alphabetical sorting would put 'High' before 'Low')
CROWD_ORDER = {'Low': 0, 'Medium': 1, 'High': 2}


def matches_interest(interest, activity_type):
    """Crude stem match, so 'culture' matches 'Cultural' and 'sightseeing' matches 'Sightseeing'."""
    return interest[:6] in activity_type.lower()


class CollaborativeTravelAgent:
    def __init__(self):
        self.user_preferences = {}   # Stores evolving user intent
        self.current_itinerary = {}  # Draft itinerary
        self.destination = None

    def initial_sensemaking(self):
        """Step 2: Iterative sensemaking - gather initial input and clarify underspecified goals."""
        print("Welcome to the Collaborative Travel Planner!")
        print("Let's co-create your perfect trip. I'll ask questions to understand your needs.")
        self.destination = input("Where would you like to travel? (e.g., Paris): ").strip().lower()
        if self.destination not in TRAVEL_DATA:
            print("Sorry, I only have data for Paris right now. Let's use that as an example.")
            self.destination = 'paris'
        self.user_preferences['budget'] = input("What's your approximate daily budget for accommodations? (e.g., 100-200 USD): ").strip()
        raw = input("What are your main interests? (e.g., sightseeing, culture, relaxation): ")
        # Normalise interests: strip spaces, lowercase, drop empty entries
        interests = [i.strip().lower() for i in raw.split(',') if i.strip()]
        self.user_preferences['interests'] = interests
        # Clarify if needed: relaxation mixed with other interests is ambiguous
        if 'relaxation' in interests and len(interests) > 1:
            confirm = input("You mentioned relaxation along with other activities. Would you prefer a balanced itinerary or more focus on unwinding? (balanced/unwind): ").strip().lower()
            self.user_preferences['focus'] = confirm if confirm in ['balanced', 'unwind'] else 'balanced'
        else:
            self.user_preferences['focus'] = 'balanced'
        print(f"\nGot it! Planning for {self.destination.capitalize()} with budget {self.user_preferences['budget']} and interests: {', '.join(interests)}.")

    def generate_draft(self):
        """Step 3: Produce an initial draft with rationale explanations."""
        data = TRAVEL_DATA[self.destination]
        # Extract the numbers from input such as "100-200 USD" (int("200 USD") would raise ValueError)
        budget_range = [int(n) for n in re.findall(r'\d+', self.user_preferences['budget'])]
        if not budget_range:
            budget_range = [0, float('inf')]  # No number given: don't filter by budget
        min_budget = budget_range[0]
        max_budget = budget_range[-1] if len(budget_range) > 1 else budget_range[0] * 2
        filtered_hotels = [h for h in data['hotels'] if min_budget <= h['price'] <= max_budget]
        if not filtered_hotels:
            filtered_hotels = data['hotels'][:2]  # Fallback
            print("Note: Expanding budget slightly for options.")
        # Select the top-rated hotel among the candidates
        selected_hotel = max(filtered_hotels, key=lambda h: h['rating'])
        self.current_itinerary['hotel'] = selected_hotel
        # Filter activities by interests
        interests = self.user_preferences['interests']
        filtered_activities = [a for a in data['activities'] if any(matches_interest(i, a['type']) for i in interests)]
        if self.user_preferences['focus'] == 'unwind':
            # Prefer the least crowded activities
            filtered_activities = sorted(filtered_activities, key=lambda a: CROWD_ORDER[a['crowd_level']])[:2]
        else:
            filtered_activities = filtered_activities[:2]
        self.current_itinerary['activities'] = filtered_activities
        # Explain rationale
        print("\n--- Initial Draft Itinerary ---")
        print(f"**Hotel Recommendation: {selected_hotel['name']} (${selected_hotel['price']}/night)**")
        print(f"Rationale: Selected based on your budget ({self.user_preferences['budget']}) and highest rating ({selected_hotel['rating']}).")
        print(f"Location: {selected_hotel['location']}, Amenities: {', '.join(selected_hotel['amenities'])}")
        print("\n**Activity Suggestions:**")
        for activity in filtered_activities:
            print(f"- {activity['name']} (${activity['cost']}, {activity['duration']})")
            print(f"  Rationale: Matches your interest in {activity['type'].lower()}. Crowd level: {activity['crowd_level']}.")
        print("--- End Draft ---")

    def handle_feedback(self):
        """Step 4: Constructive response to evolving feedback - adapt iteratively."""
        data = TRAVEL_DATA[self.destination]
        while True:
            feedback = input("\nWhat feedback do you have? (e.g., 'Change hotel to cheaper', 'Add more cultural activities', 'Done'): ").strip().lower()
            if feedback == 'done':
                break
            # Adapt based on feedback (simple keyword matching for illustration)
            if 'cheaper' in feedback or 'budget' in feedback:
                current_price = self.current_itinerary['hotel']['price']
                cheaper_hotels = [h for h in data['hotels'] if h['price'] < current_price]
                if cheaper_hotels:
                    hotel = min(cheaper_hotels, key=lambda h: h['price'])
                    self.current_itinerary['hotel'] = hotel
                    print(f"Updated hotel: {hotel['name']} (${hotel['price']}). Rationale: Lower cost while staying in budget.")
                else:
                    print("No cheaper options available. Sticking with current for reliability.")
            elif 'more' in feedback or 'add' in feedback.split():
                # Add one activity not yet planned, preferring a type named in the feedback
                remaining = [a for a in data['activities'] if a not in self.current_itinerary['activities']]
                preferred = [a for a in remaining if a['type'].lower()[:6] in feedback]
                new_acts = preferred or remaining
                if new_acts:
                    self.current_itinerary['activities'].append(new_acts[0])
                    print(f"Added: {new_acts[0]['name']}. Rationale: Expands on your {new_acts[0]['type'].lower()} interest without overload.")
                else:
                    print("No more activities available for this destination.")
            elif 'remove' in feedback or 'less' in feedback:
                # Remove an activity
                if self.current_itinerary['activities']:
                    removed = self.current_itinerary['activities'].pop(0)
                    print(f"Removed: {removed['name']}. Rationale: Reducing to focus on priorities and avoid an overwhelming schedule.")
                else:
                    print("No activities left to remove.")
            else:
                print("Understood! I'll refine based on that. (For this demo, specific keywords trigger changes.)")
            # Re-present the updated itinerary
            hotel = self.current_itinerary['hotel']
            print("\n--- Updated Itinerary ---")
            print(f"Hotel: {hotel['name']} (${hotel['price']})")
            print("Activities:")
            for act in self.current_itinerary['activities']:
                print(f"- {act['name']} (${act['cost']})")
            print("---")
        print("\nFinal Itinerary Co-Created! This process ensured alignment with your evolving needs.")
        print("In a real system, this would integrate with APIs for real-time data.")


# Step 5: Run the agent
agent = CollaborativeTravelAgent()
agent.initial_sensemaking()
agent.generate_draft()
agent.handle_feedback()

# Reflection: This simulation shows how collaborative agents scale utility with user effort,
# unlike static one-shot planners. Try running it multiple times with different inputs!
```

Below is the conversational UI, if I can call it that, from running the notebook.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.

Completion ≠ Collaboration: Scaling Collaborative Effort with Agents (arxiv.org)

Cobus Greyling: Where AI Meets Language (www.cobusgreyling.com)

GitHub: clinicalml/collaborative-effort-scaling (github.com)

Designing and Evaluating LLM Agents Through the Lens of Collaborative Effort Scaling (www.szj.io)