AI Web Agents

AI Web Agents are a type of Agentic Application capable of autonomously exploring the web to accomplish specific tasks.

In this article I take a step back to briefly redefine agents as we came to know them through the LangChain and LlamaIndex implementations.

I then consider how virtually all of the focus used to be on grounding and establishing context, and how that focus is now shifting from grounding to exploration.

Recent research on agents with vision and GUI (web and OS) navigation capabilities includes work from Apple, Microsoft, IBM and others.

Contrary to previous assertions, planning emerges as the primary bottleneck limiting overall agent performance. ~ Source

Unlike traditional AI models that rely on predefined data, these agents actively interact with the web by identifying, interpreting, and interacting with elements such as text boxes, buttons, and links to fulfill their objectives.

This shift marks a transition from static, grounded knowledge to dynamic exploration, where AI agents not only retrieve information but also perform tasks like data extraction, form submission, or even navigating complex workflows.

This opens new horizons for AI-driven task automation, making systems more adaptable and autonomous in real-world applications.

Grounding to Exploration

Grounding Fundamentals

The focus was always on grounding: establishing contextual relevance, injecting relevant real-time data and mitigating hallucination.

In the context of large language models (LLMs), in-context learning played a crucial role in improving their ability to generate contextual and relevant responses.

In-context learning allows an LLM to make decisions based on a sequence of inputs (prompts) provided during a specific session, without modifying its underlying parameters.

Essentially, the model learns from the context given within the input (like examples or patterns), allowing it to understand and respond more accurately in real-time, without the need for external fine-tuning.

This makes it more adaptable, able to generate responses closely aligned with the specific examples or tasks in the input sequence.
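As a minimal sketch of the idea, the snippet below assembles a few-shot prompt in plain Python. The example reviews, the labels and the `build_few_shot_prompt` helper are illustrative assumptions, and no model is called. The point is only that the "learning" lives entirely in the prompt text, not in the model weights.

```python
# Hypothetical labelled examples; in practice these come from your own domain.
EXAMPLES = [
    ("The delivery was fast and the product works well.", "positive"),
    ("The app crashes every time I open it.", "negative"),
]


def build_few_shot_prompt(examples, query):
    """Return a prompt that shows the model worked examples before the query."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left open so the model completes the pattern.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(EXAMPLES, "Support never answered my emails.")
print(prompt)
```

Swapping the examples changes the behaviour for that session only; nothing about the model itself is modified.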

This in turn brings RAG into the picture. Retrieval-Augmented Generation (RAG) complements in-context learning by improving an LLM’s ability to handle complex or specialised tasks.

In RAG, the model can retrieve information from external knowledge sources or databases during the generation process. This ensures the LLM can access up-to-date or specific knowledge that it might not have been trained on directly, thus enhancing the quality of its responses.
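The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration under stated assumptions: the three documents are made up, word overlap stands in for the embedding search a real system would use, and the helper names (`tokenize`, `retrieve`, `build_rag_prompt`) are hypothetical. No LLM is called; the output is the grounded prompt that would be sent to one.

```python
import re

# Hypothetical knowledge base; a real system would typically use a vector store.
DOCUMENTS = [
    "The refund window for online orders is 30 days from delivery.",
    "Support is available on weekdays between 08:00 and 17:00.",
    "Gift cards cannot be exchanged for cash.",
]


def tokenize(text):
    """Lower-case the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Rank documents by word overlap with the query (a stand-in for embedding search)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query, documents):
    """Inject the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


rag_prompt = build_rag_prompt("How many days is the refund window?", DOCUMENTS)
print(rag_prompt)
```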

Exploration

With Agentic applications, LLM implementations are evolving from simply grounding responses in existing knowledge to actively exploring and interacting with their environment to complete tasks.

Instead of relying solely on static information retrieval, agents are now equipped to autonomously navigate GUIs like web environments or operating systems to gather real-time data.

This allows them to execute complex workflows, such as filling out forms, solving problems, or adapting to changing user needs in dynamic settings.

By combining exploration with reasoning and decision-making, these agents can perform tasks more efficiently and autonomously. This shift represents a more robust and flexible approach to task automation, aligning LLMs closer to human-like problem-solving capabilities.
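To make the contrast with static retrieval concrete, here is a toy observe/act loop. Everything in it is an assumption for illustration: `SITE` is a made-up site map, and a simple breadth-first policy stands in for the LLM that would normally decide which link to follow next.

```python
from collections import deque

# Hypothetical site map: each page has visible text and the links it exposes.
SITE = {
    "/home": {"text": "Welcome", "links": ["/products", "/about"]},
    "/about": {"text": "Company history", "links": ["/home"]},
    "/products": {"text": "Our catalogue", "links": ["/products/pricing"]},
    "/products/pricing": {"text": "Pro plan: 20 USD per month", "links": []},
}


def explore(site, start, goal_keyword, max_steps=10):
    """Visit pages breadth-first until one contains the goal keyword.

    Returns the list of pages visited, ending at the page that satisfied
    the goal, or None if the goal was not found within the step budget.
    """
    frontier = deque([start])
    visited = []
    while frontier and len(visited) < max_steps:
        url = frontier.popleft()
        if url in visited:
            continue
        visited.append(url)              # act: navigate to the page
        page = site[url]                 # observe: read what is on it
        if goal_keyword.lower() in page["text"].lower():
            return visited
        frontier.extend(page["links"])   # decide: queue the links found
    return None


trail = explore(SITE, "/home", "pro plan")
print(trail)
```

The answer is not known up front and is not sitting in an index; the agent only finds it by acting on what it observes at each step.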

Multi-Modal Language Models

Multi-Modal Language Models, also known as Foundation Models, are the enablers of AI Agents with exploratory capabilities.

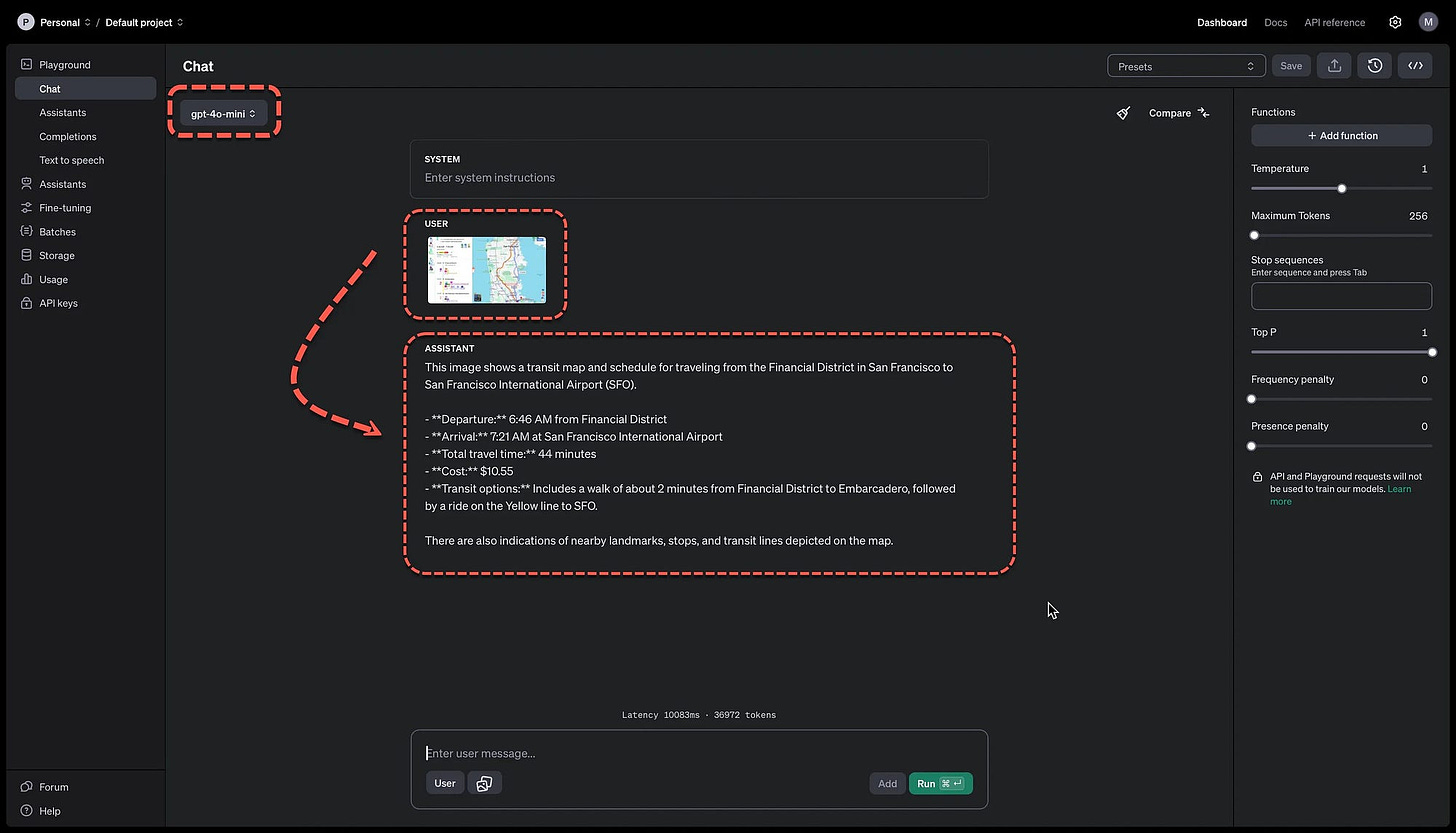

In the image from the OpenAI Playground, using the GPT-4o-mini model, a user uploads an image and the model generates descriptive text or related information based on its visual content.

This feature allows the model to process visual data and produce relevant textual outputs, expanding its utility beyond text-based tasks.
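Outside the Playground, the same kind of request is usually expressed as a single user message that carries both text and image parts. The sketch below only builds that message and does not call any API. The layout (a `content` list with `text` and `image_url` parts, the image passed as a base64 data URL) follows the OpenAI chat format for image inputs as I understand it; the helper name and the placeholder bytes are assumptions.

```python
import base64


def build_image_message(image_bytes, question, mime_type="image/png"):
    """Package an image and a text question as a single user message."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real screenshot read from disk.
message = build_image_message(b"not-a-real-image", "Describe this image.")
print(message["content"][0])
```

For a web agent, the image would typically be a screenshot of the current page and the question would ask which element to interact with next.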

AI Agents & Agentic Applications

What are AI agents or Agentic Applications? Well, this is the best definition I could come up with:

𝘈𝘯 𝘈𝘐 𝘈𝘨𝘦𝘯𝘵 𝘪𝘴 𝘢 𝘴𝘰𝘧𝘵𝘸𝘢𝘳𝘦 𝘱𝘳𝘰𝘨𝘳𝘢𝘮 𝘥𝘦𝘴𝘪𝘨𝘯𝘦𝘥 𝘵𝘰 𝘱𝘦𝘳𝘧𝘰𝘳𝘮 𝘵𝘢𝘴𝘬𝘴 𝘰𝘳 𝘮𝘢𝘬𝘦 𝘥𝘦𝘤𝘪𝘴𝘪𝘰𝘯𝘴 𝘢𝘶𝘵𝘰𝘯𝘰𝘮𝘰𝘶𝘴𝘭𝘺 𝘣𝘢𝘴𝘦𝘥 𝘰𝘯 𝘵𝘩𝘦 𝘵𝘰𝘰𝘭𝘴 𝘵𝘩𝘢𝘵 𝘢𝘳𝘦 𝘢𝘷𝘢𝘪𝘭𝘢𝘣𝘭𝘦.

𝘈𝘴 𝘴𝘩𝘰𝘸𝘯, 𝘢𝘨𝘦𝘯𝘵𝘴 𝘳𝘦𝘭𝘺 𝘰𝘯 𝘰𝘯𝘦 𝘰𝘳 𝘮𝘰𝘳𝘦 𝘓𝘢𝘳𝘨𝘦 𝘓𝘢𝘯𝘨𝘶𝘢𝘨𝘦 𝘔𝘰𝘥𝘦𝘭𝘴 𝘰𝘳 𝘍𝘰𝘶𝘯𝘥𝘢𝘵𝘪𝘰𝘯 𝘔𝘰𝘥𝘦𝘭𝘴 𝘵𝘰 𝘣𝘳𝘦𝘢𝘬 𝘥𝘰𝘸𝘯 𝘤𝘰𝘮𝘱𝘭𝘦𝘹 𝘵𝘢𝘴𝘬𝘴 𝘪𝘯𝘵𝘰 𝘮𝘢𝘯𝘢𝘨𝘦𝘢𝘣𝘭𝘦 𝘴𝘶𝘣-𝘵𝘢𝘴𝘬𝘴.

𝘛𝘩𝘦𝘴𝘦 𝘴𝘶𝘣-𝘵𝘢𝘴𝘬𝘴 𝘢𝘳𝘦 𝘰𝘳𝘨𝘢𝘯𝘪𝘴𝘦𝘥 𝘪𝘯𝘵𝘰 𝘢 𝘴𝘦𝘲𝘶𝘦𝘯𝘤𝘦 𝘰𝘧 𝘢𝘤𝘵𝘪𝘰𝘯𝘴 𝘵𝘩𝘢𝘵 𝘵𝘩𝘦 𝘢𝘨𝘦𝘯𝘵 𝘤𝘢𝘯 𝘦𝘹𝘦𝘤𝘶𝘵𝘦.

𝘛𝘩𝘦 𝘢𝘨𝘦𝘯𝘵 𝘢𝘭𝘴𝘰 𝘩𝘢𝘴 𝘢𝘤𝘤𝘦𝘴𝘴 𝘵𝘰 𝘢 𝘴𝘦𝘵 𝘰𝘧 𝘥𝘦𝘧𝘪𝘯𝘦𝘥 𝘵𝘰𝘰𝘭𝘴, 𝘦𝘢𝘤𝘩 𝘸𝘪𝘵𝘩 𝘢 𝘥𝘦𝘴𝘤𝘳𝘪𝘱𝘵𝘪𝘰𝘯 𝘵𝘩𝘢𝘵 𝘩𝘦𝘭𝘱𝘴 𝘪𝘵 𝘥𝘦𝘵𝘦𝘳𝘮𝘪𝘯𝘦 𝘸𝘩𝘦𝘯 𝘢𝘯𝘥 𝘩𝘰𝘸 𝘵𝘰 𝘶𝘴𝘦 𝘵𝘩𝘦𝘴𝘦 𝘵𝘰𝘰𝘭𝘴 𝘪𝘯 𝘴𝘦𝘲𝘶𝘦𝘯𝘤𝘦 𝘵𝘰 𝘢𝘥𝘥𝘳𝘦𝘴𝘴 𝘤𝘩𝘢𝘭𝘭𝘦𝘯𝘨𝘦𝘴 𝘢𝘯𝘥 𝘳𝘦𝘢𝘤𝘩 𝘢 𝘧𝘪𝘯𝘢𝘭 𝘤𝘰𝘯𝘤𝘭𝘶𝘴𝘪𝘰𝘯.
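The role of tool descriptions can be sketched without any framework. In the snippet below, `SimpleTool`, `describe_tools` and `run_action` are hypothetical names, the search tool is a stub that makes no network call, and the model that would choose the action is left out. It only shows the two mechanical parts: rendering descriptions for the prompt, and dispatching the action the model picks.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    name: str
    description: str
    func: Callable[[str], str]


TOOLS = [
    # Stubbed search: returns a placeholder string instead of calling the web.
    SimpleTool("Search", "look up facts about people, places and events",
               lambda q: f"(search results for: {q})"),
    SimpleTool("SquareRoot", "compute the square root of a number",
               lambda x: str(math.sqrt(float(x)))),
]


def describe_tools(tools):
    """Render the tool list the way it could be shown to the model in its prompt."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


def run_action(tools, action, action_input):
    """Dispatch an action name and input chosen by the model to the matching tool."""
    by_name = {t.name: t for t in tools}
    if action not in by_name:
        return f"Unknown tool: {action}"
    return by_name[action].func(action_input)


print(describe_tools(TOOLS))
print(run_action(TOOLS, "SquareRoot", "1955"))
```

The model never sees the function bodies, only the names and descriptions, which is why vague descriptions tend to produce poor tool choices.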

Below is a good example: the LangChain implementation of WebVoyager. The image is quite self-explanatory.

More On Exploration

Generalised web agents are designed to autonomously navigate and interact with complex web environments, performing tasks that range from basic information retrieval to complex multi-step procedures.

As the demand for automation and intelligent interaction with web interfaces increases, these agents are becoming vital across a range of applications, including virtual assistants, automated customer service, and AI copilots.

Their ability to handle diverse web-based tasks efficiently makes them increasingly important in improving user experience and streamlining workflows.

Web agents consist of two key components: planning and grounding.

Planning refers to the agent’s ability to determine the correct sequence of actions needed to complete a given task.

Below is an example of an agent’s planning capabilities. The agent is asked a complex, compound and slightly ambiguous question, and its response demonstrates planning, decomposition, actions, observations, thoughts and, finally, reaching an answer.

### Request:

agent.run("Who is regarded as the farther of the iPhone and what is the square root of his year of birth?")

### Agent Response:

> Entering new AgentExecutor chain...

I need to find out who is regarded as the father of the iPhone and his year of birth. Then, I will calculate the square root of his year of birth.

Action: Search

Action Input: father of the iPhone year of birth

Observation: Family. Steven Paul Jobs was born in San Francisco, California, on February 24, 1955, to Joanne Carole Schieble and Abdulfattah "John" Jandali (Arabic: عبد الف ...

Thought:I found that Steve Jobs is regarded as the father of the iPhone and he was born in 1955. Now I will calculate the square root of his year of birth.

Action: Calculator

Action Input: sqrt(1955)

Observation: Answer: 44.21538193886829

Thought:I now know the final answer.

Final Answer: Steve Jobs is regarded as the father of the iPhone, and the square root of his year of birth (1955) is approximately 44.22.

> Finished chain.

'Steve Jobs is regarded as the father of the iPhone, and the square root of his year of birth (1955) is approximately 44.22.'

Grounding, on the other hand, involves identifying and interacting with the relevant web elements based on the agent’s planning decisions.

While planning focuses on the high-level strategy, grounding ensures accurate interaction with the specific elements required to execute each step successfully.

Failure to distinguish between these components can hinder an agent’s effectiveness in navigating and completing tasks in complex web environments.
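The split between the two components can be illustrated with a small sketch. The page snapshot, the plan and the `ground` function are all hypothetical, and simple label matching stands in for whatever a real agent uses to locate elements (for example a vision model or DOM analysis).

```python
# Hypothetical snapshot of the interactive elements on a login page.
PAGE_ELEMENTS = [
    {"id": "el-1", "role": "textbox", "label": "Email address"},
    {"id": "el-2", "role": "textbox", "label": "Password"},
    {"id": "el-3", "role": "button", "label": "Sign in"},
    {"id": "el-4", "role": "link", "label": "Forgot password?"},
]

# Planning output: what to do, in order, described in task-level terms.
PLAN = [
    {"action": "type", "role": "textbox", "target": "email", "value": "user@example.com"},
    {"action": "type", "role": "textbox", "target": "password", "value": "secret"},
    {"action": "click", "role": "button", "target": "sign in"},
]


def ground(step, elements):
    """Grounding: map a planned step to the id of a concrete page element."""
    for element in elements:
        if element["role"] == step["role"] and step["target"] in element["label"].lower():
            return element["id"]
    raise LookupError(f"No element found for step: {step}")


grounded = [(step["action"], ground(step, PAGE_ELEMENTS)) for step in PLAN]
print(grounded)
```

A correct plan can still fail if grounding resolves a step to the wrong element, and perfect grounding cannot rescue a wrong plan, which is why it helps to evaluate the two separately.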

Finally

Web AI agents are transforming how we interact with the web by moving beyond static information retrieval to active exploration and task execution.

By combining grounding (accurately identifying web elements) with exploration (autonomously navigating web environments), these agents can complete complex tasks more effectively.

As the technology advances, Web AI Agents are becoming essential tools for automation, improving user experience, and streamlining workflows across various industries.

Want to get a basic POC agent running? Here is a complete working notebook:

### Install Required Packages:

pip install -qU langchain-openai langchain langchain_community langchain_experimental

pip install -U duckduckgo-search

pip install -U langchain langchain-openai

### Import Required Modules and Set Environment Variables:

import os

from uuid import uuid4

### Setup the LangSmith environment variables

unique_id = uuid4().hex[0:8]

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_PROJECT"] = f"OpenAI_SM_{unique_id}"

os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"

os.environ["LANGCHAIN_API_KEY"] = "<LangSmith API Key Goes Here>"

### Import LangChain Components and OpenAI API Key

from langchain.chains import LLMMathChain

from langchain_community.utilities import DuckDuckGoSearchAPIWrapper

from langchain_core.tools import Tool

from langchain_experimental.plan_and_execute import (

PlanAndExecute,

load_agent_executor,

load_chat_planner,

)

from langchain_openai import ChatOpenAI, OpenAI

###

os.environ['OPENAI_API_KEY'] = str("<OpenAI API Key>")

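# gpt-4o-mini is a chat model, so it is loaded with ChatOpenAI for the planner and executor further down. The completion-style OpenAI wrapper used by the math chain is created in the next step.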

### Set Up Search and Math Chain Tools

search = DuckDuckGoSearchAPIWrapper()

llm = OpenAI(temperature=0)

llm_math_chain = LLMMathChain.from_llm(llm=llm, verbose=True)

tools = [

Tool(

name="Search",

func=search.run,

description="useful for when you need to answer questions about current events",

),

Tool(

name="Calculator",

func=llm_math_chain.run,

description="useful for when you need to answer questions about math",

),

]

### Initialize Planner and Executor

model = ChatOpenAI(model_name='gpt-4o-mini', temperature=0)

planner = load_chat_planner(model)

executor = load_agent_executor(model, tools, verbose=True)

agent = PlanAndExecute(planner=planner, executor=executor)

### Invoke the Agent

agent.invoke(

"Who is the founder of SpaceX and what is the square root of his year of birth?"

)

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.