Anthropic ACI (AI Agent Computer Interface)

An AI Agent Computer Interface (ACI) is a tool in an agent’s toolbox that enables the agent to use a web browser as a human would.

This interface often supports seamless, context-aware exchanges, letting AI Agents handle complex tasks through intuitive commands and adaptive responses.

Common problems with a web GUI are the time queries take to execute and errors in interpreting the screen. Human supervision can help considerably in ensuring a smooth GUI agent journey.

Introduction

What is an AI Agent (Agentic) Computer Interface?

An ACI is a piece of software which can receive compound and complex input from a user and answer the question by making use of a computer interface, in much the same way we as humans interact with a computer.

As you will see later in this article, the ACI acts as an agent tool in the context of the Anthropic example.

The interfaces should support natural, intuitive interactions with AI Agents to improve accessibility and usability, allowing users to engage effortlessly.

AI Agents should have context-sensitive capabilities, adapting responses based on past interactions and user needs for continuity and relevance.

Effective interfaces facilitate task automation, enabling agents to assist in complex workflows by taking over repetitive or straightforward actions.

Continuous user feedback integration enhances the agent’s ability to learn, adjust, and optimise performance over time.

A computer interface is one of the tools available to the AI Agent.

Back to Anthropic

A new capability called computer use is now available in public beta, enabling developers to guide Claude in interacting with computers similarly to humans — navigating screens, clicking, and typing.

Claude 3.5 Sonnet is the first frontier AI model to support this functionality in a public beta, allowing for real-time experimentation and user feedback.

Though still in an early stage and occasionally prone to errors, this feature is expected to evolve quickly based on input from developers.

I think it is important to note that many models support vision, and that vision-enabled models from OpenAI and others have been used in frameworks to deliver AI Agents which interface with computers.

The most notable, for me at least, is the LangChain implementation of WebVoyager.

Hence it is important to note that this is a Computer User Interface framework made available by Anthropic. Many model providers have followed this approach of providing frameworks through which value is delivered, which makes their offering more compelling.

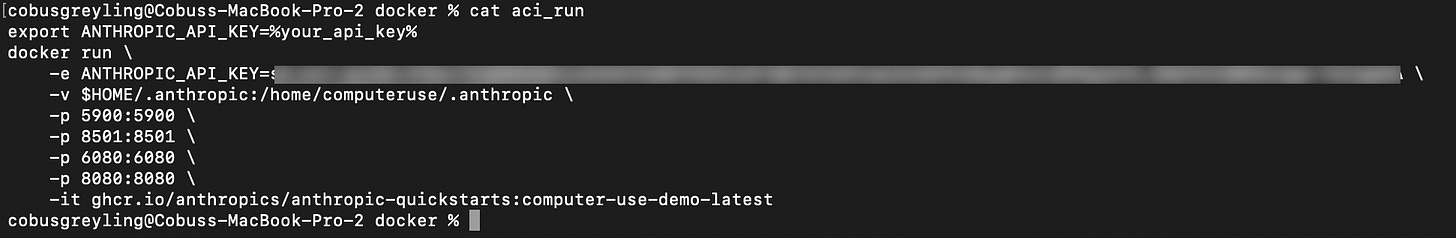

Docker Container

I made use of the docker container locally on my MacBook…

Once the container is running, open your browser to

http://localhost:8080

to access the combined interface that includes both the agent chat and desktop view.

The container stores settings like the API key and custom system prompt in ~/.anthropic/. Mount this directory to persist these settings between container runs.
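As a rough illustration of that mount, the sketch below assembles a docker run command that maps ~/.anthropic into the container and publishes the ports listed in this section. The image name and the in-container path are what I recall from the public quickstart and should be treated as assumptions to verify there; `build_docker_command` is a hypothetical helper, and the command is only built, not executed.

```python
# Assumptions: the image name and the settings path inside the container are
# recalled from the public quickstart; verify both before relying on them.
IMAGE = "ghcr.io/anthropics/anthropic-quickstarts:computer-use-demo-latest"
CONTAINER_SETTINGS_DIR = "/home/computeruse/.anthropic"

# Ports mentioned in this article: VNC, Streamlit, desktop view, combined UI.
PORTS = (5900, 8501, 6080, 8080)


def build_docker_command(api_key: str, home_dir: str) -> list:
    """Return the argv for `docker run` with settings persisted on the host."""
    cmd = [
        "docker", "run",
        "-e", f"ANTHROPIC_API_KEY={api_key}",
        # Mount the host settings directory so the API key and custom
        # system prompt survive between container runs.
        "-v", f"{home_dir}/.anthropic:{CONTAINER_SETTINGS_DIR}",
    ]
    for port in PORTS:
        cmd += ["-p", f"{port}:{port}"]
    cmd += ["-it", IMAGE]
    return cmd


command = build_docker_command("your-api-key", "/Users/me")
print(" ".join(command))
```

The resulting list could be handed to `subprocess.run(command)` on a machine with Docker installed.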

Alternative access points:

Streamlit interface only: http://localhost:8501

Desktop view only: http://localhost:6080/vnc.html

Direct VNC connection: vnc://localhost:5900 (for VNC clients)

Below is the script I made use of to initiate the docker container…

Find the GitHub quick start here.

The upgraded Claude 3.5 Sonnet model is capable of interacting with tools that can manipulate a computer desktop environment.
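To make this a little more concrete, the sketch below builds the request parameters that would declare a computer tool to the model. The tool type `computer_20241022`, the beta flag `computer-use-2024-10-22` and the model ID `claude-3-5-sonnet-20241022` are what I recall from Anthropic's documentation for this beta, so treat them as assumptions and check the current docs. The screen size is an arbitrary example and `build_request` is a hypothetical helper; no API call is made.

```python
def build_request(prompt: str, width: int = 1024, height: int = 768) -> dict:
    """Assemble keyword arguments for a computer-use request (no network call)."""
    computer_tool = {
        "type": "computer_20241022",   # assumed tool type for the beta
        "name": "computer",
        "display_width_px": width,     # example screen size, not prescribed
        "display_height_px": height,
    }
    return {
        "model": "claude-3-5-sonnet-20241022",  # assumed model ID
        "max_tokens": 1024,
        "tools": [computer_tool],
        "betas": ["computer-use-2024-10-22"],   # assumed beta flag
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_request("What is the best route to travel from Cape Town to Pretoria?")
print(request["tools"][0]["name"])
```

With the official Python SDK, arguments of this shape would typically be passed to `client.beta.messages.create(**request)`; that call is left out here because it needs an API key.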

Demonstration Application

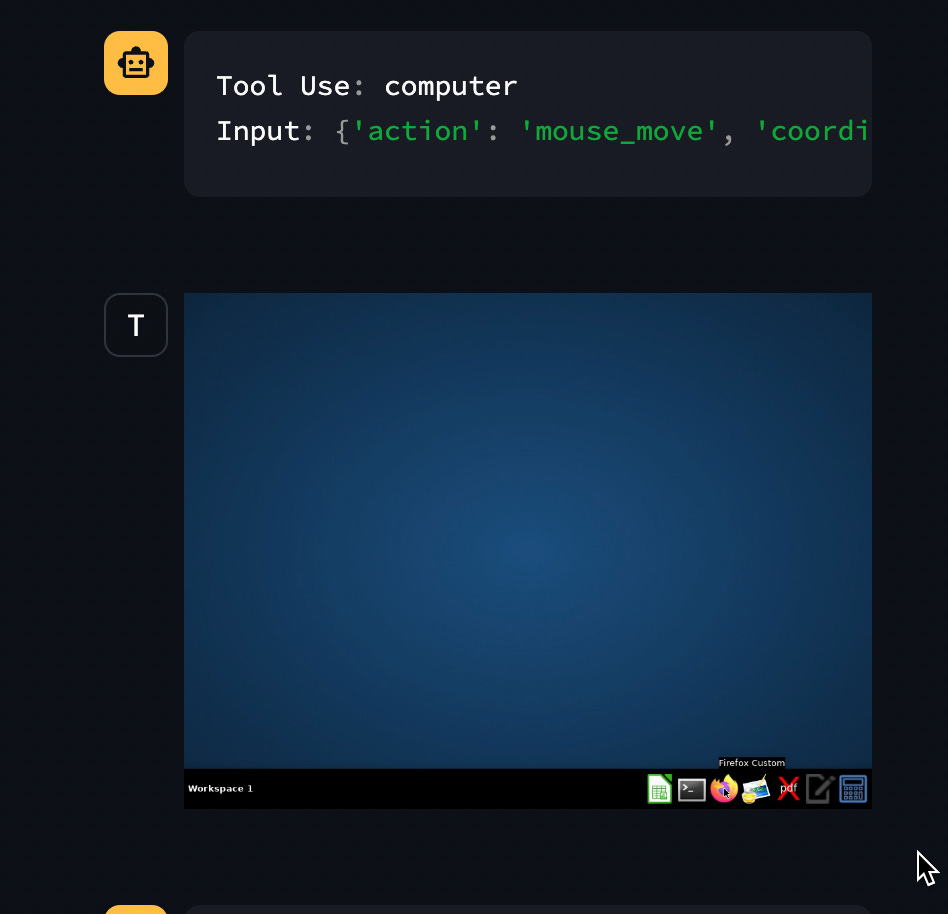

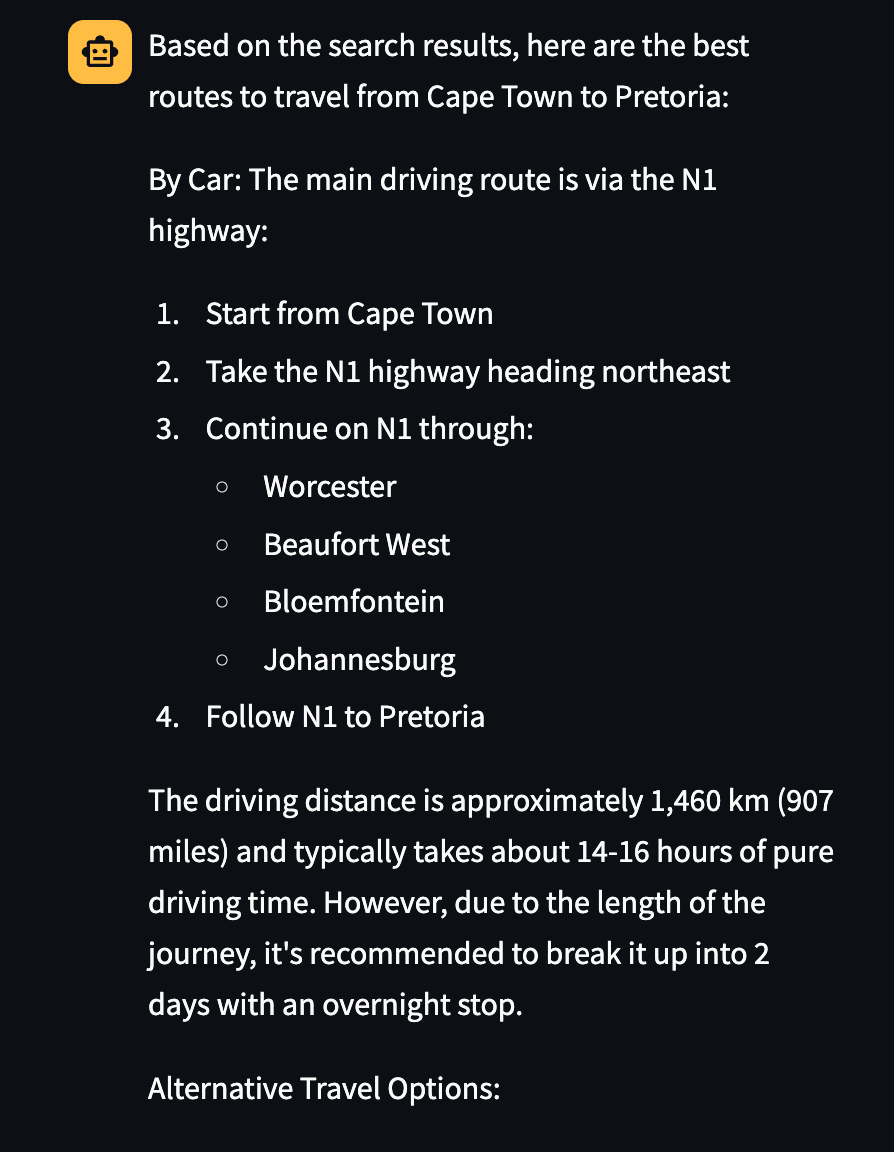

Below is a sequence from the example app. I asked the ACI the following question:

What is the best route to travel from Cape Town to Pretoria?

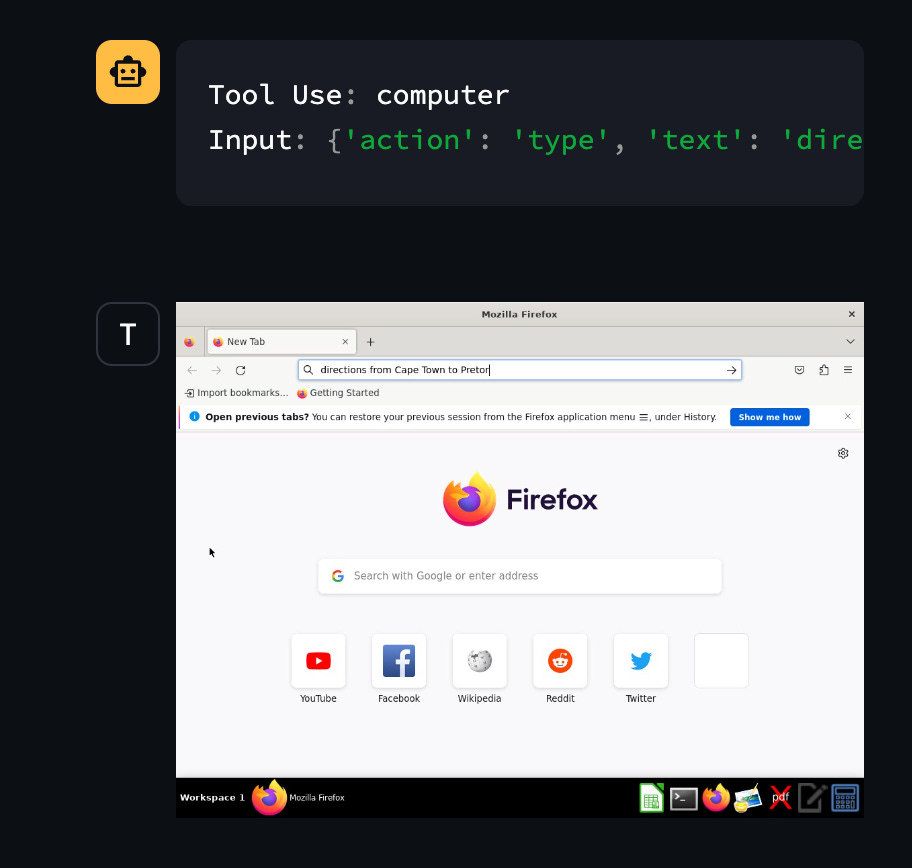

The framework goes through the sequence shown below. You will notice that the tool used is computer, and that different actions are available to the tool, including mouse_move, left_click, type (to enter text), key (to press a key such as Return) and more.

Tool Use: computer

Input: {'action': 'mouse_move', 'coordinate': [512, 100]}

Tool Use: computer

Input: {'action': 'left_click'}

Tool Use: computer

Input: {'action': 'type', 'text': 'route from Cape Town to Pretoria'}

Tool Use: computer

Input: {'action': 'key', 'text': 'Return'}

Tool Use: computer

Input: {'action': 'mouse_move', 'coordinate': [804, 738]}
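One way to read the log above is as a stream of small instructions applied to a desktop. The sketch below replays that same sequence against a simulated desktop that only records what would happen. `FakeDesktop` and `apply_action` are hypothetical names used for illustration; a real executor, such as the one in the demo container, would drive an actual mouse and keyboard instead.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDesktop:
    """Stand-in for a real screen: tracks the cursor and logs events."""
    cursor: tuple = (0, 0)
    events: list = field(default_factory=list)


def apply_action(desktop: FakeDesktop, tool_input: dict) -> None:
    """Apply one `computer` tool input to the simulated desktop."""
    action = tool_input["action"]
    if action == "mouse_move":
        desktop.cursor = tuple(tool_input["coordinate"])
        desktop.events.append(f"move to {desktop.cursor}")
    elif action == "left_click":
        # A click carries no coordinate; it happens at the current cursor.
        desktop.events.append(f"click at {desktop.cursor}")
    elif action == "type":
        desktop.events.append(f"type {tool_input['text']!r}")
    elif action == "key":
        desktop.events.append(f"press {tool_input['text']}")
    else:
        raise ValueError(f"unsupported action: {action}")


# The sequence from the log above.
sequence = [
    {"action": "mouse_move", "coordinate": [512, 100]},
    {"action": "left_click"},
    {"action": "type", "text": "route from Cape Town to Pretoria"},
    {"action": "key", "text": "Return"},
    {"action": "mouse_move", "coordinate": [804, 738]},
]

desktop = FakeDesktop()
for step in sequence:
    apply_action(desktop, step)

for event in desktop.events:
    print(event)
```

Note that the click step depends on the preceding mouse_move, which is why the order of the actions matters.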

Lastly…

Here’s a conversational breakdown of how computer use works with Claude:

Start with the Tools and a Prompt: First, give Claude the computer tools it might need, along with a user request such as “Save a picture of a cat to my desktop.”

Tool Selection: Claude reviews the tools available to see if one matches the request. If yes, Claude signals it by creating a tool request.

Processing the Tool: You extract the tool input from Claude’s request, run it in a virtual environment, and then return the results to Claude.

Loop Until Done: Claude checks the results and decides if more tools are needed. If so, it loops back to processing until the task is complete.

This back-and-forth is called the “agent loop,” where Claude keeps calling tools and you keep supplying results until the user’s task is fully handled.
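The four steps above can be sketched as a small loop. This is a minimal illustration rather than Anthropic's implementation: the message and block shapes (`tool_use`, `tool_result`, `tool_use_id`) follow the tool-use format as I understand it, while `run_agent_loop`, `scripted_model` and `fake_executor` are hypothetical stand-ins so the loop can run without an API key or a real desktop.

```python
def run_agent_loop(call_model, execute_tool, user_request, max_turns=10):
    """Call the model, run any requested tools, feed results back until done."""
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_turns):
        response = call_model(messages)
        messages.append({"role": "assistant", "content": response["content"]})

        # Tool selection: did the model ask for any tool this turn?
        tool_calls = [b for b in response["content"] if b["type"] == "tool_use"]
        if not tool_calls:
            return messages  # No tool requested: the task is considered handled.

        # Processing the tool: run each request and return its result.
        results = [
            {
                "type": "tool_result",
                "tool_use_id": call["id"],
                "content": execute_tool(call["name"], call["input"]),
            }
            for call in tool_calls
        ]
        messages.append({"role": "user", "content": results})
    raise RuntimeError("agent loop did not finish within max_turns")


def scripted_model(messages):
    """Hypothetical model: asks for one click, then declares the task done."""
    turn = sum(1 for m in messages if m["role"] == "assistant")
    if turn == 0:
        return {"content": [{"type": "tool_use", "id": "call_1",
                             "name": "computer",
                             "input": {"action": "left_click"}}]}
    return {"content": [{"type": "text", "text": "Done."}]}


def fake_executor(name, tool_input):
    """Hypothetical executor: reports the action instead of performing it."""
    return f"{name} performed {tool_input['action']}"


history = run_agent_loop(scripted_model, fake_executor,
                         "Save a picture of a cat to my desktop.")
for message in history:
    print(message["role"])
```

The `max_turns` cap is a design choice of this sketch: it stops a misbehaving model from looping forever, which matters when each turn costs time and tokens.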

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.