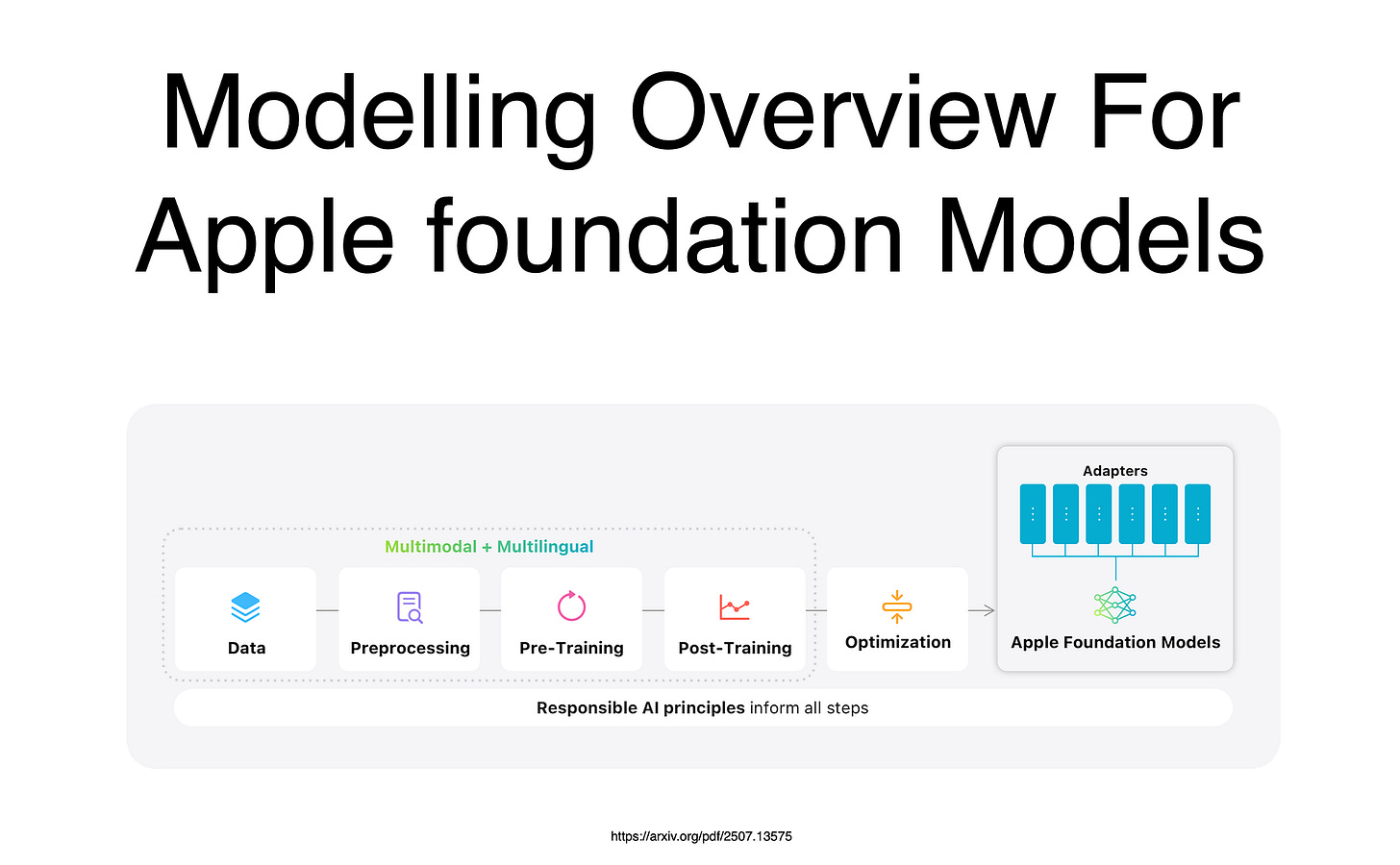

Apple Foundation Models — Data Sourcing & Human Oversight In Building Models

In this article I want to focus on two elements from the recent research from Apple in creating their two foundation models, data and human oversight…

Obviously these models will not be open-sourced and the details on large server-based model is scant.

The technical report does not explicitly state the exact number of parameters for the server model, describing it only as a “large-scale server model”.

In contrast, it specifies the on-device model as having approximately 3 billion parameters (~3B).

We are in the era of on-device intelligence…check out this benchmark comparison for foundation language models.

Apple’s ~3B parameter on-device model (AFM) is fairly on par with heavy hitters like Qwen and Google’s Gemma series.

MMLU (blue line)

General knowledge benchmark — Apple edges out similar-sized models at 67.85%, showing strong multitask understanding.

MMMLU (pink line)

Multilingual version — AFM leads the pack for ~3B models at 60.60%, highlighting Apple’s push for global accessibility (now supporting 16 languages).

MGSM (green line): Math reasoning — Solid 74.91% for Apple, outperforming most peers except the larger Qwen-3–4B.

What’s impressive?

This compact model is optimised for Apple Silicon, delivering efficiency without sacrificing quality.

It’s a testament to responsible AI…

Privacy-first data sourcing (no user data)

Ethical web crawling, and

Human-supervised fine-tuning.

Apple’s focus on edge computing could redefine AI deployment.

The data that fuels models & the human supervision that refines them…

Apple’s recent technical report on their Intelligence Foundation Language Models offers a compelling case study.

The models, which underpin features across Apple devices, emphasise privacy, responsibility and efficiency.

The Data Foundation

Data is the lifeblood of any large language model (LLM), but Apple’s approach diverges from the pack by prioritising responsible sourcing over sheer volume.

Unlike some competitors who have faced scrutiny for scraping vast, unfiltered internet datasets — sometimes including copyrighted or personal content — Apple builds its models on a curated blend of

licensed,

public, and

synthetic data.

This strategy not only enhances model quality because it is a more curated and granular approach but also according to Apple aligns with their core value of user privacy.

Applebot

At the heart of Apple’s data pipeline is Applebot, their proprietary web crawler.

It scours hundreds of billions of web pages, focusing on high-quality, diverse content across languages, locales, and topics.

What sets it apart?

Strict adherence to ethical practices.

Applebot respects robots.txt protocols, allowing publishers to opt out of their content being used for training generative models.

This gives web owners fine-grained control — pages can still appear in Siri or Spotlight searches but are excluded from AI training if desired.

To combat the web’s inherent noise, Apple employs advanced techniques like headless rendering for dynamic sites, ensuring accurate extraction of text, metadata & even JavaScript-heavy content.

They also integrate LLMs into the extraction process for domain-specific documents, outperforming traditional rules-based methods.

Filtering is refined too…

Moving away from aggressive heuristics that might discard valuable data, they use model-based signals tuned per language to retain informative tokens while removing profanity, unsafe material & personally identifiable information (PII).

Privacy…

The report explicitly states that Apple does not use users’ private data or interactions for training — a stark contrast to models trained on user-generated content from platforms like search engines or social media.

Instead, they license data from publishers, curate open-source datasets, and generate high-quality synthetic data.

For multimodal capabilities (handling text and images), this includes over 10 billion filtered image-text pairs from web crawls, 175 million interleaved documents with 550 million images & 7 billion synthetic captions created via in-house models.

This data expansion supports expanded features:

Multilingual capabilities (now 16 languages),

Visual understanding,

Long-context reasoning.

By increasing weights for code, math and multilingual data in pre-training mixtures — from 8% to 30% for multilingual — they balance performance without overfitting low-resource languages.

The result?

Models that are efficient (3-billion-parameter on-device version) and competitive, surpassing baselines like Gemma-2–2B on benchmarks while maintaining ethical integrity.

Apple’s data path is deliberate…

it’s about building trust through transparency and responsibility, avoiding the pitfalls of “data hoarding” that have plagued others.

Human Supervision…

Guiding Models from Fine-Tuning to Reinforcement…

Once pre-trained, models undergo post-training to align with user needs — this is where human supervision comes in.

Apple’s process evolves Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) to support multimodal and multilingual expansions, blending human input with automation for scalability.

In SFT, human-written demonstrations form the backbone, combined with synthetic data for efficiency.

Humans craft high-quality examples across domains: general knowledge (Q&A on text/images), reasoning (math/code with Chain-of-Thought augmentations), and vision tasks ( OCR for 15 languages, grounding for summarisation).

Native speakers contribute prompts to ensure naturalness, avoiding unnatural translations.

AI Agent Use

For tool-use, a custom annotation platform enables “process-supervision”: annotators interact with an AI Agent, correcting trajectories in real-time to create tree-structured datasets of valid multi-turn interactions.

The human involvement extends to filtering…

Model-based techniques detect response quality, and adversarial data (prompts asking for non-existent info paired with refusals) mitigates hallucinations.

Ablations optimise data ratios, ensuring the model remains helpful, honest and responsible.

RLHF takes supervision further, using human preferences to train a reward model that guides iterative improvements.

Humans grade large volumes of text and image responses for preferences like helpfulness and instruction-following, with consensus rates around 70–80% (disagreements often on subjective prompts).

This data trains the reward model, which — alongside rule-based signals (math verification) — updates the entire model, including the vision encoder.

The infrastructure is asynchronous and distributed, making it efficient (37.5% fewer devices, 75% less compute).

Rewards focus on general preferences for text/multilingual/image prompts and accuracy for STEM reasoning.

Post-deployment, user feedback (thumbs up/down on outputs) and red teaming identify risks, feeding into updates.

Finally

Apple’s integration of data ethics and human supervision isn’t just technical — it’s philosophical.

By shunning user data and enabling opt-outs, they address privacy concerns head-on.

Human involvement ensures models are refined for real-world utility, reducing biases and errors.

This approach powers efficient models that rival larger ones, all while upholding Responsible AI principles.

In a field rife with data scandals and opaque training, Apple’s transparency could inspire broader industry shifts.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.