Are Emergent Abilities In LLMs Inherent Or Merely In-Context Learning?

Emergent Abilities are cases in which LLMs demonstrate exceptional performance across diverse tasks for which they were not explicitly trained, including tasks that require complex reasoning.

The notion of Emergent Abilities also creates immense market hype, with new prompting techniques and presumed hidden latent abilities of LLMs being discovered and published.

However, this study shows that as models scale and become more capable, prompt engineering is being used to develop new approaches that leverage in-context learning, as the recent wave of new prompting techniques illustrates.

Introduction

A new study argues that Emergent Abilities are not hidden or unpublished model capabilities just waiting to be discovered, but rather the product of new approaches to in-context learning that are being developed.

The study can be considered a meta-study, acting as an aggregator of numerous other papers.

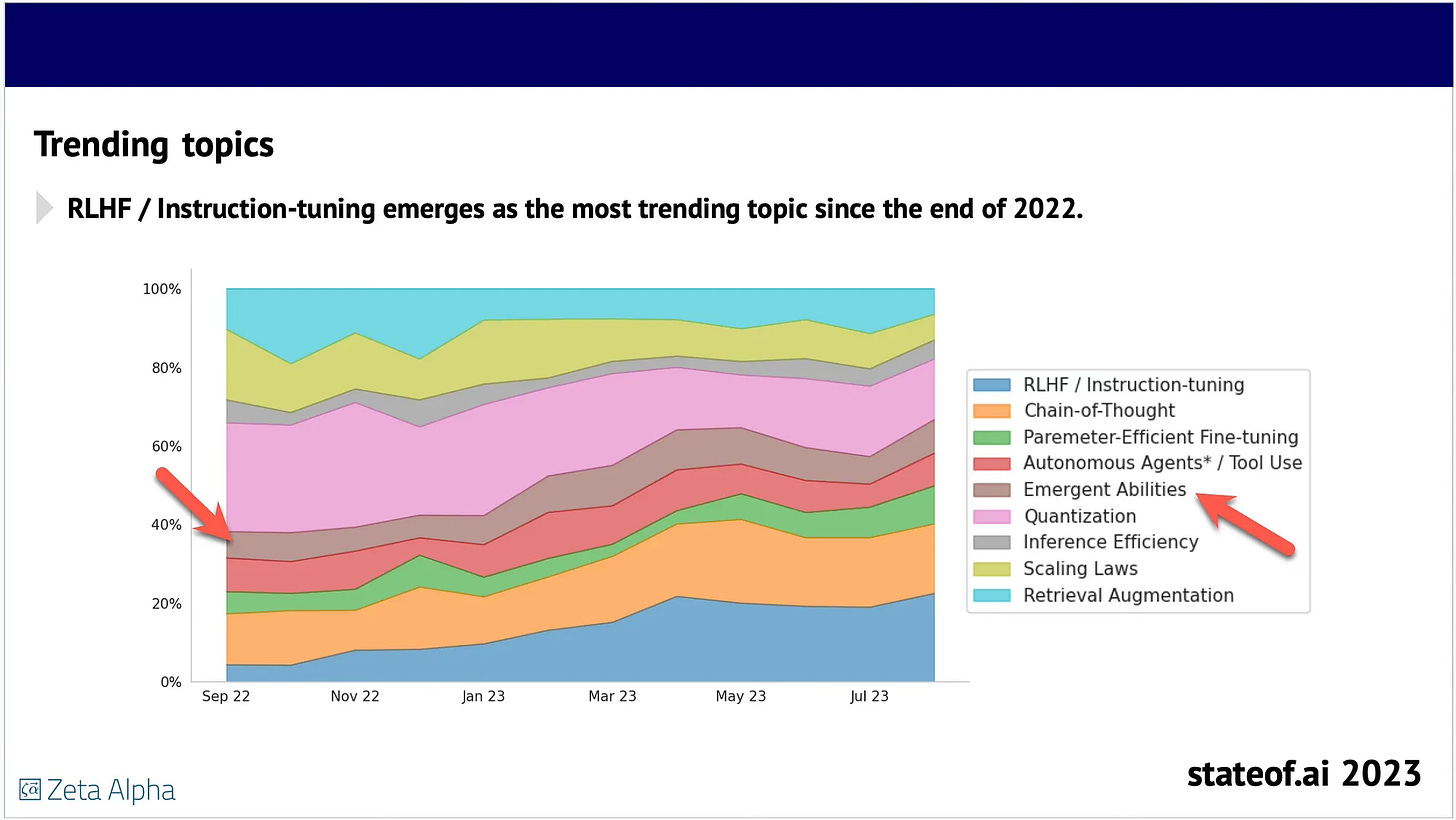

As seen in the graph above, the concept of Emergent Abilities is one of the trending topics of last year and still receives much attention.

The hype around Emergent Abilities is understandable. It also elevated the notion that LLMs are virtually unlimited, and that potentially game-changing, unknown and latent capabilities are just waiting to be discovered.

Abilities versus Techniques

In-context learning is the technique in which LLMs are provided with a limited number of examples from which they learn how to perform a task.
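
To make this concrete, here is a minimal sketch of how a few-shot prompt might be assembled. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout and the sentiment examples are all assumptions made for illustration; they are not taken from the study, and real prompts vary widely in format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    examples: List[Tuple[str, str]],
    query: str,
    instruction: str = "",
) -> str:
    """Join an optional instruction, worked examples and an unanswered query."""
    parts = []
    if instruction:
        parts.append(instruction)
    # Each demonstration shows the model an input together with its answer.
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final item is left open so the model completes the answer.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


# Hypothetical sentiment examples, invented for this sketch.
examples = [
    ("The film was wonderful.", "positive"),
    ("I want my money back.", "negative"),
]
prompt = build_few_shot_prompt(
    examples,
    "The plot dragged on forever.",
    instruction="Classify the sentiment of each input.",
)
print(prompt)
```

Passing an empty list of examples gives a zero-shot prompt, which is the setting that matters when asking whether a model can perform a task without in-context learning.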

Recent investigations into the theoretical underpinnings of in-context learning and its specific manifestation in LLMs indicate that it might bear resemblance to the process of fine-tuning.

In most cases, models are not explicitly trained for specific tasks. If in-context learning resembles fine-tuning, however, success on a task given in-context examples would instead suggest that the model has the expressive power required to undergo training for that task at inference time.

The claim that a model inherently possesses the ability needed for a task can only be made if the LLM performs well on that task without having been trained on it.

Otherwise, the ability must be learned, i.e., through explicit training or in-context learning, in which case it is no longer an ability of the model per se, and is no longer unpredictable. In other words, the ability is not emergent.

These recent insights suggest parallels between in-context learning and explicit training. This implies that success on a task through in-context learning, much like success by models trained explicitly for that task, does not in itself show that a model possesses that ability.

The study's findings indicate that there are no emergent functional linguistic abilities in the absence of in-context learning, which the authors take as affirming the safety of utilising LLMs and negating any potentially hazardous latent capabilities.

LLM Performance & Scale

These new LLM competencies are surfaced by novel prompting techniques, which invariably include some kind of in-context learning and instruction following.

This implies that the performance of LLMs does improve progressively with scale, but, when using discrete evaluation metrics, such improvements are only detectable when they tip over a threshold, thus giving the illusion of ‘emergence’.
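
The threshold effect can be illustrated with a toy calculation. The sketch below assumes, purely for illustration, that a model gets each token of a 10-token answer right independently with the same probability. Under that simplifying assumption the chance of an exact match is the per-token accuracy raised to the power of the answer length. The function name `exact_match_rate` and all numbers are hypothetical and do not come from the study.

```python
def exact_match_rate(per_token_accuracy: float, answer_length: int) -> float:
    """Chance that every token is correct, assuming independent token errors."""
    return per_token_accuracy ** answer_length


# Hypothetical answer length and per-token accuracies standing in for
# models of increasing scale.
ANSWER_LENGTH = 10
per_token_accuracies = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

for p in per_token_accuracies:
    em = exact_match_rate(p, ANSWER_LENGTH)
    print(f"per-token accuracy {p:.2f} -> exact match {em:.3f}")
```

The per-token accuracy climbs steadily from one row to the next, while the all-or-nothing exact match score stays close to zero for the first few rows and then rises steeply. Viewed only through the discrete metric, a gradual improvement can look like a sudden jump.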

In Closing

The study conducted tests on 18 models across a comprehensive set of 22 tasks.

After considering a series of more than 1,000 experiments, the study provides compelling evidence that emergent abilities can primarily be ascribed to in-context learning.

The study finds no evidence for the emergence of reasoning abilities, and provides valuable insights into the underlying mechanisms driving the observed abilities.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.