As-Needed Decomposition & Planning Using Large Language Models — (ADaPT)

LLM implementations have shifted towards decomposing tasks and solving each step in an interactive fashion. But with this increased complexity is also introduced…

Introduction

Recent research shows that the tasks assigned to LLMs are becoming increasingly complex. Such tasks cannot be solved by prompting alone; they call for an autonomous agent approach.

LLMs are being used as agents in two implementation types:

Creating a sequence of sub-steps followed by iterative executions

Generating a plan and then executing or solving the sub-tasks (plan-and-execute).
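To make the first implementation type more concrete, here is a minimal Python sketch of an iterative execution loop. The names `iterative_execute`, `toy_policy` and `toy_env` are hypothetical, and the scripted policy merely stands in for what would be an LLM call in a real agent.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (action, observation) pairs


def iterative_execute(
    task: str,
    policy: Callable[[str, History], str],
    env: Callable[[str], str],
    max_steps: int = 10,
) -> Tuple[bool, History]:
    """Pick one action at a time, feeding observations back to the policy."""
    history: History = []
    for _ in range(max_steps):
        action = policy(task, history)  # an LLM call in a real agent
        if action == "done":
            return True, history
        history.append((action, env(action)))
    return False, history  # step budget exhausted before the policy finished


# Assumption: a scripted policy and a trivial environment, for illustration only.
SCRIPT = ["go to fridge", "take mug"]


def toy_policy(task: str, history: History) -> str:
    return SCRIPT[len(history)] if len(history) < len(SCRIPT) else "done"


def toy_env(action: str) -> str:
    return f"ok: {action}"


success, trajectory = iterative_execute("fetch the mug", toy_policy, toy_env)
print(success, trajectory)
```

The step limit matters: without it, a policy that never emits "done" would loop forever, which is one reason long action trajectories are a problem for this style of agent.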

With conventional chain-of-thought (CoT) prompting, the model does not know which faults to avoid or which deductions could lead to more mistakes and error propagation.

CCoT provides both the correct and incorrect reasoning steps in the demonstration examples.

Decomposition & Sub-Tasks

ADaPT is an approach that explicitly plans and decomposes complex sub-tasks as needed, that is, when the LLM is unable to execute the task.

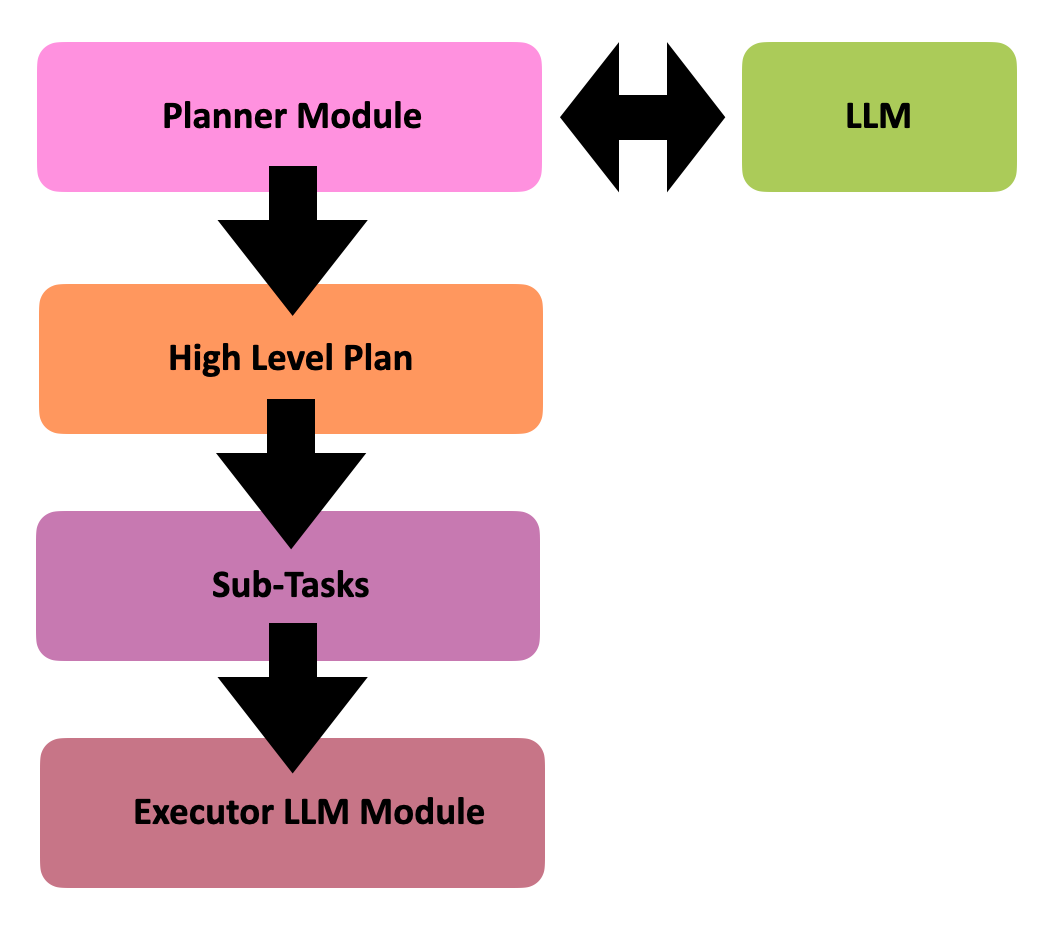

Considering the image below, a recognised approach, and one that ADaPT also incorporates, is to use a separate planner module that leverages an LLM to create a high-level plan.

The planner in turn then delegates the simpler sub-tasks to an executor LLM module.

This reduces the compositional complexity and length of action trajectory of the query.

However, the ADaPT study considers this approach non-adaptive and not resilient enough when faced with unachievable sub-tasks. The approach also cannot adapt to increased task complexity or manage execution failures. Failure in one sub-task will lead to overall task failure.
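The brittleness described above can be illustrated with a minimal Python sketch of a plan-and-execute loop. Everything here is an assumption for illustration: `plan_and_execute`, `toy_planner` and `toy_executor` are hypothetical names, and the toy functions stand in for LLM calls.

```python
from typing import Callable, List

Planner = Callable[[str], List[str]]  # task -> list of sub-tasks
Executor = Callable[[str], bool]      # sub-task -> success flag


def plan_and_execute(task: str, planner: Planner, executor: Executor) -> bool:
    """Plan once up front, then run each sub-task with no recovery on failure."""
    for sub_task in planner(task):
        if not executor(sub_task):
            return False  # one failed sub-task fails the whole task
    return True


# Assumption: the planner splits on " and "; a real planner would be an LLM.
def toy_planner(task: str) -> List[str]:
    return [part.strip() for part in task.split(" and ")]


# Assumption: the executor only copes with instructions of three words or fewer.
def toy_executor(sub_task: str) -> bool:
    return len(sub_task.split()) <= 3


print(plan_and_execute("find mug and heat mug", toy_planner, toy_executor))
print(plan_and_execute(
    "find mug and clean it then put it in the cabinet", toy_planner, toy_executor
))
```

In the second call the plan is fixed before execution starts, so the long second sub-task fails and nothing in the loop can break it down further.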

More About ADaPT

ADaPT is a recursive algorithm which decomposes a task into sub-tasks, but only when necessary. This adds a dynamic component when addressing task complexity.
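The recursive, as-needed idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the implementation from the ADaPT study: the `adapt` function, the `max_depth` cut-off, the rule that all sub-tasks must succeed, and the toy executor and planner are all hypothetical stand-ins for LLM calls.

```python
from typing import Callable, List

Executor = Callable[[str], bool]      # tries a task, reports success
Planner = Callable[[str], List[str]]  # splits a task into sub-tasks


def adapt(
    task: str,
    executor: Executor,
    planner: Planner,
    depth: int = 0,
    max_depth: int = 3,  # assumption: a recursion limit to guarantee termination
) -> bool:
    """Try the executor first; decompose and recurse only if it fails."""
    if executor(task):
        return True   # no planning needed for this task
    if depth >= max_depth:
        return False  # give up rather than decompose forever
    sub_tasks = planner(task)
    # Assumption: every sub-task has to succeed for the parent task to succeed.
    return all(
        adapt(sub, executor, planner, depth + 1, max_depth) for sub in sub_tasks
    )


# Assumption: the executor only handles tasks of three words or fewer.
def toy_executor(task: str) -> bool:
    return len(task.split()) <= 3


# Assumption: the planner simply halves the task; a real planner would be an LLM.
def toy_planner(task: str) -> List[str]:
    words = task.split()
    mid = len(words) // 2
    return [" ".join(words[:mid]), " ".join(words[mid:])]


print(adapt("open fridge", toy_executor, toy_planner))
print(adapt("go to fridge take mug heat mug put mug", toy_executor, toy_planner))
```

The simple task never reaches the planner, while the long one is split twice before the executor can handle each piece. Decomposition depth therefore varies with how hard each part of the task turns out to be.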

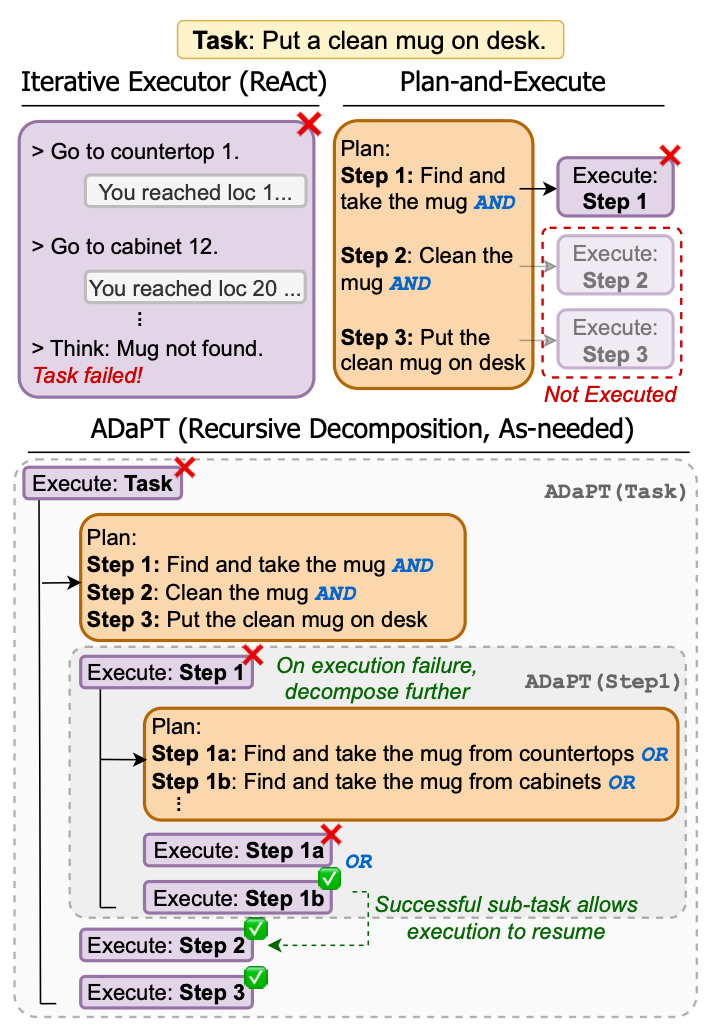

The image below shows the ReAct approach alongside the plan-and-execute approach.

ADaPT, with its recursive algorithm, dynamically decomposes complex sub-tasks on an as-needed basis, intervening only if the task is too complex for the executor.

It is evident that ADaPT is a more complex implementation and not merely a prompting strategy. ADaPT is well suited to an LLM-based agent implementation.

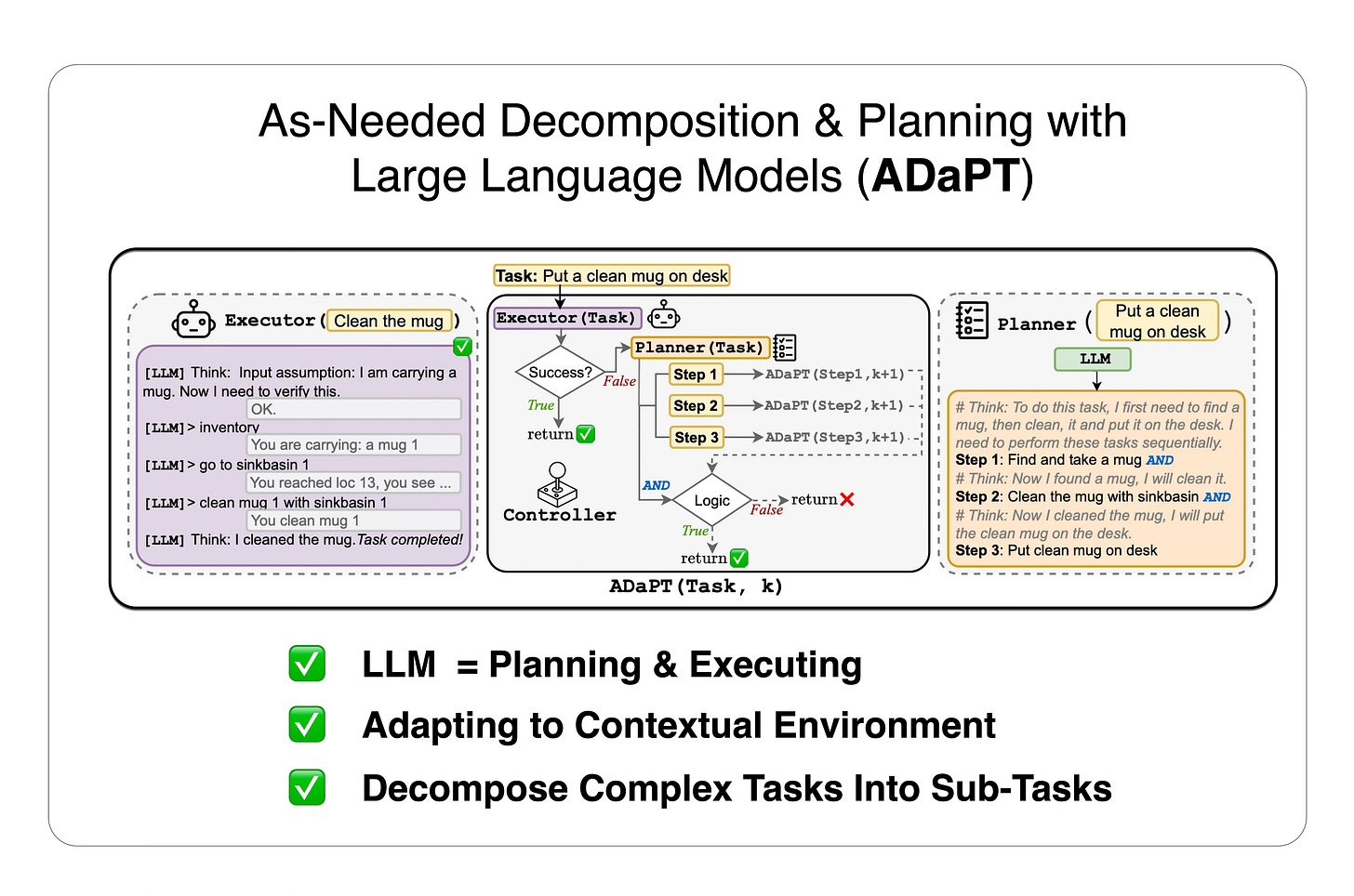

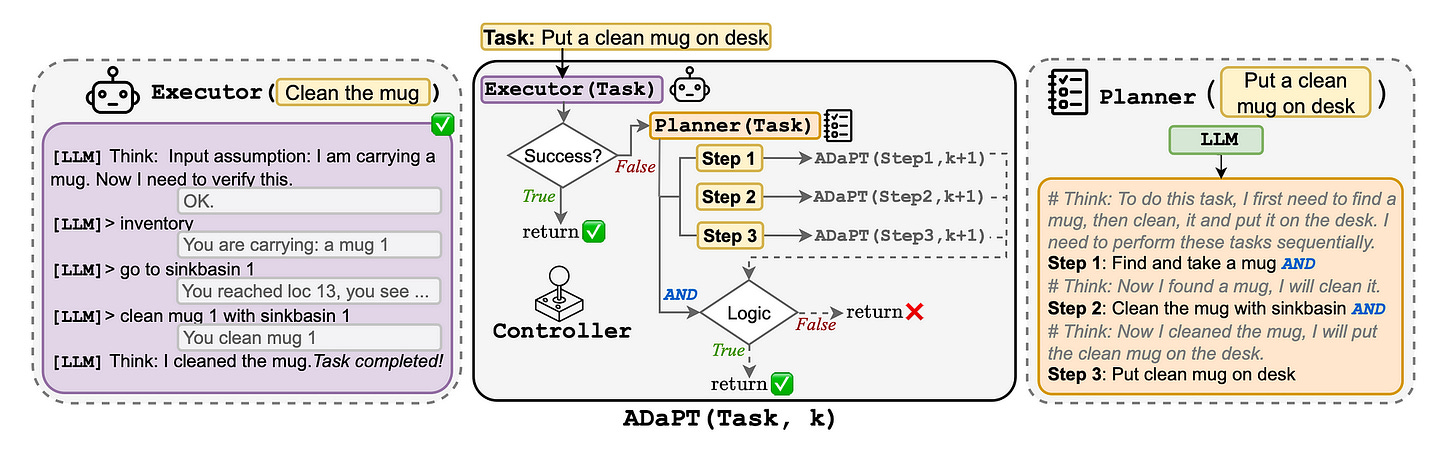

The image below shows the ADaPT pipeline, which consists of three components.

LLM Executor: The LLM is used to execute iterative interactions. An example execution trajectory is given.

Controller (LLM Program): This program, or module, embeds the executor and planner.

LLM Planner: The LLM is used to generate sub-tasks.

Considerations

This approach adds resilience, exception handling and a high level of logic to LLM-based applications.

ADaPT cannot be described as a prompt engineering technique, but rather as an LLM-based agent implementation.

A large number of LLM calls will be made; hence this approach calls for a tool like LangSmith to provide inspectability and observability.

Cost is also a consideration with autonomous agents, given the large number of LLM calls. Prompt chaining might serve as a good initial, more measured, hand-crafted approach.
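One simple way to keep the number of LLM calls in check is to wrap the model in a counter with a hard budget. The sketch below is only an illustration: `CountingLLM`, `CallBudgetExceeded` and `fake_llm` are hypothetical names, the wrapper is plain Python rather than any LangSmith API, and `fake_llm` stands in for a real model call.

```python
from typing import Callable


class CallBudgetExceeded(RuntimeError):
    """Raised when the agent tries to exceed its LLM-call budget."""


class CountingLLM:
    """Wraps any prompt -> completion callable and counts how often it is used."""

    def __init__(self, llm: Callable[[str], str], max_calls: int) -> None:
        self.llm = llm
        self.max_calls = max_calls
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        if self.calls >= self.max_calls:
            raise CallBudgetExceeded(f"budget of {self.max_calls} calls used up")
        self.calls += 1
        return self.llm(prompt)


# Assumption: a fake model that just upper-cases the prompt.
def fake_llm(prompt: str) -> str:
    return prompt.upper()


llm = CountingLLM(fake_llm, max_calls=2)
llm("plan the task")
llm("execute step one")
print(llm.calls)
```

Because planner and executor can share one wrapped callable, the same counter covers every call the agent makes, and a third call in this example would raise `CallBudgetExceeded` instead of silently adding cost.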

Recent research suggests that we have moved past the point of new prompt engineering techniques that leverage in-context learning (ICL), which was previously thought of as an emergent ability.

ADaPT moves into the realm of new agent types, by defining new algorithms.

ADaPT is also a good example of possible future multi-LLM orchestration, using different LLMs in the capacity of LLM Executor, LLM Program (Controller) and LLM Planner.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.