Building Conversational AI Agents By Integrating Reasoning, Speaking & Acting With LLMs

AI Agents meet Conversational UI for intuitive & natural conversations.

Introduction

There has been much focus on using AI Agents to automate complex tasks and to perform these tasks in a truly autonomous fashion.

But what if we employed AI Agents to hold an engaging conversation with a customer?

A conversation in which the chatbot can think, speak, act, reason and come to conclusions: everything we have come to expect from AI Agents.

The idea is not to bypass the conversation the customer wants to have, but to enhance it.

Current agent frameworks often lack the flexibility to collaborate effectively with users, for example by asking clarifying questions or confirming task specifics to align on goals.

Instead, in ambiguous situations, these agents may make assumptions that can lead to suboptimal decisions, rather than interactively refining their approach based on user guidance.

ReSpAct Framework

The ReSpAct framework enables agents to:

interpret user instructions,

reason about complex tasks,

execute appropriate actions,

engage in dynamic dialogue to seek guidance, clarify ambiguities, understand user preferences and resolve problems,

and use the intermediate feedback and responses of users to update their plans.
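To make the reason, speak and act cycle concrete, here is a minimal Python sketch. It assumes the agent's policy is any function that reads the history so far and returns the next step. It is not the implementation from the ReSpAct work: `Step`, `run_respact`, `toy_policy`, `ask_user` and `act` are hypothetical names, the `search[...]` action string is illustrative, and the rule-based policy stands in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    kind: str              # "think", "speak", "act" or "finish"
    content: str           # thought, utterance to the user, action, or final answer
    observation: str = ""  # user reply (speak) or environment output (act)


def run_respact(
    policy: Callable[[list[Step]], Step],
    ask_user: Callable[[str], str],
    act: Callable[[str], str],
    max_steps: int = 10,
) -> list[Step]:
    """Interleave reasoning, speaking and acting until the policy finishes."""
    history: list[Step] = []
    for _ in range(max_steps):
        step = policy(history)
        if step.kind == "speak":
            # The user's reply is fed back so later steps can update the plan.
            step.observation = ask_user(step.content)
        elif step.kind == "act":
            step.observation = act(step.content)
        history.append(step)
        if step.kind == "finish":
            break
    return history


def toy_policy(history: list[Step]) -> Step:
    """Hypothetical rule-based stand-in for an LLM prompted with the history."""
    replies = [s.observation for s in history if s.kind == "speak"]
    results = [s.observation for s in history if s.kind == "act"]
    if not history:
        return Step("think", "The request 'find me a lamp' does not say which colour.")
    if not replies:
        return Step("speak", "Which colour of lamp would you prefer?")
    if not results:
        return Step("act", f"search[{replies[-1]} lamp]")
    return Step("finish", results[-1])


trace = run_respact(
    toy_policy,
    ask_user=lambda question: "red",                # simulated user
    act=lambda command: f"1 result for {command}",  # simulated environment
)
for step in trace:
    print(f"{step.kind:>6} | {step.content} | {step.observation}")
```

The design choice worth noting is that speaking is treated like acting. Both produce an observation that enters the history, so a user's answer can change the next action in the same way a tool result can.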

Most conversations are task-oriented, and AI Agents should perform tasks to resolve the user’s intent.

Consider task-oriented AI Agents that use the human as a resource: asking questions, getting feedback, and adapting their dialog and strategy based on user input.

Dialog Types

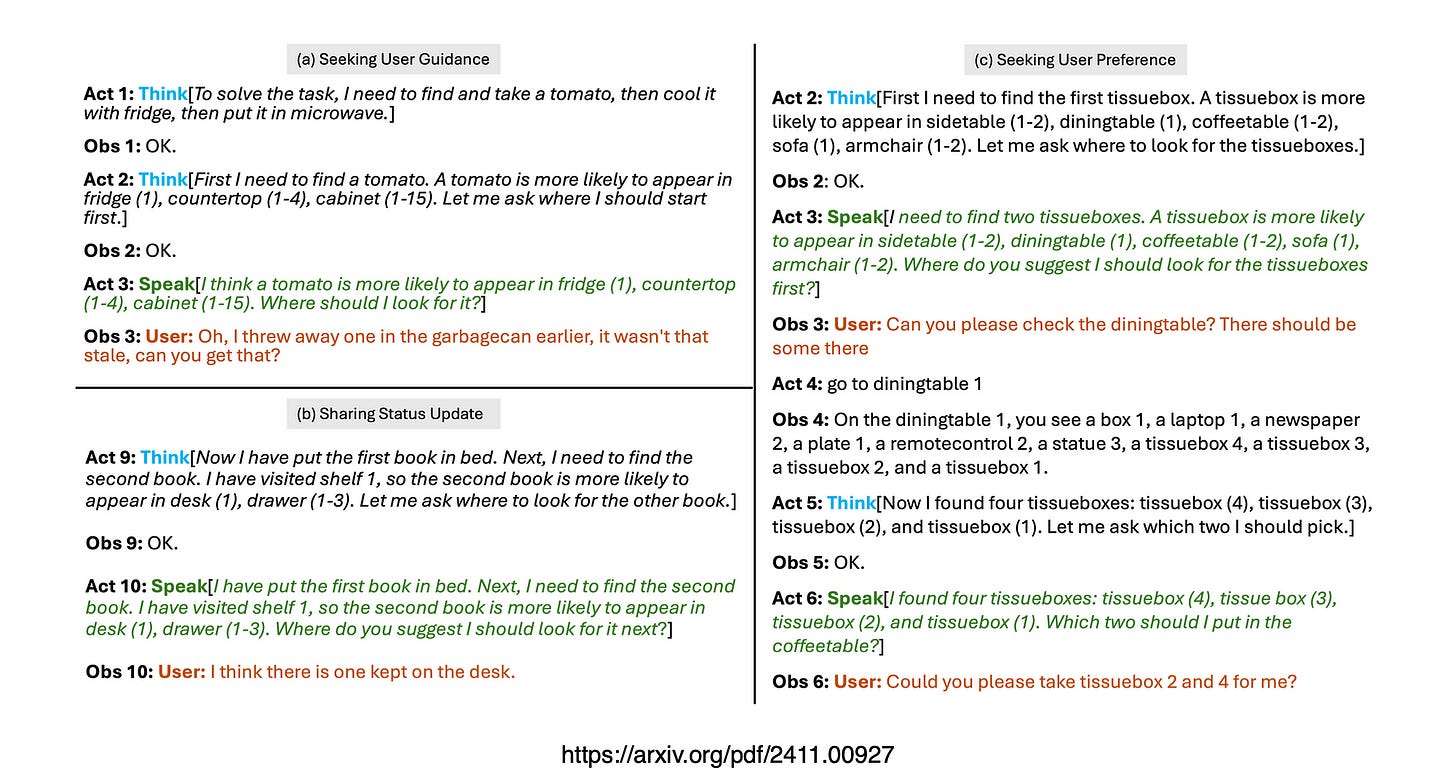

1. Seeking user guidance. When an agent seeks user guidance to refine its search strategy, it actively involves the user in defining the best approach, improving accuracy by ensuring its search aligns with user expectations. This type of dialogue encourages collaboration, allowing users to clarify ambiguous instructions or adjust the search path as new insights arise.

2. Sharing status updates. Updates on task progress are essential for transparency, as they inform users of what the agent has completed and of any challenges encountered. Regular updates help users feel informed and give them an opportunity to provide additional instructions if the task requires it.

3. Soliciting user preferences. Here the agent gathers input to shape task outcomes, ensuring decisions align closely with user needs. This approach supports more personalised results, making task execution feel interactive and responsive to individual preferences.

Together, these dialogue types create a flexible, two-way interaction that enhances the quality of task completion by combining automated assistance with user-specific insights.

Ultimately, these interactions improve alignment, trust and satisfaction as the agent adapts and optimises its actions based on direct user input.
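The three dialogue types can be viewed as different kinds of turns an agent may choose to take. The sketch below assumes a simple agent state with three fields and applies a fixed priority: clarify first, then ask for a preference, then report status. The state fields, the priority order and the message wording are illustrative assumptions and are not part of ReSpAct.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class DialogType(Enum):
    SEEK_GUIDANCE = "seek guidance"
    STATUS_UPDATE = "status update"
    SOLICIT_PREFERENCE = "solicit preference"


@dataclass
class AgentState:
    instruction_ambiguous: bool = False
    completed_subtasks: list[str] = field(default_factory=list)
    candidate_options: list[str] = field(default_factory=list)


def choose_dialog(state: AgentState) -> Optional[tuple[DialogType, str]]:
    """Pick the kind of user-facing turn to take next, or None to keep working."""
    if state.instruction_ambiguous:
        return (DialogType.SEEK_GUIDANCE,
                "Your request could mean several things. Could you clarify it?")
    if len(state.candidate_options) > 1:
        options = ", ".join(state.candidate_options)
        return (DialogType.SOLICIT_PREFERENCE,
                f"I found several options: {options}. Which do you prefer?")
    if state.completed_subtasks:
        done = "; ".join(state.completed_subtasks)
        return DialogType.STATUS_UPDATE, f"Progress so far: {done}."
    return None


state = AgentState(completed_subtasks=["searched catalogue"],
                   candidate_options=["red lamp", "blue lamp"])
print(choose_dialog(state))
```

In a real agent an LLM would typically make this choice and phrase the message. The explicit rules here just make the distinction between the three dialogue types visible.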

Symbolic Reasoning

In the context of language models, symbolic reasoning is a way for AI Agents to organise and process complex tasks by creating an internal mental map of an environment.

For example, just as we would remember the layout of a room and where items are located, an AI agent can use symbolic reasoning to recognise objects (like a shelf, a drawer, or a sink) and understand their relationships.

This helps the agent reason through the steps it needs to take, such as finding a cloth, cleaning it and placing it on the shelf, based on an internal representation or picture of the task environment.

This structured approach allows AI Agents to make decisions, handle ambiguity, and refine their actions in a logical sequence, similar to how we might mentally map and solve tasks step-by-step.
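A symbolic map of this kind can be as simple as a table of objects and their locations. The sketch below assumes a dictionary from object names to receptacles, plus a set of established properties per object. The action strings loosely mimic text-based household tasks such as the cloth example, and every name here (`SymbolicMap`, `clean_and_place`) is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SymbolicMap:
    # Where each known object currently is, e.g. {"cloth": "drawer 1"}.
    location: dict[str, str]
    # Properties the agent has established about objects, e.g. {"cloth": {"clean"}}.
    properties: dict[str, set[str]] = field(default_factory=dict)


def clean_and_place(world: SymbolicMap, obj: str, cleaner: str, target: str) -> list[str]:
    """Plan from the map, update it after each step, and return the action log."""
    if obj not in world.location:
        # The map has no entry: in a ReSpAct setting, ask instead of guessing.
        return [f"ask user: where can I find the {obj}?"]
    log = [f"go to {world.location[obj]}", f"take {obj}"]
    world.location[obj] = "agent"                  # the agent now holds the object
    log += [f"go to {cleaner}", f"clean {obj} with {cleaner}"]
    world.properties.setdefault(obj, set()).add("clean")
    log += [f"go to {target}", f"put {obj} on {target}"]
    world.location[obj] = target                   # relation updated in the map
    return log


world = SymbolicMap(location={"cloth": "drawer 1", "soap": "sink 1"})
for action in clean_and_place(world, "cloth", "sink 1", "shelf 1"):
    print(action)
print(world.location["cloth"], world.properties["cloth"])
```

Because the plan reads from the map and writes back to it, the agent always knows where the cloth is and whether it is clean. The early return shows where a ReSpAct-style agent would switch to dialogue when its map runs out.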

From “Reason & Act” to “Reason, Speak & Act”

AI Agents built with LangChain are mostly ReAct agents, which follow a pattern of reasoning and acting. A ReSpAct AI Agent follows a pattern of reasoning, then speaking, then acting.

ReAct agents as implemented in LangChain offer a very high level of inspectability and explainability, because each sub-step in the process is shown. From a conversational perspective, each of these sub-steps can therefore be repurposed as a dialog turn in which the user is consulted or informed.
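The idea of turning inspectable sub-steps into dialog turns can be sketched without any framework. The example below assumes a ReAct trace has already been captured as a list of `Thought`, `Action` and `Observation` records. These are plain tuples here, not LangChain objects. Each record is mapped to a turn that either informs or consults the user. The `CONFIRM_ACTIONS` set and the `tool[argument]` action format are illustrative assumptions.

```python
from typing import NamedTuple


class ReActStep(NamedTuple):
    kind: str  # "Thought", "Action" or "Observation", as logged in a ReAct trace
    text: str


class DialogTurn(NamedTuple):
    mode: str     # "inform" (status update) or "consult" (wait for the user)
    message: str


# Hypothetical actions considered consequential enough to need user sign-off.
CONFIRM_ACTIONS = {"book", "buy", "delete"}


def to_dialog_turns(trace: list[ReActStep]) -> list[DialogTurn]:
    """Repurpose each ReAct sub-step as a user-facing dialog turn."""
    turns = []
    for step in trace:
        if step.kind == "Thought":
            turns.append(DialogTurn("inform", f"My reasoning: {step.text}"))
        elif step.kind == "Action":
            tool = step.text.split("[", 1)[0]  # e.g. "book" from "book[flight 2]"
            mode = "consult" if tool in CONFIRM_ACTIONS else "inform"
            turns.append(DialogTurn(mode, f"Next step: {step.text}"))
        elif step.kind == "Observation":
            turns.append(DialogTurn("inform", f"Result: {step.text}"))
    return turns


trace = [
    ReActStep("Thought", "I should look up flights before booking."),
    ReActStep("Action", "search[flights to Paris]"),
    ReActStep("Observation", "3 flights found"),
    ReActStep("Action", "book[flight 2]"),
]
for turn in to_dialog_turns(trace):
    print(turn.mode, "-", turn.message)
```

Only the booking action pauses for the user here. Every other sub-step becomes a lightweight status message, which is one possible split between informing and consulting.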

In real-world settings, engaging with users can provide essential insights, clarifications, and guidance that greatly enhance an agent’s ability to solve tasks effectively.

Finally

ReSpAct enables agents to handle both actions and dialogue seamlessly, allowing them to switch fluidly between reasoning, performing tasks, and interacting with users. This approach supports more coherent and effective decision-making, especially in complex environments where both interaction and action are needed to complete tasks accurately.

The ReSpAct framework supports adaptive, context-aware interactions, elevating AI agents from simple command-response tools to truly conversational partners.

By facilitating meaningful dialogue, ReSpAct enables agents to explain decisions, respond to feedback, and operate in complex environments intuitively and effectively.

Stateful policies in ReSpAct allow agents to make context-based adjustments, like confirming details or selecting specific APIs, ensuring alignment with task requirements for precise LLM-based task completion.
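A stateful policy of this kind can be illustrated with a small slot-filling state machine. The sketch assumes a flight-booking task. `DialogState`, `next_move` and the API names `search_flights` and `book_flight` are hypothetical. The rule that a read-only search runs before a confirmed booking is an illustrative design choice, not something ReSpAct prescribes.

```python
from dataclasses import dataclass, field
from typing import Optional


def _empty_slots() -> dict[str, Optional[str]]:
    return {"destination": None, "date": None}


@dataclass
class DialogState:
    slots: dict[str, Optional[str]] = field(default_factory=_empty_slots)
    searched: bool = False
    confirmed: bool = False


def next_move(state: DialogState) -> tuple[str, str]:
    """Return (move, detail); move is "ask", "call_api" or "confirm"."""
    for name, value in state.slots.items():
        if value is None:
            return "ask", f"What {name} would you like?"
    if not state.searched:
        return "call_api", "search_flights"   # hypothetical read-only API
    if not state.confirmed:
        dest, date = state.slots["destination"], state.slots["date"]
        return "confirm", f"Shall I book the flight to {dest} on {date}?"
    return "call_api", "book_flight"          # hypothetical booking API


state = DialogState()
print(next_move(state))                        # asks for the destination
state.slots.update(destination="Paris", date="Friday")
print(next_move(state))                        # ('call_api', 'search_flights')
state.searched = True
print(next_move(state))                        # confirmation question
state.confirmed = True
print(next_move(state))                        # ('call_api', 'book_flight')
```

Keeping the state explicit means the same request can lead to different API calls depending on what has already been established. The irreversible call is only selected after the user has confirmed.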

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.