Building The Most Basic LangChain Chatbot

In this article I consider the basic building blocks of an LLM-based chatbot, and how LangChain goes about assembling them.

Traditional Chatbots

Considering the image below, traditional chatbots really consist of four basic elements. In the recent past there have been numerous attempts to reimagine this structure. The main focus of these attempts was to loosen up the rigidity of the hard-coded and fixed architectural elements.

Natural Language Understanding (NLU)

The NLU engine is responsible for intents and entities. This is the only AI part of the chatbot: user input is submitted to the NLU engine, which detects intents and entities in that input.

Normally there is a GUI to define the training data of the NLU engine and to start training the model on this data. Typical advantages of NLU engines are:

There are many open-sourced models available.

NLU engines have a small footprint and are not resource intensive; local and edge installations are feasible.

The UI is non-technical, and only small amounts of training data are required.

NLUs have been around for so long that large corpora of named entities exist, together with predefined entities and training data for specific verticals such as banking, help desks, HR, etc.

Model training time is short and in a production environment, models can be trained multiple times during a day.

NLU training data was one of the first areas where LLMs were introduced. LLMs were used to generate training data for the NLU model based on existing conversations and sample training data.

Conversation Flow & Dialog Management

The dialog flow and logic are designed and built within a no-code to low-code GUI. The flow is essentially predefined, with predefined logic points. The conversation proceeds according to whether the input data matches the criteria of each logic gate.

There have been efforts to introduce flexibility to the flow, for some semblance of intelligence.
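The predefined flow with logic gates can be pictured as a small state machine. What follows is a minimal sketch of the idea, not any vendor's implementation; the state names, the intents and the `next_state` helper are all hypothetical.

```python
# Hypothetical dialog flow: each state maps a detected intent to the next state.
FLOW = {
    "start": {"check_balance": "ask_account", "report_fraud": "handover"},
    "ask_account": {"provide_account": "show_balance"},
}
FALLBACK_STATE = "fallback"


def next_state(state: str, intent: str) -> str:
    """Return the next dialog state, or the fallback when no logic gate matches."""
    return FLOW.get(state, {}).get(intent, FALLBACK_STATE)


# Walk through a short conversation, one detected intent per turn.
state = "start"
for intent in ["check_balance", "provide_account"]:
    state = next_state(state, intent)
print(state)  # show_balance
```

Any intent that is not listed for the current state drops to the fallback, which is where the rigidity of this approach shows.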

Message Abstraction Layer

The message abstraction layer holds predefined bot responses for each dialog turn. These responses are fixed, and in some cases a template is used to insert data and create personalised messages.
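A fixed response with a template slot could look something like the sketch below. It uses `string.Template` from the Python standard library; the message keys, the wording and the `render` helper are hypothetical and only illustrate the principle.

```python
from string import Template

# Hypothetical message store: one fixed response per dialog turn.
MESSAGES = {
    "greeting": Template("Hello $name, how can I help you today?"),
    "balance": Template("Your $account account balance is $amount."),
}


def render(turn: str, **values: str) -> str:
    """Fill the stored template for a dialog turn with the supplied values."""
    return MESSAGES[turn].substitute(**values)


print(render("greeting", name="Thandi"))
```

Every new dialog turn, language or persona adds more entries to a store like this.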

Managing the messages becomes a challenge as the chatbot application grows; because the messages are static, their total number can be significant. Introducing multilingual chatbots adds considerable complexity.

Whenever the tone or persona of the chatbot needs to change, all of these messages need to be revisited and updated.

This is also one of the first areas where LLMs were introduced, to leverage the Natural Language Generation (NLG) capabilities of LLMs.

Out-of-domain questions were handled by means of knowledge bases, which were primarily used for QnA and queried with semantic similarity search. In many regards this could be considered an early version of RAG.
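To make the knowledge-base lookup concrete, here is a minimal sketch of a similarity search over stored questions. It assumes a toy word-count vector in place of a real embedding model, so it matches on shared words rather than on meaning; the knowledge base content and the function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base of question/answer pairs.
KNOWLEDGE_BASE = {
    "How do I reset my password?": "Use the 'Forgot password' link on the login page.",
    "What are your opening hours?": "We are open from 08:00 to 17:00 on weekdays.",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def answer(question: str) -> str:
    """Return the stored answer whose question is most similar to the input."""
    query = embed(question)
    best = max(KNOWLEDGE_BASE, key=lambda q: cosine(query, embed(q)))
    return KNOWLEDGE_BASE[best]


print(answer("I forgot my password, how can I reset it?"))
```

Swapping `embed` for a real embedding model is what turns this word matching into semantic search.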

Considering the image below, many organisations and technology providers are navigating the transition from Traditional Chatbots to introducing Large Language Models.

The image depicted below outlines the various elements and features comprising a Large Language Model (LLM). Consequently, the challenge lies in accessing each of these features at the appropriate time, ensuring stability, predictability, and to a certain extent, reproducibility.

LangChain Structure

Introduction

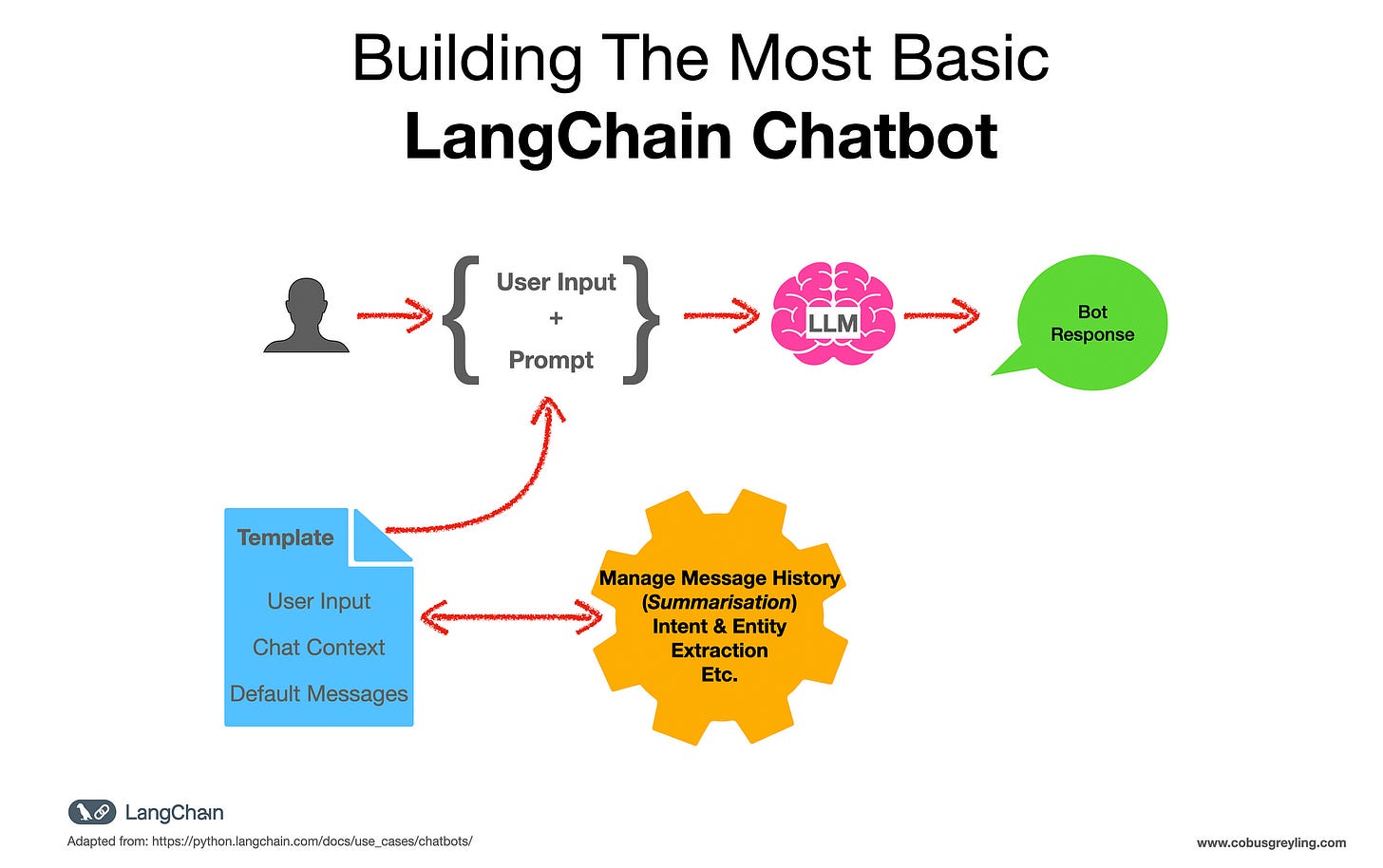

Chatbots represent one of the most common applications for Large Language Models (LLMs).

A chatbot's fundamental capabilities include conducting extended, stateful dialogues (which require memory) and providing users with pertinent responses derived from relevant information.

The Use-Case Is Important

Crafting a chatbot entails evaluating diverse techniques, each offering unique advantages and trade-offs.

These considerations hinge on the types of inquiries the chatbot is anticipated to address effectively.

Chatbots frequently leverage retrieval-augmented generation (RAG) when accessing private data to enhance their proficiency in responding to domain-specific queries.

Additionally, designers might opt to implement routing mechanisms across multiple data sources, ensuring the selection of the most relevant context for delivering accurate answers.
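Routing can be sketched in a few lines. The example below assumes a simple keyword overlap to pick a data source; in practice the routing decision is often made by an LLM or a classifier instead. The source names, keywords and the `route` helper are hypothetical.

```python
import re

# Hypothetical data sources, each described by a few keywords.
SOURCES = {
    "hr_policies": {"leave", "salary", "benefits"},
    "it_helpdesk": {"password", "laptop", "vpn"},
}


def route(question: str, default: str = "general") -> str:
    """Pick the source whose keywords overlap most with the question."""
    words = set(re.findall(r"\w+", question.lower()))
    scores = {name: len(words & keywords) for name, keywords in SOURCES.items()}
    best = max(scores, key=scores.get)
    # Fall back to the default source when nothing matches at all.
    return best if scores[best] > 0 else default


print(route("How do I reset my VPN password?"))  # it_helpdesk
```

The selected source would then be queried for context before the question is passed to the model.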

The use of specialised forms of chat history or memory beyond mere message exchange can further enrich the bot’s capabilities.

Basic Chatbot Architecture

Chroma is a database for building AI applications with embeddings. It is installed together with the LangChain packages in the example below, although this basic chatbot does not query it.

For this chatbot, OpenAI serves as the backbone, with the model set to gpt-3.5-turbo-1106.

The code also shows how the messages are passed into the conversation history. This basic idea underpins a chatbot’s ability to interact conversationally.
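Stripped of any framework, the idea is simply a list of messages that grows with every turn and is sent to the model in full each time. The sketch below uses only the standard library; `chat_turn` and `fake_model` are hypothetical names, and `fake_model` is a stand-in for a real LLM call.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def chat_turn(history: list[Message], user_input: str,
              model: Callable[[list[Message]], str]) -> str:
    """Append the user message, call the model with the full history, store the reply."""
    history.append({"role": "user", "content": user_input})
    reply = model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


def fake_model(messages: list[Message]) -> str:
    """Stand-in for an LLM call: reports how many messages it was given."""
    return f"I can see {len(messages)} message(s)."


history: list[Message] = []
chat_turn(history, "Translate 'I love programming' to French.", fake_model)
print(chat_turn(history, "What did you just say?", fake_model))
```

On the second turn the model receives three messages, including its own earlier reply, which is what lets a real model answer a follow-up question.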

Prompt templates make formatting easier. The MessagesPlaceholder, as seen in the code below, inserts the chat messages passed into the chain's input under the messages key directly into the prompt.

Complete Working LangChain Example

The code below can be copied verbatim and pasted into a Colab notebook. The only change you will have to make is to add your OpenAI API key where indicated.

%pip install --upgrade --quiet langchain langchain-openai langchain-chroma

##########

import os

# Replace the placeholder with your own OpenAI API key.
os.environ['OPENAI_API_KEY'] = "<Your API Key Goes Here>"

##########

from langchain_openai import ChatOpenAI

chat = ChatOpenAI(model="gpt-3.5-turbo-1106", temperature=0.2)

##########

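from langchain_core.messages import HumanMessage

# A single message with no prior history.
chat.invoke(
    [
        HumanMessage(
            content="Translate this sentence from English to French: I love programming."
        )
    ]
)

########## Generate Response 1

# Each call is stateless: without the earlier messages the model has nothing to refer back to.
chat.invoke([HumanMessage(content="What did you just say?")])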

##########

from langchain_core.messages import AIMessage

# Pass the whole conversation so the model can answer the follow-up question.
chat.invoke(
    [
        HumanMessage(
            content="Translate this sentence from English to French: I love programming."
        ),
        AIMessage(content="J'adore la programmation."),
        HumanMessage(content="What did you just say?"),
    ]
)

########## Generate Response 2

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# System instruction followed by a slot for the conversation messages.
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant. Answer all questions to the best of your ability.",
        ),
        MessagesPlaceholder(variable_name="messages"),
    ]
)

# Pipe the formatted prompt into the chat model.
chain = prompt | chat

##########

chain.invoke(
    {
        "messages": [
            HumanMessage(
                content="Translate this sentence from English to French: I love programming."
            ),
            AIMessage(content="J'adore la programmation."),
            HumanMessage(content="What did you just say?"),
        ],
    }
)

##########

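# Assumed setup: an in-memory chat history from langchain_core holds the messages between turns.
from langchain_core.chat_history import InMemoryChatMessageHistory

demo_ephemeral_chat_history = InMemoryChatMessageHistory()

demo_ephemeral_chat_history.add_user_message(
    "Translate this sentence from English to French: I love programming."
)

response = chain.invoke({"messages": demo_ephemeral_chat_history.messages})

response

##########

# Store the model's reply so the follow-up question has context.
demo_ephemeral_chat_history.add_ai_message(response)

demo_ephemeral_chat_history.add_user_message("What did you just say?")

chain.invoke({"messages": demo_ephemeral_chat_history.messages})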

In Conclusion

Large Language Models (LLMs) have revolutionised traditional chatbot development by offering unprecedented capabilities in natural language understanding and especially generation.

Unlike earlier rule-based or template-driven approaches, LLMs enable chatbots to comprehend and generate human-like responses dynamically, adapting to diverse conversational contexts.

This shift has disrupted chatbot development and its established architectures by reducing the reliance on handcrafted rules and domain-specific knowledge bases.

Also, LLMs facilitate the creation of more sophisticated chatbots capable of handling a broader range of inquiries with increased accuracy and fluency.

Developer focus has shifted to fine-tuning models, RAG, In-Context Learning (ICL) and other advanced techniques, rather than solely on crafting dialogue scripts.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.
