Can Conversation Designers Excel As Data Designers?

The Emergence Of Data Design to create highly granular, conversational & refined data for language model fine-tuning.

Introduction

This phenomenon is that models are trained not to necessarily imbue the model with knowledge, hence augmenting the Knowledge Intensive nature of the model.

But rather change the behaviour of the model, teaching the model new behaviour.

Can Conversation Designers Excel As Data Designers?

There has been many discussions on the future of conversation designers…and an idea came to mind…many of these datasets require human involvement in terms of annotation and oversight.

And these datasets hold key elements of dialog, reasoning and chains of thought…

So, the question which has been lingering in the back of my mind for the last couple of days is, is this not such a well suited task for conversation designers?

Especially in getting the conversational and thought process topology of the data right?

Allow me to explain, I have been talking much about a data strategy needing to consist of the Eight D’s: data discovery, data design, data development and data delivery.

Data delivery has been discuss much considering RAG and other delivery strategies.

Data Discovery has also been addressed to some degree, for instance XO Platform’s Intent Discovery. However, there is still much to do in finding new development opportunities…

Coming to Data Design…in this article I discuss three recent studies which focusses on teaching language models (both large and small) certain behaviours. While not necessarily imbuing the model with specific world knowledge, but rather improving the behaviour and abilities of the model.

These abilities can include self correction, reasoning abilities, improving contextual understanding, both short and long, and more…

Partial Answer Masking (PAM)

A recent study showed how Small Language Models (SLMs) can be fine-tuned to imbue them with the ability to self-correct.

A pipeline was constructed to teach SLMs, via self-correction data a method called Partial Answer Masking (PAM). This approach aims to endow the model with the capability for intrinsic self-correction through fine-tuning.

During the fine-tuning process, we propose Partial Answer Masking (PAM) to make the model have the ability of self- verification. ~ Source

The objective of Partial Answer Masking is to guide the language model towards self-correction. PAM can be considered as a new data design.

Prompt Erasure

This data design approach of Prompt Erasure, is a technique of data design which turns Orca-2 into a Cautious Reasoner because it learns not only how to execute specific reasoning steps, but to strategise at a higher level on how to approach a particular task.

Rather than naively imitating powerful LLMs, the LLM is used as a reservoir of behaviours from which a judicious selection is made for the approach for the task at hand.

Orca-2 is an open-sourced Small Language Model (SLM) which excels at reasoning. This is achieved by decomposing a problem and solving it step-by-step, which adds to observability and explainability.

This skill of reasoning was developed during fine-tuning of the SLM by means of granular and meticulous fine-tuning…

Nuanced training data was created, an LLM is presented with intricate prompts which is designed with the intention to elicit strategic reasoning patterns which should yield more accurate results.

During the training phase within the training data, the smaller model is exposed to the task and the subsequent output from the LLM. The output data of the LLM defines how the LLM went about in solving the problem.

Phi-3

Post-training of Phi-3-mini involves two stages:

Supervised Fine-Tuning (SFT): Utilises highly curated, high-quality data across diverse domains such as math, coding, reasoning, conversation, model identity, and safety. Initially, the data comprises of English-only examples.

Direct Preference Optimisation (DPO): Focuses on chat format data, reasoning, and responsible AI efforts to steer the model away from unwanted behaviours by using these outputs as “rejected” responses.

These processes enhance the model’s abilities in math, coding, reasoning, robustness, and safety, transforming it into an AI assistant that users can interact with efficiently and safely.

Chain-Of-Thought Active Prompting

Inspired by the idea of annotating reasoning steps to obtain effective exemplars, the study aims to select the most helpful questions for annotation judiciously instead of arbitrarily.

Uncertainty Estimation

The LLM is queried a predefined number of times, to generate possible answers for a set of training questions. These answer and question sets are generated in a decomposed fashion with intermediate steps (Chain-Of-Thought).

An uncertainty calculator is used based on the answers via an uncertainty metric.

Selection

Ranked according to the uncertainty, the most uncertain questions are selected for human inspection and annotation.

Annotation

Human annotators are used to annotate the selected uncertain questions.

Final Inference

Final inference for each question is performed with the newly annotated exemplars.

Implementing Chain-of-Thought Principles in Fine-Tuning Data for RAG Systems

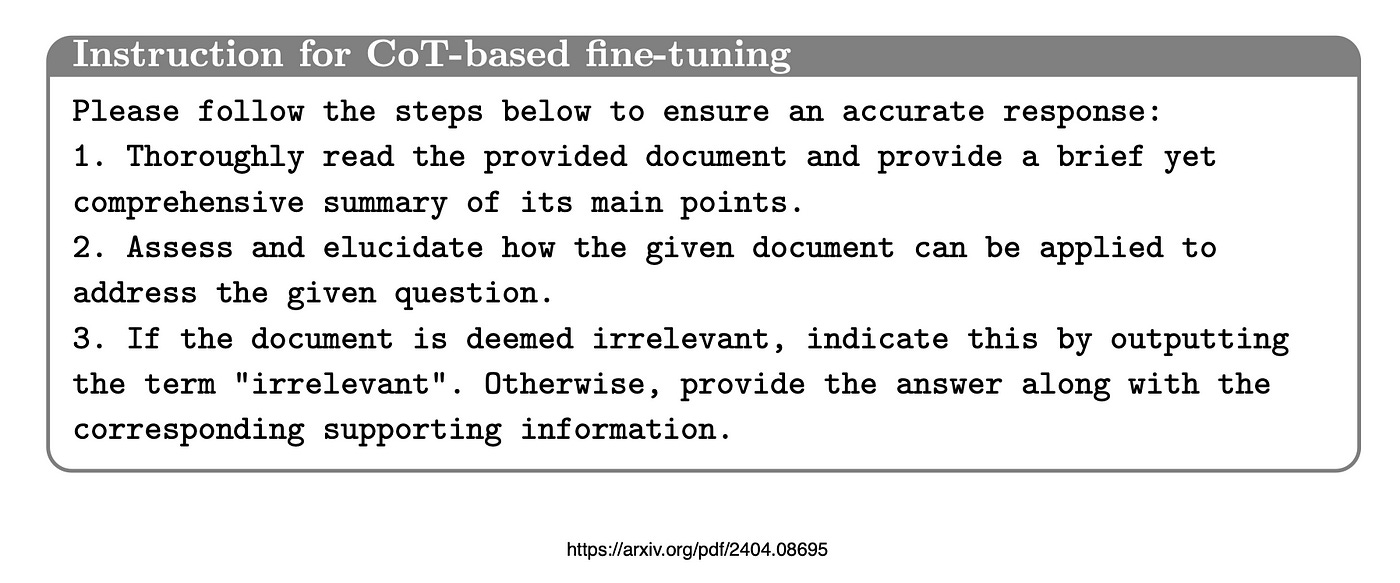

In this research to improve RAG performance, the generation model is subjected to Chain-Of-Thought (CoT) based fine-tuning.

By leveraging a small set of annotated question-answer pairs, the researchers developed an instruction-tuning dataset for fine-tuning an LLM, enabling it to answer questions based on retrieved documents.

For the CoT-based question answering task, we annotated 50 question-answer pairs using the CoT method. ~ Source

Chain-Of-Thought Based Fine-Tuning

The study proposes a new Chain-Of-Thought based fine-tuning method to enhance the capabilities of the LLM generating the response.

Considering the image below, the study divided the RAG question types into three groupings:

Fact orientated,

Short & solution orientated &

Long & solution orientated.

Considering that retrieved documents may not always answer the user’s question, the burden is placed on the LLM to discern if a given document contains the information to answer the question.

Inspired by the Chain-Of-Thought Reasoning (CoT), the study proposes to break down the instruction into several steps.

Initially, the model should summarise the provided document for a comprehensive understanding.

Then, it assesses whether the document directly addresses the question.

If so, the model generates a final response, based on the summarised information.

Otherwise, if the document is deemed irrelevant, the model issues the response as irrelevant.

Additionally, the proposed CoT fine-tuning method should effectively mitigate hallucinations in LLMs, enabling the LLM to answer questions based on the provided knowledge documents.

Below the instruction for CoT fine-tuning is shown…

By leveraging a small set of annotated question-answer pairs, the researchers developed an instruction-tuning dataset to fine-tune an LLM, enabling it to answer questions based on retrieved documents.

There was focus to integrate answers from different documents for a specific question.

Rapid advancements in generative natural language processing technologies have enabled the generation of precise and coherent answers by retrieving relevant documents tailored to user queries.

In Conclusion

In this article I attempt to show how language model behaviour is changed via fine tuning and how inventing new data topologies via a process of data design.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.