Chain-of-Instructions (CoI) Fine-Tuning & Going Beyond Instruction Tuning

This approach draws inspiration from Chain-of-Thought (CoT) prompting which generates step-by-step rationales from LLMs.

Hence CoI is not a new prompting technique, like CoT. Instead, this research focuses on the tasks that require solving complex and compositional instructions, and builds a new dataset and techniques to solve such tasks.

Introduction

Recently I wrote an article on the Orca-2 Small Language Model (SLM) from Microsoft. And even-though Orca-2 is a small language model, it has immense reasoning capabilities.

This reasoning capabilities were achieved by using nuanced training data. This leads into a topic I have been giving consideration to the last few months; that of data design.

And this process of creating nuanced training data at scale can be described as the process of data design. Hence designing the data to be in a certain format for more effective fine-tuning.

Nuanced Data

In the case of Orca-2, in order to create nuanced training data, an LLM is presented with intricate prompts which is designed with the intention to elicit strategic reasoning patterns which should yield more accurate results.

Also, during the training phase, the smaller model is exposed to the (1) taskand the subsequent (2) output from the LLM.

The output data of the LLM defines how the LLM went about in solving the problem.

But the original (3) prompt is not shown to the SLM. This approach of Prompt Erasure, is a technique which turns Orca-2 into a Cautious Reasoner because it learns not only how to execute specific reasoning steps, but to strategise at a higher level how to approach a particular task.

Rather than naively imitating powerful LLMs, the LLM is used as a reservoir of behaviours from which a judicious selection is made for the approach for the task at hand.

Back To Chain-of-Instructions

Fine-tuning large language models (LLMs) with a diverse set of instructions has enhanced their ability to perform various tasks, including ones they haven’t seen before.

However, existing instruction datasets mostly contain single instructionsand struggle with complex instructions that involve multiple steps.

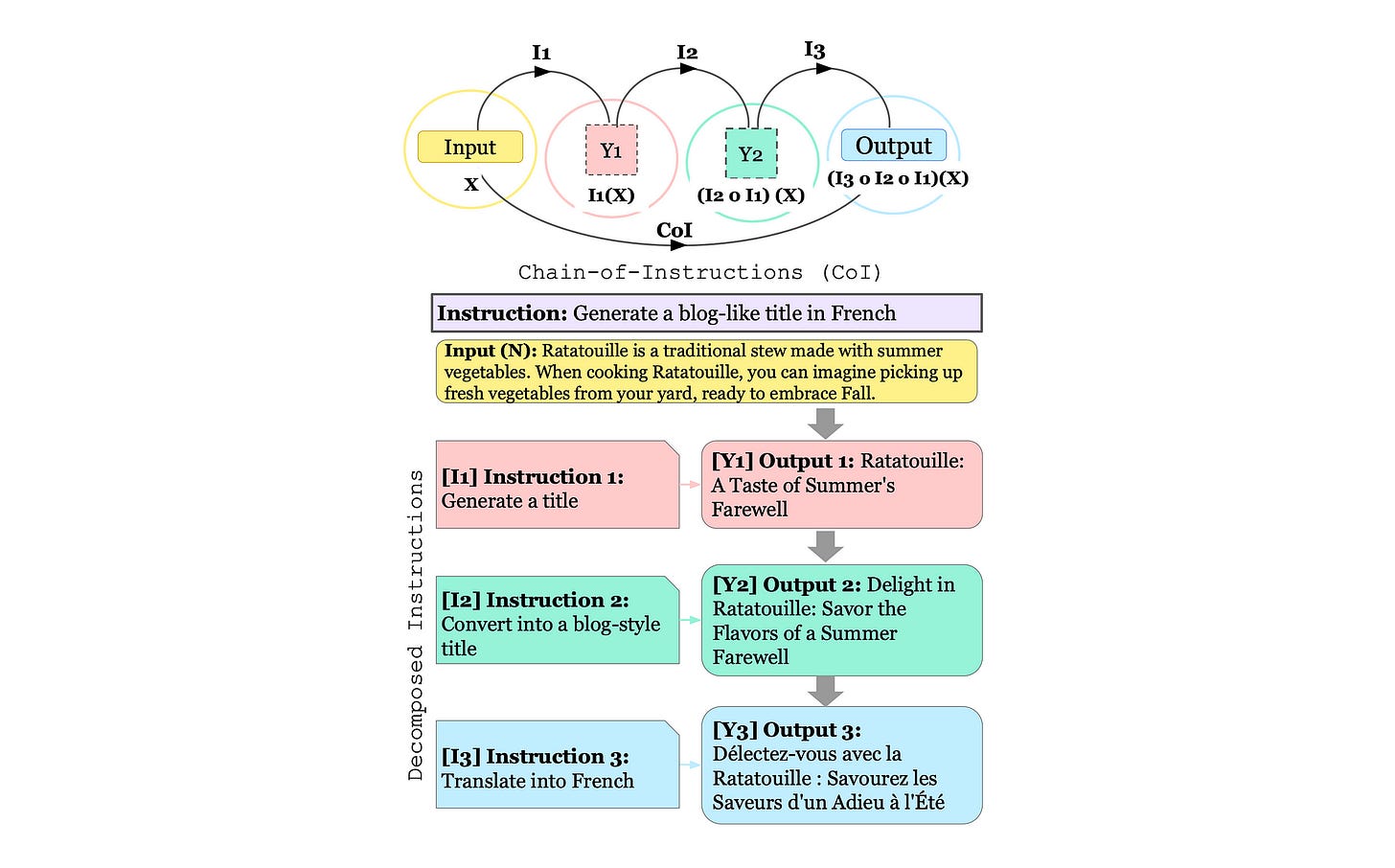

This study introduces a new concept called Chain-of-Instructions (CoI), where the output of one instruction becomes the input for the next, forming a chain within the training data.

So I get the sense that principles from the discipline Prompt Engineering are being implemented within the design of training data. This is a phenomenon where non-gradient training principles for an LLM is transferred to a gradient approach.

Unlike traditional single-instruction tasks, this new approach encourages models to solve each subtask sequentially until reaching the final answer.

Hence the behaviour of the LLM is changed, and elements used to leverage LLM in-context learning are now used within the training data.

Fine-tuning with CoI instructions, known as CoI-tuning, improves the model’s capability to handle instructions with multiple subtasks. CoI-tuned models also outperform baseline models in multilingual summarisation, indicating the effectiveness of CoI models on new composite tasks.

Decomposed Instructions

Considering the image below, an instruction is given, with input text.

This is followed by detailed multi-step decomposition of instructions, with a sequenced instruction and output example.

Basic Approach

In-Context Learning

The framework leverages in-context learning on existing single instruction datasets to create chains of instructions.

The framework also automatically construct composed instruction datasets with minimal human supervision.

A fine-tuned model can generate incremental outputs at each step of a complex task chain. With CoI-tuning, step-by-step reasoning becomes feasible, especially when dealing with instructions composed of multiple subtasks.

The output of this method complies with the principle of having inspectable and observable AI.

In Conclusion

The study states that the training datasets were created with minimal human intervention. I can foresee how a carefully (human) curated dataset can improve this approach of transferring In-Context Learning (ICL) from a non-gradient to a gradient approach.

An interesting element is that an LLM was used in the preparation of the training dataset. This again reminds of the training or Orca-2, where an LLM was used to train a SLM.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.