Chain-Of-Knowledge Prompting

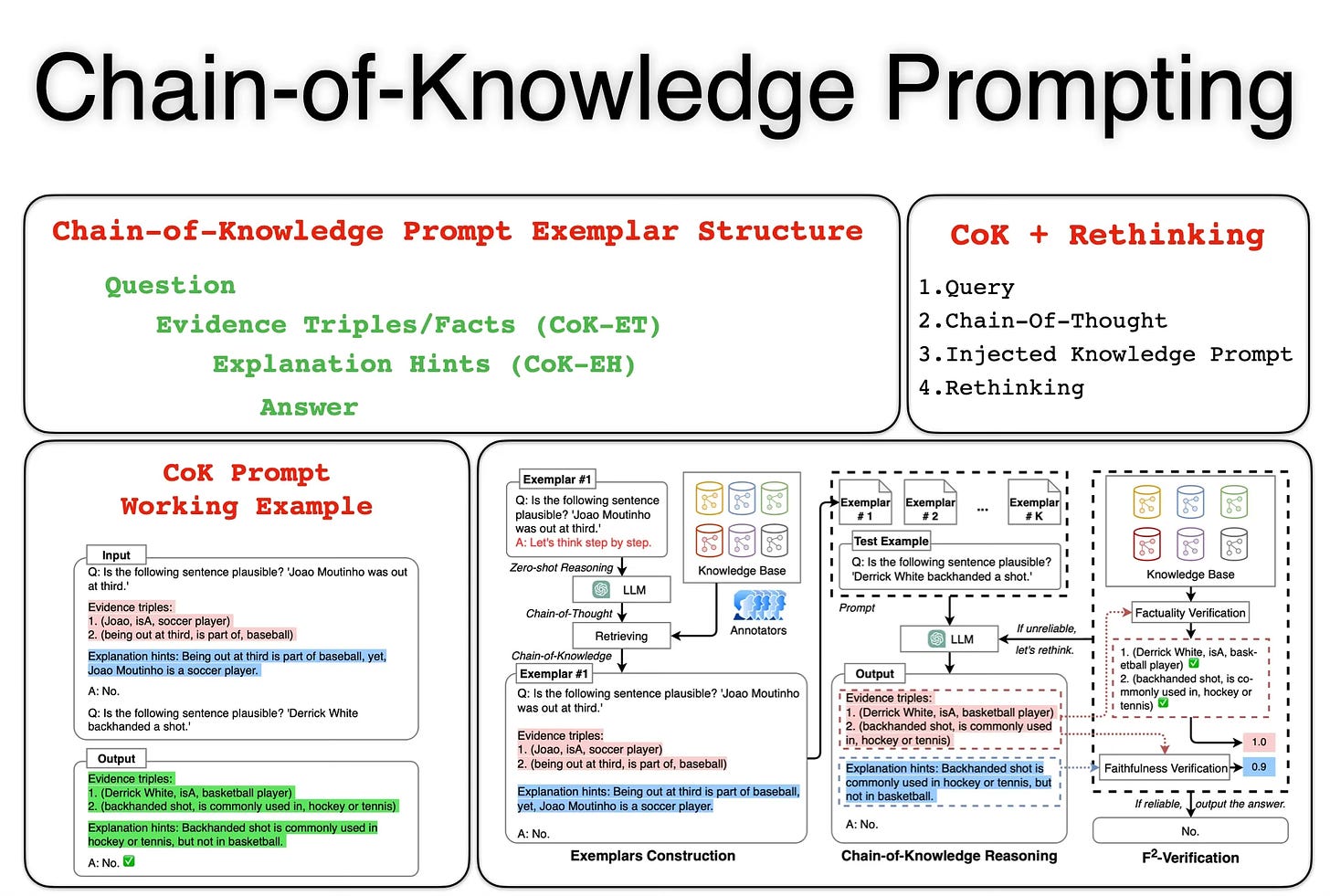

Chain-Of-Knowledge (CoK) is a new prompting technique which is well suited for complex reasoning tasks.

However, the challenge with CoK prompting is that it introduces considerable complexity when implemented in a production environment.

Overview

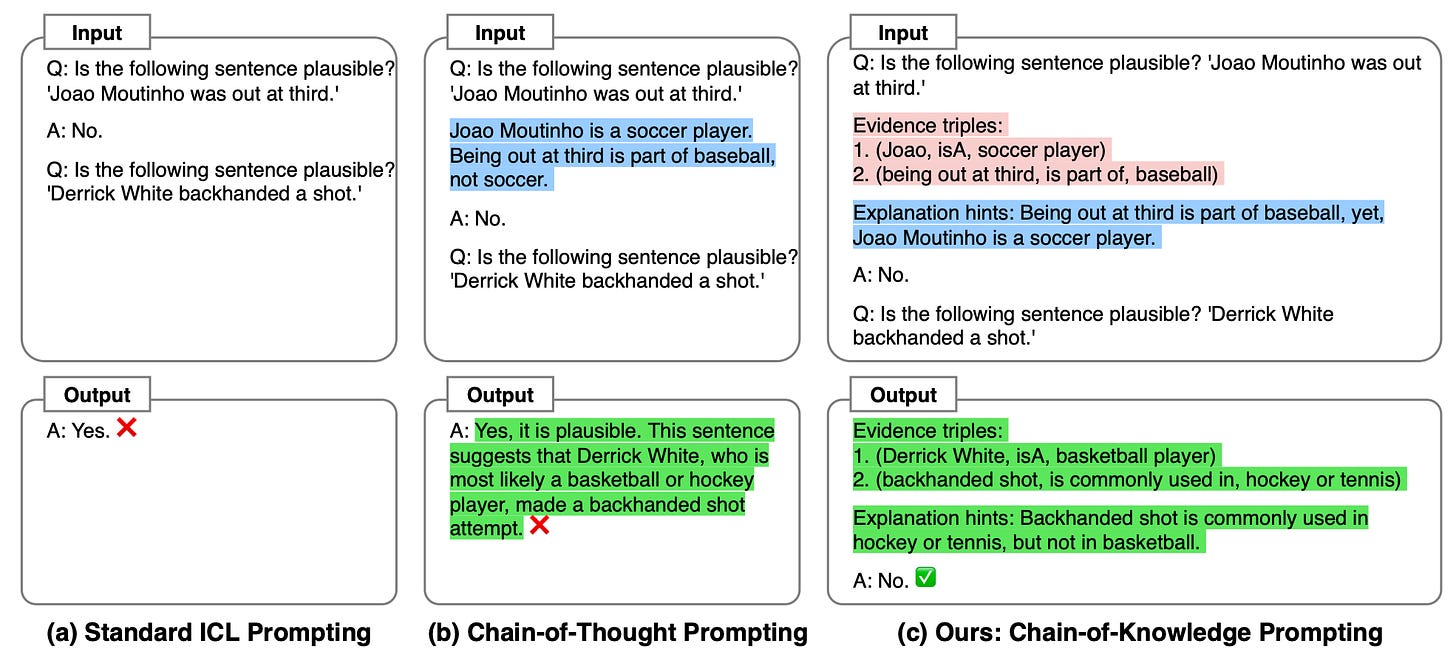

As seen in the graphic above, creating a working example of CoK in a prompt playground is straightforward. But the key challenge of CoK prompting lies in accurately constructing the Evidence Triples or Facts (CoK-ET).

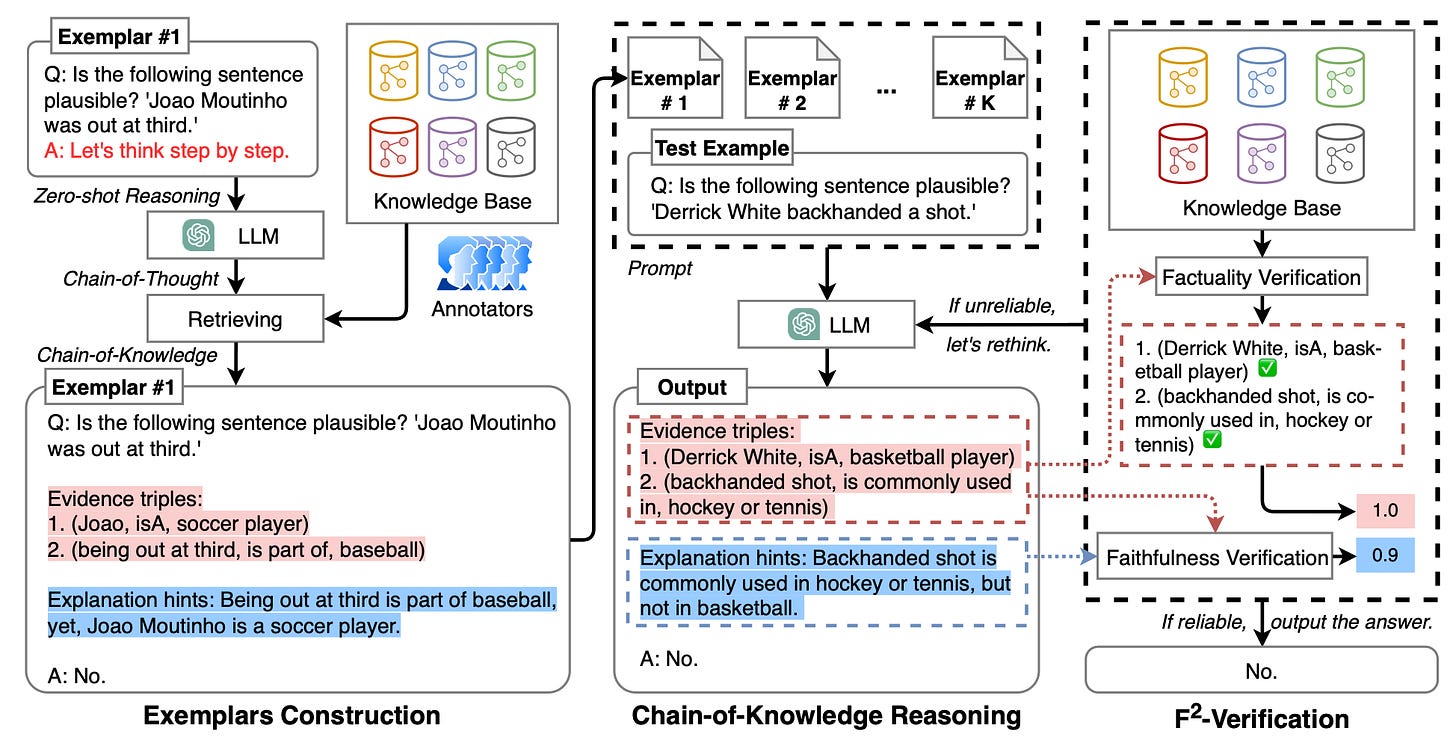

The study proposes a retrieval tool with access to a knowledge base. Hence CoK can be seen as an enabler for RAG implementations.
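To make the idea of a retrieval tool over a knowledge base concrete, here is a minimal sketch. It assumes a toy in-memory knowledge base keyed by subject entity; the names `KNOWLEDGE_BASE` and `retrieve_triples` are hypothetical and not taken from the study, and a production setup would likely use a proper search index or vector store instead of exact keyword matching.

```python
from typing import Dict, List, Tuple

# An evidence triple is (subject, relation, object).
Triple = Tuple[str, str, str]

# Hypothetical in-memory knowledge base, indexed by lowercase subject entity.
KNOWLEDGE_BASE: Dict[str, List[Triple]] = {
    "paris": [("Paris", "is capital of", "France")],
    "madrid": [("Madrid", "is capital of", "Spain")],
}


def retrieve_triples(query: str, kb: Dict[str, List[Triple]]) -> List[Triple]:
    """Return every stored triple whose subject is mentioned in the query."""
    # Crude tokenisation: split on whitespace and strip common punctuation.
    tokens = {tok.strip(".,?!").lower() for tok in query.split()}
    found: List[Triple] = []
    for subject, triples in kb.items():
        if subject in tokens:
            found.extend(triples)
    return found


hits = retrieve_triples("Is Paris larger than Madrid?", KNOWLEDGE_BASE)
for triple in hits:
    print(triple)
```

The retrieved triples could then be placed in the prompt as candidate evidence, which is where the link to RAG comes from.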

Another challenge is how to obtain annotated CoK-ET that better express the textual rationale.

CoK is another study showing the importance of In-Context Learning (ICL) of LLMs at inference.

A recent study argues that Emergent Abilities are not hidden or unpublished model capabilities which are just waiting to be discovered, but rather new approaches of In-Context Learning which are being built.

CoK hinges on the quality of data retrieved and the fact that human annotation of this data is important.

This brings us back to one of the most discussed topics currently in AI, and that is a human-in-the-loop approach where data is inspected, annotated and augmented.

There is also immense opportunity in accelerating human-in-the-loop instruction tuning with an AI-accelerated, data-centric studio.

The CoK approach also includes a two-factor verification step, consisting of factual verification and faithfulness verification.
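The two checks can be sketched as follows. This is an illustration under stated assumptions, not the scoring method from the study: factual verification is approximated as exact lookup of each generated triple in a set of known facts, and faithfulness verification is approximated as the share of triple entities that the explanation actually mentions. The function names and the 0.5 threshold are hypothetical.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]


def factual_score(generated: List[Triple], kb: Set[Triple]) -> float:
    """Fraction of generated triples that appear in the knowledge base."""
    if not generated:
        return 0.0
    return sum(1 for t in generated if t in kb) / len(generated)


def faithfulness_score(triples: List[Triple], explanation: str) -> float:
    """Fraction of triple entities (subjects and objects) named in the explanation."""
    entities = {e.lower() for s, _, o in triples for e in (s, o)}
    if not entities:
        return 0.0
    text = explanation.lower()
    # Substring match is a crude stand-in for a semantic similarity measure.
    return sum(1 for e in entities if e in text) / len(entities)


def passes_verification(
    triples: List[Triple], explanation: str, kb: Set[Triple], threshold: float = 0.5
) -> bool:
    """Both checks must clear the (assumed) threshold."""
    return (
        factual_score(triples, kb) >= threshold
        and faithfulness_score(triples, explanation) >= threshold
    )


kb = {
    ("Paris", "is capital of", "France"),
    ("Madrid", "is capital of", "Spain"),
}
generated = [
    ("Paris", "is capital of", "France"),
    ("Madrid", "is capital of", "Portugal"),  # deliberately wrong
]
explanation = "Paris is the capital of France and Madrid is the capital of Portugal."

print(factual_score(generated, kb))
print(faithfulness_score(generated, explanation))
```

The example is chosen so that the explanation is fully consistent with the triples while one triple is factually wrong, which is why the two checks are kept separate.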

Considerations

Inference time and token count can have a negative impact on cost and UX.

This approach is not as opaque as gradient-based approaches. Transparency exists throughout the process, which makes inspection easy at any step, with insight into what data went in and what came out.

Elements of CoK can be implemented without necessarily implementing the whole approach.

This approach will go a long way towards addressing LLM hallucination. A recent study illustrated that responses from LLMs are much more accurate when In-Context Learning (ICL) is used.

CoK can also be an enabler for organisations to use smaller models, due to the high level of contextually relevant data.

CoK again goes to show that at the heart of many LLM-based implementations is an LLM which acts as the backbone of the application, with a meta-prompt.

The basic premise of Chain-Of-Knowledge Prompting is to be a more robust approach than Chain-Of-Thought, which can at times be brittle.

More Detail On CoK

CoK-ET is a list of structured facts which holds the overall reasoning evidence, acting as a bridge from the query to the answer. CoK-EH is the explanation of this evidence.
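The two components can be pictured as fields of a few-shot exemplar. The sketch below is one possible layout, assuming a triple is a (subject, relation, object) tuple and that the prompt ends with an open "Evidence triples:" section for the model to complete. The class name, field names and prompt wording are illustrative assumptions, not the exact template from the study.

```python
from dataclasses import dataclass
from typing import List, Tuple

# An evidence triple is (subject, relation, object).
Triple = Tuple[str, str, str]


@dataclass
class CoKExemplar:
    """One in-context example: question, evidence, explanation, answer."""
    question: str
    evidence_triples: List[Triple]  # CoK-ET
    explanation: str                # CoK-EH
    answer: str


def format_triples(triples: List[Triple]) -> str:
    # Render each triple on its own numbered line, e.g. "1. (a, b, c)".
    return "\n".join(
        f"{i}. ({s}, {r}, {o})" for i, (s, r, o) in enumerate(triples, start=1)
    )


def build_cok_prompt(exemplars: List[CoKExemplar], query: str) -> str:
    """Assemble a few-shot prompt that stops where the model should continue."""
    blocks = []
    for ex in exemplars:
        blocks.append(
            f"Question: {ex.question}\n"
            f"Evidence triples:\n{format_triples(ex.evidence_triples)}\n"
            f"Explanation: {ex.explanation}\n"
            f"Answer: {ex.answer}"
        )
    # Leave the evidence section open so the model writes its own triples.
    blocks.append(f"Question: {query}\nEvidence triples:")
    return "\n\n".join(blocks)


exemplar = CoKExemplar(
    question="Is the capital of France north of the capital of Spain?",
    evidence_triples=[
        ("Paris", "is capital of", "France"),
        ("Madrid", "is capital of", "Spain"),
        ("Paris", "is north of", "Madrid"),
    ],
    explanation=(
        "Paris is the French capital, Madrid is the Spanish capital, "
        "and Paris lies north of Madrid."
    ),
    answer="yes",
)

prompt = build_cok_prompt(
    [exemplar], "Is the capital of Italy south of the capital of Germany?"
)
print(prompt)
```

Keeping the triples as structured data rather than free text also makes them easy to check against a knowledge base later.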

A key finding from the study is that text-only reasoning chains are, in themselves, not sufficient to enable LLMs to generate reliable and succinct reasoning.

Hence CoK enhances the prompt with structured data and a post-verification process.
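One way a post-verification step could feed back into prompting is a retry loop: generate triples, check them, and re-prompt with corrected facts when the check fails. The article does not spell out what happens on failure, so the loop below is an assumption. `fake_model` is a stand-in for a real LLM call, and `answer_with_verification` is a hypothetical name.

```python
from typing import Callable, List, Set, Tuple

Triple = Tuple[str, str, str]


def answer_with_verification(
    question: str,
    generate: Callable[[str], List[Triple]],
    kb: Set[Triple],
    max_rounds: int = 3,
) -> Tuple[List[Triple], int]:
    """Re-prompt until every generated triple is found in the knowledge base."""
    prompt = question
    triples: List[Triple] = []
    for round_no in range(1, max_rounds + 1):
        triples = generate(prompt)
        wrong = [t for t in triples if t not in kb]
        if not wrong:
            return triples, round_no
        # Append known facts about the subjects the model got wrong.
        subjects = {s for s, _, _ in wrong}
        hints = sorted(t for t in kb if t[0] in subjects)
        prompt = question + "\nKnown facts: " + "; ".join(" ".join(t) for t in hints)
    # Verification never passed; return the last attempt.
    return triples, max_rounds


def fake_model(prompt: str) -> List[Triple]:
    # Stand-in for an LLM call: only answers correctly once the hint is present.
    if "Madrid is capital of Spain" in prompt:
        return [("Madrid", "is capital of", "Spain")]
    return [("Madrid", "is capital of", "Portugal")]


kb = {("Madrid", "is capital of", "Spain")}
triples, rounds = answer_with_verification(
    "Which country has Madrid as its capital?", fake_model, kb
)
print(triples, rounds)
```

Capping the number of rounds matters in practice, since every retry adds inference time and tokens.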

It is widely recognised that reasoning can be modelled as induction and deduction on the existing knowledge system.

CoK can be seen as a framework to construct accurate and well-formed prompts at inference. As seen in the image above, CoK can easily be experimented with in a prompt playground by manually creating the prompt.

Conclusion

A recent study considered the ability of LLMs to generate prompts, based on pre-defined inputs and outputs. It is evident now that this approach works only in cases where a very simplistic prompt needs to be created.

CoK again goes to show that generative AI implementations making use of LLMs will demand a level of flexibility, which will invariably introduce complexity.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

Here's a fusion Retrieval Centered Generation that LlamaIndex shared on Twitter -

https://twitter.com/llama_index/status/1727127794289959396