Chain-of-Symbol Prompting (CoS) For Large Language Models

LLMs need to understand a virtual spatial environment described through natural language while planning & achieving defined goals in the environment.

Introduction

Spatial Challenges for LLMs: Conventional Chain-of-Thought prompting is effective for LLMs in general, but its performance in spatial scenarios remains largely unexplored.

LLMs & Spatial Understanding: This research investigates how LLMs perform on complex spatial understanding and planning tasks in which natural language is used to simulate virtual spatial environments.

Limitations of Current LLMs: LLMs show limitations in handling spatial relationships expressed in textual prompts. This raises the question of whether natural language is the most effective way to represent complex spatial environments.

Introducing CoS: This research proposes a method called Chain-of-Symbol Prompting (CoS) that represents spatial relationships with condensed symbols during chained intermediate thinking steps.

CoS is easy to use and does not require additional LLM training.

Performance Improvement: CoS outperforms Chain-of-Thought (CoT) Prompting in natural language across three spatial planning tasks and an existing spatial QA benchmark.

CoS achieves accuracy gains of up to 60.8 percentage points (from 31.8% to 92.6%), while also reducing the number of tokens used in prompts.
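The 60.8 figure is an absolute difference between two accuracies, so it is measured in percentage points rather than as a relative gain. Here is a quick check of the arithmetic, using only the two accuracies quoted above:

```python
# Accuracies quoted for CoT and CoS, in percent.
cot_accuracy = 31.8
cos_accuracy = 92.6

# Absolute gain, in percentage points.
absolute_gain = cos_accuracy - cot_accuracy

# Relative gain, expressed as a percentage of the CoT baseline.
relative_gain = (cos_accuracy - cot_accuracy) / cot_accuracy * 100

print(f"absolute gain: {absolute_gain:.1f} percentage points")
print(f"relative gain: {relative_gain:.1f}%")
```

This prints an absolute gain of 60.8 percentage points. Relative to the CoT baseline, the same improvement is about 191.2%.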

Considerations

Converting spatial tasks into symbolic representations may introduce added complexity and computational overhead to the process.

Additionally, CoS requires annotated demonstrations. These can be harder to obtain than natural-language chain-of-thought demonstrations or program-based reasoning approaches.

To some degree, describing a virtual spatial environment for the LLM to navigate is an extension of symbolic reasoning in LLMs.

Combining symbolic reasoning with spatial relationships is powerful, because each symbolic description is linked to its spatial representation.

The approach is clearly effective. The challenge lies in creating good CoS prompts at scale, automatically and without manual intervention or prompt scripting.

CoS

It has been shown that LLMs exhibit impressive sequential textual reasoning ability during inference. This significantly boosts their performance on reasoning questions described in natural language.

This is illustrated most clearly by Chain-of-Thought (CoT) prompting, which gave rise to a family of approaches some call Chain-of-X.

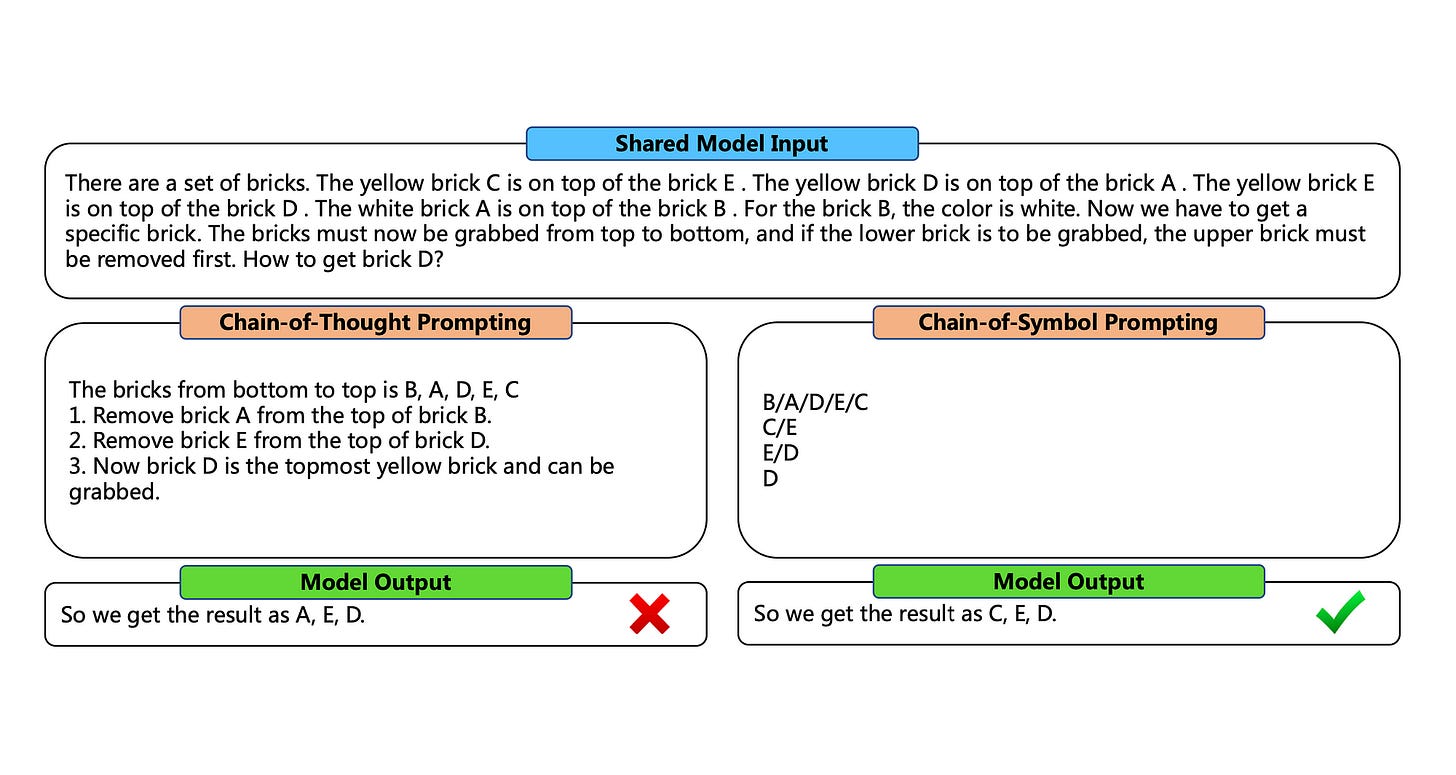

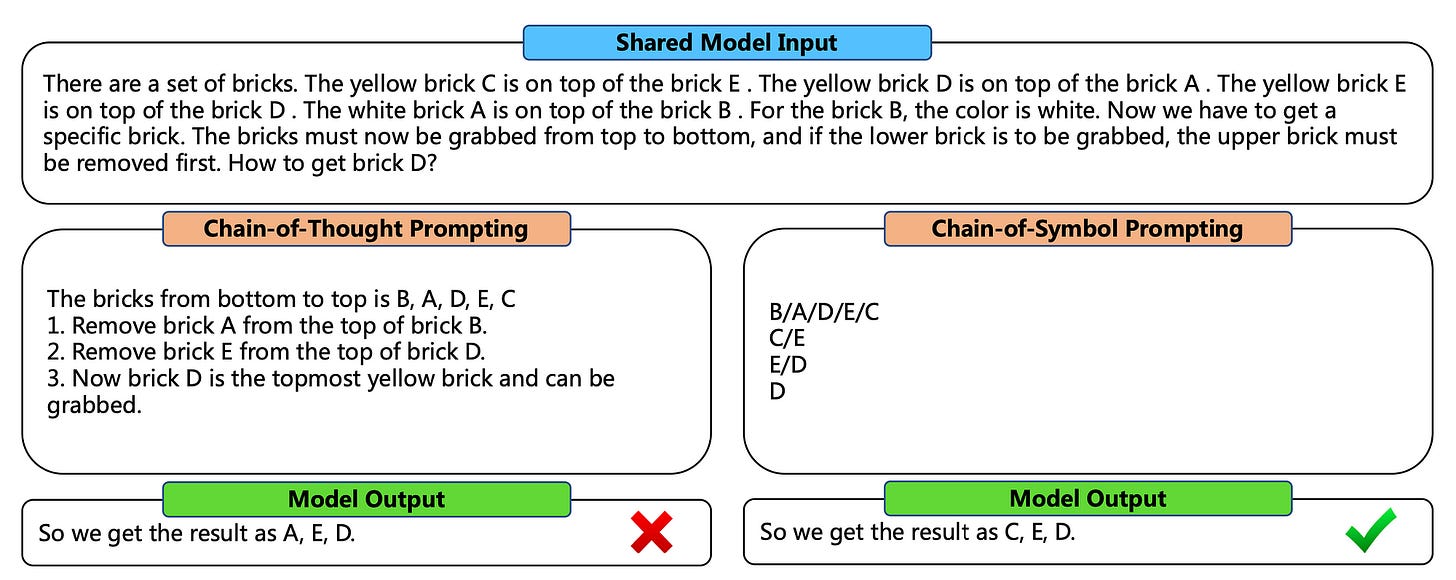

The image comparing Chain-of-Thought (CoT) and Chain-of-Symbol (CoS) illustrates how LLMs can handle complex spatial planning tasks with better performance and lower token use.

The proposed three-step procedure to create the CoS demonstrations:

Automatically prompt the LLM to generate a CoT demonstration using a zero-shot approach.

Correct the generated CoT demonstration if there are any errors.

Replace the spatial relationships described in natural language in the CoT with random symbols, keeping only the objects and symbols and removing all other descriptions.
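Step 3 is the part that actually turns a CoT demonstration into a CoS one. Below is a minimal sketch of that step only. It assumes the demonstration describes bricks with sentences such as "the brick A is on top of the brick B". The relation-to-symbol table (`RELATION_SYMBOLS`, with "/" for "is on top of") and the helper `cot_to_cos` are hypothetical illustrations, not the paper's symbol set or code. Steps 1 and 2, the zero-shot LLM call and the correction pass, are not shown.

```python
import re

# Hypothetical mapping from natural-language spatial relations to symbols.
# The symbols used in real CoS demonstrations may differ; these are illustrative.
RELATION_SYMBOLS = {
    "is on top of": "/",
    "is to the left of": "<",
    "is to the right of": ">",
}

# Matches clauses such as "the brick A is on top of the brick B".
# The optional words "the" and "brick" are consumed but not captured,
# so only the object names and the relation phrase survive.
_RELATIONS = "|".join(re.escape(r) for r in RELATION_SYMBOLS)
_PATTERN = re.compile(
    rf"(?:the\s+)?(?:brick\s+)?(\w+)\s+({_RELATIONS})\s+(?:the\s+)?(?:brick\s+)?(\w+)",
    re.IGNORECASE,
)


def cot_to_cos(cot_text: str) -> str:
    """Replace spatial relations with symbols, keeping only objects and symbols."""
    links = [
        f"{subject}{RELATION_SYMBOLS[relation.lower()]}{obj}"
        for subject, relation, obj in _PATTERN.findall(cot_text)
    ]
    return ", ".join(links)


cot = (
    "Let's look at the stack. The brick A is on top of the brick B, "
    "and the brick C is on top of the brick A."
)
print(cot_to_cos(cot))
```

Running this prints `A/B, C/A`. The filler words ("Let's look at the stack", "the brick", "and") are dropped, leaving only objects and symbols. A real pipeline would need a far more robust parser than a single regular expression, which is part of why the annotation effort mentioned under Considerations matters.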

Some advantages of CoS are:

Compared with CoT, CoS allows for more succinct and distilled procedures.

The structure of CoS makes it easier for human annotators to analyse at a glance.

CoS provides a representation of spatial relationships that is easier for LLMs to learn than natural language.

CoS reduces the number of input and output tokens.
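To make the token claim more concrete, here is a rough comparison between a natural-language CoT step and a symbolic version of the same facts. Both strings are hypothetical examples, and "/" meaning "is on top of" is an assumed convention. The `approx_tokens` helper counts words and punctuation marks separately. That is only a crude stand-in for a real LLM tokenizer, so the result shows the direction of the saving, not the figures reported for CoS.

```python
import re

# Hypothetical CoT step and a CoS rewrite of the same spatial facts.
# "/" is assumed to mean "is on top of", so "C/A/B" reads top to bottom.
cot_step = (
    "The brick A is on top of the brick B, "
    "and the brick C is on top of the brick A."
)
cos_step = "C/A/B"


def approx_tokens(text: str) -> int:
    """Crude token estimate: count word runs and individual punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


cot_tokens = approx_tokens(cot_step)
cos_tokens = approx_tokens(cos_step)
saving = 1 - cos_tokens / cot_tokens

print(f"CoT: {cot_tokens} tokens, CoS: {cos_tokens} tokens, saving: {saving:.0%}")
```

With this estimate, the CoT sentence counts as 23 tokens and the symbolic chain as 5. Punctuation is counted so that the symbols themselves are not treated as free.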

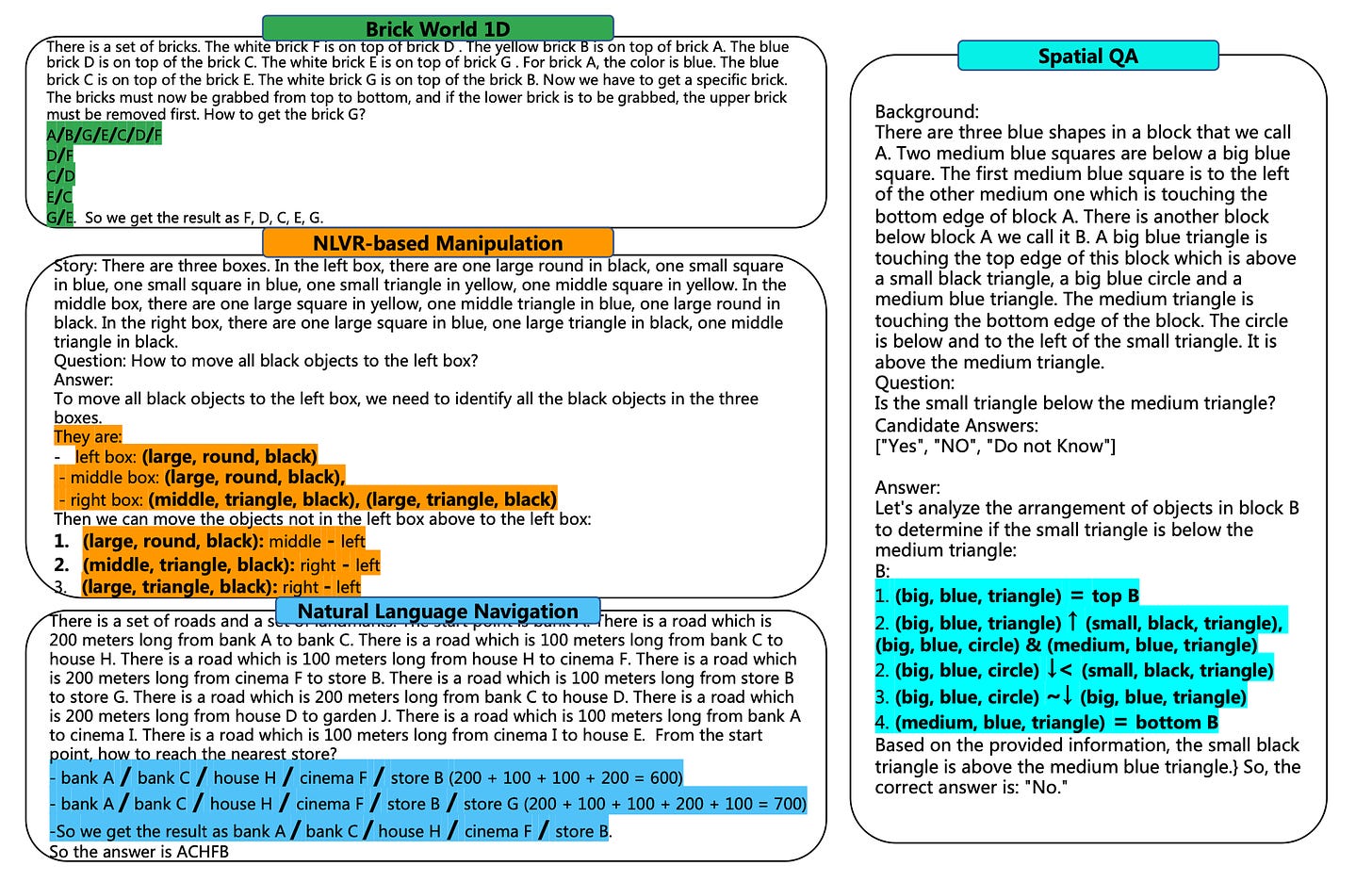

The prompt examples in the image above show the proposed tasks for Brick World, NLVR-based Manipulation (NLVR: Natural Language for Visual Reasoning), and Natural Language Navigation, with the chains of symbols highlighted.
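One intuition for why symbolic chains help in a task like Brick World is that they turn spatial reasoning into something close to a data lookup. The sketch below is illustrative only. It assumes a single stack written as a CoS chain where "X/Y" means X sits on top of Y, and a question of the form "in what order must bricks be taken to reach brick T?". The function `pickup_order` is hypothetical and not part of the paper.

```python
def pickup_order(cos_chain: str, target: str) -> list[str]:
    """Return the bricks to take, topmost first, ending with `target`.

    Assumes a single stack written top to bottom, e.g. "C/A/B" means
    C is on top of A, which is on top of B.
    """
    stack = [brick.strip() for brick in cos_chain.split("/")]
    if target not in stack:
        raise ValueError(f"brick {target!r} is not in the chain {cos_chain!r}")
    # Every brick above the target must be removed before the target itself.
    return stack[: stack.index(target) + 1]


print(pickup_order("C/A/B", "B"))
```

This prints `['C', 'A', 'B']`. Once the spatial facts are in this compact form, the answer follows from reading the chain in order rather than from re-parsing prose.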

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.