Chain-Of-Symbol Prompting To Improve Spatial Reasoning

The symbolic reasoning abilities of Large Language Models (LLMs) appears to me a largely overlooked topic.

Symbolic reasoning is similar to how models can interpret images — except, in this case, the model can “visualise” a scene based on a text-based user description.

With this capability, an LLM can create a “mental“ model & representation of an environment and provide insightful commentary or answers based on what’s been described, hence adding a new dimension to how we interact with AI.

In Short

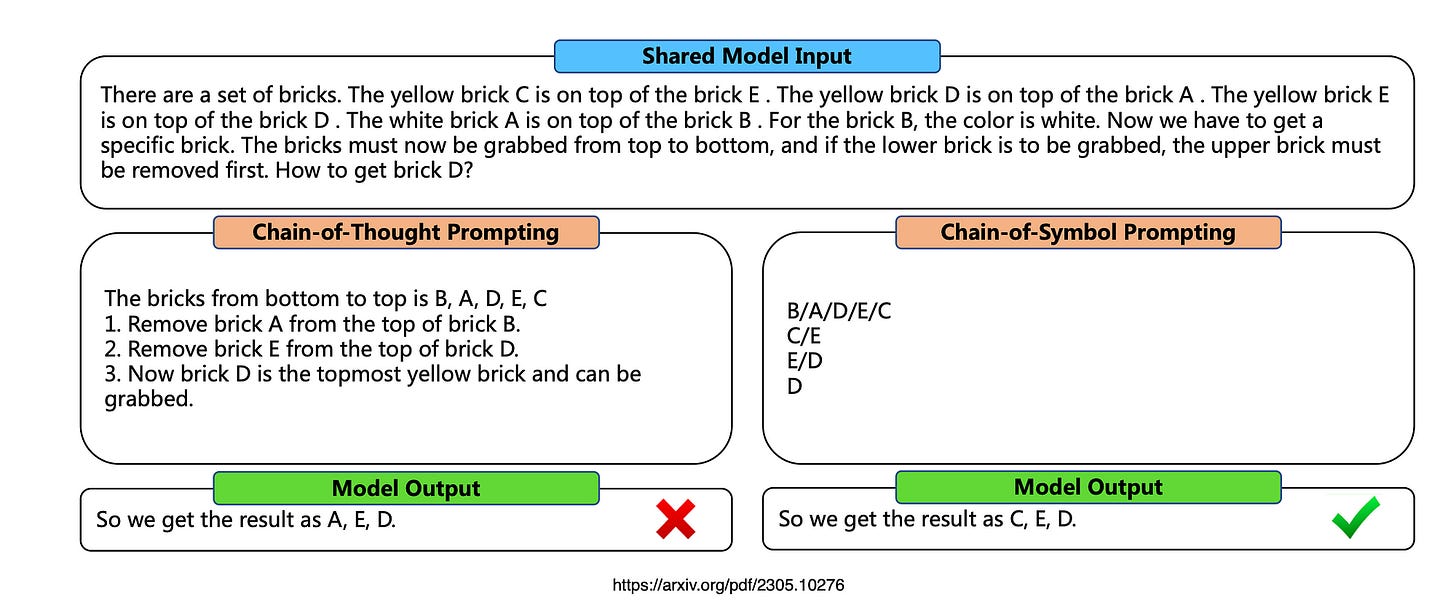

Large language models (LLMs) often struggle with complex spatial reasoning tasks. To address this, COS (Chain-of-Symbol Prompting) simplifies spatial descriptions by converting them into symbolic representations, streamlining complex concepts.

The Chain-of-Symbol (COS) method prompts large language models (LLMs) to transform complex natural language descriptions of environments into symbolic representations.

This approach significantly enhances LLM performance on spatial tasks by simplifying reasoning processes.

COS achieves impressive gains in accuracy while reducing token consumption, making it a more efficient method for handling intricate spatial planning tasks compared to traditional techniques. The use of symbols helps streamline input, allowing LLMs to process information more effectively without losing essential details.

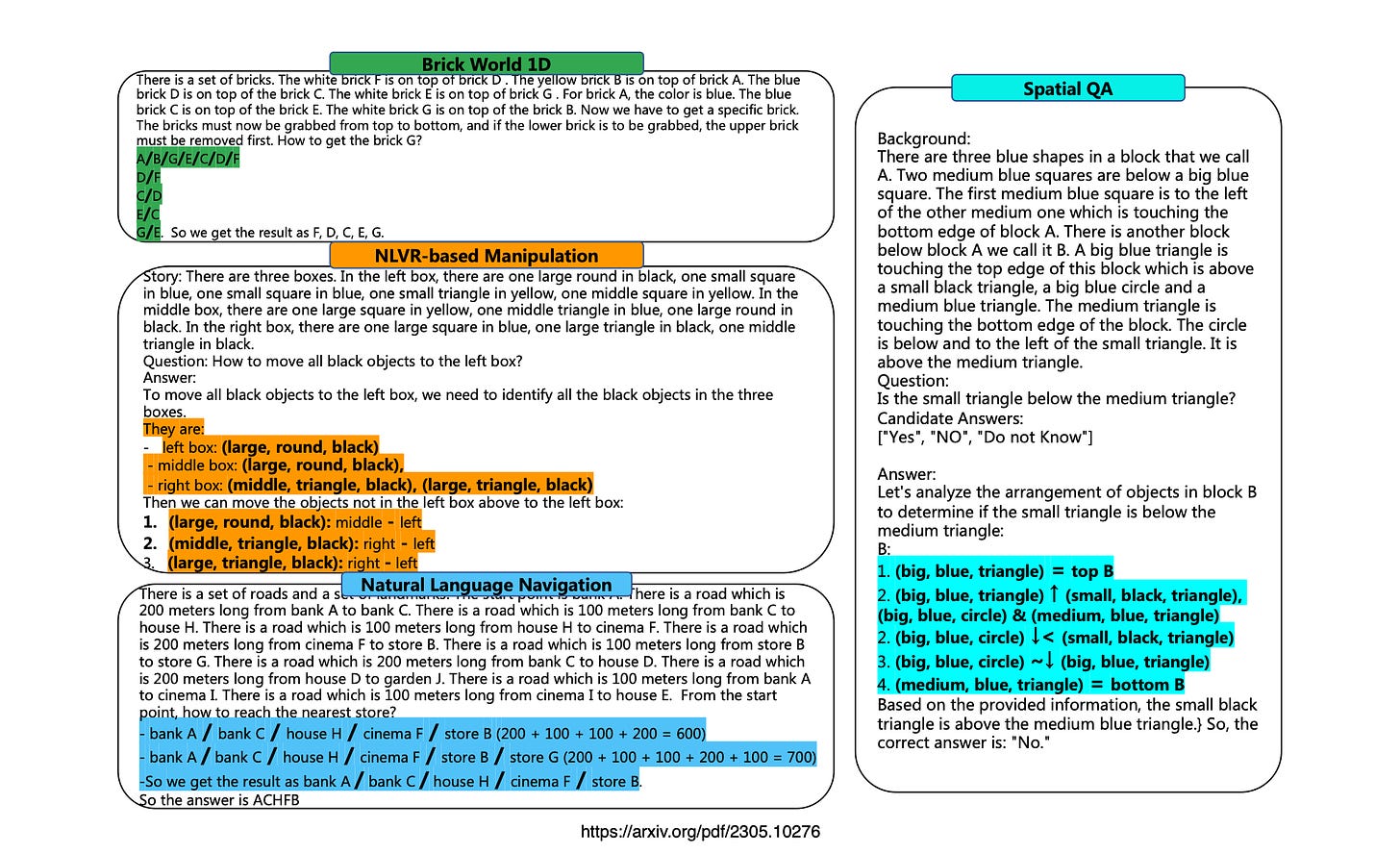

NLVR-based Manipulation refers to tasks that involve reasoning over natural language and visual relationships. NLVR stands for Natural Language for Visual Reasoning

Saving tokens for prompting refers to a technique that optimises the input size for large language models (LLMs) by using more concise representations, like symbols, instead of full-text chains of thought.

Some Examples

For example, when tasked with answering questions about the colours of objects on a surface, the LLM must track both the relative and absolute positions of each item, as well as their colours.

Consider this scenario:

I have a chair, two potatoes, a cauliflower, a head of lettuce, two tables, a cabbage, two onions, and three fridges. How many vegetables do I have?

The LLM should first parse this input into a dictionary, identifying entities and their corresponding quantities while filtering out non-vegetable items.

The final answer would be the sum of the values from this dictionary, illustrating the model’s reasoning capabilities.

LLMs need to understand a virtual spatial environment described through natural language while planning & achieving defined goals in the environment.

Some Considerations

Redundant natural language and irrelevant information also can hamper the performance of LLMs.

Using symbols to represent spatial relationships in word sequences can provide a clearer and more understandable framework for large language models (LLMs).

This method potentially simplifies data processing for the models.

Researchers are exploring the use of symbols in prompting LLMs, highlighting its importance as it extends beyond traditional language comprehension. This under-explored area offers valuable insights into enhancing LLM capabilities and understanding.

Using a set of symbols to represent spatial relationships between objects in complex environments.

To examine the impact of symbolic representations in prompting, researchers focus on complex spatial understanding and planning tasks.

These tasks require large language models (LLMs) to interpret virtual spatial environments described in natural language, while also planning and achieving specific objectives within those settings.

This approach helps assess how effectively LLMs can navigate and manipulate spatial information.

GPT-4o-mini Example of Basis Spatial Reasoning

The model is posed with the following question:

Answer this question: I have a chair, two potatoes, a cauliflower, a lettuce head, two tables, a cabbage, two onions, and three fridges. How many vegetables do I have?

Symbolic reasoning involves reasoning about objects, their relation to each-other and concepts.

And The Model Response:

The question lists several items, but only some of them are vegetables. Here’s the breakdown:

Potatoes: 2 (vegetable)Cauliflower: 1 (vegetable)Lettuce head: 1 (vegetable)Cabbage: 1 (vegetable)Onions: 2 (vegetable)

The other items (chair, tables, and fridges) are not vegetables.

Total number of vegetables: 2 + 1 + 1 + 1 + 2 = 7.

So, you have 7 vegetables.

Spatial Reasoning

Spatial reasoning in natural language processing focuses on understanding and processing spatial information within text. Some researchers have explored methods for training models on navigation tasks that require agents to reach specific locations based on textual descriptions.

Others have developed tasks where models generate instructions from “before” and “after” image pairs or created benchmarks for answering spatial questions about environments. Additionally, it has been observed that large language models struggle with text-based games that involve multi-step reasoning.

Navigation & Path Planning

Language grounding navigation involves an intelligent agent interpreting the visual environment and providing users with natural language instructions to reach a specific location.

Path planning requires the agent to determine its route to achieve goals, such as finding the shortest path or optimising cleaning tasks, often using reinforcement learning.

These topics are closely related to spatial planning and can be represented through symbolic representations.

In Conclusion

This paper explores how well Large Language Models (LLMs) perform in complex planning tasks that require understanding virtual spatial environments described in natural language.

The findings indicate that current LLMs struggle with spatial relationships in text.

This raises a crucial question: Is natural language the most effective way to represent complex spatial environments, or would symbolic representations be more efficient?

To address this, the authors introduce COS (Chain-of-Symbol Prompting), which uses condensed symbols for spatial relationships during reasoning steps.

COS significantly outperforms traditional methods, achieving higher accuracy while reducing input token usage.

Notably, a greater understanding of abstract symbols was observed as model sizes increased.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.