ChatGPT Models, Structure & Input Formats

To create your own ChatGPT-like service, it is important to understand how ChatGPT is built and how to implement its various components.

For Starters

ChatGPT is not a Large Language Model (LLM) per se, nor is it a development interface; it is a conversational service hosted and made available by OpenAI.

In short, the OpenAI ChatGPT service is an LLM-based conversational UI, accessed via a browser, which automatically manages conversational memory and dialog state.

ChatGPT Plus and ChatGPT Plugins are clear indications that OpenAI intends to build ChatGPT out as a product which extends into service offerings.

Two key principles which ChatGPT Plus and Plugins are mastering are:

Synthesis of data. ChatGPT can extract data from various sources. For instance, existing data in the LLM together with data extracted from the web can be combined and synthesised. Data from various sources need to be synthesised into coherent and succinct dialog turns within a larger conversation.

Calibration. The interface needs to know where to extract data from and what data to prioritise.

ChatGPT Plugins

Plugins are a natural progression for ChatGPT: they extend it into products and services so that it can execute user requests.

OpenAI plugins connect ChatGPT to third-party applications. These plugins enable ChatGPT to interact with APIs defined by developers, enhancing ChatGPT’s capabilities and allowing it to perform a wide range of actions.

ChatGPT Plugins are likely to grow in both the number of supported services and their granularity. The plugins concept has been described by some as the App Store of OpenAI or ChatGPT.

ChatGPT Structure

With the launch of the ChatGPT API, developers expected a complete and managed conversational interface, which was not the case.

As seen in the image below, the ChatGPT API gives access to the LLM models (4) used for ChatGPT, which are listed below. However, for components 1, 2 and 3, functionality will have to be developed for any home-grown solution.

The functionality for component three (data synthesis and calibration) will most probably be the hardest to develop to a level that matches what OpenAI has built.

Read more about managing conversation context memory and dialog state here.
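As a rough illustration of what managing conversational memory can involve, the sketch below keeps a system message plus as many of the most recent turns as fit a budget. The ConversationMemory class, its method names and the word-count budget are assumptions made for illustration (a real service would count tokens with a tokenizer), and this is not how OpenAI implements ChatGPT.

```python
from typing import Dict, List

Message = Dict[str, str]


class ConversationMemory:
    """Keeps the system message plus the most recent turns within a budget."""

    def __init__(self, system_prompt: str, max_words: int = 200) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.turns: List[Message] = []
        self.max_words = max_words  # crude stand-in for a real token limit

    def add(self, role: str, content: str) -> None:
        """Record one dialog turn."""
        self.turns.append({"role": role, "content": content})

    def messages(self) -> List[Message]:
        """Return the system message followed by the newest turns that fit."""
        kept: List[Message] = []
        used = len(self.system["content"].split())
        # Walk backwards so the most recent turns are considered first.
        for turn in reversed(self.turns):
            cost = len(turn["content"].split())
            if used + cost > self.max_words:
                break
            kept.append(turn)
            used += cost
        return [self.system] + list(reversed(kept))


memory = ConversationMemory("You are a helpful assistant.", max_words=12)
memory.add("user", "How are you?")
memory.add("assistant", "I am doing well")
memory.add("user", "What is ChatML?")
print(memory.messages())
```

With the small budget used here, the oldest user turn no longer fits and is dropped, while the system message is always retained. The list returned by messages() has the same role and content structure that the chat models expect as input.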

ChatGPT Models

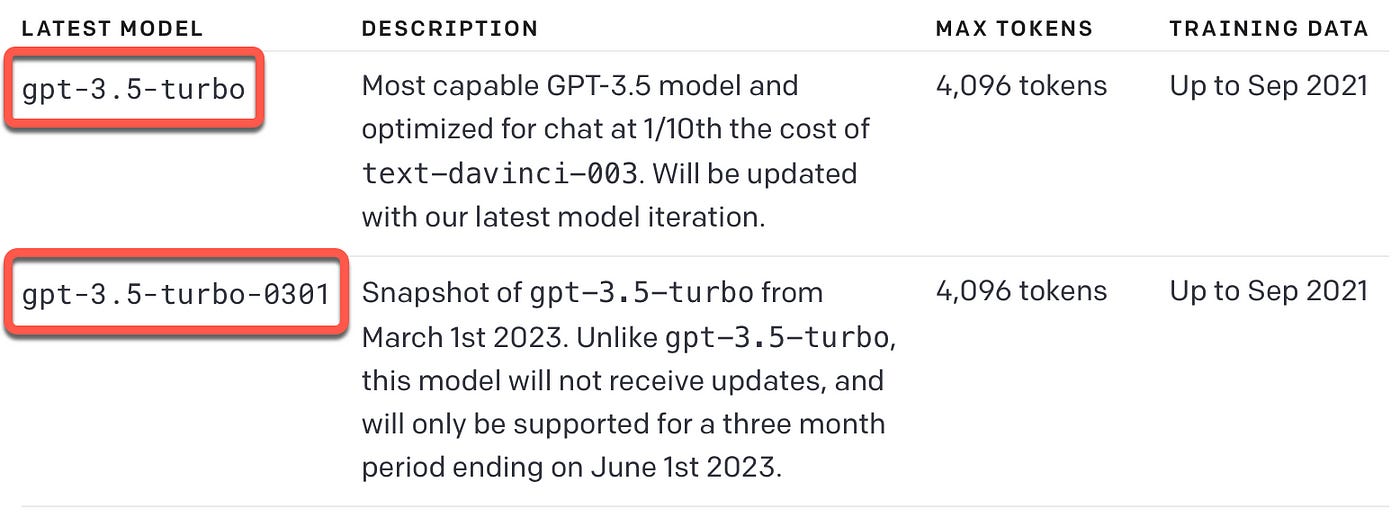

ChatGPT is based on two of OpenAI’s most powerful models: gpt-3.5-turbo and gpt-4.

gpt-3.5-turbo belongs to a collection of models which improves on gpt-3 and can understand and generate natural language or code. Below is more information on the two gpt-3 models:

It needs to be noted that gpt-4 is a set of models which again improves on GPT-3.5 and can understand and generate natural language or code. GPT-4 is currently in a limited beta and only accessible to those who have been granted access.

Input Formats

You can build your own applications with gpt-3.5-turbo or gpt-4 using the OpenAI API, by making use of the example code below to get started.

Notice the format of the input data. You can read more about Chat Markup Language (ChatML) here.
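Before the API request itself, a small sketch of what ChatML-style text looks like may help. The to_chatml function below is a hypothetical helper written for illustration, based on the <|im_start|> and <|im_end|> markers described in OpenAI's ChatML documentation. How the service serialises messages internally is an assumption, and the API itself is called with the list of role and content messages, not with this raw text.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages as ChatML-style text (illustrative only)."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>" for m in messages
    ]
    # A trailing open assistant turn marks where the model's reply would begin.
    parts.append("<|im_start|>assistant")
    return "\n".join(parts)


example = [
    {"role": "system", "content": "Answer as concisely as possible."},
    {"role": "user", "content": "How are you?"},
]
print(to_chatml(example))
```

Each message becomes one delimited block, which is why every entry in the input list carries both a role and its content.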

```shell
pip install "openai<1.0"
```

```python
import os

import openai

# openai.ChatCompletion is the interface of openai Python library versions before 1.0.
# Read the API key from the environment rather than hard-coding it.
openai.api_key = os.getenv("OPENAI_API_KEY")

completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        # The system message sets the assistant's behaviour.
        {"role": "system", "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.\nKnowledge cutoff: 2021-09-01\nCurrent date: 2023-03-02"},
        # Earlier turns are sent along with the new question.
        {"role": "user", "content": "How are you?"},
        {"role": "assistant", "content": "I am doing well"},
        {"role": "user", "content": "How long does light take to travel from the sun to the earth?"},
    ],
)

print(completion)
```

Below is the response or output from the gpt-3.5-turbo model:

```json
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "It takes about 8 minutes and 20 seconds for light to travel from the sun to the earth.",
        "role": "assistant"
      }
    }
  ],
  "created": 1678039126,
  "id": "chatcmpl-6qmv8IVkCclnlsF5ODqlnx9v9Wm3X",
  "model": "gpt-3.5-turbo-0301",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 23,
    "prompt_tokens": 89,
    "total_tokens": 112
  }
}
```

The definitions of the response object fields are:

id: the ID of the request

object: the type of object returned (e.g., chat.completion)

created: the timestamp of the request

model: the full name of the model used to generate the response

usage: the number of tokens used to generate the replies, counting prompt, completion, and total

choices: a list of completion objects (only one, unless you set n greater than 1)

message: the message object generated by the model, with role and content

finish_reason: the reason the model stopped generating text (either stop, or length if the max_tokens limit was reached)

index: the index of the completion in the list of choices
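To show how these fields might be read in practice, the sketch below works on a trimmed copy of the response shown above, held as a plain Python dict. The summarise function and the keys of the summary it returns are hypothetical names chosen for illustration.

```python
# A trimmed copy of the response shown above, as a plain Python dict.
response = {
    "choices": [
        {
            "finish_reason": "stop",
            "index": 0,
            "message": {
                "content": "It takes about 8 minutes and 20 seconds for light "
                           "to travel from the sun to the earth.",
                "role": "assistant",
            },
        }
    ],
    "model": "gpt-3.5-turbo-0301",
    "usage": {"completion_tokens": 23, "prompt_tokens": 89, "total_tokens": 112},
}


def summarise(resp: dict) -> dict:
    """Pull out the reply text, whether it was cut off, and the token count."""
    first = resp["choices"][0]
    return {
        "reply": first["message"]["content"],
        # finish_reason is "length" when the max_tokens limit ended the reply.
        "truncated": first["finish_reason"] == "length",
        "total_tokens": resp["usage"]["total_tokens"],
    }


summary = summarise(response)
print(summary["reply"])
print(summary["truncated"], summary["total_tokens"])
```

Checking finish_reason before using the reply is a simple way to detect answers that were cut short, and the usage figures are what a home-grown service would track to stay within a context or cost budget.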

Finally

In addition to the lack of context management, OpenAI also states that gpt-3.5-turbo-0301 does not pay strong attention to the system message, so important instructions are often better placed in a user message.
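One possible way to act on this is to repeat a key instruction at the start of the latest user message. The reinforce_in_user_message helper below is a hypothetical sketch of that idea, not an approach prescribed by OpenAI.

```python
from typing import Dict, List


def reinforce_in_user_message(
    messages: List[Dict[str, str]], instruction: str
) -> List[Dict[str, str]]:
    """Return a copy where the last user message starts with the instruction."""
    updated = [dict(m) for m in messages]  # copy so the input is not mutated
    for message in reversed(updated):
        if message["role"] == "user":
            message["content"] = f"{instruction}\n\n{message['content']}"
            break
    return updated


conversation = [
    {"role": "system", "content": "Answer as concisely as possible."},
    {"role": "user", "content": "How are you?"},
]
reinforced = reinforce_in_user_message(conversation, "Answer in one sentence.")
print(reinforced[-1]["content"])
```

The system message is left in place, so the instruction is simply stated twice: once where the model is meant to read it, and once where this model version is more likely to follow it.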

If model-generated output is unsatisfactory, iteration and experimentation are required to yield improvements. For instance:

Making the instructions more explicit

Specifying the answer format

Asking the model to think step by step or sequentially
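The three suggestions above can be combined in a small prompt-building helper. The refine_prompt function and the exact wording it adds are assumptions made for illustration, and the phrasing that works best will still need to be found by experimenting.

```python
def refine_prompt(question: str, answer_format: str, step_by_step: bool = True) -> str:
    """Combine a question with an explicit format and an optional reasoning cue."""
    lines = [question.strip(), f"Answer format: {answer_format}"]
    if step_by_step:
        lines.append("Think step by step before giving the final answer.")
    return "\n".join(lines)


prompt = refine_prompt(
    "How long does light take to travel from the sun to the earth?",
    "a single sentence giving minutes and seconds",
)
print(prompt)
```

The resulting string can be sent as the content of a user message, and each part can be varied independently while comparing the model's output.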

Thirdly, and lastly, no fine-tuning is available for gpt-3.5-turbo models; only base GPT-3 models can be fine-tuned.


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.