Cohere Coral

Coral is an LLM-based chat user interface from Cohere that competes with products like OpenAI's ChatGPT and Hugging Face's HuggingChat…

Taking A Step Back

ChatGPT has become shorthand for Large Language Model (LLM) functionality in general. To understand which category Coral falls into, it helps to briefly review the three categories of LLM services.

Raw Models

There are a number of openly available LLMs, such as BLOOM, Meta's Llama 2, Falcon LLM and others. These models are free to download and use, subject to their respective licences.

However, getting these models into an operational state involves a number of considerations, such as hosting, training and day-to-day operations.

LLM APIs

Most users access LLMs via API endpoints provided by companies such as OpenAI, Cohere and AI21 Labs, to mention a few.

In general, each endpoint targets a specific mode; in OpenAI's case, these are the assistant, chat, edit and completion modes.

Below is an example of calling the OpenAI chat completions endpoint.

```bash
# Send a single user message to the Chat Completions endpoint (requires OPENAI_API_KEY).
curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Say this is a test!"}],
    "temperature": 0.7
  }'
```

However, developers have no insight into what happens under the hood when an API is called. OpenAI has introduced model fingerprint functionality (the `system_fingerprint` field in API responses) so that users can track changes to the underlying model configuration.

Generative AI apps, also referred to as GenApps, are built on these APIs: a single LLM (accessed via an API) or multiple LLMs act as the backbone of each app.

User Interfaces

The major LLM providers have branched into two offerings: APIs and end-user UIs. Examples of end-user UIs include HuggingChat, ChatGPT (probably the best-known UI) and now Coral from Cohere.

These UIs focus on a no-code, configurable, easy-to-access and customisable user experience.

As the standard functionality offered by LLM providers grows, companies developing third-party LLM UIs are at risk of being superseded, especially if their product lacks true differentiation and consists of only a very thin layer of IP and added value.

Back To Coral

Grounding

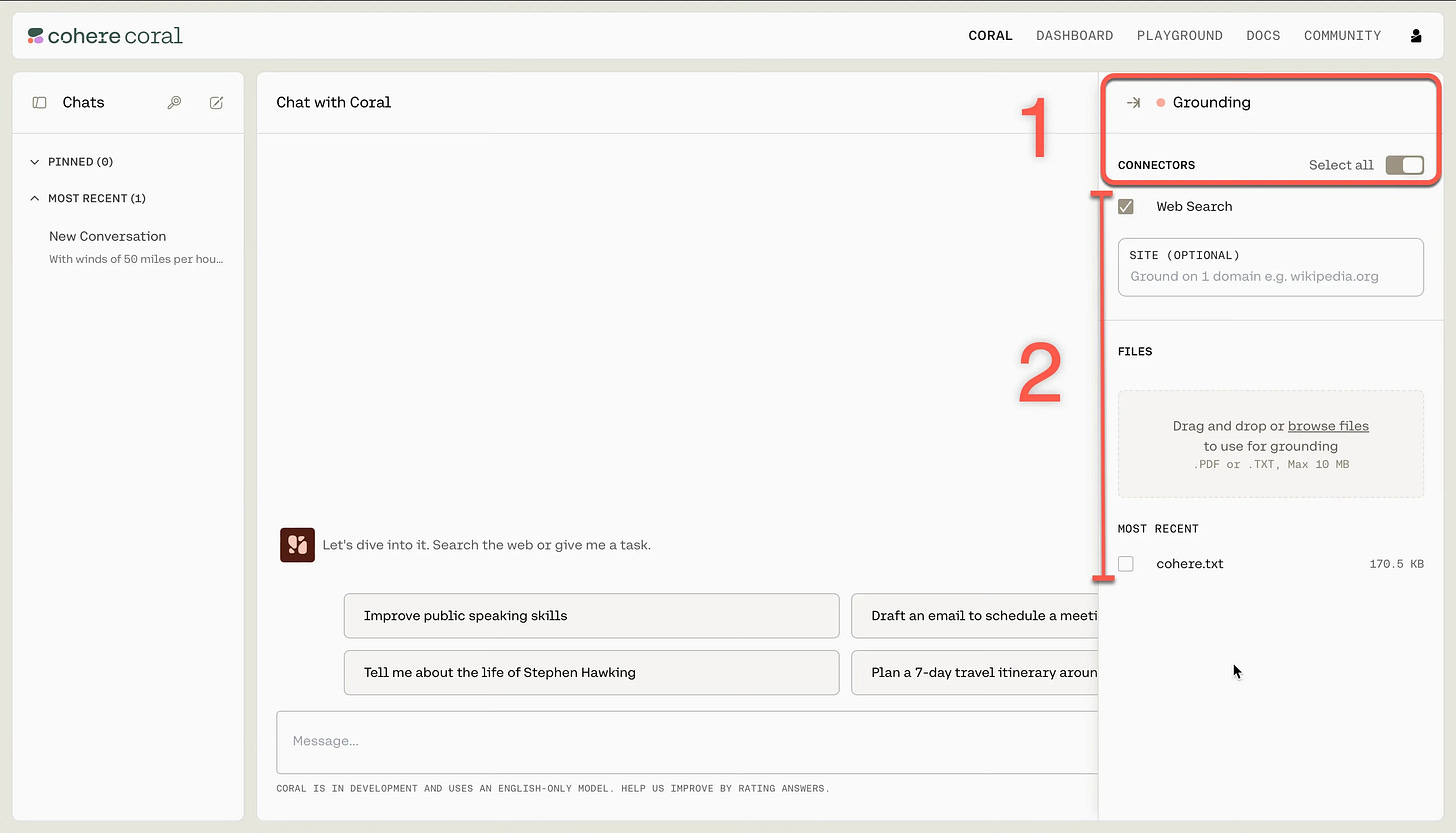

In the right-hand pane, Coral has a feature called GROUNDING, which can be toggled on or off. In the image below, GROUNDING is set to off.

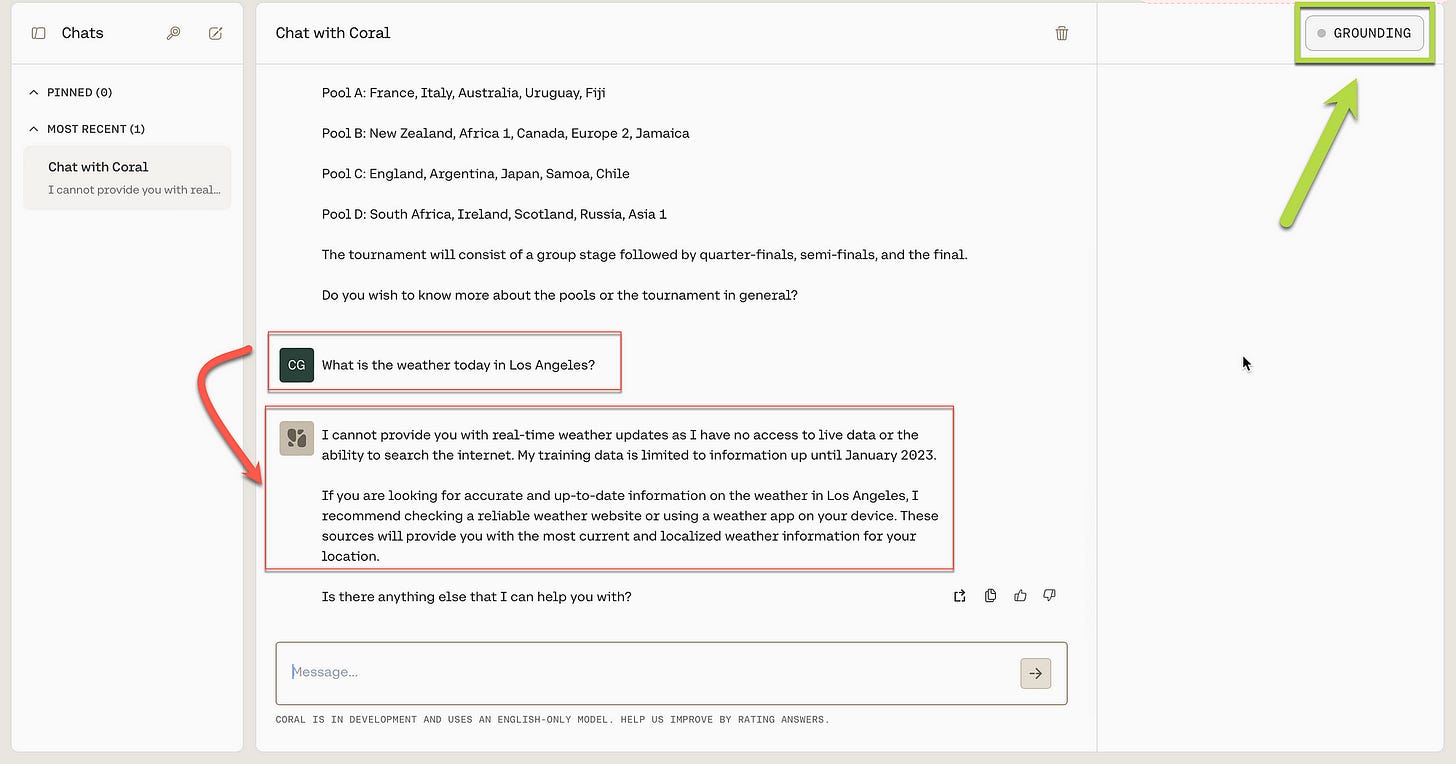

As seen below, when a highly time-sensitive question is asked, Coral responds appropriately, stating that the model's training cut-off date is January 2023.

When grounding is switched off, the conversation is 100% based on the underlying LLM or LLMs.

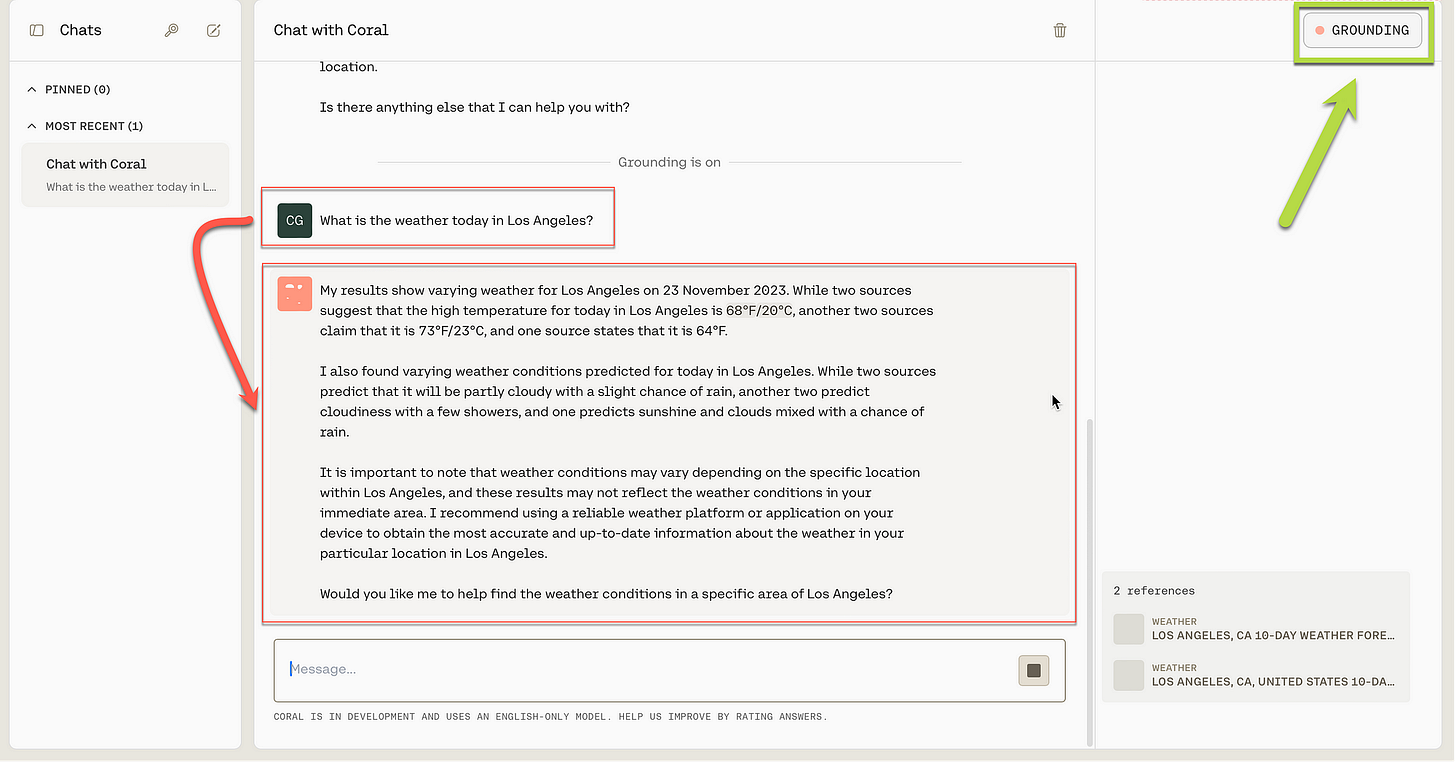

When grounding is switched on (see the green arrow in the image below), Coral automatically searches the web and lists the two sources it used to answer the weather-related question.

In the next example, shown in the image below, Coral referenced five web sources to answer the question. There is quite a bit happening under the hood here: Coral extracts information from various sources on the web, synthesises the relevant information, and presents the result via natural language generation (NLG) in a coherent and succinct manner.

RAG — Retrieval Augmented Generation

The principle of RAG is fairly straightforward: a text snippet of highly contextual information is retrieved and injected into the prompt at inference time.

This enables in-context learning, and the contextual reference helps reduce hallucination and improve the relevance of the response.

A RAG implementation can become very complicated and technical, but the simplest form is merely uploading a document that the platform references during inference.

As seen below, a text document with highly contextual facts is uploaded. When a question relevant to the file is asked, Coral references the data file, without going to the web.
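To make the retrieve-then-inject idea concrete, here is a minimal sketch in the same shell style as the curl example. It is not how Coral works internally: the helper names `retrieve_snippet` and `build_prompt` are hypothetical, and simple keyword overlap stands in for the embedding-based search a real RAG system would typically use. It picks the line of an uploaded text file that shares the most words with the question and places it in the prompt that would then be sent to the LLM.

```bash
#!/usr/bin/env bash
# Minimal RAG sketch (hypothetical helpers, not Coral's implementation).
# Keyword overlap stands in for the embedding search a real system would use.

# retrieve_snippet FILE QUESTION
# Prints the non-empty line of FILE sharing the most distinct words with QUESTION.
retrieve_snippet() {
  awk -v q="$2" '
    BEGIN {
      # Lowercase the question and store its words as a set.
      n = split(tolower(q), qw, /[^a-z0-9]+/)
      for (i = 1; i <= n; i++) if (qw[i] != "") want[qw[i]] = 1
      best = -1
    }
    NF {
      # Score this line by the number of distinct question words it contains.
      m = split(tolower($0), lw, /[^a-z0-9]+/)
      split("", seen)   # portable way to clear an array
      score = 0
      for (j = 1; j <= m; j++)
        if ((lw[j] in want) && !(lw[j] in seen)) { seen[lw[j]] = 1; score++ }
      # Keep the first line with the highest score.
      if (score > best) { best = score; keep = $0 }
    }
    END { print keep }
  ' "$1"
}

# build_prompt FILE QUESTION
# Injects the retrieved snippet into the prompt that would be sent to the LLM.
build_prompt() {
  local snippet
  snippet=$(retrieve_snippet "$1" "$2")
  printf 'Answer using only the context below.\n\nContext: %s\n\nQuestion: %s\n' \
    "$snippet" "$2"
}
```

For example, if `notes.txt` contains the line `Acme was founded in 2019 in Cape Town.`, then `build_prompt notes.txt "When was Acme founded?"` places that line in the Context section of the prompt. Production systems usually split documents into chunks and rank them by vector similarity, but the principle of injecting retrieved text at inference time is the same.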

Coral also offers follow-up options, such as quick-reply buttons and suggested next questions.

Tools

Where the OpenAI Assistants API refers to helper functions as tools, Cohere chose the term Connectors. As seen below, there are currently two Connectors: Web Search and Files (RAG).

Coral performs the orchestration automatically, seamlessly choosing one or more Connectors. In this sense, Coral has the feel of an agent with access to various tools.

In the image below, the two connectors are marked one (Web Search) and two (Files/RAG).
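Coral decides for itself which Connectors to call, and Cohere has not published how that decision is made. The sketch below is a deliberately simple, hypothetical stand-in that combines the GROUNDING toggle with the two Connectors: the function name `choose_connectors`, its arguments, the keyword list and the identifiers `files` and `web-search` are all illustrative assumptions. Coral's real orchestration is presumably model-driven rather than keyword-based.

```bash
#!/usr/bin/env bash
# Toy connector router: a hypothetical keyword heuristic, NOT Cohere's
# orchestration logic, which is not publicly documented.

# choose_connectors GROUNDING HAS_FILES QUESTION
#   GROUNDING: "on" or "off"; HAS_FILES: "yes" or "no".
# Prints the chosen connectors, space-separated, or "none".
choose_connectors() {
  local grounding="$1" has_files="$2" question="$3"
  local -a picked=()

  # Grounding off: the answer comes from the underlying LLM alone.
  if [ "$grounding" != "on" ]; then
    echo "none"
    return 0
  fi

  # An uploaded file is consulted via the Files (RAG) connector.
  if [ "$has_files" = "yes" ]; then
    picked+=("files")
  fi

  # Use Web Search when no file is attached or the question looks time-sensitive.
  if [ "$has_files" != "yes" ] ||
     printf '%s\n' "$question" | grep -qiwE 'today|now|current|latest|news|weather'; then
    picked+=("web-search")
  fi

  echo "${picked[*]}"
}
```

For example, `choose_connectors on yes "What is the latest news on this topic?"` prints `files web-search`, mirroring how Coral can combine both Connectors in a single answer, while `choose_connectors off yes "..."` prints `none` because the response is then based purely on the LLM.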

I imagine that going forward, Cohere will introduce more Connectors to Coral. Enterprise customers might lead the charge by developing custom Connectors.

In Closing

Coral can read multiple sources to answer questions as truthfully as possible, while citing sources should you want to check accuracy.

Cohere warns that Coral may be worse at answering creative questions when grounded.

Coral is an ideal tool for Cohere to serve the needs of enterprise customers. In future, Coral could also become a paid subscription or premium service with a monthly fee.

Cohere sees the key features of Coral as follows:

Conversational: With conversation as its primary interface, Coral understands the intent behind conversations, and holds conversational context.

Customisable: Users can augment Coral’s knowledge base through data connections.

Grounded: To help verify generations, Coral can produce responses with citations from relevant data sources.

Privacy: The data used for prompting and the chatbot’s outputs do not leave a company’s data perimeter. Coral is cloud-agnostic and will support deployment on any cloud.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.