Complete AI Productivity Suite

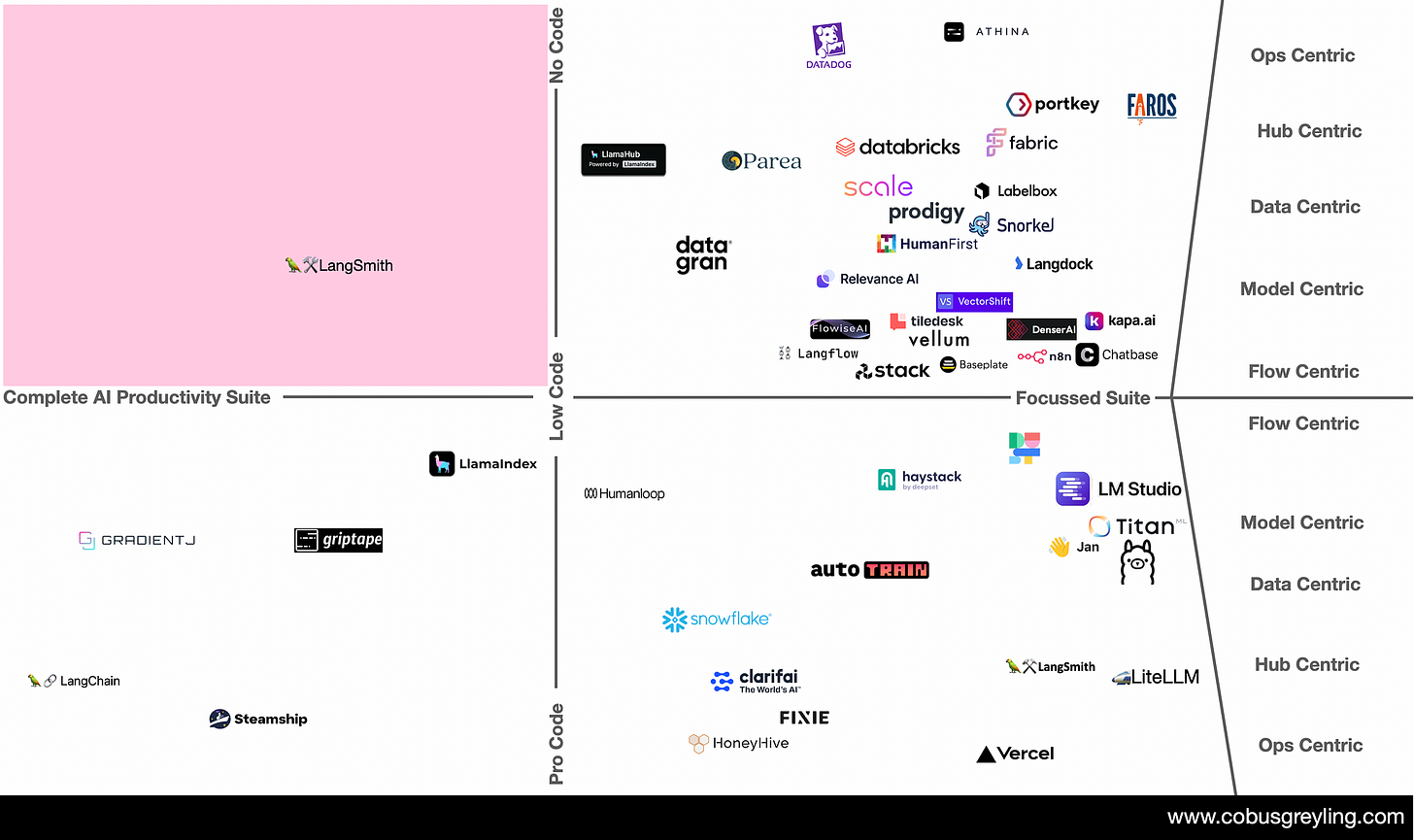

I’m trying to define what a complete AI productivity suite comprises and what the market landscape looks like.

Introduction

This image represents my initial endeavour to outline the components necessary for a comprehensive AI productivity suite.

I invite any constructive feedback on how to refine our understanding of what such a suite entails, including its various components and functionalities.

Additionally, I welcome insights and suggestions for enhancing the product landscape or refining the chart axes. Your input is invaluable in shaping the development of this market map.

Caveat

As you read further, please keep in mind how difficult it is to sort intricate platforms into a limited number of categories.

The intention isn’t to undervalue any platform but rather to establish a framework for reference, bearing in mind that these platforms continually evolve and broaden in functionality.

Complete AI Productivity Suite

A complete AI productivity suite comprises all the aspects mentioned in the table. Note that a complete suite will not have the depth and focus of products which choose to address a single aspect of the market.

Some aspects of the LLM/Generative AI market are also overrepresented; for instance, flow builders are in abundance and still increasing in number, while data is only recently receiving the attention it requires.

Ops Centric

Large Language Model Ops (LLMOps) is a multifaceted discipline that addresses the complex operational challenges associated with deploying, managing, and maintaining large language models in production environments.

By employing best practices, advanced techniques, and specialised tools, LLMOps ensures the efficient, reliable, and secure operation of LLMs to deliver value to organisations and end-users alike. Ops-centric frameworks ensure optimal performance, scalability, reliability, and security, whereas hubs focus more on the application side.

Hub Centric

Hubs are platforms for building LLM & Generative AI applications. Hubs allow for the aggregation and orchestration of functionality to debug, test, evaluate, and monitor chains, intelligent agents and generative AI applications built on any LLMs or in some cases other frameworks.

Hubs often allow for different data connectors, LLM hosting and access to a number of models, together with tracing and monitoring.

Data Centric

This category focusses on the data-related processes around LLMs. Vector database and RAG-specific solutions have been excluded and form part of the chart below.

Data-centric solutions focus on data discovery: the process of exploring unstructured data and creating clusters of semantic similarity.

They also cover data design, where data is structured and formatted in the optimal way for fine-tuning or RAG, and lastly data development, where data goes through a data safety and governance process and relevant data is augmented for fine-tuning. Data annotation, RLHF and weak supervision form part of this.
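To make the data discovery step more concrete, here is a minimal sketch of grouping unstructured utterances by similarity. It is an illustration only: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the function names, the greedy clustering approach and the `threshold` value are assumptions of mine, not how any listed product works.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster(texts, threshold=0.3):
    """Greedy clustering: a text joins the first cluster whose seed is similar enough."""
    clusters = []  # each entry is (seed_vector, member_texts)
    for text in texts:
        vec = embed(text)
        for seed, members in clusters:
            if cosine(seed, vec) >= threshold:
                members.append(text)
                break
        else:
            # No existing cluster was close enough, so start a new one.
            clusters.append((vec, [text]))
    return [members for _, members in clusters]


utterances = [
    "I want to reset my password",
    "How do I reset my password",
    "Where is my order",
    "Track my order please",
]
groups = cluster(utterances)
for group in groups:
    print(group)
```

With real embeddings the same idea surfaces clusters of semantically similar text, which can then feed the design and development steps.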

Model Centric

Model-centric platforms range from the basic approach of allowing multiple model access, to orchestrating different LLMs within a flow. Included in this category are inference servers, especially for local inference of Small Language Models.

In some instances these products give access to hosted open-source models. The products listed are focussed on model access, hosting etc.
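As a rough illustration of what orchestrating different models can mean, the sketch below routes each prompt to one of two models. Everything here is hypothetical: the two model functions are stubs rather than real APIs, and routing on word count is just one simple rule chosen for the example.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


def small_local_model(prompt: str) -> str:
    """Hypothetical stub for a locally hosted Small Language Model."""
    return f"[small] {prompt}"


def large_hosted_model(prompt: str) -> str:
    """Hypothetical stub for a larger hosted LLM."""
    return f"[large] {prompt}"


MODELS: Dict[str, ModelFn] = {
    "small": small_local_model,
    "large": large_hosted_model,
}


def route(prompt: str, max_small_words: int = 12) -> str:
    """Pick a model name; short prompts go to the small model (an assumed rule)."""
    return "small" if len(prompt.split()) <= max_small_words else "large"


def generate(prompt: str) -> str:
    """Send the prompt to whichever model the router selects."""
    return MODELS[route(prompt)](prompt)


print(generate("Hi there"))
```

In practice the routing rule could depend on cost, latency or task type instead of prompt length.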

Larger cloud environments like HuggingFace, OpenAI, Cohere, Goose AI, AWS, Google, Azure & the like were excluded.

Flow Centric

This category is focussed on building flows which can be used to create conversational UIs, RPA processes, pipelines, prompt chaining or agents. Flows can incorporate RAG functionality or related knowledge base capabilities, and multiple LLMs can be orchestrated.
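Prompt chaining, one of the flow types mentioned above, can be sketched in a few lines: the output of one prompt becomes the input of the next. This is a minimal sketch under stated assumptions; `fake_llm`, `make_step` and `run_flow` are hypothetical names, and `fake_llm` only echoes its prompt so the example runs without any model.

```python
from typing import Callable, List

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it echoes the prompt in angle brackets."""
    return f"<{prompt}>"


def make_step(template: str, llm: Callable[[str], str] = fake_llm) -> Step:
    """Build a flow step that fills the template with the previous output and calls the model."""
    def step(previous_output: str) -> str:
        return llm(template.format(input=previous_output))
    return step


def run_flow(steps: List[Step], user_input: str) -> str:
    """Run the steps in order, feeding each step's output into the next one."""
    output = user_input
    for step in steps:
        output = step(output)
    return output


flow = [
    make_step("Summarise: {input}"),
    make_step("Translate to French: {input}"),
]
result = run_flow(flow, "hello")
print(result)  # <Translate to French: <Summarise: hello>>
```

A visual flow builder does essentially this wiring for you, with each node on the canvas playing the role of a step.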

Platforms vary greatly in complexity and ability. In some cases prompt playgrounds have expanded into flow builders.

In other instances traditional chatbot development frameworks introduced LLM elements into their flow-builders; these instances were not added to the chart.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.