Contrastive Chain-Of-Thought Prompting

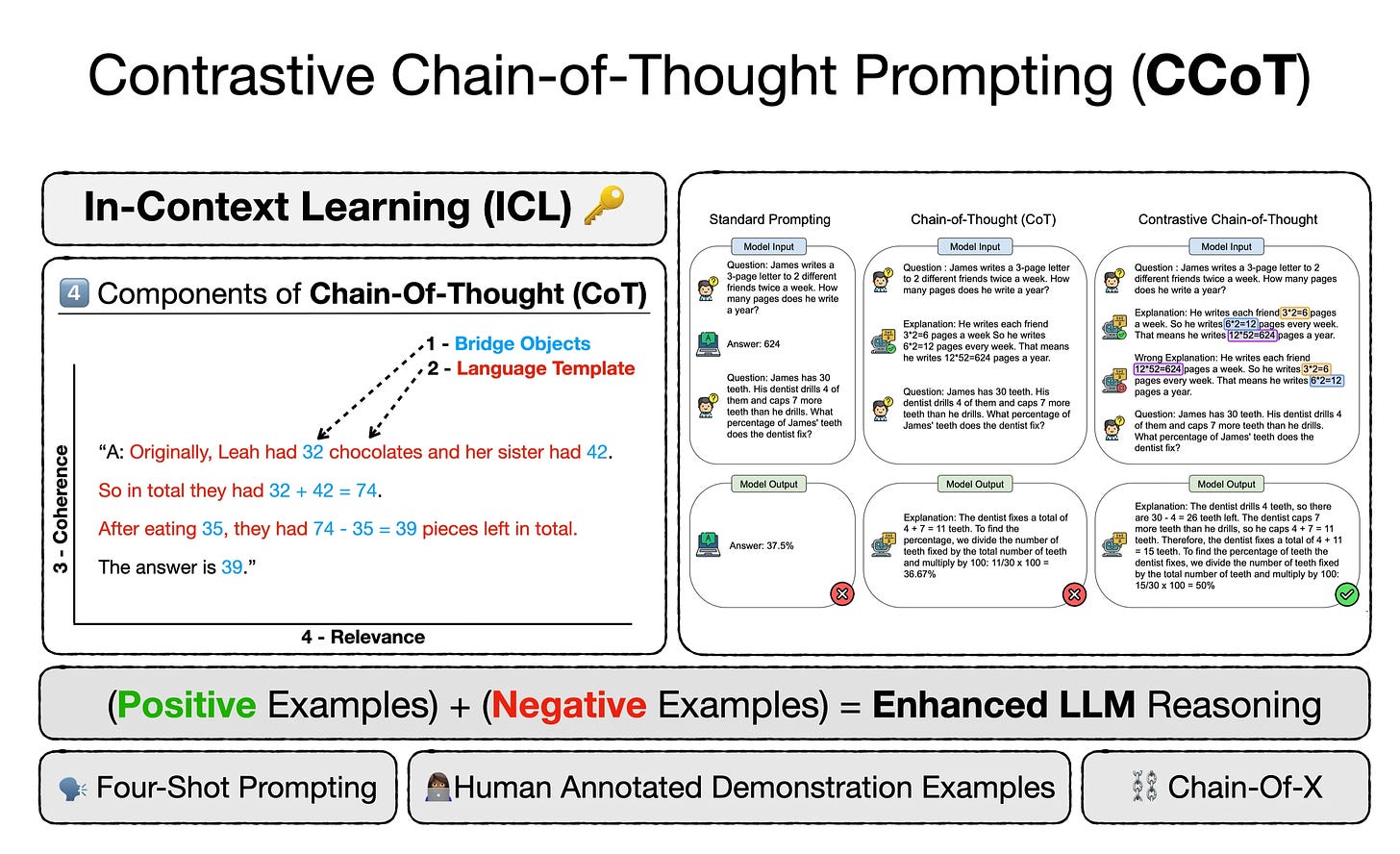

Contrastive Chain-of-Thought Prompting (CCoT) uses both positive & negative demonstrations to improve LLM reasoning.

Introduction to Chain-of-Thought (CoT)

Of late, a number of prompting techniques have illustrated how Large Language Models (LLMs) are capable of decomposing complex problems into a series of intermediate steps.

This has led to a phenomenon which some call Chain-Of-X.

This basic principle was first introduced through Chain-Of-Thought (CoT) prompting…

The basic premise of CoT prompting is to mirror human problem-solving methods, where we as humans decompose larger problems into smaller steps.

The LLM then addresses each sub-problem with focussed attention, reducing the likelihood of overlooking crucial details or making wrong assumptions.
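To make the idea concrete, here is a minimal sketch of a one-shot CoT prompt. The demonstration text, the field labels and the `build_cot_prompt` helper are all illustrative assumptions, not something prescribed by the study; the point is only that the worked example spells out its intermediate steps before the answer.

```python
# Hypothetical demonstration; the wording is illustrative and not taken from the study.
DEMO_QUESTION = (
    "Leah had 32 chocolates and ate 5 of them. "
    "How many chocolates does she have left?"
)
DEMO_RATIONALE = "Leah started with 32 chocolates. She ate 5, so 32 - 5 = 27 remain."
DEMO_ANSWER = "27"


def build_cot_prompt(question: str) -> str:
    """Return a one-shot CoT prompt: a worked example first, then the new question."""
    return (
        f"Question: {DEMO_QUESTION}\n"
        f"Explanation: {DEMO_RATIONALE}\n"
        f"Answer: {DEMO_ANSWER}\n\n"
        f"Question: {question}\n"
        f"Explanation:"
    )


if __name__ == "__main__":
    print(build_cot_prompt("Tom has 4 apples and buys 3 more. How many apples does he have?"))
```

The prompt ends on an open `Explanation:` line, which nudges the model to write out its own intermediate steps before committing to an answer.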

The Chain-Of-X approach has proven very successful when interacting with LLMs.

CoT vs CCoT

An interesting finding about CoT is the relatively low impact of using invalid demonstrations; by implication, wrong assumptions made during the intermediate reasoning steps can be propagated down the chain.

Chain-Of-X approaches do not illustrate which behaviour to avoid; they only supply correct examples for the LLM to follow.

Considering this, Contrastive Chain-Of-Thought (CCoT) is focussed on supplying both positive and negative examples in an effort to enhance the model’s reasoning capability.

This study is further proof of the importance of In-Context Learning (ICL) for LLMs, and the approach is very much in keeping with the emerging understanding of ICL.

CCoT

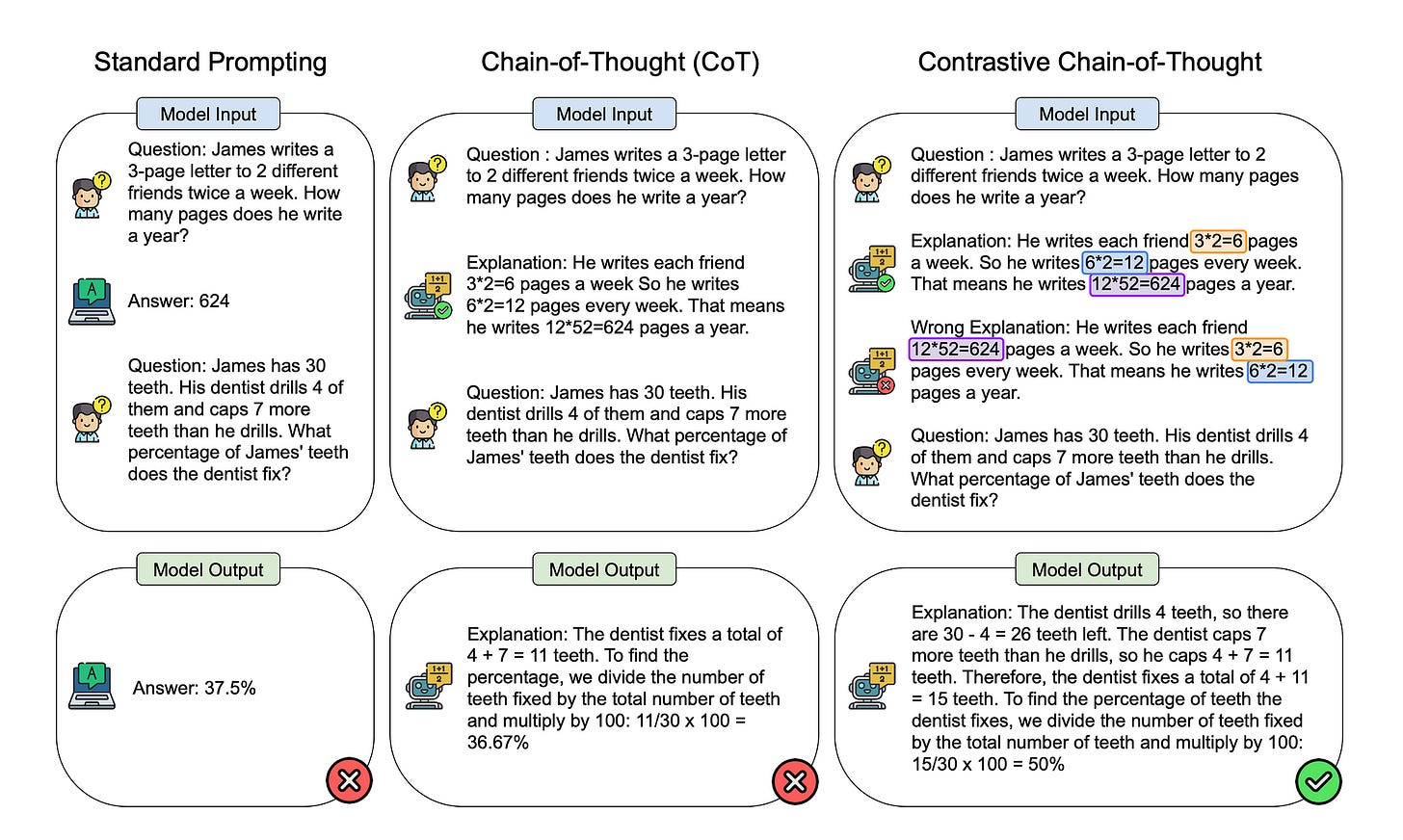

CCoT uses both valid and invalid reasoning demonstrations, which are presented to the LLM at inference.

In conventional CoT, the model does not know which faults to avoid, which could lead to increased mistakes and error propagation.

CCoT provides both the correct and incorrect reasoning steps in the demonstration examples.
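A contrastive demonstration can be sketched as a small data structure holding a question, a correct explanation, an incorrect explanation and the answer. The `ContrastiveDemo` class, the `build_ccot_prompt` helper and the `Explanation:` / `Wrong explanation:` labels below are assumptions made for illustration; the exact prompt layout used in the study may differ.

```python
from dataclasses import dataclass


@dataclass
class ContrastiveDemo:
    """One demonstration with a valid and an invalid rationale (hypothetical layout)."""
    question: str
    correct_explanation: str
    wrong_explanation: str
    answer: str


def build_ccot_prompt(demos: list[ContrastiveDemo], question: str) -> str:
    """Concatenate the contrastive demonstrations, then append the new question."""
    parts = []
    for demo in demos:
        parts.append(
            f"Question: {demo.question}\n"
            f"Explanation: {demo.correct_explanation}\n"
            f"Wrong explanation: {demo.wrong_explanation}\n"
            f"Answer: {demo.answer}\n"
        )
    # Leave the explanation open so the model produces its own reasoning.
    parts.append(f"Question: {question}\nExplanation:")
    return "\n".join(parts)


if __name__ == "__main__":
    demo = ContrastiveDemo(
        question="Leah had 32 chocolates and ate 5 of them. How many are left?",
        correct_explanation="Leah started with 32 chocolates. She ate 5, so 32 - 5 = 27 remain.",
        wrong_explanation="Leah started with 5 chocolates. She ate 27, so 32 - 32 = 5 remain.",
        answer="27",
    )
    print(build_ccot_prompt([demo], "Tom has 4 apples and buys 3 more. How many does he have?"))
```

Compared with a plain CoT prompt, the only change is the extra labelled incorrect rationale in each demonstration, so an existing CoT prompt can be extended rather than rewritten.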

More On CCoT

Contrasting positive and negative examples increases the effectiveness of the CoT approach.

An automated method is needed to create the positive and negative demonstrations. It is premised on human-annotated data, pairing each correct example with a fitting incorrect one.

The improvement of CCoT compared to traditional CoT approaches comes with the added burden of developing a structure for prompt creation.

Traditional CoT consists of two elements: Bridging and Language Templates.

Bridging

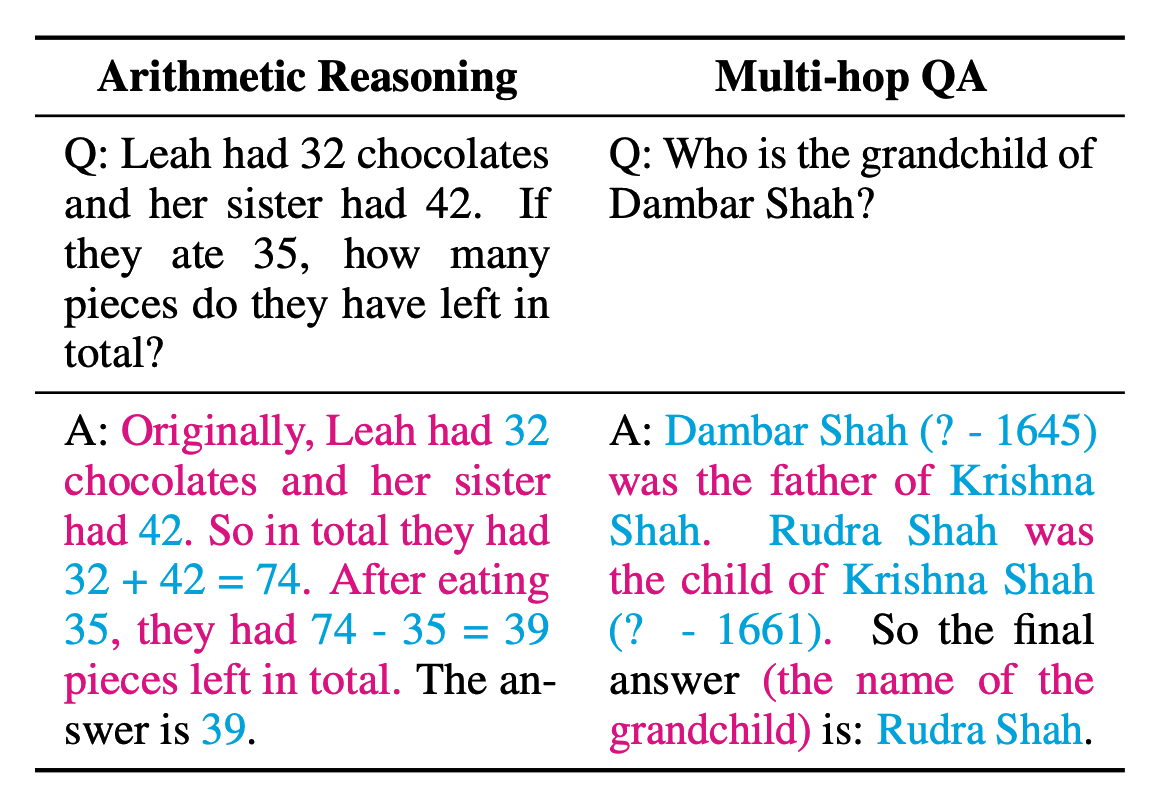

Bridging refers to symbolic items which the model traverses to reach a final conclusion. Bridging can be made up of numbers and equations for arithmetic tasks, or the names of entities in factual tasks.

Language Templates

Language templates are the textual hints that guide the language model to derive and contextualise the correct bridging objects during the reasoning process.

Above is a practical example of Bridging Objects (blue) and Language Templates (red) for creating a Chain-of-Thought rationale.

Coherence refers to the correct ordering of steps in a rationale, and is necessary for successful chain of thought. Specifically, as chain of thought is a sequential reasoning process, it is not possible for later steps to be preconditions of earlier steps.
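The split between bridging objects and language templates can be sketched in a few lines. This is a simplification under a stated assumption: only numbers are treated as bridging objects, whereas entity names in factual tasks would need something like a named-entity recogniser. The `split_rationale` helper and the sample rationale are hypothetical.

```python
import re

# Assumption: a bridging object is any integer or decimal number in the rationale.
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def split_rationale(rationale: str) -> tuple[list[str], str]:
    """Return (bridging objects, language template with '{}' placeholders)."""
    bridging_objects = NUMBER.findall(rationale)
    language_template = NUMBER.sub("{}", rationale)
    return bridging_objects, language_template


if __name__ == "__main__":
    rationale = "Leah started with 32 chocolates. She ate 5, so 32 - 5 = 27 remain."
    objects, template = split_rationale(rationale)
    print(objects)
    print(template)
    # Filling the template with the objects in order restores the rationale.
    print(template.format(*objects) == rationale)
```

Keeping the two parts separate makes it possible to alter one while holding the other fixed, for example changing the bridging objects while leaving the surrounding text untouched.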

Relevance refers to whether the rationale contains corresponding information from the question. For instance, if the question mentions a person named Leah eating chocolates, it would be irrelevant to discuss a different person cutting their hair.

The image above shows input and output examples comparing Standard Prompting, Chain-Of-Thought (CoT) prompting and Contrastive Chain-Of-Thought (CCoT) prompting.

Introducing Complexity

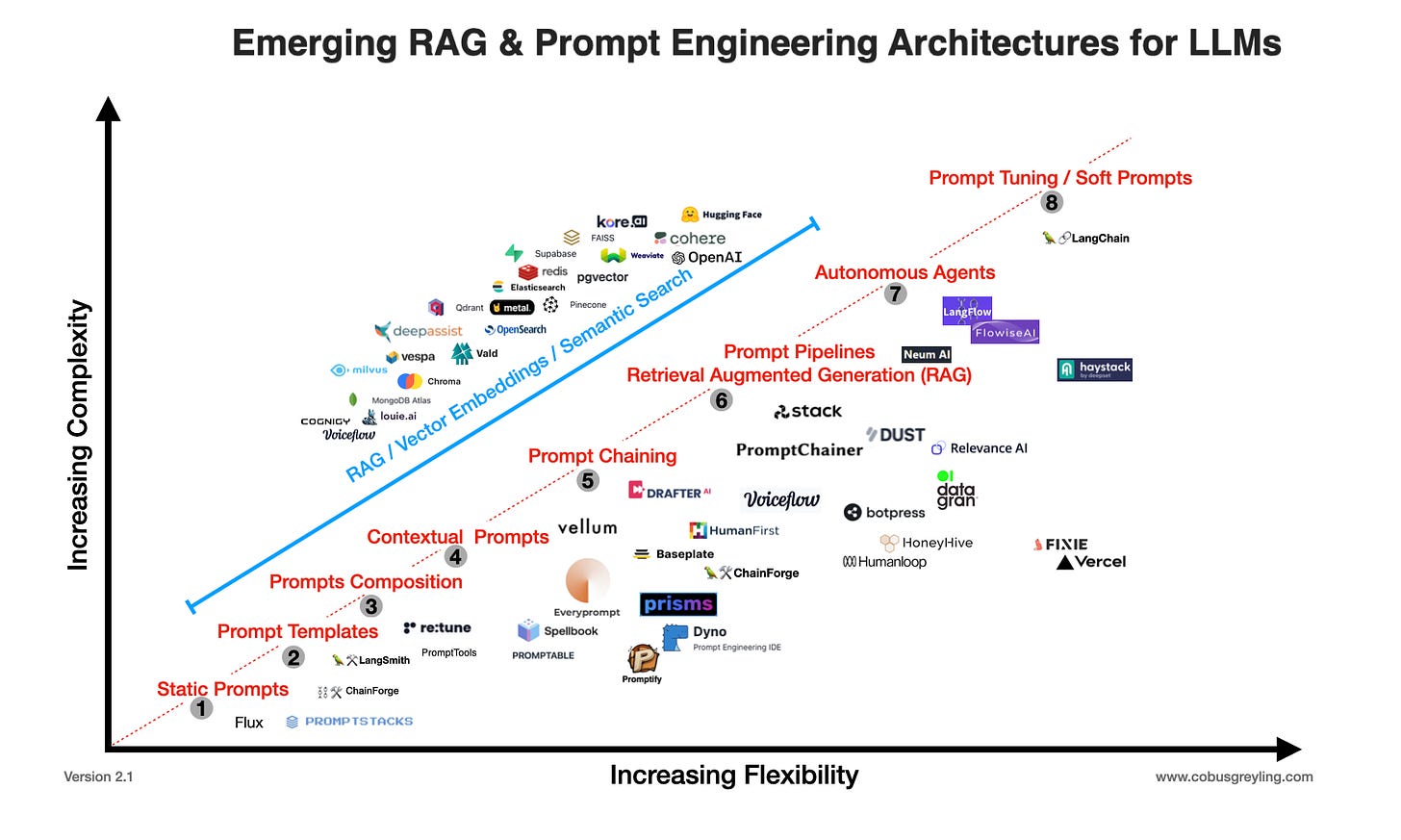

In the recent past, the simplicity of LLM implementations held a certain allure, and LLMs were thought to have emergent capabilities just waiting to be discovered and leveraged.

A single, very well-formed meta-prompt was seen as all you needed to build an application. This mirage is disappearing, and the reality is dawning that, as flexibility is needed, complexity needs to be introduced.

The study also introduces an automated method of constructing contrasting demonstrations, a process premised on human-annotated data.

This is also in keeping with the current trend of LLM-based interactions growing more complex as the demand for flexibility and application resilience increases.
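This article does not spell out the automated procedure, so the following is only one plausible sketch. It assumes that an incorrect rationale is derived from a human-annotated correct one by shuffling its bridging objects (here, just the numbers) while leaving the surrounding text untouched. The `make_negative_rationale` helper is hypothetical.

```python
import random
import re

# Assumption: a bridging object is any integer or decimal number in the rationale.
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def make_negative_rationale(rationale: str, seed: int = 0) -> str:
    """Shuffle the numbers in a correct rationale to produce an incorrect one."""
    objects = NUMBER.findall(rationale)
    if len(set(objects)) < 2:
        # Fewer than two distinct numbers: shuffling cannot change anything.
        return rationale
    rng = random.Random(seed)
    shuffled = objects[:]
    while shuffled == objects:
        rng.shuffle(shuffled)
    replacements = iter(shuffled)
    # Put the shuffled numbers back into the original positions, in order.
    return NUMBER.sub(lambda match: next(replacements), rationale)


if __name__ == "__main__":
    correct = "Leah started with 32 chocolates. She ate 5, so 32 - 5 = 27 remain."
    print(make_negative_rationale(correct, seed=1))
```

Because the negative example reuses the wording of the correct rationale, it stays relevant to the question while its reasoning no longer holds, which is what makes it a fitting contrast.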

Contrastive chain of thought can serve as a general enhancement of chain-of-thought prompting.

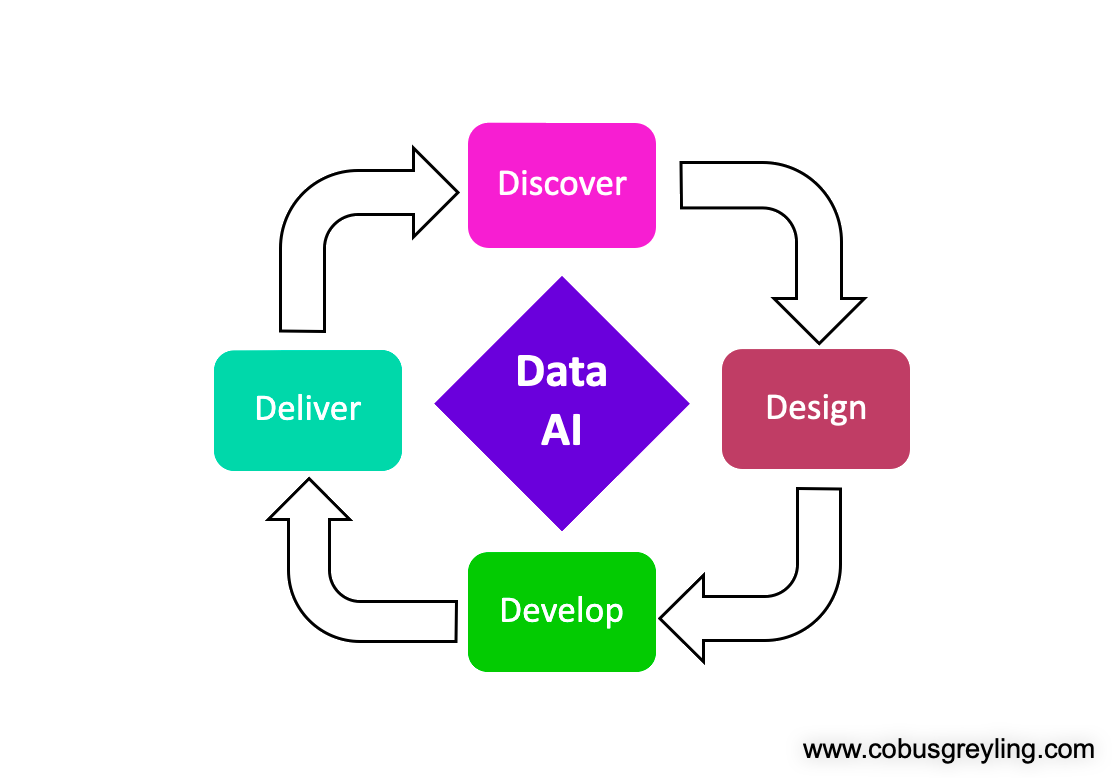

In Conclusion, Data

Recent studies are showing the importance of a data-centric approach to LLM implementations.

Data to be injected at inference time needs to be highly contextual, factually correct and succinct. These requirements necessitate a process of data discovery, data design, data development (augmenting existing training data) and a method to deliver the data.

Data Discovery demands data inspectability and observation.

Data Design is formatting the data correctly, and ensuring the data content is accurate.

Data Development is the augmentation of data to the optimal volumes and training set sizes.

Data Delivery is performed via gradient and gradient-free methods: at inference, beforehand via a custom fine-tuned model, or both.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.