Creating Synthetic Training Data

In the recent past, several impediments have been identified in creating synthetic data. One major challenge is ensuring diversity and coverage.

While large language models (LLMs) can generate vast amounts of data, guaranteeing that this data is sufficiently varied and accurate at scale is problematic. Scaling up the diversity of synthetic data was seen as demanding significant effort and often necessitates human intervention.

Introduction

There are a number of well documented limitations in terms of using LLMs to create synthetic training data…

Challenges in Creating Synthetic Data

Scaling Diversity: While increasing the quantity of synthetic data is easy, ensuring that its diversity scales up is difficult.

Single Instance Limitation: An LLM can produce only one instance given a data synthesis prompt, limiting the diversity without considering sampling techniques.

Instance-driven Approaches:

Relies on a seed corpus to create new instances.

Diversity is limited to the variations within the seed corpus.

Difficult to extend beyond the initial seed corpus.

Limited size of seed corpuses in practical scenarios hampers scalability.

One of the challenges of using real-world non-synthetic training data is finding data which is tailored to the use-case.

Data Design & Development

Two recent studies focussed on how to create a simple framework to use LLMs to generate synthetic training…the frameworks are focussed on:

Avoiding duplications in data,

Introducing diversity in the data sets

Ensuring certain traits and characteristics are present in the data

Following a process of data design and establishing an underlying, defined data topology to reach the desired data development goals.

Creating TinyStories

An approach called TinyStories were followed with the training of the Phi-3 small language model, by Microsoft.

Instead of relying solely on raw web data, the creators of Phi-3 focused on high-quality data.

Microsoft researchers developed a unique dataset comprising 3,000 words, evenly split into nouns, verbs, and adjectives.

They then used a large language model to generate children’s stories, each using one noun, one verb, and one adjective from the list.

This process was repeated millions of times over several days, producing millions of short children’s stories.

Scaling Synthetic Data Creation with 1,000,000,000 Personas

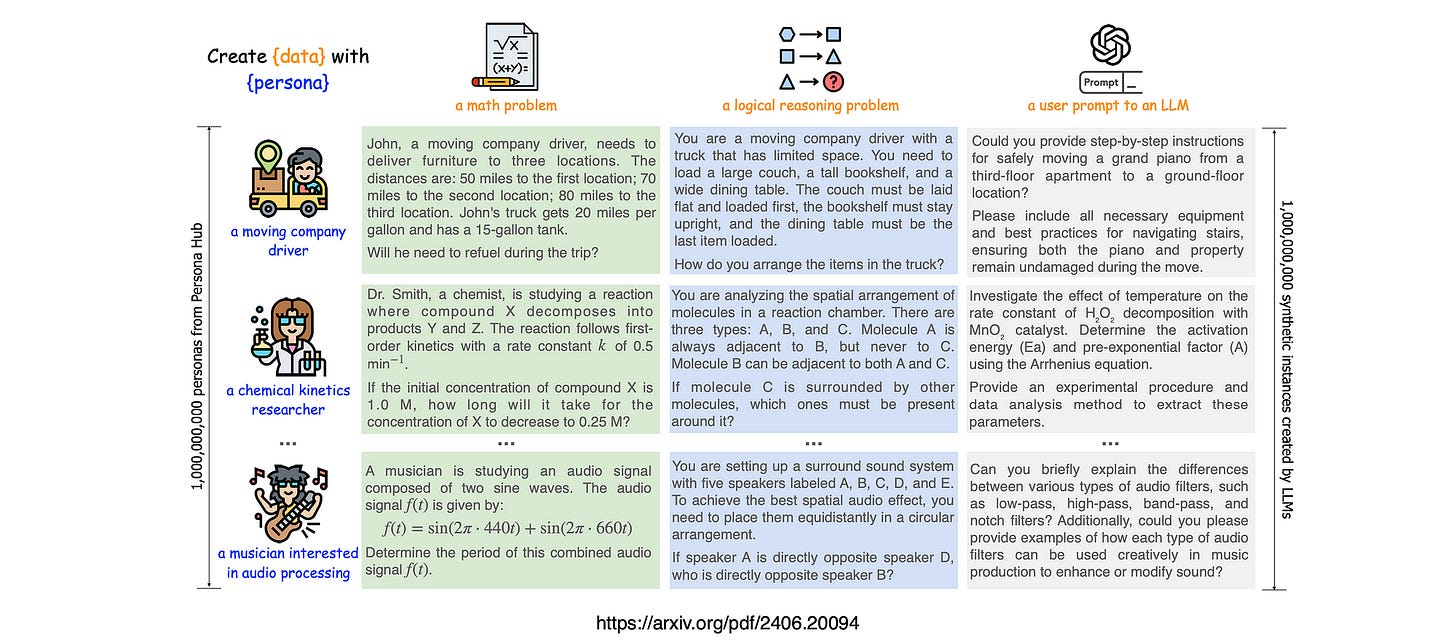

In another study, the researchers propose a new persona-driven data synthesis method that uses different perspectives within a large language model (LLM) to create varied synthetic data.

To support this method on a large scale, they introduce Persona Hub, a collection of 1 billion diverse personas automatically gathered from web data.

These personas, representing about 13% of the world’s population, carry a wide range of world knowledge and can access nearly every perspective within the LLM.

This enables the creation of diverse synthetic data for various scenarios. By demonstrating Persona Hub’s use in generating high-quality mathematical and logical reasoning problems, user prompts, knowledge-rich texts, game NPCs, and tools, they show that persona-driven data synthesis is versatile, scalable, flexible, and user-friendly.

This method could significantly impact LLM research and development.

Considering the image below, personas can handle diverse data synthesis prompts (e.g., creating math problems or user prompts) to guide an LLM in generating data from different perspectives.

The 1 billion personas in Persona Hub enable large-scale synthetic data creation for various scenarios.

Considering the image below, The Text-to-Persona approach can make use of any text as input to obtain corresponding personas just by prompting the LLM “Who is likely to [read|write|like|dislike|…] the text?”

Also consider the Persona-to-Persona approach, which obtains diverse personas via interpersonal relationships, which can be easily achieved by prompting the LLM “Who is in close relationship with the given persona?”

In Conclusion

There is also the phenomenon of model collapse, which occurs when new models are trained on synthetic data generated by previous models, leading to a decline in performance.

There is a study with findings that shows training solely on synthetic data inevitably leads to model collapse. However, mixing real and synthetic data can prevent collapse, provided the synthetic data remains below a certain threshold. Could it be that there is a case to be made to negate model collapse by defining a highly structured approach to creating a framework for generating data?

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.