Demonstrate, Search, Predict (DSP) for LLMs

This study from Stanford, which is just over a year old, makes for interesting reading and illustrates how far we have come over a short period of time.

Introduction

First of all, the study refers to what we now associate with Large Language Models (LLMs) as Knowledge-Intensive Natural Language Processing (NLP). Some refer to this as KI-NLP.

Demonstrate, Search, Predict (DSP) is a framework for writing programs that answer open-domain questions, including in conversational and multi-hop settings.

The study recognises that there are two main elements: a frozen language model (LM) and a retrieval model (RM).
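To make the two-element idea concrete, here is a minimal sketch of a retriever feeding a frozen model. It is not code from the study or from the DSP library: the word-overlap `retrieve` function is a hypothetical stand-in for a real retrieval model, the function names are my own, and the toy passages are condensed from the David Gregory example later in this post.

```python
import re
from typing import Callable, List

# Toy corpus, condensed from the David Gregory example later in this post.
PASSAGES = [
    "David Gregory (physician) was a Scottish physician and inventor. "
    "He inherited Kinnairdy Castle in 1664.",
    "Gregory Tarchaneiotes was the long-reigning catepan of Italy from 998 to 1006.",
    "David Gregory (mathematician) was a Scottish mathematician and astronomer.",
]


def tokens(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> List[str]:
    """Hypothetical RM: rank passages by how many words they share with the query."""
    query_words = tokens(query)
    ranked = sorted(PASSAGES, key=lambda p: len(query_words & tokens(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: List[str]) -> str:
    """Number the retrieved passages and append the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, 1))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


def answer(question: str, lm: Callable[[str], str], k: int = 2) -> str:
    """Retrieve, build the prompt, and pass it to the frozen LM (any str -> str callable)."""
    return lm(build_prompt(question, retrieve(question, k)))
```

Any callable that maps a prompt string to a completion string can be passed as `lm`. That is the point of the frozen setup: the model's weights never change, only the prompt that the retriever helps to build.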

The study also recognises the advantages of this approach: grounded knowledge, lower deployment overheads and lower annotation costs.

To get an idea of how fast concepts and accepted architectures are developing, consider that the study presents in-context learning as a new approach, one where we can create complex systems by assembling pre-trained components through natural language instructions and allowing them to interact.

This paradigm relies on pre-trained LLMs as building blocks and natural language for giving instructions and manipulating text.

The study continues to say that achieving this vision could democratise AI development, accelerate system prototyping for different domains, and leverage specialised pre-trained components more effectively.

And have we not seen this democratisation in the number of prompting frameworks and tools, together with the number of tools to perform vector embeddings and semantic search?

Decomposition

An interesting component of the DSP approach is not only what we now know as RAG, but also the idea of decomposing the query or prompt. The study refers to this process of decomposition as multi-hop, but today we would recognise it as closely related to Chain-of-Thought.

In fact, the notion of decomposition has taken on so many different permutations that the phenomenon of Chain-of-X has emerged.

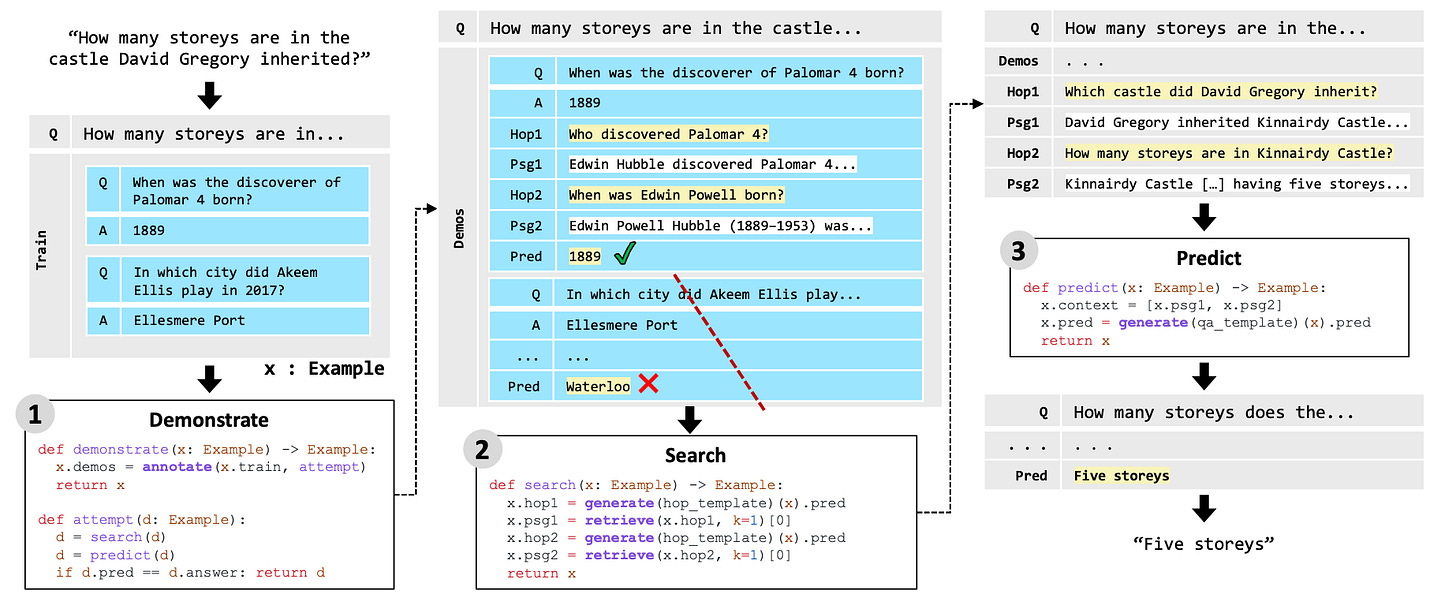

The image above shows a simple example of DSP, with its multiple questions.

The Demonstrate stage adds notes to training examples based on a simple form of teaching. Then, in the Search stage, the program breaks down the question and finds relevant information in two steps. Finally, in the Predict stage, it uses the notes and the found information to answer the question.
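The three stages described above can be sketched in plain Python. This is an illustration under my own assumptions, not the study's implementation: the `Example` class, the function names and the prompt wording are all hypothetical, and the LM and RM are passed in as ordinary callables so that any model or retriever could be plugged in.

```python
from dataclasses import dataclass, field
from typing import Callable, List

LM = Callable[[str], str]             # frozen language model: prompt -> completion
RM = Callable[[str, int], List[str]]  # retrieval model: (query, k) -> passages


@dataclass
class Example:
    question: str
    answer: str = ""
    context: List[str] = field(default_factory=list)


def demonstrate(train: List[Example], rm: RM, k: int = 1) -> List[Example]:
    """Demonstrate: annotate each training example with retrieved passages (the notes)."""
    return [Example(ex.question, ex.answer, rm(ex.question, k)) for ex in train]


def search(question: str, lm: LM, rm: RM, hops: int = 2, k: int = 1) -> List[str]:
    """Search: on each hop, ask the LM for a query based on what has been found so far."""
    context: List[str] = []
    for _ in range(hops):
        query = lm(f"Context: {' '.join(context)}\nQuestion: {question}\nSearch query:")
        for passage in rm(query, k):
            if passage not in context:  # skip passages already collected
                context.append(passage)
    return context


def predict(question: str, demos: List[Example], context: List[str], lm: LM) -> str:
    """Predict: show the annotated demonstrations, then ask the actual question."""
    parts = [
        f"Context: {' '.join(d.context)}\nQuestion: {d.question}\nAnswer: {d.answer}"
        for d in demos
    ]
    parts.append(f"Context: {' '.join(context)}\nQuestion: {question}\nAnswer:")
    return lm("\n\n".join(parts)).strip()


def dsp_program(question: str, train: List[Example], lm: LM, rm: RM) -> str:
    """Run the three stages in order."""
    demos = demonstrate(train, rm)
    context = search(question, lm, rm)
    return predict(question, demos, context, lm)
```

The two hops in `search` mirror the "two steps" in the description: the second query is written with the first hop's passages already in view, which is what lets a complex question be broken down.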

Autonomous-Agent-Like

The study contains a notebook to run DSP…

As seen below, DSP needs to be installed…

!pip install dspy-ai

And below is an extract on how the LLM is instructed…

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}.

We ...

Answer: often between 1 and 5 words

In the notebook snippet that follows, what I find interesting is how autonomous-agent-like the reasoning and response are.
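Before getting to that snippet, a short aside on the format itself. The sketch below is not taken from the notebook or the library. It is a minimal illustration, with hypothetical function names, of how the Context and Question fields could be filled in and how the Reasoning and Answer fields could be read back from a completion. It assumes each field sits on a single line.

```python
from typing import Dict, List


def render_prompt(question: str, passages: List[str]) -> str:
    """Fill the Context and Question fields and leave Reasoning open for the LM."""
    context = "\n\n".join(f"[{i}] «{p}»" for i, p in enumerate(passages, 1))
    return (
        f"Context:\n\n{context}\n\n"
        f"Question: {question}\n\n"
        "Reasoning: Let's think step by step in order to"
    )


def parse_completion(text: str) -> Dict[str, str]:
    """Pick out the single-line 'Reasoning' and 'Answer' fields, if present."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        for name in ("Reasoning", "Answer"):
            prefix = f"{name}:"
            if line.startswith(prefix):
                fields[name.lower()] = line[len(prefix):].strip()
    return fields
```

Ending the prompt on the open Reasoning line is a design choice in this sketch: it nudges the model to write its step-by-step reasoning first and the short answer last, which is the order the format asks for.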

And below is an example where the context, question, reasoning and answer are all populated.

Context:

[1] «David Gregory (physician) | David Gregory (20 December 1625 – 1720) was a Scottish physician and inventor. His surname is sometimes spelt as Gregorie, the original Scottish spelling. He inherited Kinnairdy Castle in 1664. Three of his twenty-nine children became mathematics professors. He is credited with inventing a military cannon that Isaac Newton described as "being destructive to the human species". Copies and details of the model no longer exist. Gregory's use of a barometer to predict farming-related weather conditions led him to be accused of witchcraft by Presbyterian ministers from Aberdeen, although he was never convicted.»

[2] «Gregory Tarchaneiotes | Gregory Tarchaneiotes (Greek: Γρηγόριος Ταρχανειώτης , Italian: "Gregorio Tracanioto" or "Tracamoto" ) was a "protospatharius" and the long-reigning catepan of Italy from 998 to 1006. In December 999, and again on February 2, 1002, he reinstituted and confirmed the possessions of the abbey and monks of Monte Cassino in Ascoli. In 1004, he fortified and expanded the castle of Dragonara on the Fortore. He gave it three circular towers and one square one. He also strengthened Lucera.»

[3] «David Gregory (mathematician) | David Gregory (originally spelt Gregorie) FRS (? 1659 – 10 October 1708) was a Scottish mathematician and astronomer. He was professor of mathematics at the University of Edinburgh, Savilian Professor of Astronomy at the University of Oxford, and a commentator on Isaac Newton's "Principia".»

Question: What castle did David Gregory inherit?

Reasoning: Let's think step by step in order to produce the answer. We know that David Gregory inherited a castle. The name of the castle is Kinnairdy Castle.

Answer: Kinnairdy Castle

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.