Disambiguation: Using Dynamic Context In Crafting Effective RAG Question Suggestions

This approach is reminiscent of disambiguation, a technique initially implemented by IBM Watson, in which the system responds to ambiguous user input with about five or fewer options to choose from.

This allows users to perform the disambiguation themselves, and allows the system to learn from their choices.
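To make the idea concrete, here is a minimal sketch of a disambiguation step. It assumes the system already has scored candidate interpretations of the user input; the function names, the score format and the logging structure are hypothetical and are not taken from IBM Watson or from the study.

```python
MAX_OPTIONS = 5  # assumption based on "about five or fewer options"


def disambiguation_options(candidates, max_options=MAX_OPTIONS):
    """Return the labels of the highest-scoring candidates, best first.

    candidates: list of (label, score) pairs (hypothetical format).
    """
    ranked = sorted(candidates, key=lambda pair: pair[1], reverse=True)
    return [label for label, _score in ranked[:max_options]]


def record_choice(log, user_input, chosen_label):
    """Append the user's selection so it can be used for later learning."""
    log.append({"input": user_input, "chosen": chosen_label})
    return log


if __name__ == "__main__":
    scored = [
        ("Reset my password", 0.42),
        ("Change my email address", 0.31),
        ("Close my account", 0.12),
    ]
    options = disambiguation_options(scored)
    print(options)
    print(record_choice([], "account help", options[0]))
```

How the recorded choices feed back into the system is left open here, since the text only says that the system can learn from them.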

This approach also addresses the blank canvas problem, where users do not know the ambit of the interface and end up asking ambiguous, exploratory questions.

So instead of forcing users to continuously refine their queries while being furnished with inadequate responses, the Conversational UI takes the initiative to disambiguate the conversation.

The generator is designed to generate suggestion questions that the agent can answer, guided by the user’s initial query. ~ Source

Proposed Framework

The framework identifies appropriate passages (dynamically retrieved contexts) based on the user’s initial input.

The process is aided by triplets, each made up of a question, an answer and a suggestion question, which serve as dynamic few-shot examples.

Suggestion questions are generated by constructing a dynamic context prompt that consists of the dynamically retrieved contexts and the dynamic few-shot examples.
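A minimal sketch of how such a dynamic context prompt could be assembled is shown here. The `Triplet` class, the prompt wording and the field labels are assumptions made for illustration; the study's actual prompt template is not reproduced in this article.

```python
from dataclasses import dataclass


@dataclass
class Triplet:
    """One few-shot example: a question, its answer, and a suggestion question."""
    question: str
    answer: str
    suggestion: str


def build_dynamic_context_prompt(user_query, contexts, examples):
    """Combine retrieved passages and few-shot triplets into one prompt string."""
    lines = ["Suggest questions the agent can answer from the passages below.", ""]
    # Dynamically retrieved contexts come first.
    for i, passage in enumerate(contexts, start=1):
        lines.append(f"Passage {i}: {passage}")
    lines.append("")
    # Dynamic few-shot examples follow, one triplet at a time.
    for ex in examples:
        lines.append(f"Question: {ex.question}")
        lines.append(f"Answer: {ex.answer}")
        lines.append(f"Suggestion: {ex.suggestion}")
        lines.append("")
    # The user's query is last, leaving the suggestion for the model to complete.
    lines.append(f"Question: {user_query}")
    lines.append("Suggestion:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_dynamic_context_prompt(
        "How do I reset it?",
        ["Passwords can be reset from the account settings page."],
        [Triplet("Can I change my email?", "Yes, in settings.", "How do I verify a new email?")],
    )
    print(prompt)
```

The resulting string would then be sent to whichever language model generates the suggestion questions; that call is omitted because the article does not name a model or API.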

By providing suggestion disambiguation questions, users are relieved of the cognitive load of question formulation.

The fundamental aim of this research is to establish the user’s true intent during interaction.

What is appealing about this solution is that it is a low-resource approach.

Task-Oriented Dialogs

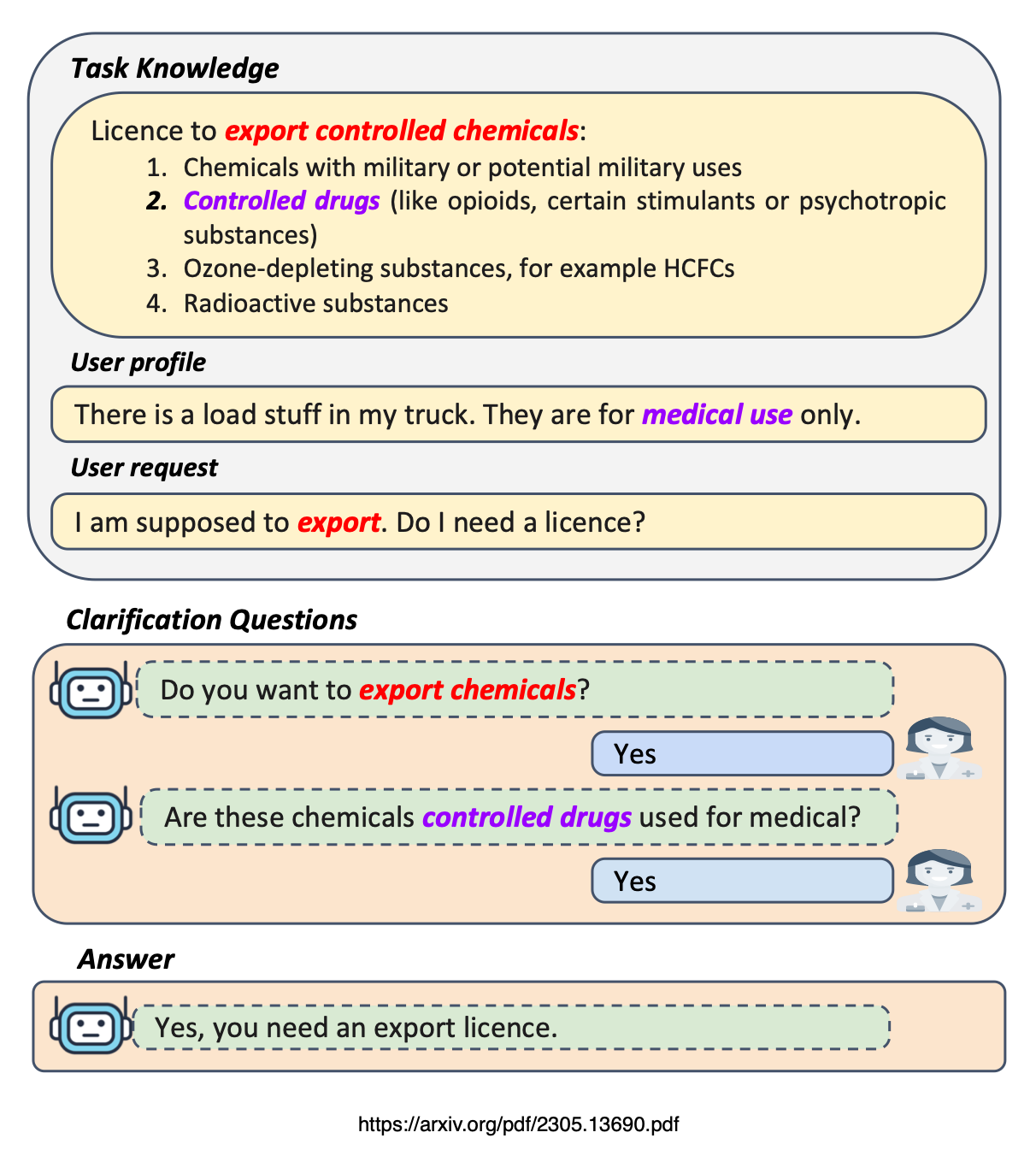

The example below is one of task-oriented information seeking, in which a user needs to search for information related to a specific task. Because the user input and user profile are ambiguous, the task-oriented dialogue system should ask clarification (disambiguation) questions, based on its task knowledge, to establish the user’s intent and profile before providing an answer.

Below, the task-oriented System Ask Paradigm.

In Conclusion

This study aims to reduce apologetic responses from the Conversational UI and enhance user experience by providing informed system suggestions.

Dynamic contexts generate suggestion questions that the agent can answer, through dynamic few-shot examples and dynamically retrieved contexts.

Unlike traditional few-shot prompting, which uses a fixed set of examples, dynamic few-shot prompting selects contextually relevant triplets based on the user’s query, accommodating varied question formats and structures.
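The selection step could look something like the sketch below. It ranks a pool of (question, answer, suggestion) triplets by word overlap with the user query. Jaccard word overlap is used here only as a simple stand-in; the article does not state which similarity measure the study uses, and an embedding-based similarity would be a natural alternative. All names are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two token sets (0.0 if either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def select_few_shot(user_query, pool, k=3):
    """Return the k triplets whose question is most similar to the user query.

    pool: list of (question, answer, suggestion) tuples.
    """
    query_tokens = tokenize(user_query)
    ranked = sorted(
        pool,
        key=lambda triplet: jaccard(query_tokens, tokenize(triplet[0])),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    pool = [
        ("How do I reset my password?", "Use the settings page.", "How do I enable two-factor login?"),
        ("What are your opening hours?", "9 to 5 on weekdays.", "Are you open on public holidays?"),
        ("How can I change my password?", "Go to your profile.", "How often should I change it?"),
    ]
    for triplet in select_few_shot("reset password", pool, k=2):
        print(triplet[0])
```

Because the examples are chosen per query, two users asking about different topics would see prompts built from different triplets, which is the contrast with a fixed few-shot prompt.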

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.