Existing Rigid Chatbot Architecture Needs Large Language Model (LLM) Flexibility

In recent posts I have been exploring the impact of LLMs on Conversational AI in general… but in this article I want to take a step back and consider the limitations of current chatbot architecture.

Something I have always found interesting about chatbots and voicebots is that almost every framework converged on the same basic architecture for Conversational AI interfaces.

This architecture consisted of an NLU model (Intents & Entities), a dialog state machine and pre-defined bot messages.

In this architecture, there is not much “AI” involved. The dialog flow structure is pre-determined and hard-coded with state machine logic. The bot responses are fixed or, at best, a response message is concatenated with variable values inserted. This is the status quo with IVRs, and hence many chatbots were really more of an ITR (interactive text response) system.

Since specific intents are assigned to specific sections of the flow, the only “AI” portion was the trained NLU model, which extracts intents and entities from user input based on probability.

So, chatbots have always been premised on intents, entities, dialog state management and integration. Integration comprises back-end API integration to the organisational systems, and integration to front-end user interfaces.

NLU is a reliable and very useful method of understanding user input in terms of detecting verbs, nouns, intent and more.

However, as mentioned earlier, the dialog state management systems are very rigid, and the intents are a finite, predefined set of classifications, each of which invokes a specific part of the dialog flow.
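To make this rigidity concrete, here is a minimal Python sketch of the three pillars working together. It is an illustration under stated assumptions, not any framework's API: keyword overlap stands in for a trained NLU model, and the intents, transitions and messages are hypothetical banking examples.

```python
import re

# Hypothetical, finite set of intents. A real NLU engine would use a trained
# probabilistic classifier; keyword overlap is only a stand-in for illustration.
INTENTS = {
    "check_balance": {"balance", "much", "account"},
    "transfer_funds": {"transfer", "send", "move"},
}

def classify(text, threshold=0.2):
    """Return the best-matching intent, or None if nothing scores high enough."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    best, best_score = None, 0.0
    for intent, keywords in INTENTS.items():
        score = len(tokens & keywords) / len(keywords)
        if score > best_score:
            best, best_score = intent, score
    return best if best_score >= threshold else None

def extract_amount(text):
    """Toy entity extraction: the first number in the utterance."""
    match = re.search(r"\d+(?:\.\d+)?", text)
    return match.group(0) if match else None

# Hard-coded dialog state machine: (current state, intent) -> next state.
TRANSITIONS = {
    ("start", "check_balance"): "show_balance",
    ("start", "transfer_funds"): "confirm_transfer",
}

# Fixed bot messages; the only "generation" is inserting variable values.
TEMPLATES = {
    "show_balance": "Your balance is {balance}.",
    "confirm_transfer": "Please confirm a transfer of {amount}.",
    "fallback": "Sorry, I did not understand that.",
}

def respond(state, text, balance="100.00"):
    intent = classify(text)
    # Anything not covered by a predefined (state, intent) pair falls through.
    next_state = TRANSITIONS.get((state, intent), "fallback")
    message = TEMPLATES[next_state].format(balance=balance, amount=extract_amount(text))
    return next_state, message

print(respond("start", "What is my account balance?"))
print(respond("start", "Can you recommend a good restaurant?"))
```

An utterance outside the predefined intents, such as asking for a restaurant recommendation, lands in the fallback state. Supporting it means hand-adding a new intent, a new transition and a new message, which is exactly the rigidity described above.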

The rigidity of the dialog flow state management and the pre-defined intents have been lamented for quite a while.

The problem was addressed with the tools at our disposal at the time. One idea was to deprecate one or two of the architectural pillars: the dialog flow state machine, intents, etc.

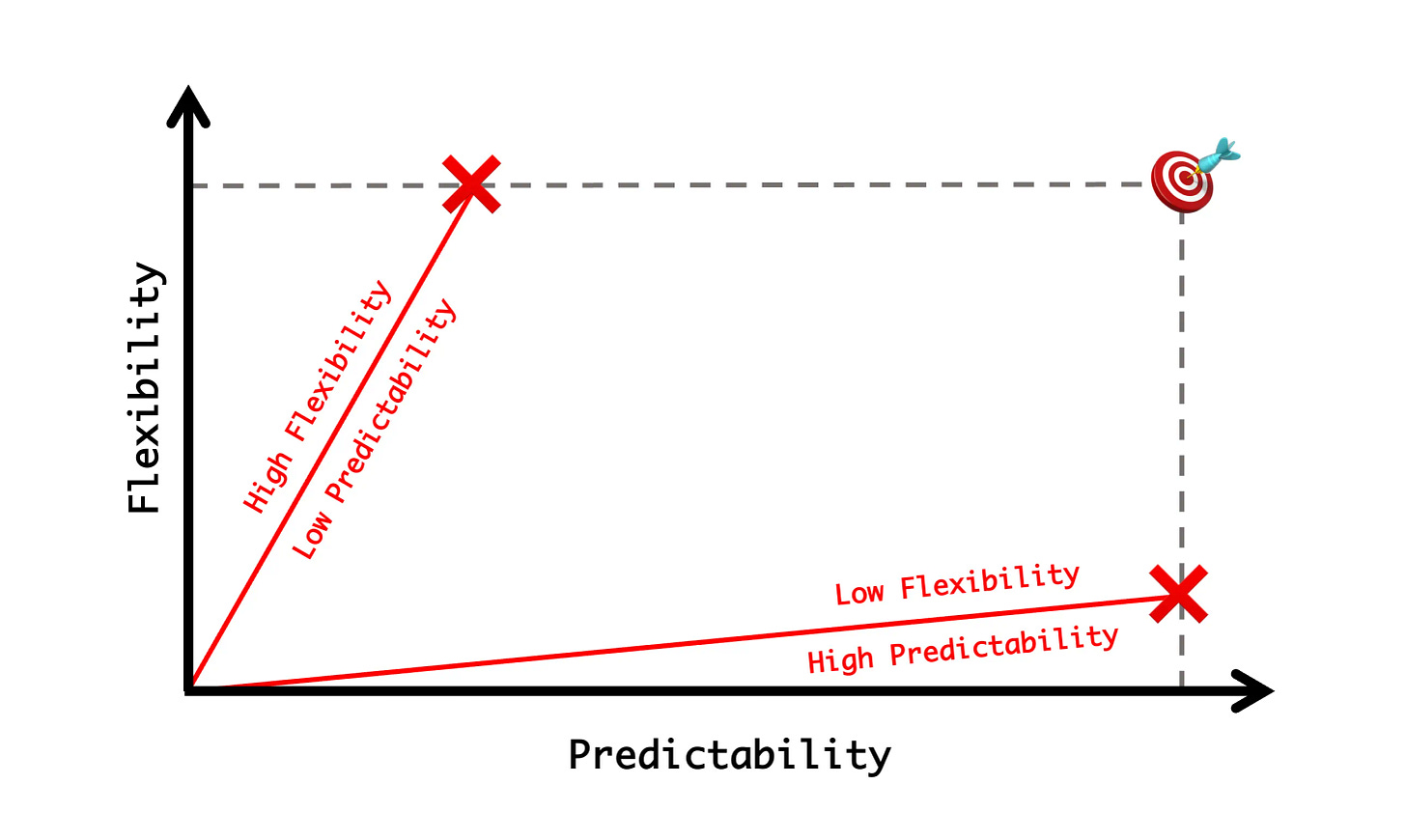

🔄 But there was always this trade-off between flexibility and predictability…

➡️ More flexibility leads to less predictability.

➡️ More predictability leads to less flexibility.

Considering the graph above, the target is the ideal place for a chatbot to live: where both high flexibility and high predictability can be achieved.

Additional features were introduced to soften the architecture and introduce flexibility.

Some of these features included:

Quick reply intents

Knowledge bases supported by semantic search

Rasa led with probability-based dialog flows via ML stories

Bootstrapping a chatbot with QnA-only functionality, and more
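Knowledge bases softened the architecture because a question can be answered by retrieval rather than by a dedicated intent and flow. The sketch below is a minimal Python illustration under stated assumptions: bag-of-words cosine similarity stands in for the embedding model a production semantic search would use, and the knowledge base entries are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base entries, e.g. chunks ingested from FAQ documents.
KNOWLEDGE_BASE = [
    "Our branches are open Monday to Friday from 9am to 5pm.",
    "You can reset your password from the login page.",
    "International transfers take up to three business days.",
]

def vectorise(text):
    # Bag-of-words term counts: a crude stand-in for a sentence-embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query, min_score=0.1):
    """Return the most similar entry, or None if nothing is similar enough."""
    q = vectorise(query)
    score, doc = max((cosine(q, vectorise(doc)), doc) for doc in KNOWLEDGE_BASE)
    return doc if score >= min_score else None

print(search("How do I reset my password?"))
```

No intent named "reset_password" exists anywhere; the answer is found by similarity alone. Swapping the stand-in vectoriser for real embeddings changes the quality of matches, not the shape of the lookup.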

However, the thinking always revolved around these fixed pillars of chatbot architecture… until the advent of LLMs forced Conversational AI frameworks to rethink their approach to Conversational Interfaces.

Final Thoughts…

⏺ Most Conversational AI companies are merely adding LLM functionality to their current environment. For the majority of vendors, it is very much a “we are LLM enabled too” scenario.

⏺ LLMs are ushering in an era of reimagining conversational applications.

⏺ There is a current trend of micro conversational interfaces and chatbots being treated as utilities, with documents and PDFs quickly ingested to form a queryable knowledge base.

⏺ New frameworks are approaching Conversational AI by combining foundation LLMs through composability.

⏺ Composability is achieved by treating dialogs or conversations as interrelated components. Various combinations can be selected and assembled for very specific user requirements.

⏺ A new generation of LLM-powered Conversational AI applications is emerging.

⏺ New frameworks are being imagined and designed by innovators without any burden of existing technical debt or current framework constraints.

⭐️ Please follow me on LinkedIn for updates on Conversational AI ⭐️

I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language, ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.