Fine-Tuning OpenAI GPT-4o mini

Fine-tuning for GPT-4o mini became available yesterday, so I decided to fine-tune OpenAI’s small language model to see what I could learn…

Introduction

In future posts, I hope to delve deeper into some of the technical details. For this article, my focus was simply on gathering the minimal amount of data, getting the formatting right, and fine-tuning the Small Language Model (SLM).

Afterward, I aimed to test the model in the playground by running the same completion scenarios side by side to compare the standard and fine-tuned models.

The main advantages of SLMs

Many highly capable SLMs are open-sourced and free to use; Meta and Microsoft, for example, have each released several.

A number of hosting options exist for deploying SLMs and exposing them behind an API.

These deployment and management frameworks are split between cloud-based options and quantisation software that lets you host the model and run inference locally.

Some SLMs have been trained specifically to excel at task decomposition and advanced reasoning.

SLMs are also well suited to RAG implementations, which leverage their Natural Language Generation (NLG), dialog state and context management, and reasoning capabilities.

They enable efficient on-device processing, reducing the need for cloud-based resources. This applies to SLMs in general; OpenAI’s offering is, of course, only available through a commercial API.

Small models are more cost-effective to run, requiring less computational power and storage.

By processing data locally, they enhance user privacy and reduce data exposure risks.

They allow for easier control and management, enabling fine-tuning and optimisation for particular tasks without relying on external dependencies.

Why would someone use OpenAI’s SLM?

The only reasons I can think of why someone would use an SLM from OpenAI are:

They deem it better than any open-sourced model, particularly given GPT-4o mini’s multi-modal capabilities.

Easy access for getting started and prototyping.

Web consoles for managing cost, APIs, usage, etc.

Low token cost.

An enterprise might already be an OpenAI customer with privileges that regular users do not have.

If you are already using OpenAI extensively, then adding GPT-4o mini to your current architecture can make sense.

What do SLMs solve for that OpenAI does not?

With open-sourced SLMs the exciting part is running the model locally and having full control over the model via local inferencing.

In the case of OpenAI, this is not applicable due to their commercial hosted API model.

Hence OpenAI focuses on speed, cost and capability, while also following the trend towards small models.

There are highly capable text-based SLMs which are open-sourced, such as Orca-2, Phi-3 and TinyLlama, to name a few.

The differentiators for GPT-4o mini will have to be cost, speed, capability and available modalities.

Advantages of GPT-4o mini

Multimodal Support: GPT-4o mini currently supports both text and vision in the API and playground, with plans to include text, image, video, and audio inputs and outputs in the future.

Extended Context: The model offers a context window of 128K tokens and includes knowledge up to October 2023.

Multilingual Capabilities: GPT-4o mini is equipped with support for multiple languages.

Enhanced Inference Speed: The model boasts improved inference speeds, making it highly efficient.

Ideal for Agentic Applications: Its combination of speed and cost-effectiveness makes it well suited to agentic applications that require multiple parallel calls.

Cost: Priced at 15 cents per million input tokens and 60 cents per million output tokens.
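To get a feel for what those token prices mean in practice, here is a minimal back-of-the-envelope sketch. The two prices are the ones quoted above; the workload figures (1,000 calls of 2,000 input and 500 output tokens each) are purely hypothetical, and fine-tuned models may be priced differently from the base model.

```python
# Prices quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.15
OUTPUT_USD_PER_MILLION = 0.60


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated inference cost in US dollars for one workload."""
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 1,000 agent calls, each with 2,000 input tokens
# and 500 output tokens.
calls = 1_000
total = estimate_cost_usd(calls * 2_000, calls * 500)
print(f"Estimated cost for {calls} calls: ${total:.2f}")  # prints $0.60
```

Even with output tokens costing four times as much as input tokens, a thousand calls of this size come to well under a dollar.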

Fine-Tuning

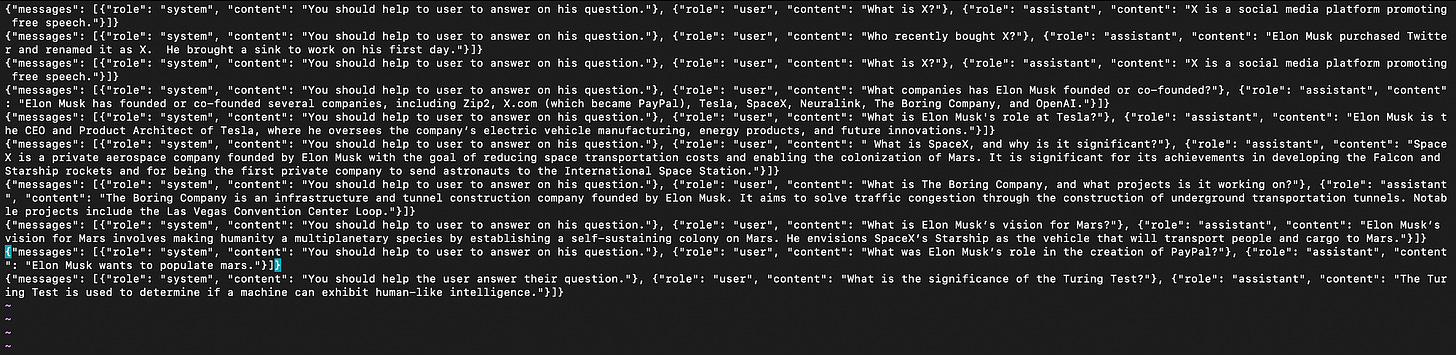

To fine-tune a model, at least 10 examples are required. As seen in my training file below, I had exactly 10 examples. If there are fewer than 10, the file is rejected during the file validation process after uploading.
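A rejected upload is easy to avoid with a quick local pre-check. The sketch below is my own approximation and not OpenAI's validator: it only checks that every line is valid JSON with a "messages" list, that roles are system, user or assistant, that each example contains an assistant reply, and that there are at least 10 examples. The function name validate_jsonl is hypothetical.

```python
import json

MIN_EXAMPLES = 10  # minimum number of examples stated in the article
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl(text: str) -> list[str]:
    """Return a list of problems found in chat-format JSONL training data."""
    problems = []
    examples = 0
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            # Assumption: blank lines are ignored here; the real upload
            # check may be stricter about them.
            continue
        examples += 1
        try:
            record = json.loads(line)
        except json.JSONDecodeError as error:
            problems.append(f"line {number}: invalid JSON ({error.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {number}: missing 'messages' list")
            continue
        for message in messages:
            if not isinstance(message, dict) or message.get("role") not in ALLOWED_ROLES:
                problems.append(f"line {number}: unexpected role")
            elif not isinstance(message.get("content"), str):
                problems.append(f"line {number}: content is not a string")
        if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
            problems.append(f"line {number}: no assistant message to learn from")
    if examples < MIN_EXAMPLES:
        problems.append(f"found {examples} example(s), need at least {MIN_EXAMPLES}")
    return problems


sample = (
    '{"messages": [{"role": "user", "content": "What is X?"}, '
    '{"role": "assistant", "content": "X is a social media platform."}]}'
)
print(validate_jsonl(sample))  # a single example is flagged as too few
```

Running this against the training file before uploading catches stray quotes and missing brackets, which were the kind of formatting issues I had to iron out by hand.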

As I show below, even with 10 training examples, I was able to sway the outcome of the generated answers.

OpenAI advises that clear improvements are typically observed with 50 to 100 training examples when using GPT-4o mini or GPT-3.5 Turbo, but the optimal number can vary significantly depending on the specific use case.

OpenAI also recommends beginning with 50 well-crafted demonstrations and assessing whether the model shows signs of improvement after fine-tuning.

In some cases, this may be sufficient, but even if the model is not yet ready for production, noticeable improvements indicate that providing additional data could further enhance the model. If no improvement is observed, it may be necessary to reconsider the task setup or restructure the data before scaling beyond a limited set of examples.

Below is my minimal 10-line training file…

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What is X?"}, {"role": "assistant", "content": "X is a social media platform promoting free speech."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "Who recently bought X?"}, {"role": "assistant", "content": "Elon Musk purchased Twitter and renamed it as X. He brought a sink to work on his first day."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What is X?"}, {"role": "assistant", "content": "X is a social media platform promoting free speech."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What companies has Elon Musk founded or co-founded?"}, {"role": "assistant", "content": "Elon Musk has founded or co-founded several companies, including Zip2, X.com (which became PayPal), Tesla, SpaceX, Neuralink, The Boring Company, and OpenAI."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What is Elon Musk's role at Tesla?"}, {"role": "assistant", "content": "Elon Musk is the CEO and Product Architect of Tesla, where he oversees the company’s electric vehicle manufacturing, energy products, and future innovations."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": " What is SpaceX, and why is it significant?"}, {"role": "assistant", "content": "SpaceX is a private aerospace company founded by Elon Musk with the goal of reducing space transportation costs and enabling the colonization of Mars. It is significant for its achievements in developing the Falcon and Starship rockets and for being the first private company to send astronauts to the International Space Station."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What is The Boring Company, and what projects is it working on?"}, {"role": "assistant", "content": "The Boring Company is an infrastructure and tunnel construction company founded by Elon Musk. It aims to solve traffic congestion through the construction of underground transportation tunnels. Notable projects include the Las Vegas Convention Center Loop."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What is Elon Musk’s vision for Mars?"}, {"role": "assistant", "content": "Elon Musk’s vision for Mars involves making humanity a multiplanetary species by establishing a self-sustaining colony on Mars. He envisions SpaceX’s Starship as the vehicle that will transport people and cargo to Mars."}]}

{"messages": [{"role": "system", "content": "You should help to user to answer on his question."}, {"role": "user", "content": "What was Elon Musk’s role in the creation of PayPal?"}, {"role": "assistant", "content": "Elon Musk wants to populate mars."}]}

{"messages": [{"role": "system", "content": "You should help the user answer their question."}, {"role": "user", "content": "What is the significance of the Turing Test?"}, {"role": "assistant", "content": "The Turing Test is used to determine if a machine can exhibit human-like intelligence."}]}

Back To The Demo

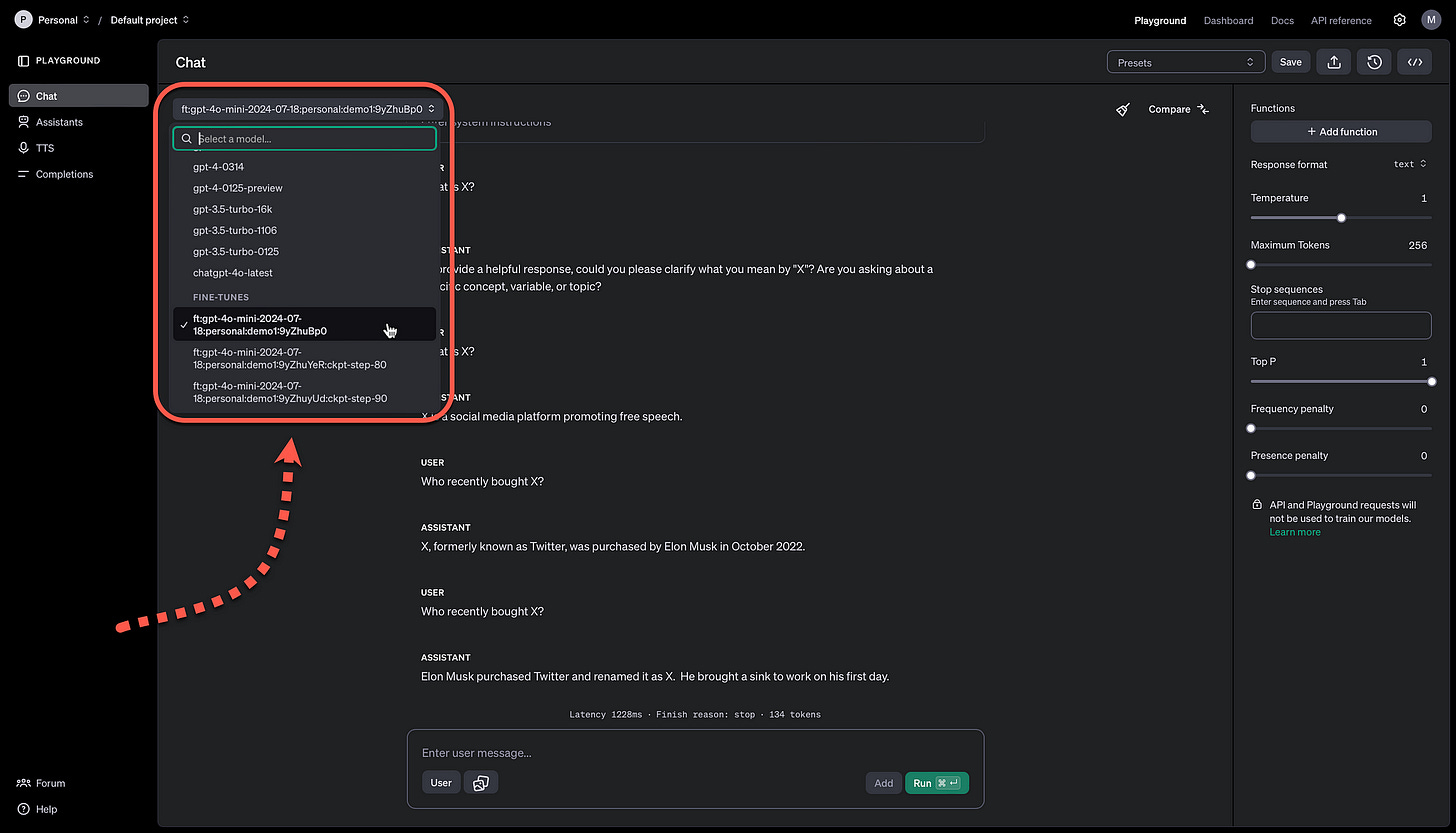

In the image below the gpt-4o-mini model is visible within the playground…

And below the fine-tuned version of the model is selected…

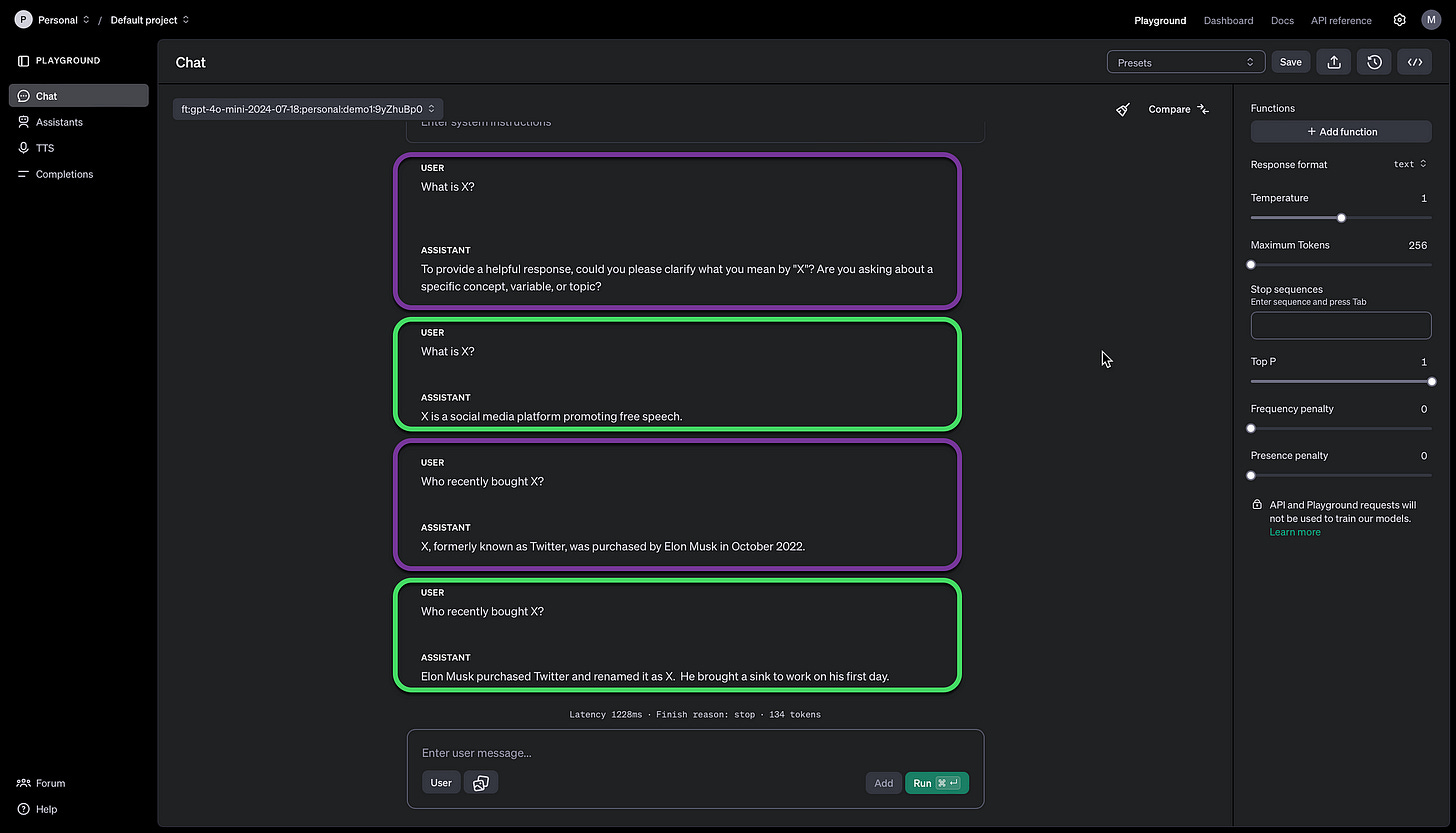

The interactions marked in purple are with the standard mini model, and the interactions in green are with the fine-tuned model.

From the example it is clear that the fine-tuned responses surface when the custom model is referenced, while the default model gives its standard responses.
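The same side-by-side comparison can be scripted rather than clicked through in the playground. This is a minimal sketch assuming the official openai Python package (v1 or later); compare_models is a hypothetical helper, and the fine-tuned model ID in the usage comment is a placeholder, not my actual model.

```python
def compare_models(client, question, base_model, tuned_model, system_prompt):
    """Ask both models the same question and return {model_name: answer}."""
    answers = {}
    for model in (base_model, tuned_model):
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
            ],
            temperature=0,  # reduce sampling noise so differences are easier to see
        )
        answers[model] = response.choices[0].message.content
    return answers


# Usage (requires `pip install openai` and OPENAI_API_KEY in the environment):
#
#   from openai import OpenAI
#   answers = compare_models(
#       OpenAI(),
#       question="What is X?",
#       base_model="gpt-4o-mini",
#       tuned_model="ft:gpt-4o-mini-2024-07-18:my-org::abc123",  # hypothetical ID
#       system_prompt="You should help to user to answer on his question.",
#   )
#   for model, answer in answers.items():
#       print(f"{model}: {answer}")
```

Using the same system prompt as in the training file matters here, since the fine-tuned behaviour was learned together with that prompt.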

Below is a console view listing the fine-tuning jobs, including the previously failed attempts. All the detail of the fine-tuning process is shown here.
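The job list from the console, failed attempts included, can also be pulled through the API. A minimal sketch, again assuming the official openai Python package (v1 or later); summarize_jobs is a hypothetical helper name.

```python
def summarize_jobs(client, limit=10):
    """Return (job_id, status, fine_tuned_model) tuples for recent jobs."""
    page = client.fine_tuning.jobs.list(limit=limit)
    rows = []
    for job in page.data:  # first page only, so at most `limit` jobs
        # fine_tuned_model stays None until a job has succeeded.
        rows.append((job.id, job.status, job.fine_tuned_model))
    return rows


# Usage (requires `pip install openai` and OPENAI_API_KEY in the environment):
#
#   from openai import OpenAI
#   for job_id, status, model in summarize_jobs(OpenAI()):
#       print(job_id, status, model)
```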

Next is the file that was created to hold the fine-tuning data. I found it easiest to run vim from the macOS command line to make sure the formatting and everything else was in order.

The OpenAI console has a storage tab where files can be uploaded and managed. From a process perspective it makes sense to manage data and files here, in one central place.
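For anyone who prefers code over the console, the upload and the fine-tuning job can also be started programmatically. The sketch below assumes the official openai Python package (v1 or later) and that gpt-4o-mini-2024-07-18 is the snapshot name to fine-tune; start_fine_tune and the file name in the usage comment are hypothetical.

```python
def start_fine_tune(client, training_path, base_model="gpt-4o-mini-2024-07-18"):
    """Upload a JSONL training file and start a fine-tuning job on it."""
    with open(training_path, "rb") as handle:
        # purpose="fine-tune" marks the upload as fine-tuning training data.
        uploaded = client.files.create(file=handle, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(
        training_file=uploaded.id,
        model=base_model,  # assumed snapshot name, check what your account offers
    )
    return uploaded.id, job.id


# Usage (requires `pip install openai` and OPENAI_API_KEY in the environment):
#
#   from openai import OpenAI
#   file_id, job_id = start_fine_tune(OpenAI(), "training.jsonl")
#   print(file_id, job_id)
```

The uploaded file then shows up in the same storage tab, so both routes end up in one central place.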

Finally

With fine-tuning of GPT-4o mini having become available yesterday, I wanted to fine-tune the model with the least possible amount of training data.

I also wanted to measure via casual observation what the effect of the fine-tuning is, and to what extent it is visible at inference.

Who will use GPT-4o mini? I think there are two categories of users primarily.

Firstly, builders wanting to experiment and learn via a simplified no-code console, all of which is supported by good documentation and a developer forum.

There might also be niche implementations where GPT-4o mini is a good fit.

The second category is where an enterprise or organisation has a strong bond with OpenAI, with special privileges and access, and adding the GPT-4o mini model to the current orchestrated environment is a logical next step.

I’m currently the Chief Evangelist @ Kore.ai. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

https://openai.com/index/gpt-4o-fine-tuning/

https://platform.openai.com/docs/guides/rate-limits/usage-tiers