Future OpenAI Functionality Will Supersede Many Current LLM Applications

There used to be many mobile flashlight apps, until phone operating systems came with flashlight functionality as standard.

Even though the startup ecosystem is important for innovation, it is inevitable that near-future OpenAI functionality will supersede many current products.

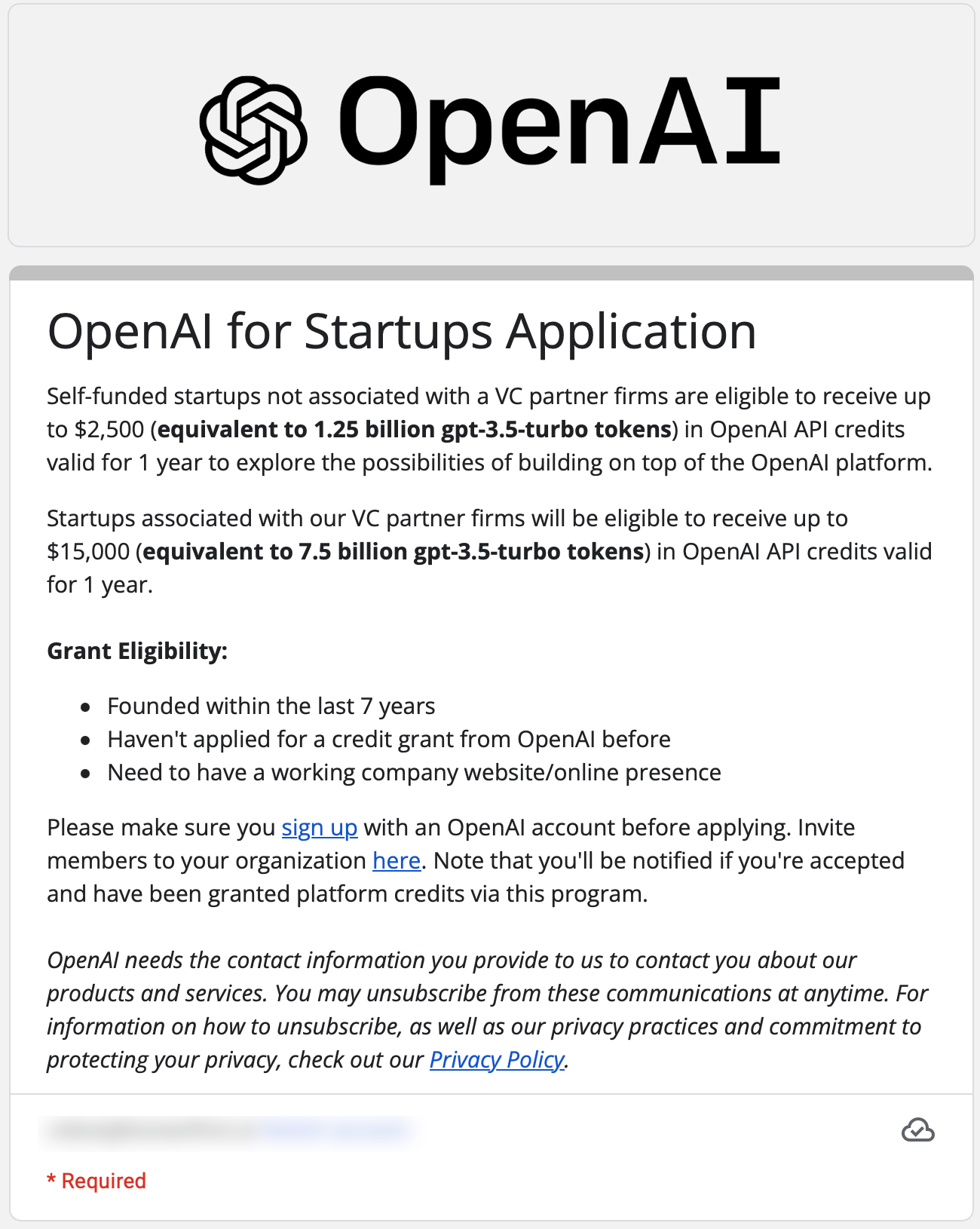

As seen below, OpenAI has announced that self-funded, non-VC-backed startups can apply for OpenAI API credits. As stated in the introduction, the startup ecosystem is an important driver of innovation and product development.

However, as OpenAI’s functionality expands, many of these products and initiatives will be absorbed into OpenAI’s standard offering.

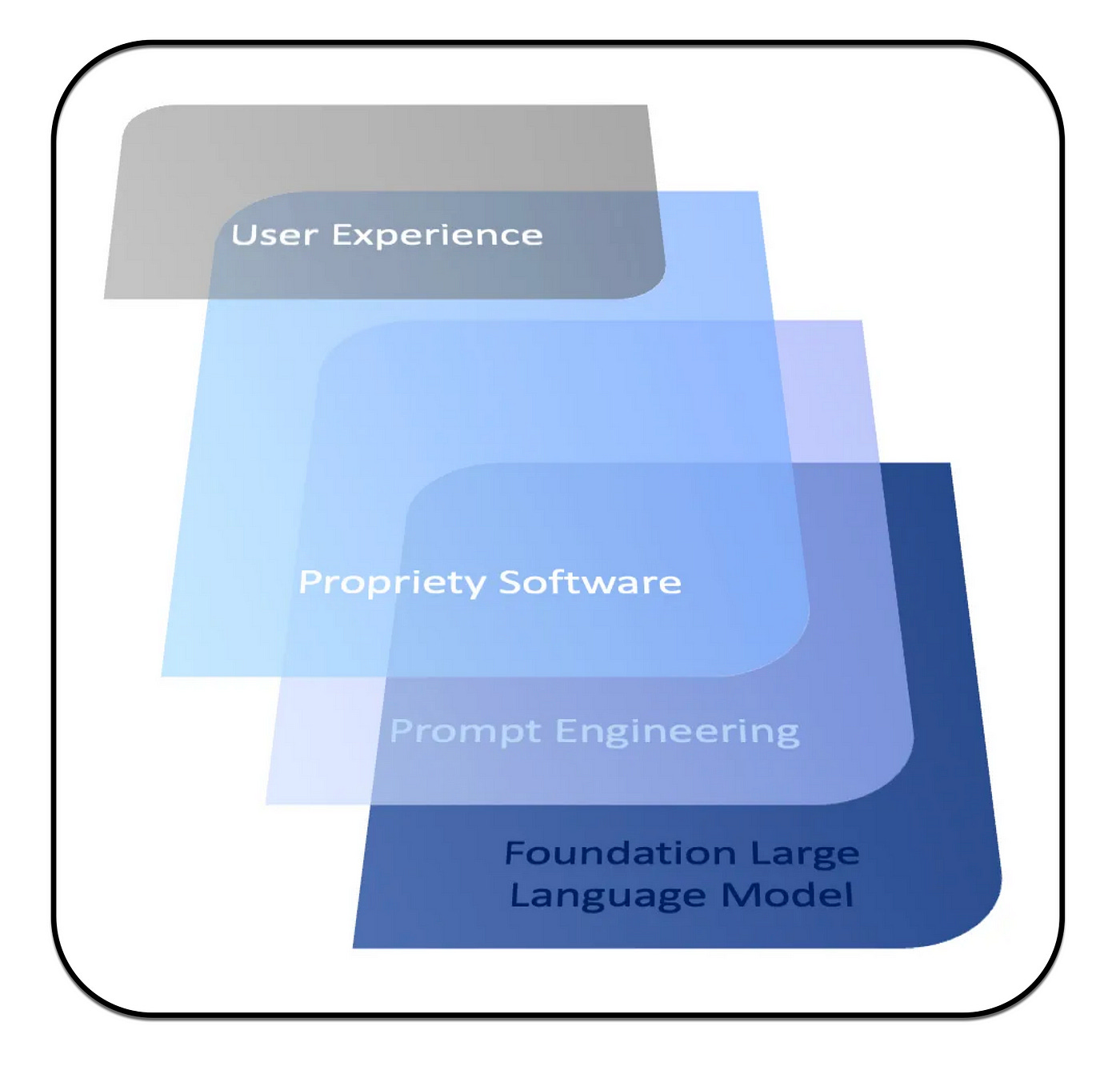

The onus is on startups to create an exceptional user experience and user interface, to differentiate on intellectual property, and to implement a stellar proprietary software layer.

Here are a few examples of how OpenAI’s functionality is sprawling and expanding:

The functionality of OpenAI’s playground is growing, and structure is being introduced into the process of prompt engineering.

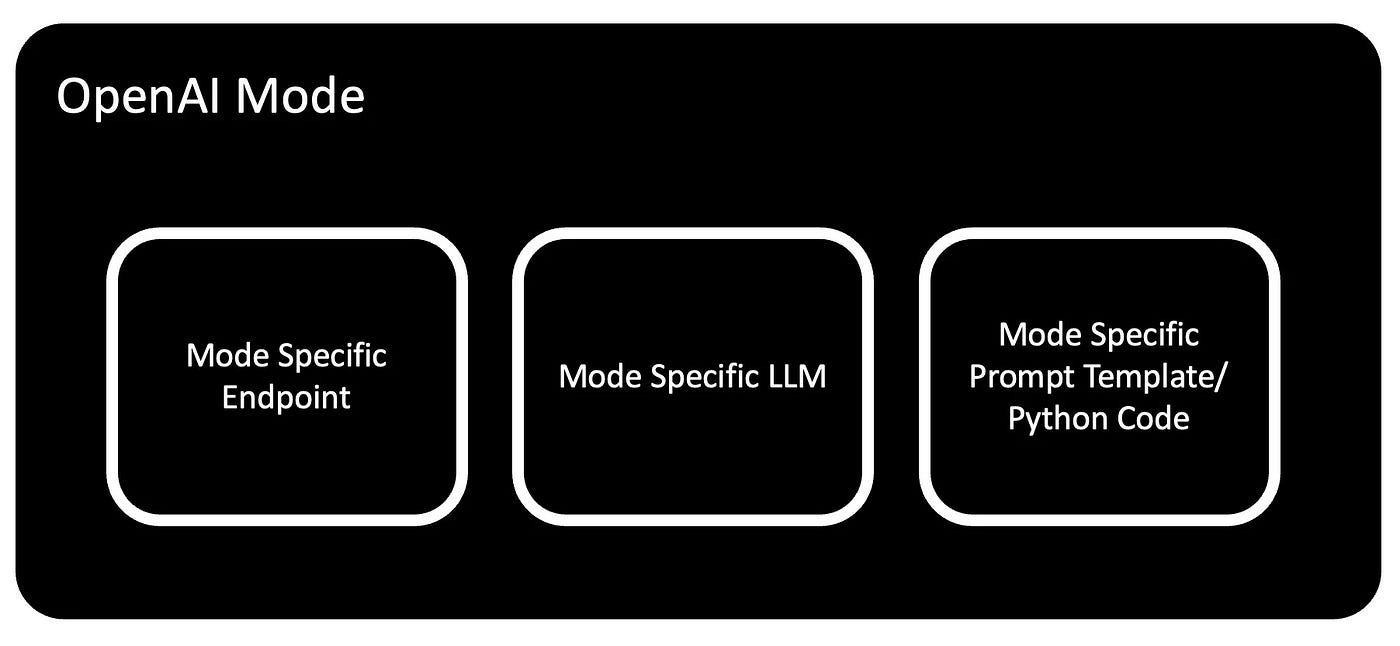

OpenAI has introduced the concept of modes (complete, insert, edit and chat) for different use cases. In essence, modes have introduced four new versions of the OpenAI playground.

Beyond the playground updates, OpenAI has also introduced mode-specific endpoints and mode-specific large language models. These endpoints and models improve the experience and lead to better output for each use case; however, they also increase complexity.

With Chat Markup Language (ChatML), additional structure is introduced to prompt engineering: a conversation is expressed as a sequence of messages, each tagged with a role such as system, user or assistant.

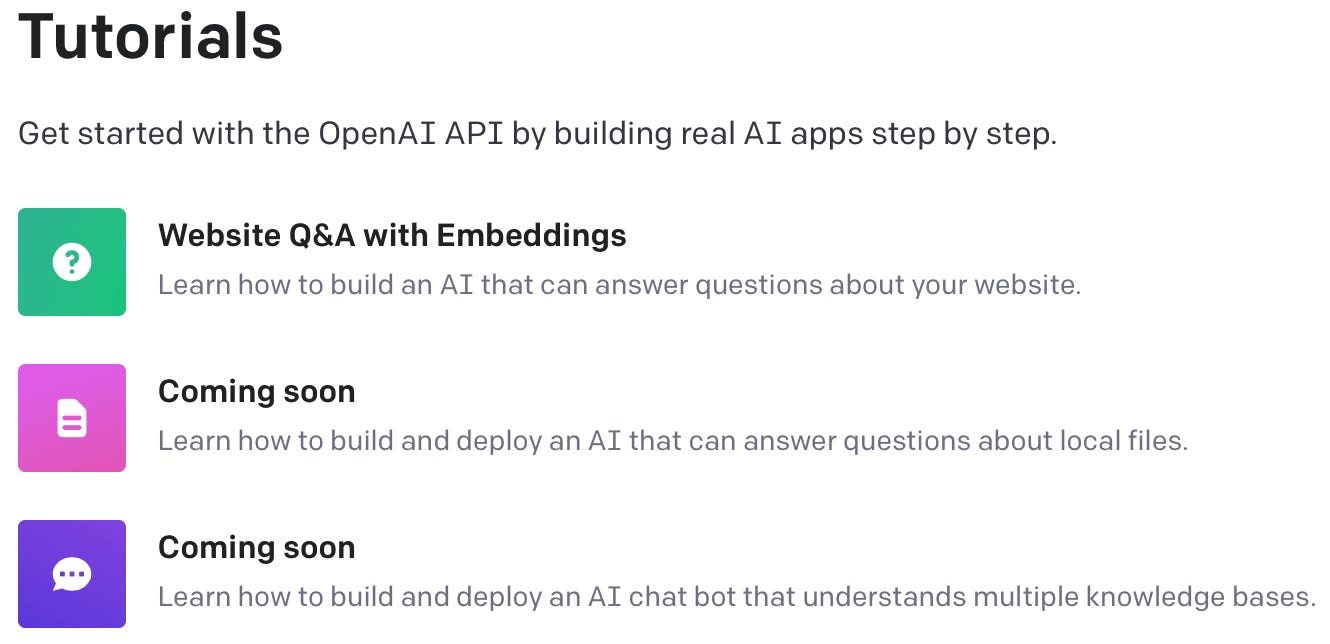
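To make that structure concrete, here is a minimal Python sketch that renders a list of role/content messages into a ChatML-style prompt string. It assumes the `<|im_start|>` / `<|im_end|>` token layout from OpenAI’s early ChatML documentation; the helper name `to_chatml` is hypothetical, and in practice the Chat Completions endpoint takes the message list directly, so you would rarely build this string by hand.

```python
from typing import Dict, List

# Roles assumed here to be valid in ChatML; this sketch rejects anything else.
ALLOWED_ROLES = {"system", "user", "assistant"}


def to_chatml(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages as a ChatML-style prompt (hypothetical helper).

    Each message is wrapped in <|im_start|>{role} ... <|im_end|> markers, and an
    open assistant turn is appended so the model knows it should respond next.
    """
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|im_start|>{role}\n{message['content']}<|im_end|>\n")
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is ChatML?"},
])
print(prompt)
```

The explicit role markers are the structure ChatML adds: system instructions, user input and model output are delimited rather than concatenated as free text.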

Various types of search have been a key use case for a significant number of LLM-based startups. Search encompasses web search, proprietary company documents, conversation archives and more.

The image below shows OpenAI’s ambition to leverage its powerful LLM-based embeddings to create search and Q&A tools for websites and local files, and to extract information from multiple knowledge bases.

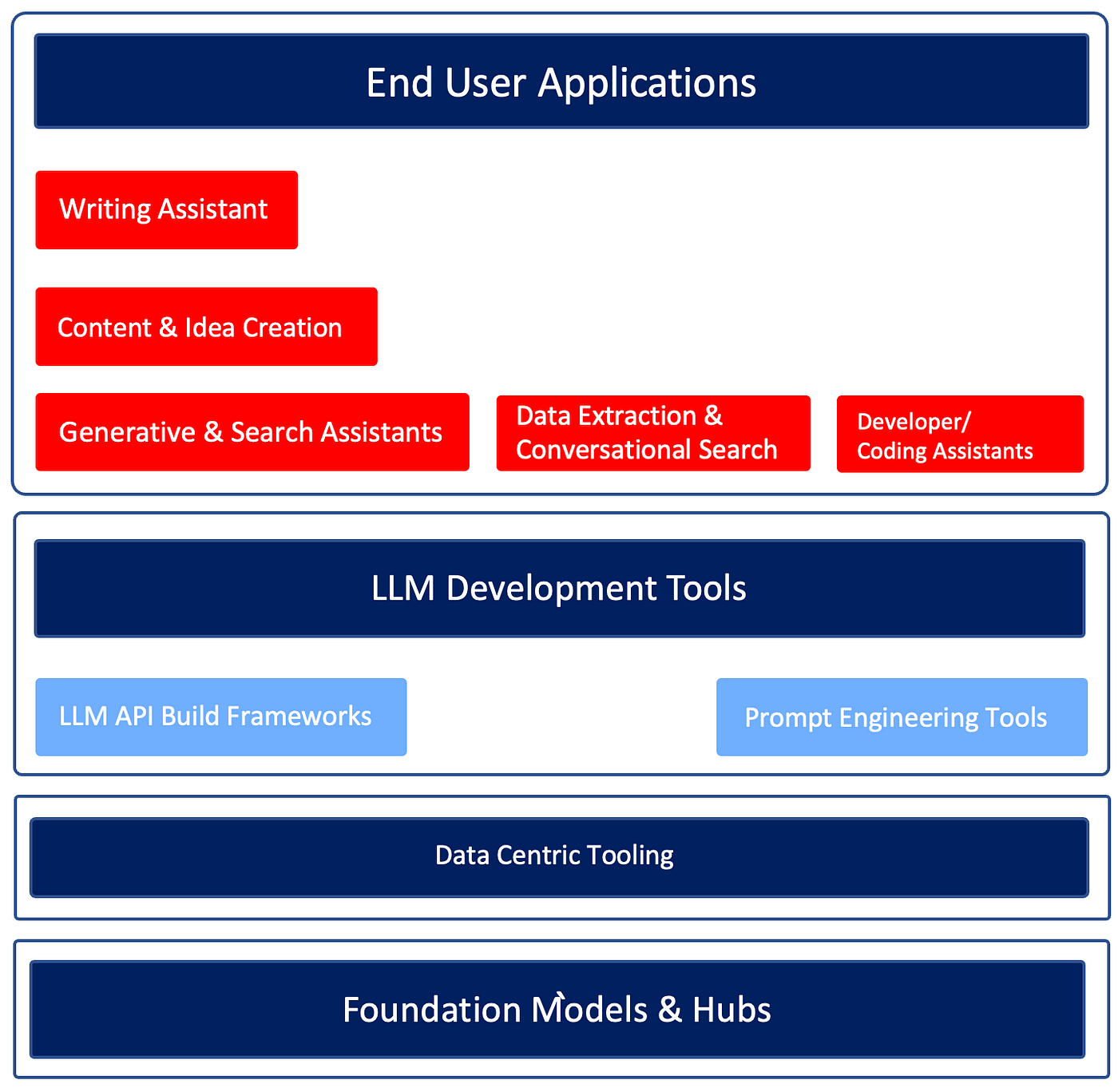
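Embedding-based search rests on a simple idea: documents and queries are mapped to vectors, and documents are ranked by how similar their vectors are to the query’s. The sketch below shows only that ranking step, using cosine similarity over hypothetical three-dimensional toy vectors. Real embeddings (for example from OpenAI’s embeddings endpoint) have far more dimensions, and the file names and vectors here are made up for illustration.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def search(query_vec: List[float], index: Dict[str, List[float]],
           top_k: int = 2) -> List[Tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs ranked by similarity to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy "embeddings"; a real system would get these from an embedding model.
index = {
    "refund_policy.txt": [0.9, 0.1, 0.0],
    "shipping_faq.txt": [0.2, 0.8, 0.1],
    "release_notes.md": [0.0, 0.1, 0.9],
}
# Hypothetical embedding of a query such as "How do I get my money back?"
query = [0.85, 0.15, 0.05]

for doc_id, score in search(query, index):
    print(f"{doc_id}: {score:.3f}")
```

A Q&A tool would typically pass the top-ranked passages to the LLM as context; vector databases replace the linear scan above once the corpus grows.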

All of these updates and additions to the OpenAI offering are ominous signs for companies specialising in end-user applications and search, shown in red in the image below.

Hence product managers of LLM-based applications will have to create differentiation on two fronts:

1. An exceptional user experience, and
2. A strong proprietary software layer between the UX and the LLMs that adds significant value.

The image below shows the typical technology stack for LLM-based end-user applications.

It is the proprietary software layer that constitutes the “secret sauce” and encapsulates the company’s competitive advantage and differentiation.

In Closing

End-user applications are the most exposed to the growing functionality of LLMs.

No startup is completely safe, but the LLM Development Tools segment offers a more entrenched and sticky position, especially where the software acts as an orchestration layer.

The data-centric tooling segment will only grow in importance as the need for LLM/FM fine-tuning increases, because fine-tuning large models demands a bottom-up approach to curating and engineering fine-tuning data.

Finally, the whole ecosystem now taking shape is dependent on, and at the behest of, the LLM providers. Any change or update from the likes of OpenAI, Cohere and others will ripple throughout the LLM ecosystem.


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.