Gemini From Google

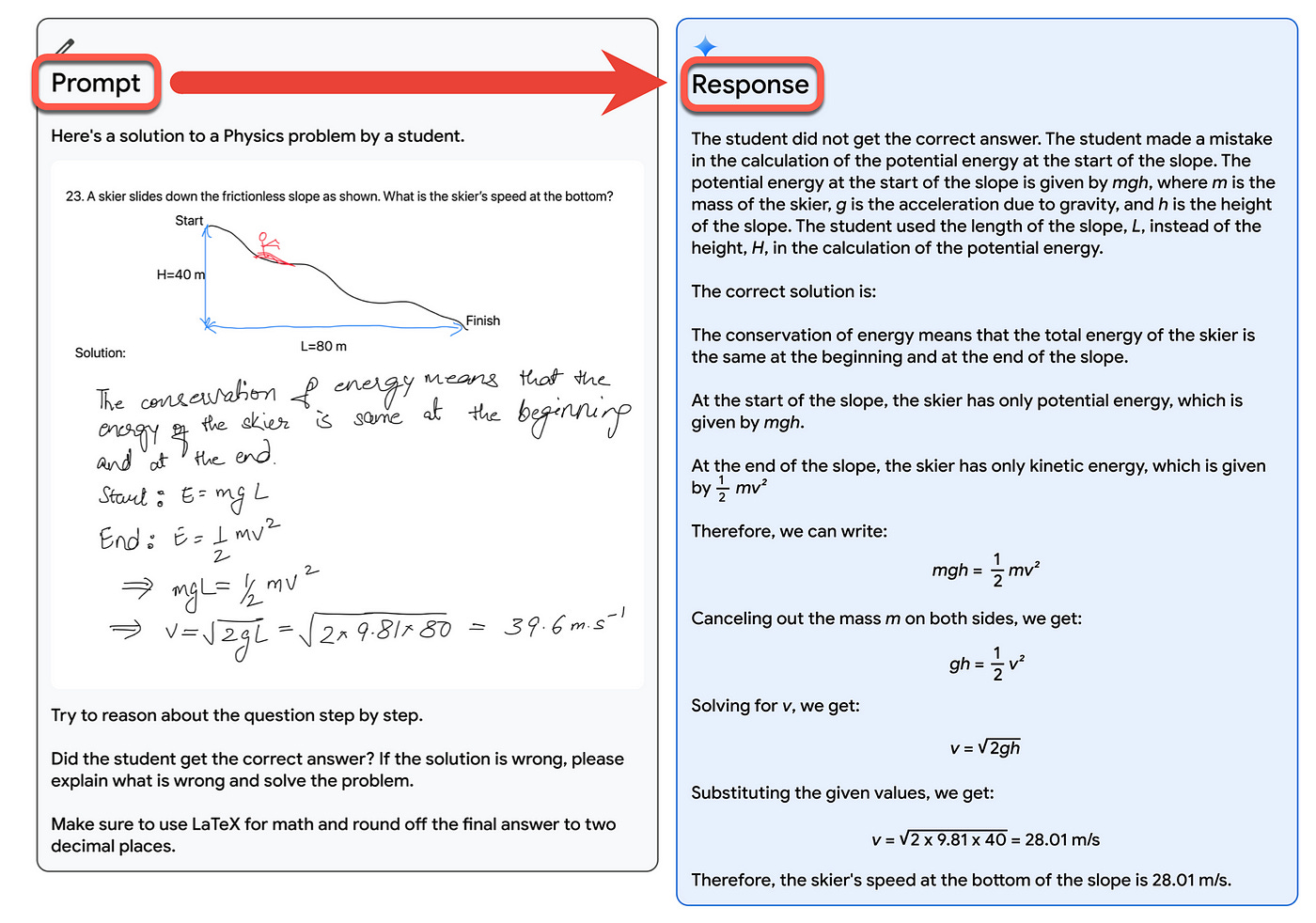

Gemini is described by Google as a native multi-modal Foundation Model, managing multi-modal context seamlessly across text, images, video, audio, and code.

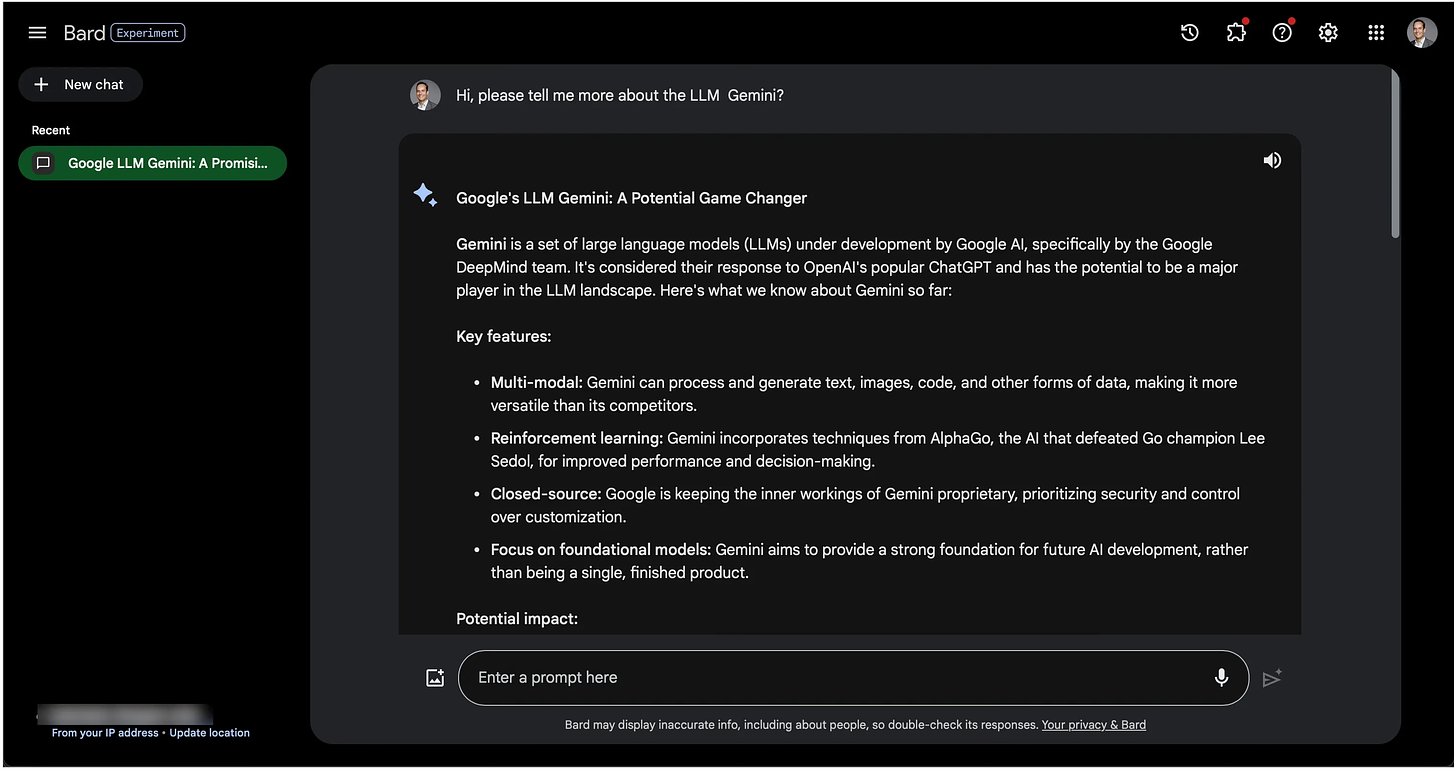

Starting 6 December 2023, Bard is using a fine-tuned version of Gemini Pro for more advanced reasoning, planning, understanding and more.

Introduction

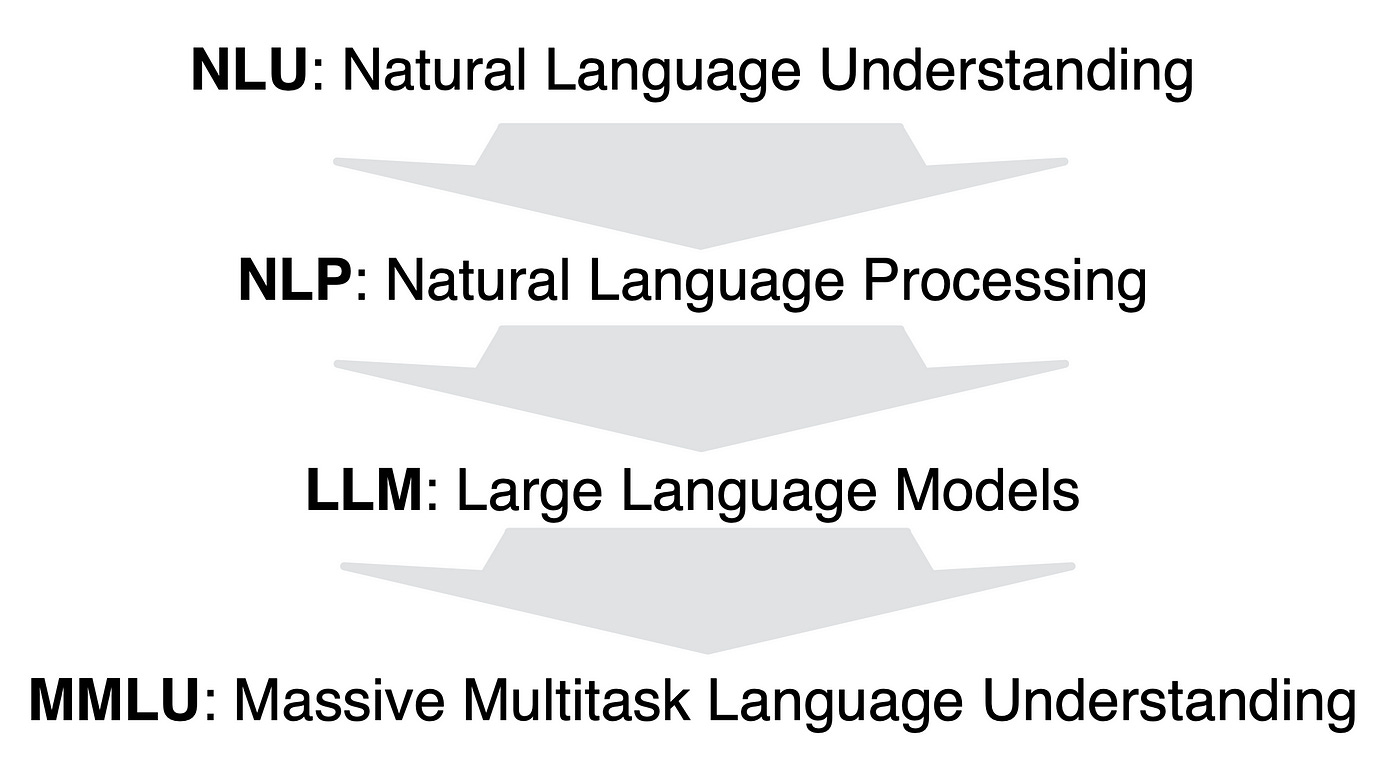

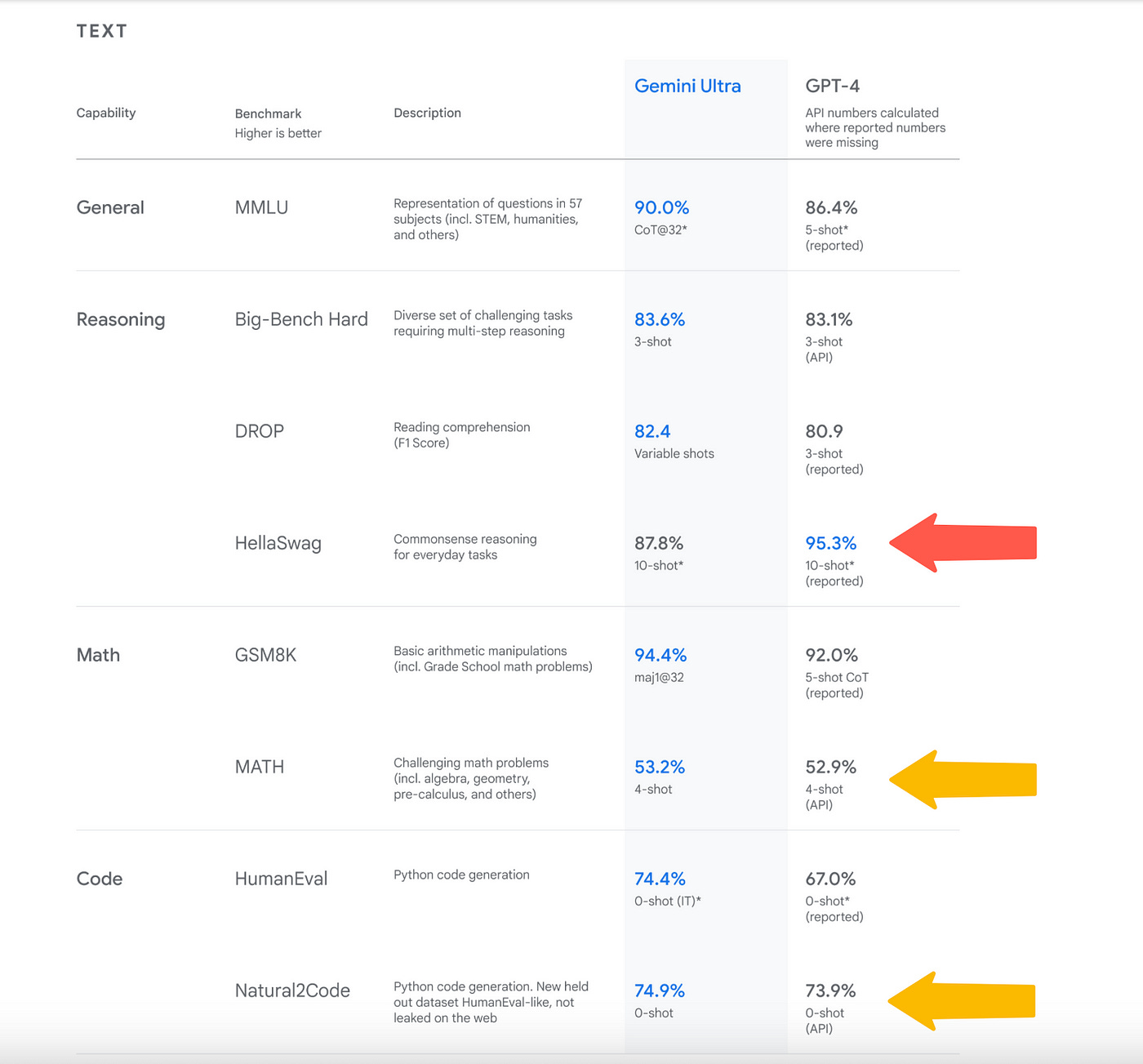

Considering the image below, Google uses the phrase Massive Multitask Language Understanding (MMLU), which seems to be a leap from the notion of LLMs, past the concept of multi-modal Foundation Models (FMs), to a contextually aware multi-modal scenario.

Availability & Products

Gemini Pro

Starting 6 December 2023, Bard is using a fine-tuned version of Gemini Pro for more advanced reasoning, planning, understanding and more.

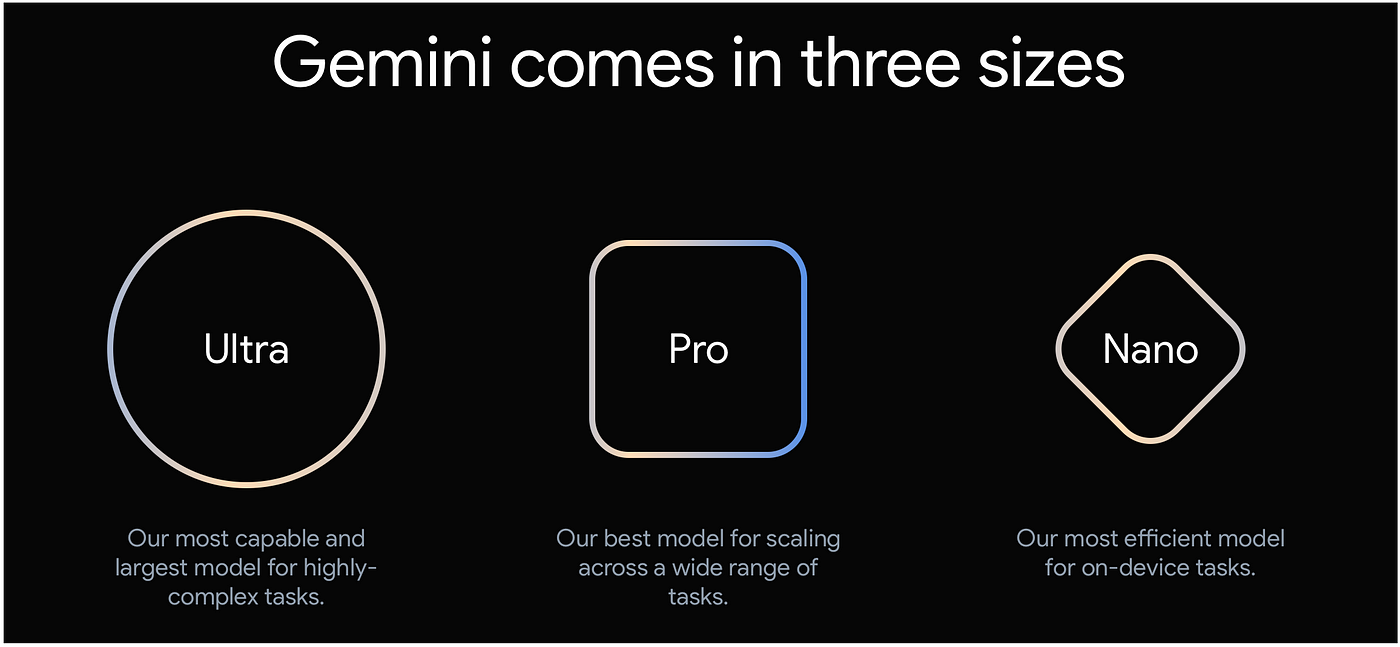

Gemini Nano

Gemini Nano will be available on the Pixel 8 Pro.

In the coming months, Gemini will be available in more of Google’s products and services like Search, Ads, Chrome and Duet AI.

Gemini Search

Google has already started to experiment with Gemini in Search, improving Google’s Search Generative Experience (SGE).

Developing with Gemini

From 13 December 2023, developers and enterprise customers can access Gemini Pro via the Gemini API in Google AI Studio or Google Cloud Vertex AI.

Google AI Studio is a free, web-based developer tool to prototype and launch apps quickly with an API key.

Android developers will also have access to build with Gemini Nano.
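As a rough illustration of the API-key access described above, here is a minimal sketch that builds a text request for Gemini Pro using only the Python standard library. The endpoint path, the `gemini-pro` model name and the JSON shape reflect the public REST interface as I understand it at launch and should be treated as assumptions to verify against Google's current documentation. The helper names `build_request` and `extract_text` are hypothetical, and the request is constructed but deliberately not sent.

```python
import json
import urllib.request

# Assumed endpoint and model name (as published around launch); verify before use.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-pro"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/models/{MODEL}:generateContent?key={api_key}"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a response-shaped dict."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    req = build_request("Explain quantisation in one sentence.", api_key="YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    # To call the API for real, supply a valid key and uncomment:
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_text(json.load(resp)))
```

For anything beyond a quick prototype, Google's official SDKs are likely the better route; the raw request is shown here only to make the moving parts visible.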

Gemini Ultra Is Coming Soon

For Gemini Ultra, Google is currently busy with what they call extensive trust and safety checks, including red-teaming by trusted external parties, and further refining the model using fine-tuning and reinforcement learning from human feedback (RLHF) before making it broadly available.

As part of this process, Google will make Gemini Ultra available to selected customers, developers, partners and safety and responsibility experts for early experimentation and feedback, before rolling it out to developers and enterprise customers in early 2024.

In early 2024, Google plans to launch Bard Advanced. Google describes it as a new, cutting-edge AI experience that gives users access to the best models and capabilities, starting with Gemini Ultra.

Human Level Cognition

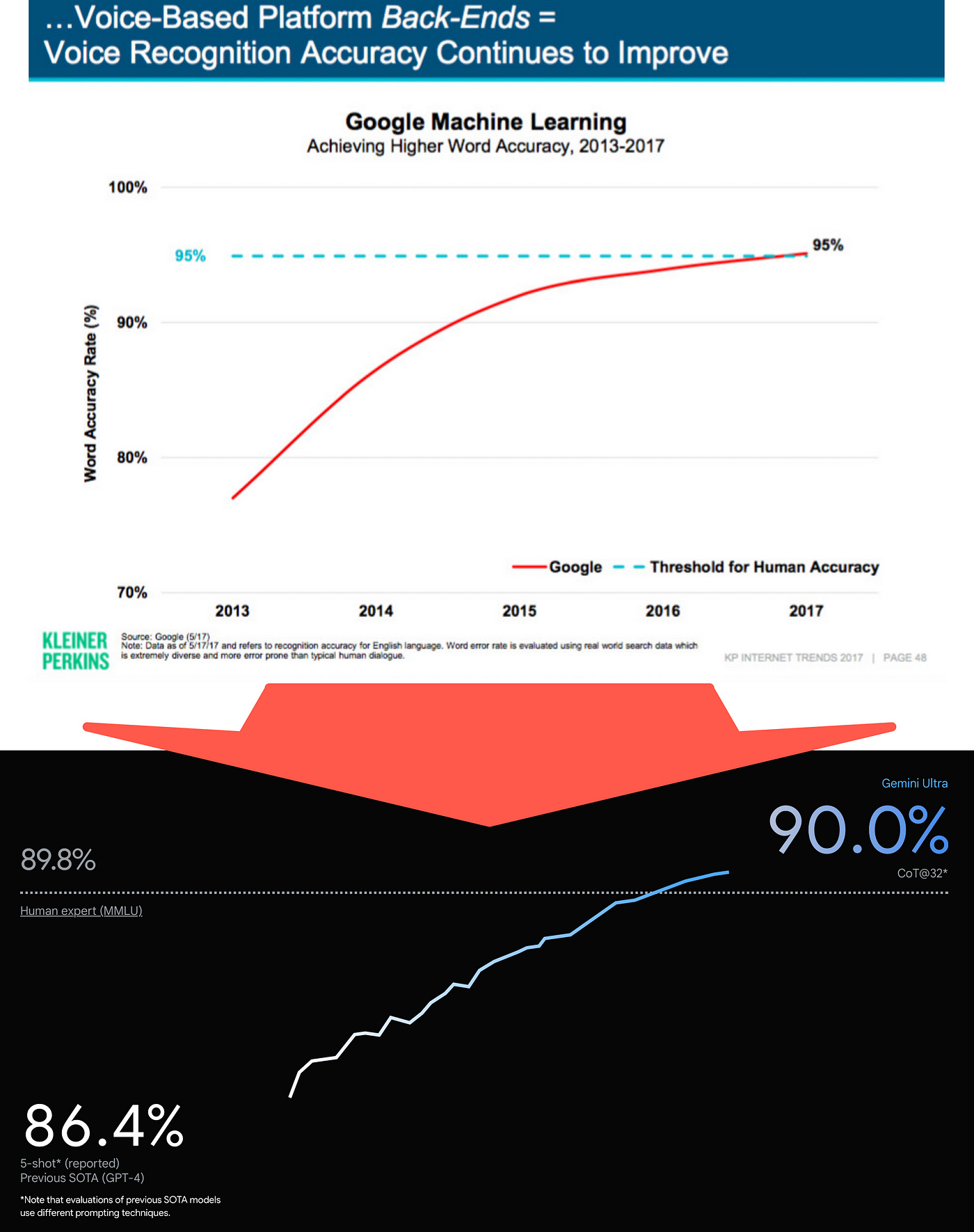

The graph illustrating Gemini Ultra exceeding human expert level on MMLU reminded me of when, in 2017, Google's machine learning ASR exceeded the threshold of human accuracy in speech recognition.

Google also states that Gemini is the first model to outperform human experts on MMLU (Massive Multitask Language Understanding), one of the most popular methods to test the knowledge and problem-solving abilities of AI models.

Bard With Gemini Pro Is Available Now

Bard is currently making use of a custom-tuned version of Gemini Pro for English text use-cases in more than 170 countries.

The version of Gemini Pro available in Bard now is for text-only use, with other modalities being added soon, according to Google.

For a list of regions, below is a link:

Where Bard with Gemini Pro is available

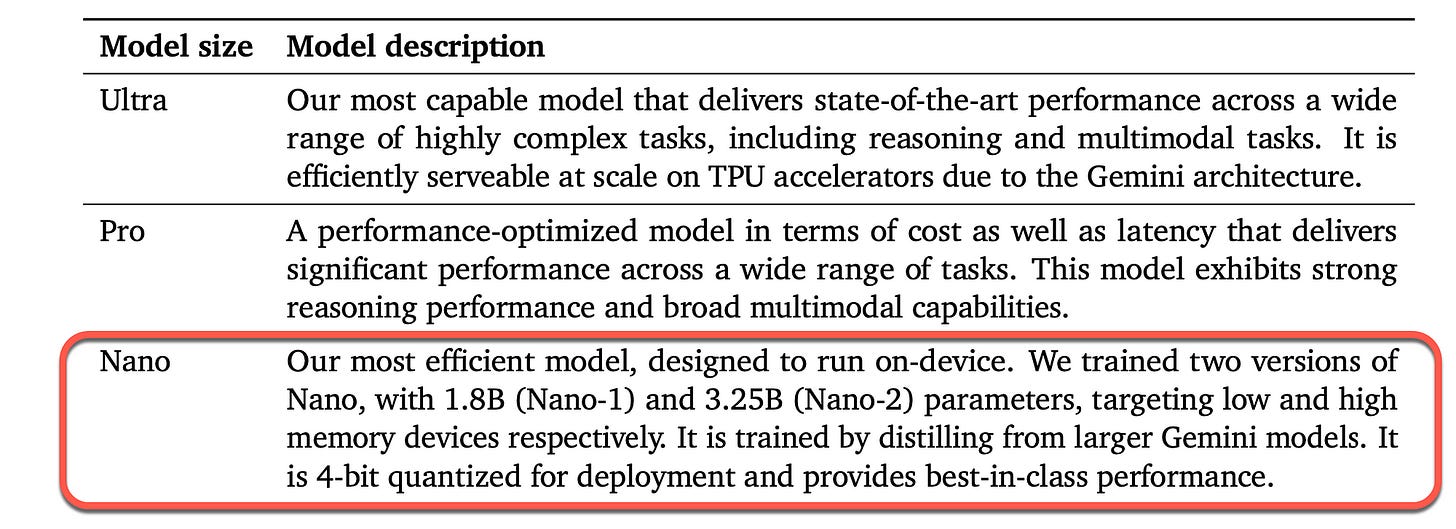

Quantisation

Quantisation is a technique to reduce the computational and memory costs of running inference.

A quantised model requires less memory and storage and, in theory, consumes less energy. It also makes it possible to run models on embedded devices.
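To make the idea concrete, here is a minimal sketch of one common scheme, symmetric per-tensor int8 quantisation, in plain Python. This is an illustration of the general technique only; it is not a description of how Google quantises Gemini Nano, and the function names and sample weights are made up for the example. Each weight is stored as an integer in [-127, 127] plus one shared scale factor, so a 32-bit float (4 bytes) shrinks to a single byte at the cost of a small rounding error.

```python
def quantise_int8(weights):
    """Symmetric per-tensor int8 quantisation: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.003, 0.45, -0.31]  # illustrative values only
    q, scale = quantise_int8(weights)
    restored = dequantise(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print("quantised:", q)
    print("scale:", scale)
    print("largest rounding error:", worst)
```

The rounding error per weight is bounded by half the scale, which is why quantisation tends to work well when the weights in a tensor have a similar magnitude.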

Below is a breakdown of the Gemini models, with Nano being designed to run on a device.

Test Results

Gemini Ultra achieves a groundbreaking score of 90.0%, surpassing human experts in Massive Multitask Language Understanding (MMLU).

MMLU is a test framework covering 57 diverse subjects, including math, physics, history, law, medicine and ethics, to evaluate both world knowledge and problem-solving skills.

Through Google’s novel benchmark approach to MMLU, Gemini leverages its advanced reasoning capabilities, enabling it to deliberate more thoughtfully when faced with challenging questions.

This strategic approach results in substantial enhancements compared to relying solely on initial impressions.
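The Gemini technical report describes this approach as uncertainty-routed chain-of-thought: the model samples several reasoned answers, takes the majority vote when agreement is above a threshold, and otherwise falls back to its single greedy answer. A minimal sketch of that routing step, assuming the answers have already been generated, could look as follows. The function name and the threshold value are hypothetical; according to the report the threshold is tuned per model on validation data.

```python
from collections import Counter


def uncertainty_routed_answer(sampled_answers, greedy_answer, threshold):
    """Pick the majority-vote answer if consensus is high enough, else the greedy one.

    sampled_answers: answers from several chain-of-thought samples, e.g. ["B", "B", "A"]
    greedy_answer:   the model's single greedy (first-impression) answer
    threshold:       minimum share of votes the majority answer must reach (assumed value)
    """
    if not sampled_answers:
        return greedy_answer
    answer, votes = Counter(sampled_answers).most_common(1)[0]
    consensus = votes / len(sampled_answers)
    return answer if consensus >= threshold else greedy_answer


if __name__ == "__main__":
    # Strong agreement among samples: trust the majority vote.
    print(uncertainty_routed_answer(["B", "B", "B", "A"], greedy_answer="A", threshold=0.7))
    # Samples disagree: fall back to the greedy answer.
    print(uncertainty_routed_answer(["B", "A", "C", "D"], greedy_answer="A", threshold=0.7))
```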

In Conclusion

The four major impediments to large-scale enterprise adoption of LLMs are:

1. Inference latency

2. Cost in terms of input and output tokens

3. Privacy and the lack of easy quantisation

4. Hallucination

Given that Gemini will form part of Vertex AI Studio, and with Google introducing Gemini Nano, it seems that items one, three and four are being addressed.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

Gemini is built from the ground up for multimodality - reasoning seamlessly across image, video, audio, and code.

Introducing Gemini: our largest and most capable AI model

Gemini is our most capable and general model, built to be multimodal and optimized for three different sizes: Ultra…

Bard gets its biggest upgrade yet with Gemini

We're starting to bring Gemini's advanced capabilities into Bard.

https://storage.googleapis.com/deepmind-media/gemini/gemini_1_report.pdf