Generative AI Prompt Pipelines

Prompt Pipelines extend prompt templates by automatically injecting contextual reference data for each prompt.

Prompts are the input format for Generative AI built on Large Language Models (LLMs).

LLM prompting can be divided into four segments, listed below.

Prompt Engineering

Prompt Templating

Prompt Chaining

Prompt Pipelines

This article describes the use of prompt pipelines.

In Machine Learning, a pipeline can be described as an end-to-end construct which orchestrates a flow of events and data.

The pipeline is initiated by a trigger; based on certain events and parameters, a flow is followed which results in an output.

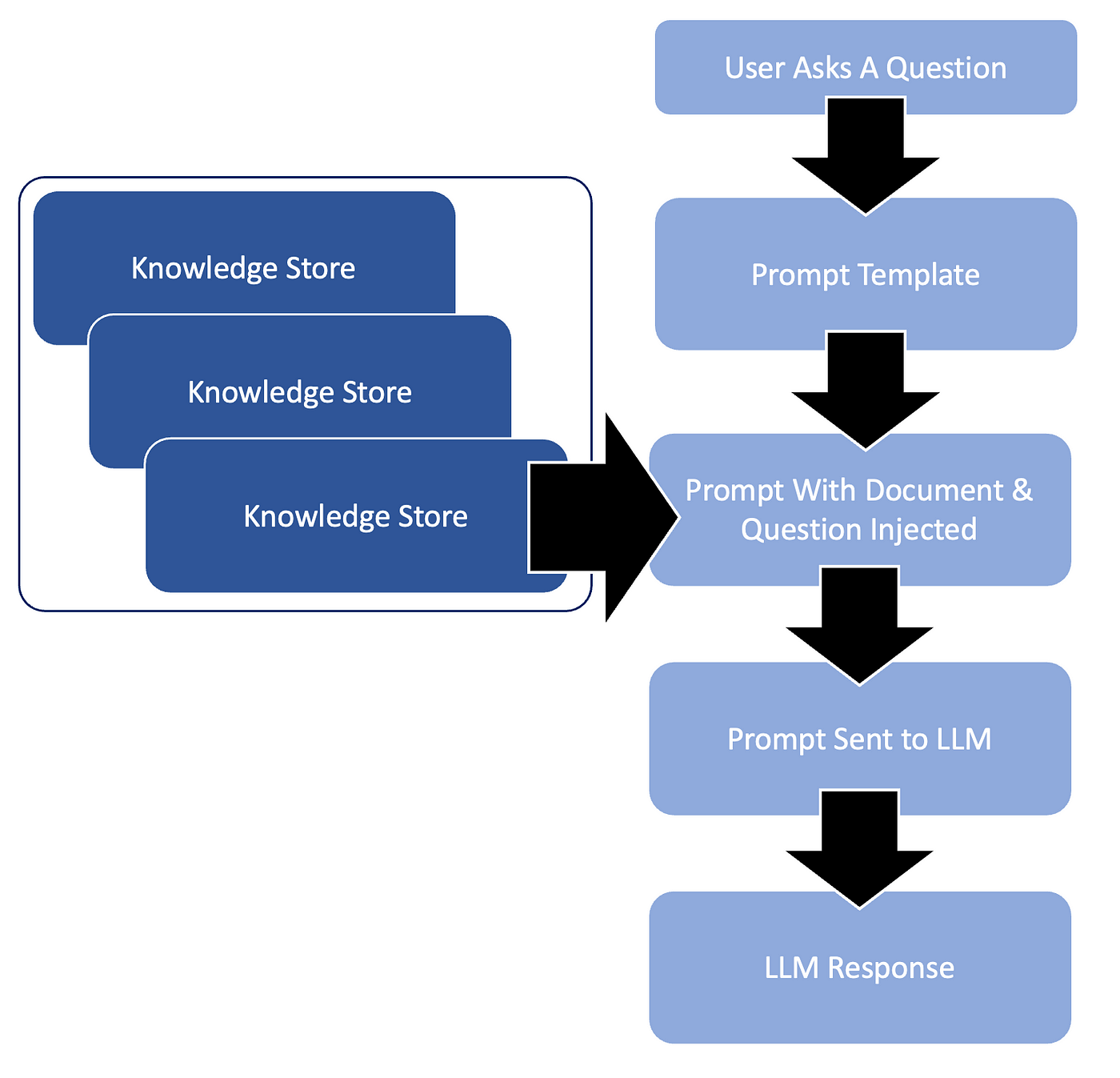

In the case of a prompt pipeline, the flow is in most cases initiated by a user request. The request is directed to a specific prompt template.

Prompt Pipelines can also be described as an intelligent extension to prompt templates.

The variables or placeholders in the pre-defined prompt template are populated with the question from the user and the relevant knowledge retrieved from the knowledge store. This step is sometimes loosely called prompt injection, although that term more commonly refers to a security attack.

The data from the knowledge store acts as a reference for the question to be answered. Having this body of information available helps reduce LLM hallucination. It also keeps the LLM from relying on dated training data that may no longer be accurate.

Subsequently, the composed prompt is sent to the LLM and the LLM's response is returned to the user.
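The flow described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the in-memory `KNOWLEDGE_STORE`, the keyword-overlap `retrieve` function, the simplified `PROMPT_TEMPLATE` and the `call_llm` stub are all hypothetical stand-ins, not part of any particular framework.

```python
# Hypothetical in-memory knowledge store; a real pipeline would query a
# search index or vector database instead.
KNOWLEDGE_STORE = [
    "The Colossus of Rhodes was a statue of the Greek sun god Helios.",
    "The Great Pyramid of Giza is the oldest of the Seven Wonders.",
    "The Lighthouse of Alexandria stood on the island of Pharos.",
]

# Simplified template with two placeholders: reference data and the question.
PROMPT_TEMPLATE = (
    "Answer the question using only the related text.\n\n"
    "Related text: {documents}\n\n"
    "Question: {query}\n\n"
    "Answer:"
)


def retrieve(query, store, top_k=1):
    """Rank documents by the number of words they share with the query."""
    query_words = set(query.lower().replace("?", "").split())

    def score(doc):
        return len(query_words & set(doc.lower().replace(".", "").split()))

    return sorted(store, key=score, reverse=True)[:top_k]


def compose_prompt(query, documents):
    """Populate the template placeholders with reference data and the question."""
    return PROMPT_TEMPLATE.format(documents=" ".join(documents), query=query)


def call_llm(prompt):
    """Assumed stand-in for a real LLM call; returns a placeholder string."""
    return f"<LLM response to a {len(prompt)}-character prompt>"


def run_pipeline(query):
    """Trigger: a user request. Output: the composed prompt and the response."""
    documents = retrieve(query, KNOWLEDGE_STORE)
    prompt = compose_prompt(query, documents)
    return {"prompt": prompt, "results": [call_llm(prompt)]}


output = run_pipeline("What did the Colossus of Rhodes depict?")
print(output["prompt"])
```

Printing the composed prompt rather than only the response makes it easy to inspect which reference data reached the LLM for a given question.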

Below is an example of a prompt template prior to the document and the question data being injected.

prompt_template_text="""Synthesize a comprehensive answer from the following text for the given question.

Provide a clear and concise response that summarizes the key points and information presented in the text.

Your answer should be in your own words and be no longer than 50 words.

\n\n Related text: {join(documents)} \n\n Question: {query} \n\n Answer:"""

Next is a question asked by the user, which is injected into the template together with the knowledge store data.

output = pipe.run(query="How does Rhodes Statue look like?")

print(output["results"])

And finally, the result for the question:

['The head would have had curly hair with evenly spaced spikes of bronze or silver flame radiating, similar to the images found on contemporary Rhodian coins.']

Final Thoughts

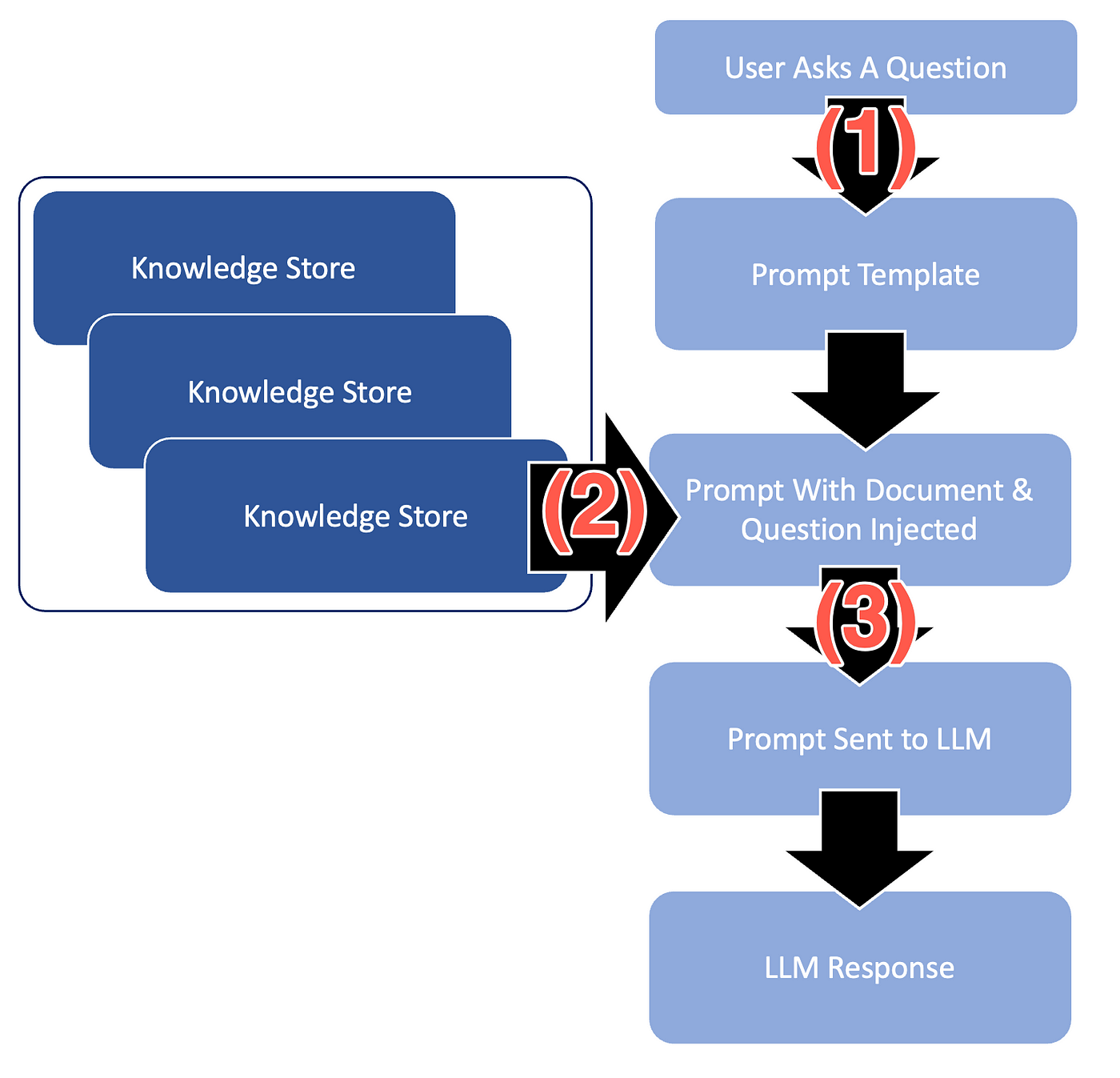

Considering the image above, in a follow-up article I would like to discuss how a prompt pipeline can be implemented for a wider domain with multiple user input classes.

(1) User input should be categorised and assigned to a specific and applicable prompt template. This categorisation is analogous to assigning user input to an intent.

Based on the classification assigned to the user input, the correct data needs to be extracted (2) and injected (3) into the prompt template.
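A minimal sketch of these three steps could look like the following. Everything here is an assumption made for illustration: the two categories, the keyword-based `classify` function, and the `TEMPLATES` and `DATA_SOURCES` dictionaries are hypothetical, and a real implementation would more likely use a trained intent classifier and a proper knowledge store.

```python
# Hypothetical categories, each with its own prompt template and data source.
TEMPLATES = {
    "billing": "Use the billing notes to answer.\n\nNotes: {context}\n\nQuestion: {query}\n\nAnswer:",
    "shipping": "Use the shipping notes to answer.\n\nNotes: {context}\n\nQuestion: {query}\n\nAnswer:",
}
DATA_SOURCES = {
    "billing": ["Invoices are issued on the first day of each month."],
    "shipping": ["Standard delivery takes three to five working days."],
}
KEYWORDS = {
    "billing": {"invoice", "invoices", "payment", "refund"},
    "shipping": {"delivery", "shipping", "parcel"},
}


def classify(query):
    """(1) Assign the user input to a category by keyword overlap."""
    words = set(query.lower().strip("?.!").split())
    best = max(KEYWORDS, key=lambda category: len(words & KEYWORDS[category]))
    # Return None when no category keyword appears in the query at all.
    return best if words & KEYWORDS[best] else None


def build_prompt(query):
    category = classify(query)
    if category is None:
        raise ValueError("no matching category for this input")
    # (2) Extract the data that belongs to the assigned category.
    context = " ".join(DATA_SOURCES[category])
    # (3) Inject the data and the question into that category's template.
    return category, TEMPLATES[category].format(context=context, query=query)


category, prompt = build_prompt("How long does delivery take?")
print(category)
print(prompt)
```

Keeping the classification step separate from the template lookup means new categories can be added by extending the dictionaries, without touching the pipeline logic.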


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.