GPT-4o mini

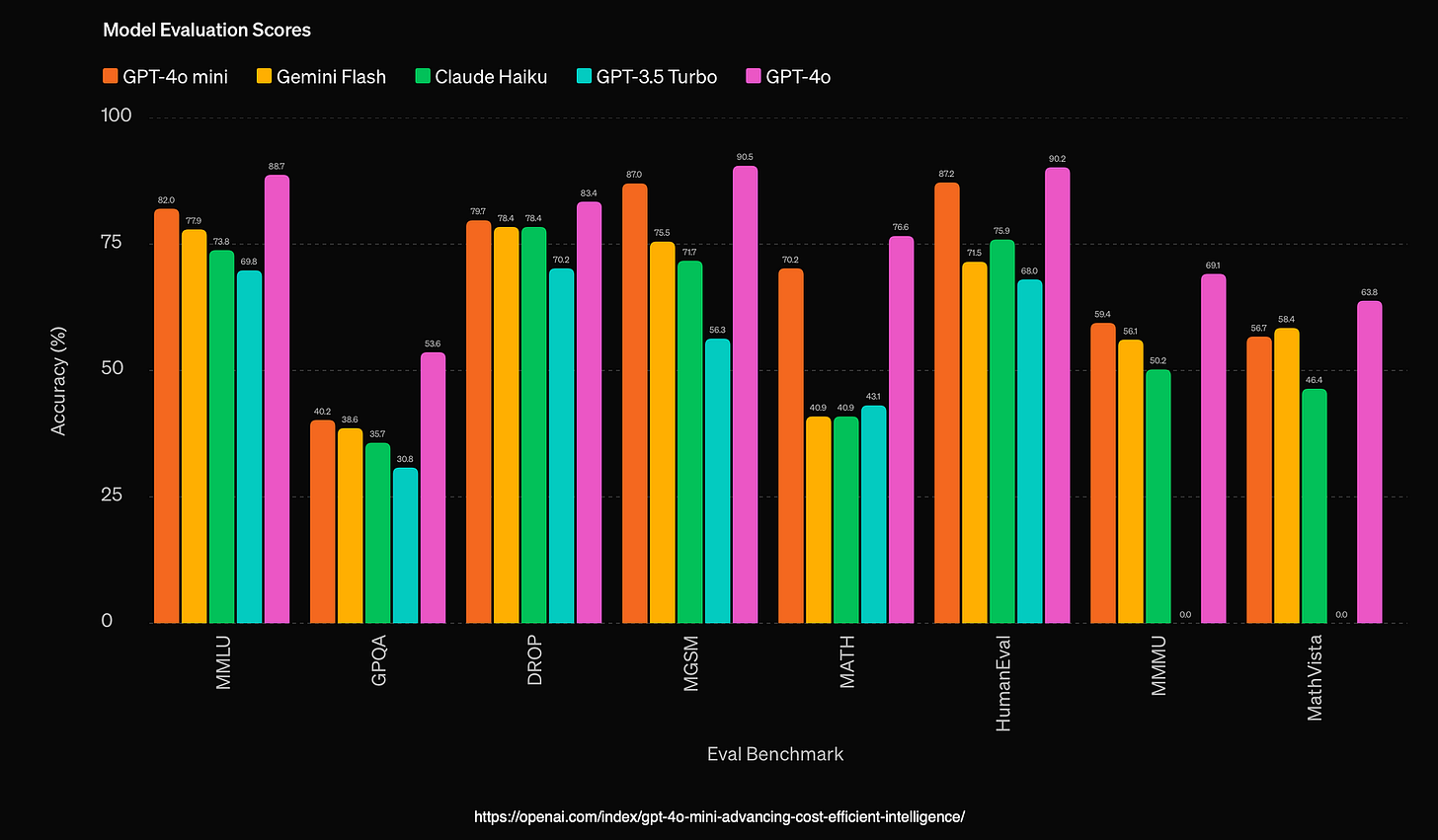

OpenAI states that it is advancing cost-efficient intelligence with GPT-4o mini, its most cost-efficient Small Language Model.

Introduction

Today Sam Altman stated that in 2022 the best model in the world was text-davinci-003, which was much worse than GPT-4o mini and cost 100 times more.

Advantages of GPT-4o mini

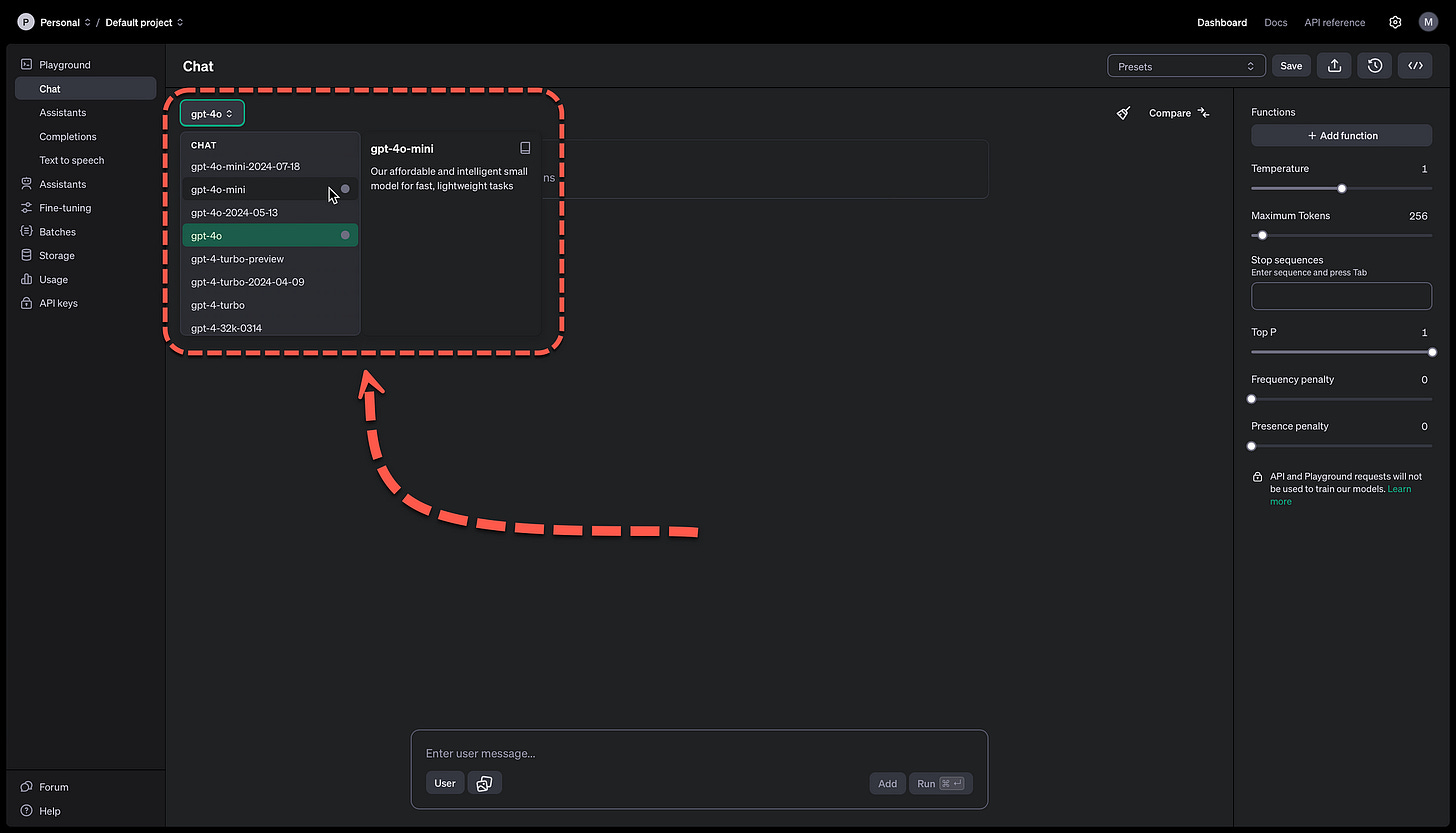

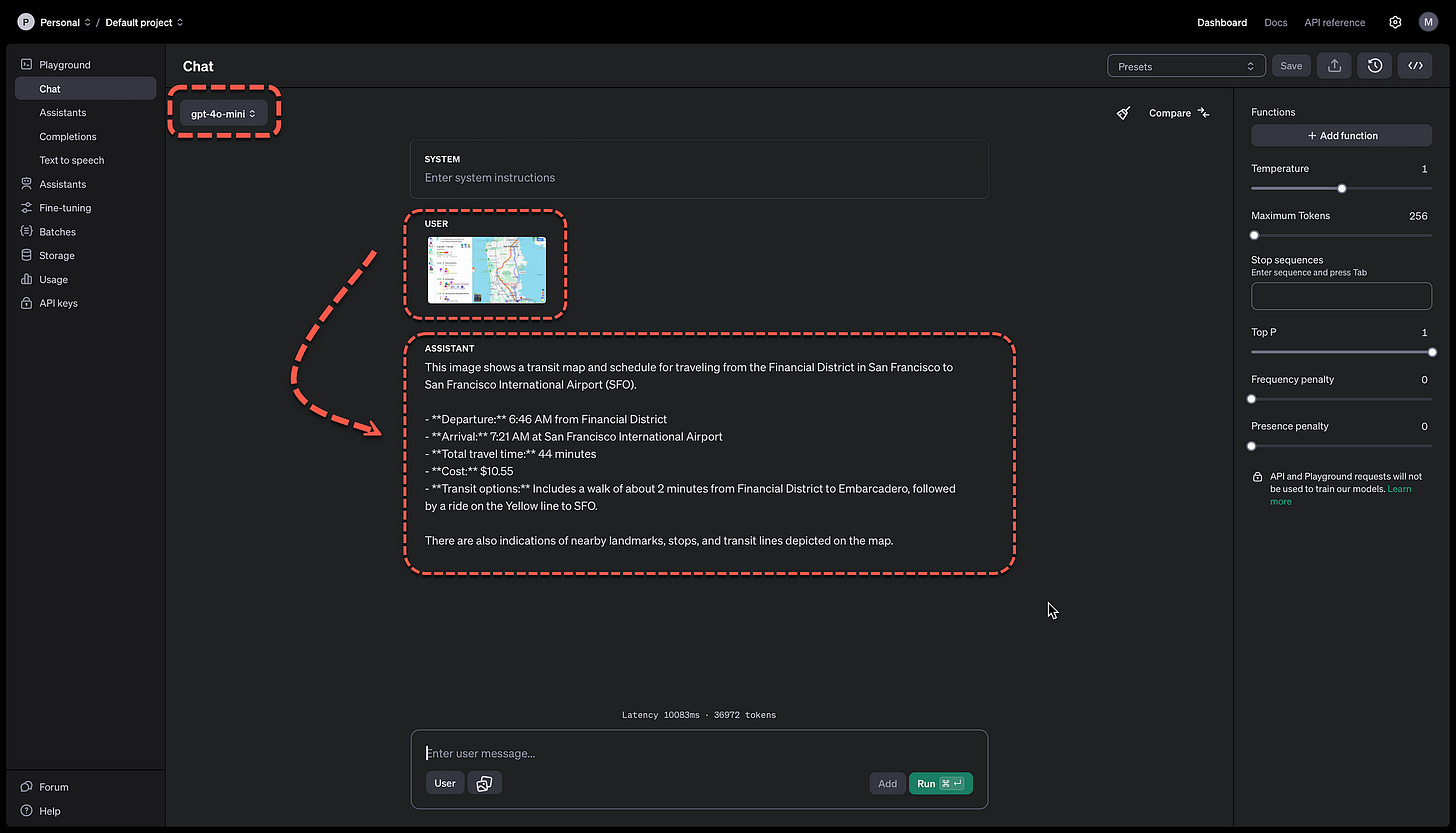

GPT-4o mini currently supports text and vision in the API and Playground.

Support for text, image, video and audio inputs and outputs is coming in the future.

The model has a context window of 128K tokens and knowledge up to October 2023.

The model has multilingual capabilities.

It offers enhanced inference speed.

The combination of inference speed and cost makes the model ideal for agentic applications that make multiple parallel calls to the model.
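To make the "multiple parallel calls" pattern concrete, here is a minimal sketch of an agent fanning out several sub-tasks at once with `asyncio.gather`, with a semaphore capping how many requests are in flight. The `fan_out` helper, the `max_concurrency` value and `fake_model_call` are hypothetical illustrations, not part of any OpenAI API. The stub only simulates latency. In a real application `call_model` could wrap `AsyncOpenAI().chat.completions.create(model="gpt-4o-mini", messages=[...])` from the official `openai` Python SDK.

```python
import asyncio
from typing import Awaitable, Callable


async def fan_out(
    prompts: list[str],
    call_model: Callable[[str], Awaitable[str]],
    max_concurrency: int = 5,  # hypothetical cap, tune to your rate limits
) -> list[str]:
    """Send all prompts concurrently (bounded by a semaphore); return answers in input order."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_call(prompt: str) -> str:
        async with semaphore:  # limit the number of in-flight requests
            return await call_model(prompt)

    # gather() returns results in the same order as its arguments
    return await asyncio.gather(*(bounded_call(p) for p in prompts))


async def fake_model_call(prompt: str) -> str:
    """Hypothetical stand-in for a hosted model call; only simulates network latency."""
    await asyncio.sleep(0.1)
    return f"answer to: {prompt}"


if __name__ == "__main__":
    sub_tasks = ["summarise the ticket", "classify the intent", "extract the order id"]
    print(asyncio.run(fan_out(sub_tasks, fake_model_call)))
```

Because the three simulated calls wait concurrently, the whole batch takes roughly as long as one call rather than three. This is why per-call speed and price matter so much for agentic workloads.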

Fine-tuning for GPT-4o mini will be rolled out soon.

Cost: 15 cents per million input tokens and 60 cents per million output tokens.
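The pricing above can be turned into a quick budget estimate. This is a minimal sketch that assumes the launch prices quoted here. The workload in the example (10,000 calls with 2,000 input and 300 output tokens each) is purely hypothetical.

```python
# GPT-4o mini launch prices, in USD per one million tokens (as quoted above).
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload at GPT-4o mini launch prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical agent workload: 10,000 calls, 2,000 input and 300 output tokens per call.
calls = 10_000
cost = estimate_cost(calls * 2_000, calls * 300)
print(f"${cost:.2f}")  # 20M input tokens ($3.00) + 3M output tokens ($1.80)
```

Output tokens cost four times as much as input tokens. Keeping responses short therefore matters more than trimming prompts.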

Considerations

With open-source SLMs, the exciting part is running the model locally and having full control over it through local inference.

This does not apply to OpenAI, whose models are only available through its commercial hosted API.

Hence OpenAI focuses on speed, cost and capability, while also following the trend towards small models.

There are highly capable open-source, text-based SLMs, such as Orca-2, Phi-3 and TinyLlama, to name a few.

The differentiators for GPT-4o mini will have to be cost, speed, capability and available modalities.

Why Small Language Models?

Before delving into Small Language Models (SLMs), it’s important to consider the current use-cases for Large Language Models (LLMs).

LLMs have been widely adopted due to several key characteristics, including:

Natural Language Generation

Common-Sense Reasoning

Dialogue and Conversation Context Management

Natural Language Understanding

Handling Unstructured Input Data

Knowledge-Intensive Nature

While LLMs have delivered on most of these promises, one area remains challenging: their knowledge-intensive nature.

We have opted to supersede the LLM's trained knowledge with In-Context Learning (ICL), supplied through Retrieval-Augmented Generation (RAG) implementations.

RAG serves as an equaliser for Small Language Models (SLMs): it compensates for their limited knowledge-intensive capabilities.

Apart from these weaker knowledge-intensive capabilities, SLMs can handle the other five characteristics listed above.
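The RAG pattern described above can be sketched as two steps. First, retrieve the passages most relevant to the question. Second, place them in the prompt so the model answers from context rather than from its trained knowledge. This is a toy illustration: the word-overlap scoring is a deliberately simple stand-in for the embedding-based vector search a production RAG system would use, and `retrieve`, `build_prompt` and the sample documents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-cased word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query and return the top k."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Inject the retrieved passages into the prompt (in-context learning)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


docs = [
    "GPT-4o mini has a context window of 128K tokens.",
    "GPT-4o mini has knowledge up to October 2023.",
    "TinyLlama is an open-source small language model.",
]
print(build_prompt("What is the context window of GPT-4o mini?", docs, k=1))
```

The model only needs to read and reason over the supplied passage, not recall the fact itself. This is why a small model paired with good retrieval can close much of the knowledge gap.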

Small Language Models Offer Several Advantages:

Local inference

They enable efficient on-device processing, reducing the need for cloud-based resources. This applies to SLMs in general; OpenAI’s offering is, of course, only available through its commercial API.

Cost

Small models are more cost-effective to run, requiring less computational power and storage.
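A rough way to see the storage difference is to estimate the memory needed for the model weights alone: parameter count times bits per parameter, divided by 8. This sketch counts weights only and ignores the KV cache, activations and runtime overhead, so real requirements are higher. It uses the published parameter counts of two of the open models mentioned earlier. The choice of 16-bit and 4-bit precision is an illustrative assumption.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Published parameter counts; precisions are illustrative (16-bit vs 4-bit quantised).
models = {"TinyLlama (1.1B)": 1.1e9, "Phi-3-mini (3.8B)": 3.8e9}
for name, params in models.items():
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

At 4-bit precision, a model of a few billion parameters fits comfortably in the memory of a typical laptop. This is what makes local inference practical.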

Privacy

By processing data locally, they enhance user privacy and reduce data exposure risks.

Open Source Models

They provide flexibility and customisation options, allowing users to modify and adapt models to their specific needs.

Model Control & Management

They allow for easier control and management, enabling fine-tuning and optimisation for particular tasks without relying on external dependencies.


I’m currently the Chief Evangelist @ Kore.ai. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence/