HF MCP Server

Connect HuggingFace with AI Agents through the Model Context Protocol (MCP)

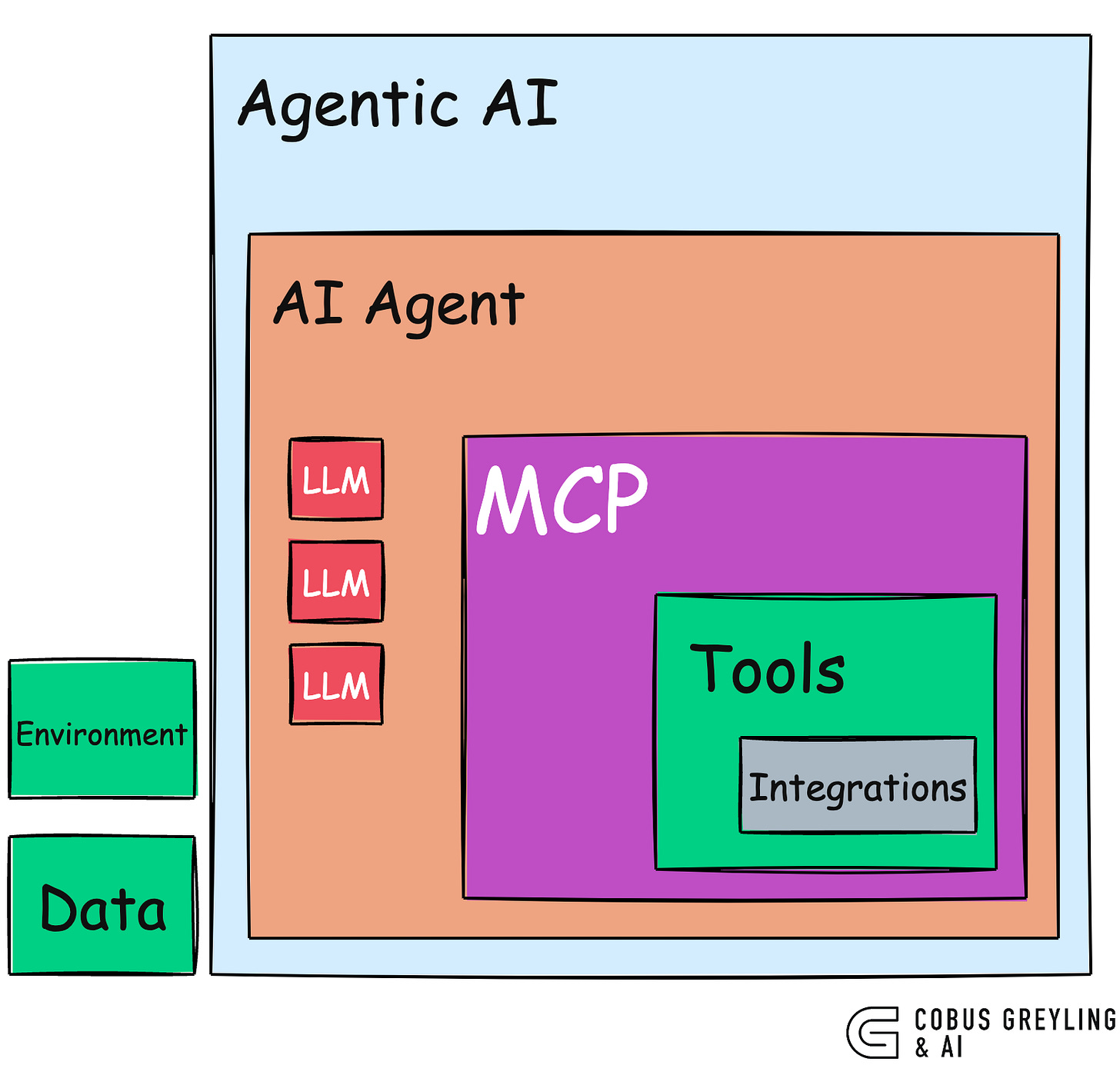

First, Why Does MCP Matter?

The Model Context Protocol …traditionally, AI Agents have been limited by their internal knowledge bases or tightly controlled integrations via tools.

MCP breaks down these barriers by providing a secure, standardised framework for accessing external tools and data sources.

This means AI Agents can now access real-time data, leverage specialised models, or even interact with user-created applications.

For developers, MCP offers a unified way to integrate their AI systems with a variety of platforms, reducing the complexity of building custom integrations.

The HuggingFace Advantage

The HuggingFace MCP Server takes this concept to the next level by connecting AI Agents to one of the most vibrant ML ecosystems available.

HuggingFace hosts thousands of pre-trained models covering tasks like natural language processing, computer vision, audio processing and more.

It also offers meticulously curated datasets and Spaces — interactive environments where developers showcase ML-powered applications…

With the MCP Server, an AI Agent can, for example:

Search for relevant models

For example, if you are looking for a model which suits a specific task, the assistant can query HuggingFace’s model hub and recommend the best fit.

Analyse Datasets

The AI Agent, integrated with the HF MCP Server, can retrieve a dataset from HuggingFace, perform exploratory analysis and summarise key insights.

Interact with Spaces

Want to test a new ML-powered app? The assistant can navigate HuggingFace Spaces, interact with the app, and provide real-time feedback or results.

This level of integration transforms AI Agents from static tools into dynamic collaborators, capable of leveraging the latest advancements in machine learning to solve real-world problems.

Why OpenAI?

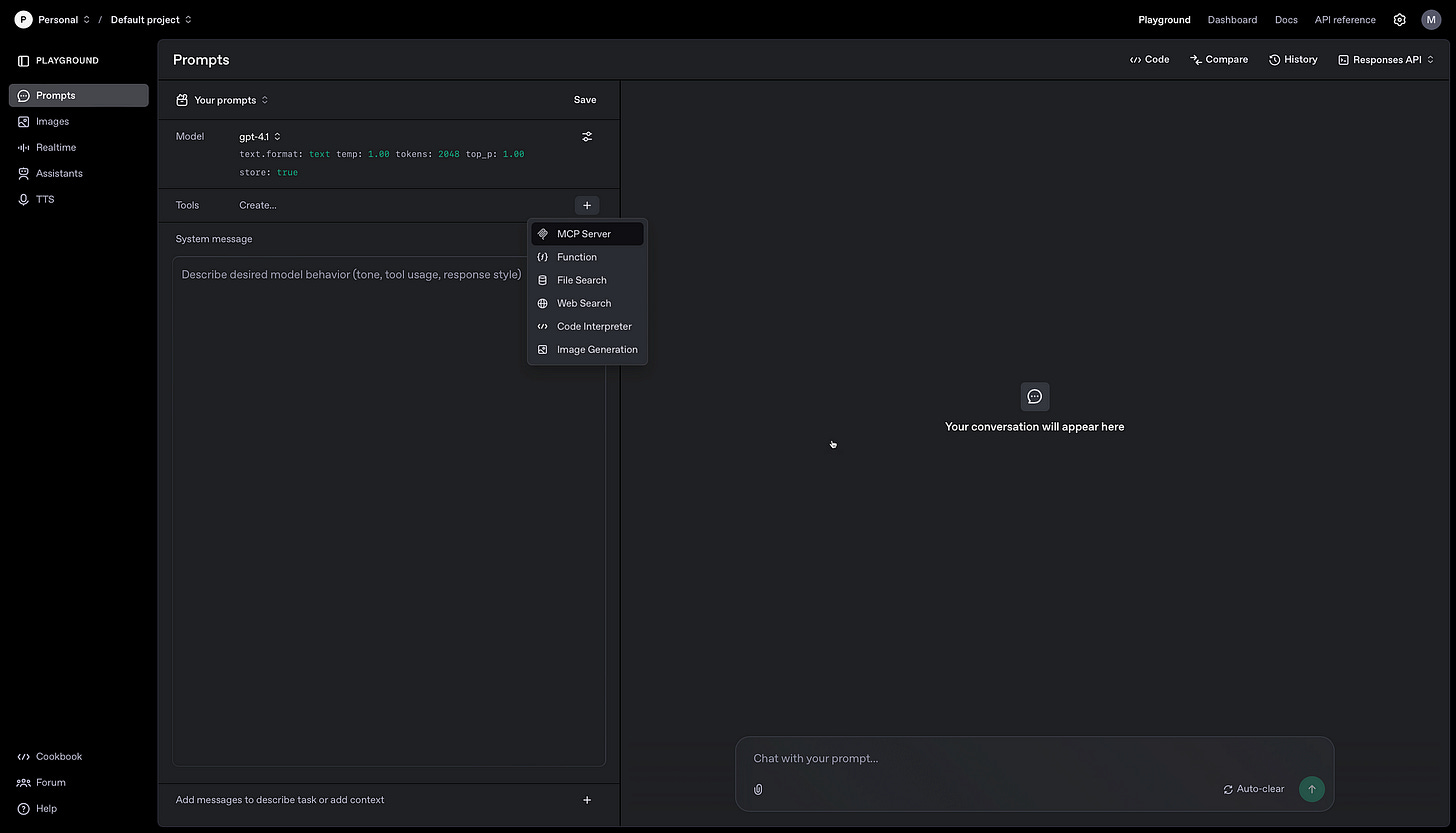

One of the easiest ways to demonstrate and use the HF MCP Server is via the OpenAI no-code console.

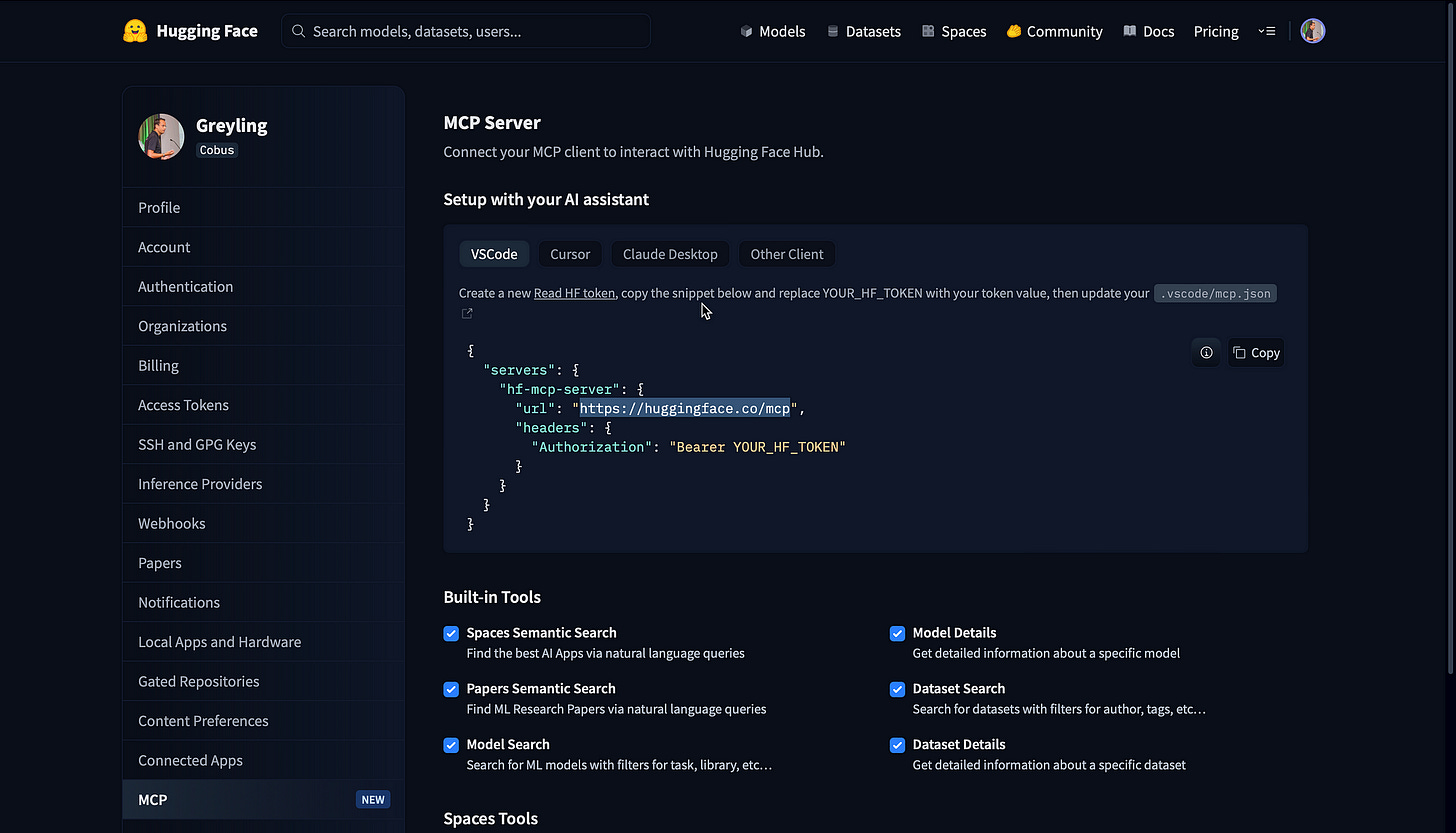

All you need to access the HF MCP Server is its URL and an HF access token, which you can generate for free from HF.

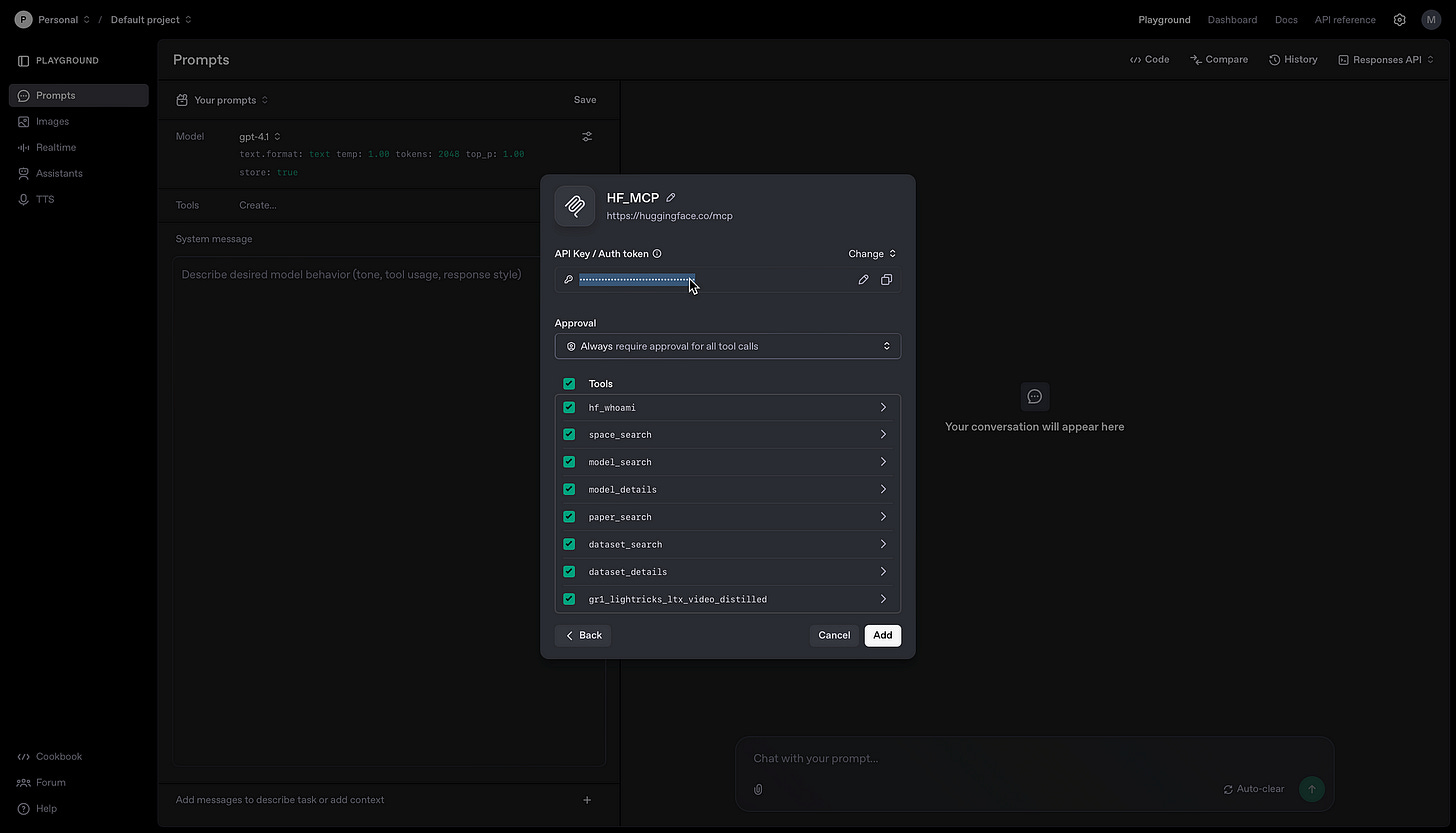

The OpenAI development console then lists all the available HF MCP Server tools. As seen below…in my simple demo I select all of them.

And I also set the approval to always ask…the only reason I do this is to know explicitly when the HF MCP Server is called.

There is still an element of the OpenAI AI Agent deciding when and where the MCP server should be called.
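For readers working through the API rather than the console, the "always ask" setting surfaces as approval requests that the caller has to answer. The sketch below shows one way such requests might be handled. The helper name `approve_requests` and the sample item are assumptions for illustration, and the item fields (`mcp_approval_request`, `mcp_approval_response`, `approval_request_id`) should be checked against the current OpenAI documentation.

```python
def approve_requests(output_items: list[dict], allow: set[str]) -> list[dict]:
    """Build approval responses for pending MCP calls.

    Only tools whose names are in `allow` are approved; the rest are declined.
    """
    responses = []
    for item in output_items:
        if item.get("type") != "mcp_approval_request":
            continue  # ignore ordinary messages and completed tool calls
        responses.append({
            "type": "mcp_approval_response",
            "approval_request_id": item["id"],
            "approve": item.get("name") in allow,
        })
    return responses


# Hypothetical output item, shaped like a pending approval request.
pending = [{
    "type": "mcp_approval_request",
    "id": "mcpr_123",
    "name": "dataset_search",
    "arguments": "{}",
    "server_label": "huggingface",
}]
print(approve_requests(pending, allow={"dataset_search"}))
```

Approving per tool name keeps the explicit visibility described above while avoiding a manual click for tools you already trust.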

Simple Walk-Through

As seen below, under Tools, click the plus sign…a list of options is shown; select MCP Server here.

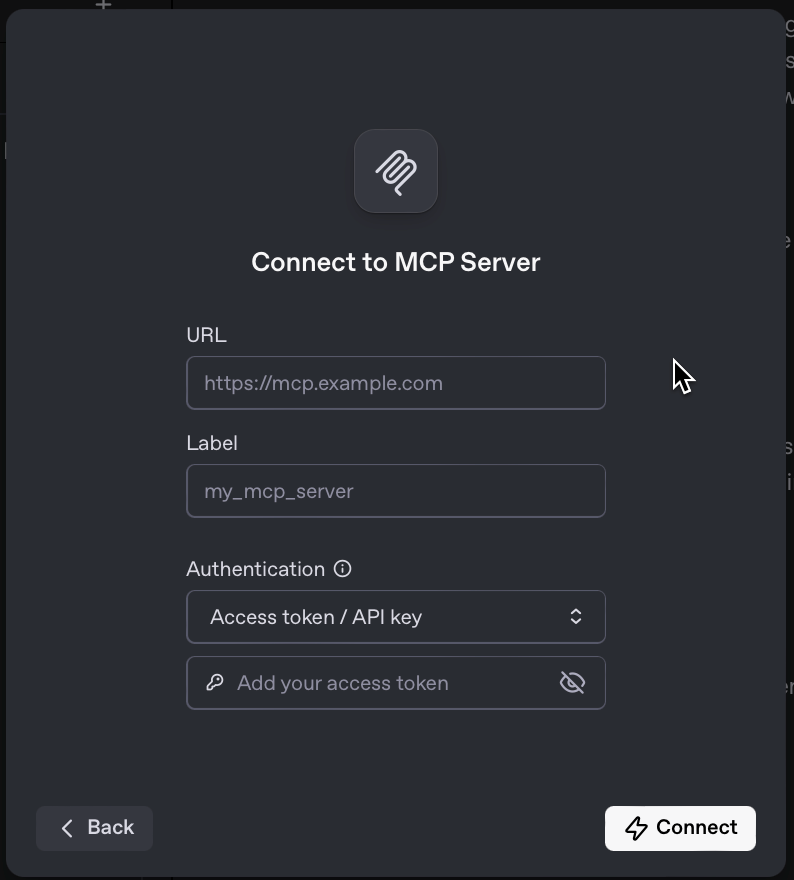

When prompted, under URL enter: https://huggingface.co/mcp, then under Label, enter any name by which you will recognise the server.

Under Authentication, enter your HuggingFace access token.
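The same console fields might be expressed in code as a tool definition for OpenAI's Responses API. This is a minimal sketch, not the console's actual export: the helper name `build_hf_mcp_tool`, the label `huggingface` and the placeholder token are assumptions for illustration, and the field names should be verified against the current OpenAI documentation.

```python
def build_hf_mcp_tool(hf_token: str, label: str = "huggingface") -> dict:
    """Return an MCP tool definition mirroring the console fields above."""
    if not hf_token:
        raise ValueError("A HuggingFace access token is required")
    return {
        "type": "mcp",
        "server_label": label,                               # the Label field
        "server_url": "https://huggingface.co/mcp",          # the URL field
        "headers": {"Authorization": f"Bearer {hf_token}"},  # the Authentication field
        "require_approval": "always",                        # ask before every call
    }


tool = build_hf_mcp_tool("hf_xxx")  # placeholder, not a real token
print(tool["server_label"], tool["server_url"])
```

A dictionary like this would typically go in the `tools` list of a request. Reading the token from an environment variable rather than hard-coding it is the safer choice.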

I ticked all the available tools listed…this automatic process of listing all the tools is a nice way of getting an understanding of the scope of the MCP server.
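Under the hood, this tool listing corresponds to the MCP `tools/list` method, which is a JSON-RPC 2.0 request. The sketch below builds that request and pulls the tool names out of a response. The sample response is hypothetical and heavily trimmed, and the transport details (HTTP headers, session handling) are left out.

```python
import json


def tools_list_request(request_id: int = 1) -> str:
    """Serialise a JSON-RPC 2.0 request asking an MCP server for its tools."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def tool_names(response_text: str) -> list[str]:
    """Extract the tool names from a `tools/list` response."""
    payload = json.loads(response_text)
    return [tool["name"] for tool in payload["result"]["tools"]]


# Hypothetical, trimmed response; a real server returns more tools and fields.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "dataset_search",
                "description": "...",
                "inputSchema": {"type": "object"},
            }
        ]
    },
})

print(tools_list_request())
print(tool_names(sample_response))
```

Each tool entry also carries an `inputSchema`, which is what lets a client or an AI Agent work out which arguments a tool accepts.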

Lastly, I can test the integration by asking a question directly related to the HF MCP Server…I’m prompted for approval, and the response from the MCP server is given.

The AI Agent made use of the dataset_search tool within the MCP server…
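A tool invocation like this maps to the MCP `tools/call` method. The sketch below shows roughly what the request and result handling could look like at the protocol level. The argument name `query` and the sample result text are assumptions, since the real argument names come from the tool's input schema.

```python
import json


def tools_call_request(tool: str, arguments: dict, request_id: int = 2) -> str:
    """Serialise a JSON-RPC 2.0 `tools/call` request for one MCP tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def result_text(response_text: str) -> str:
    """Join the text parts of a `tools/call` result into one string."""
    result = json.loads(response_text)["result"]
    if result.get("isError"):
        raise RuntimeError("The MCP tool reported an error")
    parts = [c["text"] for c in result["content"] if c.get("type") == "text"]
    return "\n".join(parts)


# "query" is an assumed argument name, used here for illustration only.
message = tools_call_request("dataset_search", {"query": "sentiment analysis"})

# Hypothetical result, shaped like an MCP tool result with one text part.
sample_result = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "content": [{"type": "text", "text": "example dataset listing"}],
        "isError": False,
    },
})

print(message)
print(result_text(sample_result))
```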

When Is MCP Used?

The way a user asks a question, even with the same intent, could lead to different triggers or tool choices if the keyword detection or intent extraction isn’t fully deterministic.

An OpenAI assistant chooses to use a Model Context Protocol (MCP) server when:

System or prompt rules instruct it to do so;

It detects keywords, query types, or direct requests matching MCP capabilities;

It determines the task needs external, real-time, or comprehensive data not covered in its own training;

Or, backend logic identifies the query as best suited to a tool/server response.

This helps the user get the most precise, up-to-date and contextually relevant answer. However, it also introduces a level of nondeterminism.

The information fetched from an MCP server reflects the current state of external resources. If you ask the same question at different times, the answer may differ based on changes in those resources.

The assistant can apply rules or heuristics to determine when to call the MCP server.

Also, slight changes in the user’s phrasing or context — such as asking more specifically or generally — might or might not trigger the tool, leading to different answer paths.
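The effect of phrasing can be illustrated with a deliberately naive router. This toy sketch is not how an OpenAI assistant decides (that decision is made by the language model itself); the trigger words and the function `should_call_mcp` are hypothetical and exist only to show how two questions with the same intent can take different paths.

```python
import re

# Hypothetical trigger words; a real assistant relies on a language model,
# not on a fixed keyword list.
TRIGGER_WORDS = {"dataset", "datasets", "huggingface", "model", "models", "spaces"}


def should_call_mcp(question: str) -> bool:
    """Return True if the question contains any of the trigger words."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return bool(words & TRIGGER_WORDS)


specific = "Which HuggingFace datasets cover sentiment analysis?"
general = "Where can I find labelled reviews for sentiment analysis?"

print(should_call_mcp(specific))  # True: mentions HuggingFace and datasets
print(should_call_mcp(general))   # False: same intent, no trigger words
```

Both questions are after the same thing, yet only the first reaches the MCP server. Explicit system or prompt rules about when to use the server are one way to reduce this variability.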

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.