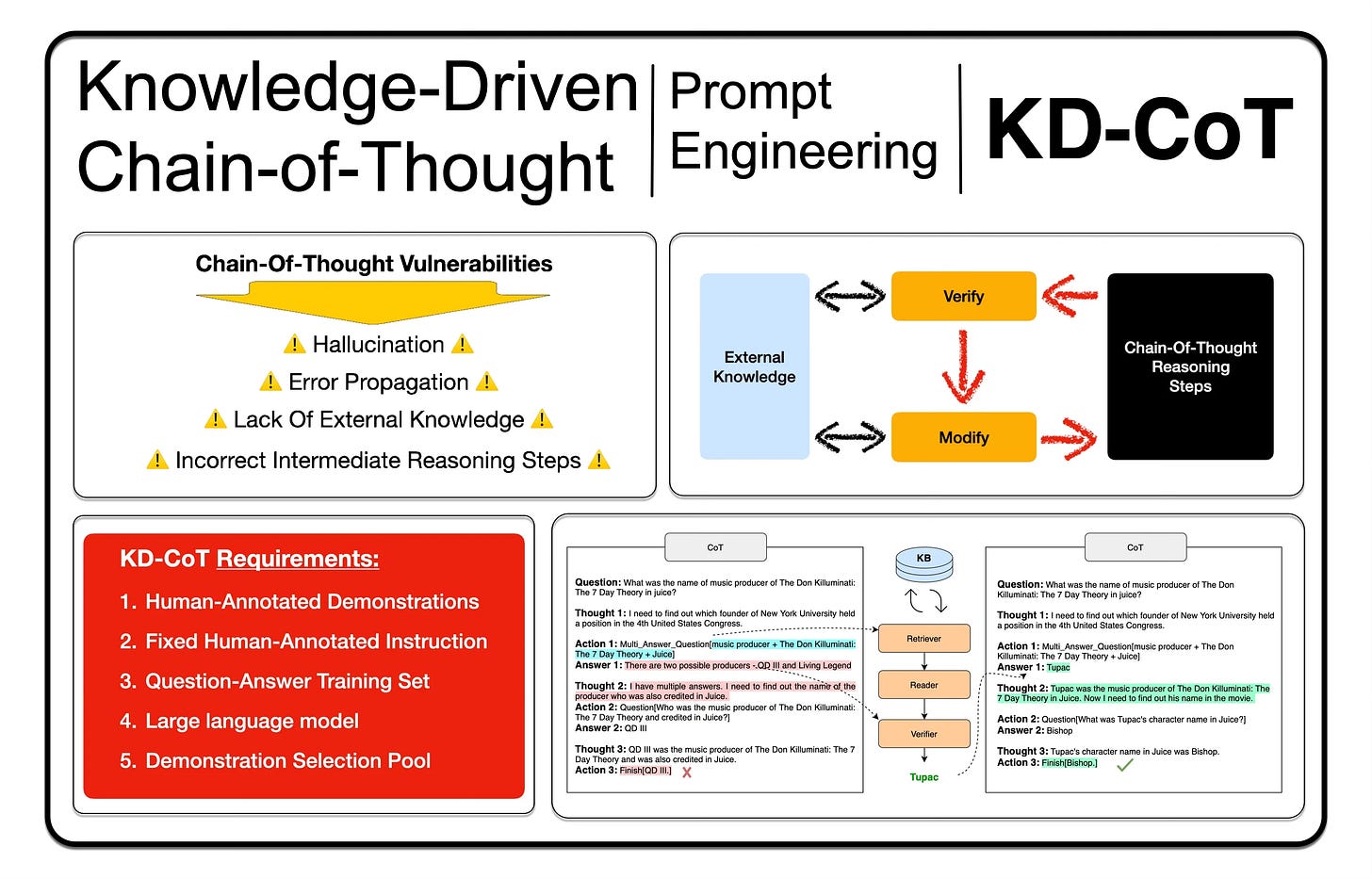

Knowledge-Driven Chain-of-Thought (KD-CoT)

The aim of KD-CoT is to bring faithful reasoning to LLM-based Chain-of-Thought (CoT) reasoning in cases where knowledge-intensive Question Answering is required.

This study is strongly reminiscent of the Chain-of-Knowledge Prompting approach.

Reoccurring Themes

Considering recent studies on prompting approaches for LLMs, there are a number of reoccurring themes…

One theme is that Emergent Abilities are not hidden or unpublished LLM capabilities just waiting to be discovered. Rather, the phenomenon of Emergent Abilities can be attributed to new approaches to In-Context Learning that are being developed.

Another theme is the importance of In-Context Learning (ICL) for LLMs, which has been highlighted in numerous studies. To facilitate ICL, context is delivered to the LLM via methods like RAG, Fine-Tuning, or both.

Related to ICL is the need for accurate demonstrations, presented at inference, for the LLM to emulate.
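To make the idea of demonstrations at inference concrete, here is a minimal sketch of how a few-shot prompt could be assembled. The `Demonstration` class, the `build_few_shot_prompt` function and the prompt layout are all assumptions made for illustration; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Demonstration:
    """One worked example shown to the model at inference (hypothetical structure)."""
    question: str
    reasoning: str
    answer: str


def build_few_shot_prompt(instruction: str, demos: list[Demonstration], question: str) -> str:
    """Join an instruction, the worked demonstrations and the new question into one prompt."""
    parts = [instruction.strip()]
    for demo in demos:
        parts.append(
            f"Question: {demo.question}\n"
            f"Reasoning: {demo.reasoning}\n"
            f"Answer: {demo.answer}"
        )
    # The last block is left open so the model continues the demonstrated pattern.
    parts.append(f"Question: {question}\nReasoning:")
    return "\n\n".join(parts)


demos = [
    Demonstration(
        question="Which country is the Eiffel Tower in?",
        reasoning="The Eiffel Tower is in Paris. Paris is the capital of France.",
        answer="France",
    )
]
prompt = build_few_shot_prompt(
    "Answer the question step by step.", demos, "Which country is the Colosseum in?"
)
print(prompt)
```

The resulting string would be sent to the LLM as-is. No model weights change; the demonstrations only condition the response.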

The need for tools or connectors is being recognised. Cohere refers to this as grounding, where the LLM-based agent retrieves supplementary data from external data sources.

It is being recognised that stand-alone, isolated prompting techniques have vulnerabilities. In the case of CoT, these include hallucination and error propagation. The study identifies the problem of an incorrect intermediate step introducing an error that is propagated further down the chain and subsequently has a negative impact on the final conclusion.

There is an increased focus on data, and a human-in-the-loop approach to data discovery, design and development.

Lastly, inspectability along the chain of LLM interaction is receiving attention. Approaches to data delivery are divided between gradient approaches, which are more opaque, and non-gradient approaches, which are ideal for human inspection and discovery.

Flexibility & Complexity

In the recent past, the simplicity of LLM implementations held a certain allure. LLMs were thought to have emergent capabilities just waiting to be discovered and leveraged.

A single, very well-formed meta-prompt was seen as all you needed to build an application. This mirage is disappearing, and the reality is dawning that complexity has to be introduced wherever flexibility is needed.

KD-CoT

Back to Knowledge-Driven Chain-of-Thought (KD-CoT)…

KD-CoT speaks to the movement towards grounded reference data and in-context learning. KD-CoT is dependent on the following five elements:

Human-Annotated Demonstrations

Fixed Human-Annotated Instruction

Question-Answer Training Set

Large Language Model

Demonstration Selection Pool

It is evident that a human-in-the-loop, data-centric approach is key to KD-CoT.

KD-CoT is an interactive framework which leverages a QA system to access external knowledge to produce high-quality answers.

KD-CoT is focussed on knowledge-intensive Knowledge Base Question Answering (KBQA).

This approach also leverages In-Context Learning (ICL) for conditioning the LLM with contextual reference demonstration data to generate more accurate responses.
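As an illustration of drawing demonstrations from a selection pool, the sketch below picks the pool entries whose questions overlap most with the incoming question. Word-overlap (Jaccard) similarity is a stand-in chosen for simplicity, and the function names and pool contents are hypothetical; the selection method used in the study is not described here.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def select_demonstrations(question: str, pool: list[dict], k: int = 2) -> list[dict]:
    """Return the k pool entries whose questions are most similar to the new question."""
    q_tokens = tokenize(question)
    ranked = sorted(
        pool,
        key=lambda demo: jaccard(q_tokens, tokenize(demo["question"])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical demonstration pool, for illustration only.
pool = [
    {"question": "Who directed the film Inception?", "answer": "Christopher Nolan"},
    {"question": "What is the capital of Australia?", "answer": "Canberra"},
    {"question": "Who directed the film Jaws?", "answer": "Steven Spielberg"},
]
selected = select_demonstrations("Who directed the film Titanic?", pool, k=2)
for demo in selected:
    print(demo["question"], "->", demo["answer"])
```

In practice an embedding-based similarity would likely replace the word-overlap score, but the shape of the step stays the same: rank the pool against the question and keep the top few.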

RAG

The study proposes a retriever-reader-verifier QA system to access external knowledge and interact with the LLM.
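A retriever-reader-verifier pipeline can be pictured roughly as below. This is a toy sketch under loud assumptions: the passages, the word-overlap retriever, the heuristic reader and the rule the verifier uses to fall back on the LLM's own sub-answer are all invented for illustration, and a real system would use trained models for each stage.

```python
import re
from typing import Optional

# Hypothetical in-memory knowledge source.
PASSAGES = [
    "Canberra is the capital city of Australia.",
    "The Eiffel Tower is located in Paris, France.",
]


def tokens(text: str) -> set[str]:
    """Lower-cased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str]) -> str:
    """Retriever (toy): return the passage sharing the most words with the question."""
    q = tokens(question)
    return max(passages, key=lambda p: len(q & tokens(p)))


def read(question: str, passage: str) -> Optional[str]:
    """Reader (toy): return the first passage word that is absent from the question."""
    q = tokens(question)
    for word in re.findall(r"[A-Za-z0-9]+", passage):
        if word.lower() not in q:
            return word
    return None


def verify(question: str, passage: str, candidate: Optional[str],
           llm_sub_answer: str, min_overlap: int = 2) -> str:
    """Verifier (toy): trust the retrieved answer only if the passage looks relevant."""
    relevant = len(tokens(question) & tokens(passage)) >= min_overlap
    return candidate if relevant and candidate else llm_sub_answer


def answer_sub_question(question: str, llm_sub_answer: str) -> str:
    """Run retriever, reader and verifier in sequence for one sub-question."""
    passage = retrieve(question, PASSAGES)
    candidate = read(question, passage)
    return verify(question, passage, candidate, llm_sub_answer)


print(answer_sub_question("What is the capital of Australia?", "Sydney"))
print(answer_sub_question("Who wrote Hamlet?", "William Shakespeare"))
```

In the first call the retrieved passage is relevant, so the retrieved answer replaces the LLM's guess. In the second call nothing relevant is found, so the LLM's own sub-answer is kept.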

Hallucination is still a challenge due to the absence of contextual information at inference and the lack of access to external knowledge. Error propagation is also a challenge with CoT.

The study highlights the failings of CoT on knowledge-intensive tasks.

Routine

In each round, the LLM interacts with a QA system that retrieves external knowledge, and produces faithful reasoning traces based on the precise answers retrieved.
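The round-by-round interaction could be sketched as the loop below. The step format, the function names, the scripted stand-in for the LLM and the lookup table standing in for the QA system are all hypothetical; the sketch only illustrates how a corrected intermediate answer is fed back into the trace so that an early mistake is not propagated.

```python
from typing import Callable, Optional

# Hypothetical external knowledge, keyed by sub-question.
KNOWLEDGE = {
    "Who directed Inception?": "Christopher Nolan",
    "Where was Christopher Nolan born?": "London, England",
}


def lookup_qa(sub_question: str, llm_sub_answer: str) -> str:
    """Stand-in QA system: return the stored answer, else keep the LLM's own answer."""
    return KNOWLEDGE.get(sub_question, llm_sub_answer)


def scripted_llm(question: str, trace: list[dict]) -> dict:
    """Stand-in for an LLM call: emits one reasoning step per round."""
    if len(trace) == 0:
        # Deliberately wrong guess, to show the QA system correcting it.
        return {"sub_question": "Who directed Inception?", "sub_answer": "Steven Spielberg"}
    if len(trace) == 1:
        director = trace[0]["sub_answer"]
        return {"sub_question": f"Where was {director} born?", "sub_answer": "unknown"}
    return {"final_answer": trace[-1]["sub_answer"]}


def kd_cot_loop(question: str,
                llm_step: Callable[[str, list[dict]], dict],
                qa_system: Callable[[str, str], str],
                max_rounds: int = 5) -> tuple[Optional[str], list[dict]]:
    """Alternate between LLM reasoning steps and QA lookups until a final answer appears."""
    trace: list[dict] = []
    for _ in range(max_rounds):
        step = llm_step(question, trace)
        if "final_answer" in step:
            return step["final_answer"], trace
        # Replace the LLM's sub-answer with the answer from the QA system.
        precise = qa_system(step["sub_question"], step["sub_answer"])
        trace.append({"sub_question": step["sub_question"], "sub_answer": precise})
    return None, trace  # no final answer within the round budget


answer, trace = kd_cot_loop(
    "Where was the director of Inception born?", scripted_llm, lookup_qa
)
print(answer)
for step in trace:
    print(step)
```

Because the first sub-answer is corrected before the second round starts, the second sub-question is asked about the right person.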

The structured CoT reasoning of LLMs is facilitated by a custom-developed KBQA CoT collection, which serves as in-context learning demonstrations and can also be utilised as feedback augmentation to train a robust retriever.

In Closing

From a research perspective, LLM-based implementation designs are becoming increasingly complex, relying on a human-in-the-loop process, or at least on curated supporting data, for a more data-centric approach to AI.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.