LangChain Just Launched LangGraph Cloud

LangGraph is a fairly recent addition to the ever-expanding LangChain ecosystem. LangGraph Cloud adds a managed service for deploying and hosting LangGraph applications.

Introduction

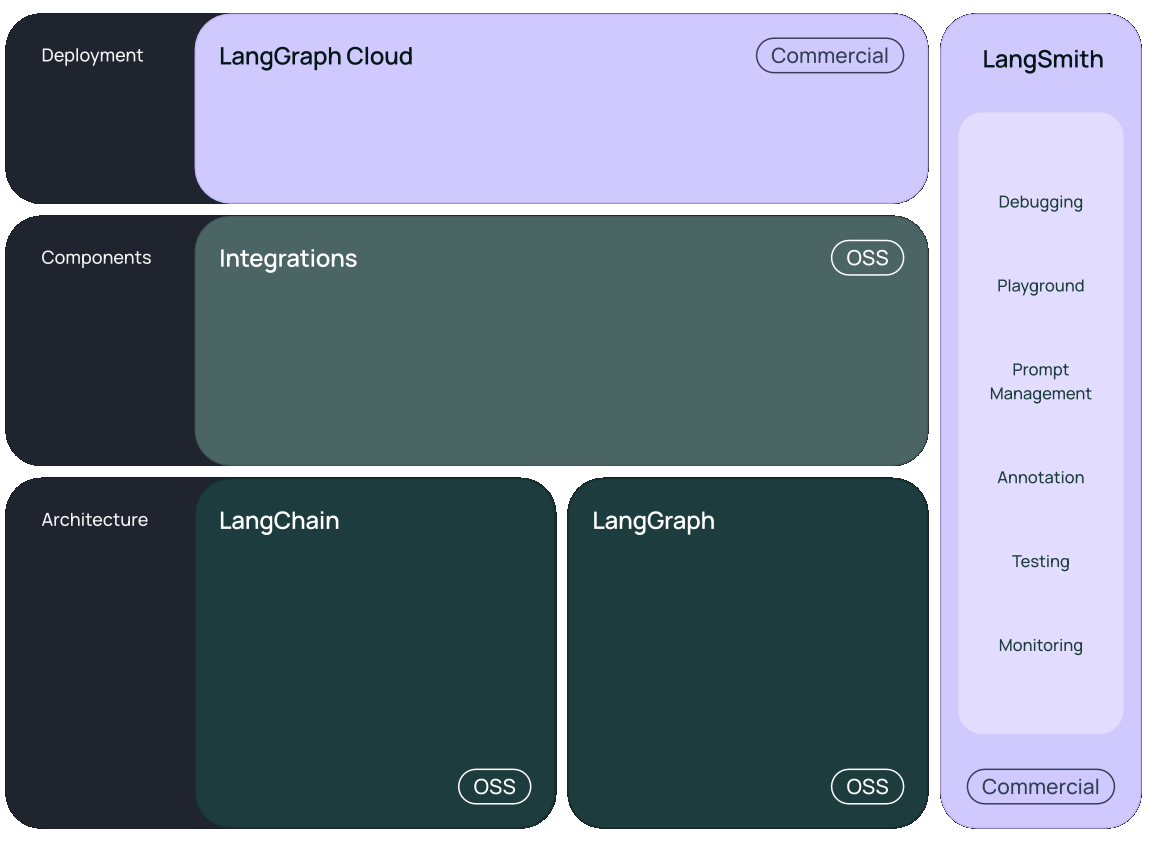

The LangChain ecosystem is unfolding at a rapid pace, with a combination of Open Source Software (OSS) and Commercial software. The Commercial software includes LangSmith & LangGraph Cloud.

Agents (aka Agentic Applications)

We are all starting to realise that Agentic Applications will become a standard in the near future. The advantages of Agents are numerous…but to name a few:

Agents can handle complex, ambiguous and more implicit user queries in an automated fashion.

Underpinning agents is the capability to create a chain of events on the fly based on the task assigned by the user.

Agents make use of an LLM which acts as the backbone of the agent.

When the agent receives a user query, the agent decomposes the task into sub-tasks, which are then executed in a sequential fashion.

One or more tools are made available to the agent, which it can use as it sees fit. The agent decides which tool to use based on the description that forms part of each tool.

A tool is a unit of capability, such as web search, mathematics, API calls and more.
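The points above can be sketched in a few lines of plain Python. This is only an illustration and not LangChain or LangGraph code: the `Tool` class, `pick_tool`, `run_agent` and the two example tools are hypothetical names, the sub-tasks are hard-coded where a real agent would ask the LLM to produce them, and a simple word-overlap score stands in for the LLM reading each tool description.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # the text the agent reads when choosing a tool
    run: Callable[[str], str]


def pick_tool(task: str, tools: list[Tool]) -> Tool:
    """Stand-in for the LLM's choice: pick the tool whose description
    shares the most words with the task."""
    task_words = set(task.lower().split())

    def overlap(tool: Tool) -> int:
        return len(task_words & set(tool.description.lower().split()))

    return max(tools, key=overlap)


def run_agent(sub_tasks: list[str], tools: list[Tool]) -> list[tuple[str, str]]:
    """Execute sub-tasks sequentially, recording which tool handled each."""
    results = []
    for task in sub_tasks:
        tool = pick_tool(task, tools)
        results.append((tool.name, tool.run(task)))
    return results


# Two hypothetical tools, each with a description used for selection.
TOOLS = [
    Tool(
        "web_search",
        "search the web for facts and news",
        lambda q: f"results for: {q}",
    ),
    Tool(
        "calculator",
        "calculate a sum of numbers",
        lambda q: str(sum(int(t) for t in q.split() if t.isdigit())),
    ),
]

# Hard-coded decomposition; a real agent would get this from the LLM.
SUB_TASKS = [
    "search the web for population facts",
    "calculate the sum of 2 and 3",
]

if __name__ == "__main__":
    for tool_name, output in run_agent(SUB_TASKS, TOOLS):
        print(tool_name, "->", output)
```

The design point is that the agent never hard-wires a tool to a task; the choice is made at run time from the tool descriptions, which is why well-written descriptions matter.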

Impediments to Agent Adoption

Impediments to Agent adoption, and apprehension about it, include:

LLM inference cost. The backbone LLM is queried multiple times in the course of a single user query; should an agent have a large number of users, inference cost can skyrocket.

Controllability, inspectability, observability and more granular control are much needed. In the market there is a fear that agents are too autonomous.

Agents broke the glass ceiling of chatbots, but by a little too much, and some measure of control is now required.

For more complex agents, decreasing latency requires running tasks in parallel, and streaming not only LLM responses but also agent responses as they become available.
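The last point, running tasks in parallel and streaming results as they arrive, can be illustrated with the standard library's `asyncio`. This is a minimal sketch under stated assumptions: `run_task`, `stream_agent` and `collect` are hypothetical names, and `asyncio.sleep` stands in for a tool or LLM call.

```python
import asyncio
from typing import AsyncIterator, Dict, List


async def run_task(name: str, delay: float) -> str:
    """Hypothetical sub-task; the sleep stands in for a tool or LLM call."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def stream_agent(tasks: Dict[str, float]) -> AsyncIterator[str]:
    """Start all sub-tasks at once and yield each result as soon as it is ready."""
    pending = [asyncio.create_task(run_task(n, d)) for n, d in tasks.items()]
    for finished in asyncio.as_completed(pending):
        yield await finished


async def collect(tasks: Dict[str, float]) -> List[str]:
    """Gather the streamed updates into a list, in arrival order."""
    return [update async for update in stream_agent(tasks)]


if __name__ == "__main__":
    # The slower task is listed first but its result arrives last.
    print(asyncio.run(collect({"slow": 0.2, "fast": 0.01})))
```

Because the sub-tasks run concurrently, total latency is close to that of the slowest task rather than the sum of all of them, and the user sees intermediate results without waiting for the whole run.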

LangGraph

LangGraph is framework-agnostic, with each node functioning as a regular Python function.

It extends the core Runnable API (a shared interface for streaming, async, and batch calls) to facilitate:

Seamless state management across multiple conversation turns or tool calls.

Flexible routing between nodes based on dynamic criteria.

Smooth transitions between LLMs and human intervention.

Persistence for long-running, multi-session applications.
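To make the ideas of nodes as regular Python functions, shared state and dynamic routing concrete, here is a small plain-Python sketch. It deliberately does not use the LangGraph API; `run_graph`, `NODES`, `ROUTES` and the three node functions are hypothetical names chosen for illustration, and the keyword check stands in for an LLM decision.

```python
from typing import Callable, Dict, Optional

State = dict  # shared state passed from node to node


def classify(state: State) -> State:
    # Node: record whether the latest message needs a human.
    needs_human = "refund" in state["messages"][-1].lower()
    return {**state, "needs_human": needs_human}


def answer(state: State) -> State:
    # Node: stand-in for an LLM reply.
    return {**state, "messages": state["messages"] + ["bot: here is your answer"]}


def escalate(state: State) -> State:
    # Node: hand the conversation over to a person.
    return {**state, "messages": state["messages"] + ["bot: passing you to a human"]}


def route_after_classify(state: State) -> str:
    # Routing is decided at run time from the current state.
    return "escalate" if state["needs_human"] else "answer"


NODES: Dict[str, Callable[[State], State]] = {
    "classify": classify,
    "answer": answer,
    "escalate": escalate,
}

# Each entry maps a node to a function naming the next node, or None to stop.
ROUTES: Dict[str, Callable[[State], Optional[str]]] = {
    "classify": route_after_classify,
    "answer": lambda state: None,
    "escalate": lambda state: None,
}


def run_graph(state: State, entry: str = "classify") -> State:
    """Walk the graph from the entry node until a route returns None."""
    current: Optional[str] = entry
    while current is not None:
        state = NODES[current](state)
        current = ROUTES[current](state)
    return state


if __name__ == "__main__":
    turn1 = run_graph({"messages": ["user: what are your hours?"]})
    # State from the first turn is carried into the second turn.
    turn2 = run_graph({**turn1, "messages": turn1["messages"] + ["user: I want a refund"]})
    print(turn2["messages"])
```

Passing the state returned by one turn into the next is the simplest form of state management across turns; persisting that state to storage between sessions would follow the same pattern.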

LangGraph Cloud

The basic personal workflow is shown below. A user develops their LangGraph application in their IDE of choice, then pushes the code to GitHub.

From LangGraph Cloud, the GitHub code can be accessed and deployed. Once deployed, applications can be tested, traces can be run, interrupts can be added, and more.

Below you can see the LangGraph assistant specifications, which give the OpenAPI specs.

LangGraph Studio

LangGraph Studio visualises how data flows through the graph and lets you interact with it by sending messages.

It shows and streams the steps as they occur, enabling users to revisit and edit nodes, and fork new paths from any point.

Breakpoints can be added to the graph to pause a sequence and request permission before it continues. This powerful tool offers a dynamic way to develop applications.

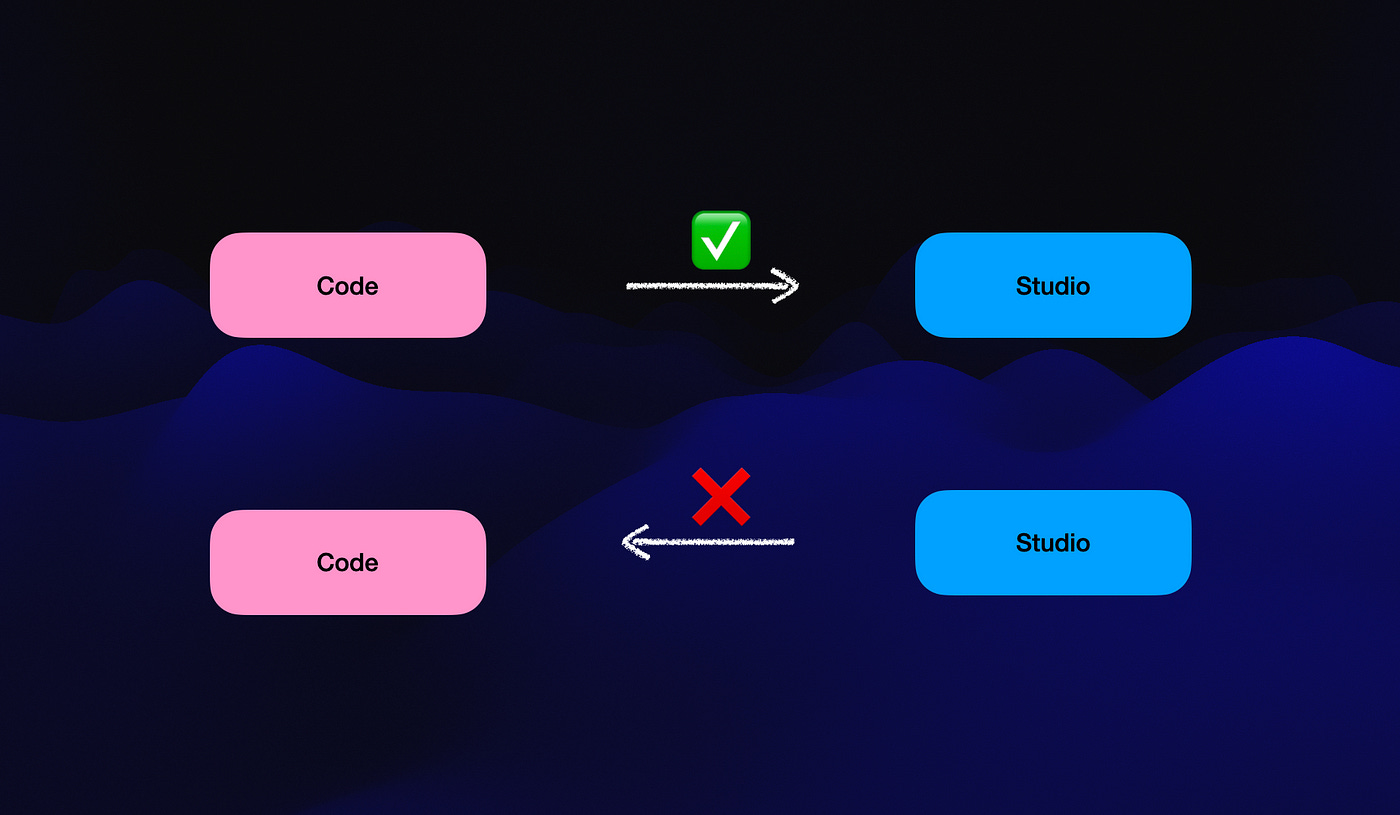

It should be noted that LangGraph Studio is a graphical representation of the code you have written. Studio is a way to visualise and gain insights into data flow.

Studio is not a flow creation or development tool: code can be visually represented in Studio, but it cannot be edited or changed there. Studio is therefore an observation, debugging and conversation flow tool.

Studio is a powerful tracing tool that lets you add pauses and fork the conversation to inspect different permutations.
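The two ideas of pausing at a breakpoint and forking from an earlier point can be sketched in plain Python. This is not how Studio or LangGraph implement them; `run`, `STEPS`, the `approve` callback and the checkpoint dictionary are hypothetical names used only to show the mechanics.

```python
import copy
from typing import Callable, Dict, FrozenSet, Optional, Tuple

State = dict


def draft(state: State) -> State:
    return {**state, "log": state["log"] + [f"draft for {state['topic']}"]}


def send(state: State) -> State:
    return {**state, "log": state["log"] + ["sent"]}


STEPS = [("draft", draft), ("send", send)]


def run(
    state: State,
    start: int = 0,
    breakpoints: FrozenSet[str] = frozenset(),
    approve: Callable[[str, State], bool] = lambda name, state: True,
) -> Tuple[State, Dict[int, State], Optional[str]]:
    """Run steps in order, saving a checkpoint before each one.

    Returns (state, checkpoints, paused_at). paused_at is the name of the
    step that was not approved, or None if the run finished.
    """
    checkpoints: Dict[int, State] = {}
    for i in range(start, len(STEPS)):
        name, step = STEPS[i]
        checkpoints[i] = copy.deepcopy(state)  # snapshot to revisit or fork later
        if name in breakpoints and not approve(name, state):
            return state, checkpoints, name  # paused before this step
        state = step(state)
    return state, checkpoints, None


if __name__ == "__main__":
    # Pause before "send" because approval is denied.
    paused, saved, paused_at = run(
        {"topic": "cats", "log": []},
        breakpoints=frozenset({"send"}),
        approve=lambda name, state: False,
    )
    print(paused_at, paused["log"])

    # Fork: edit the state saved before step 0 and rerun from there.
    forked, _, _ = run({**saved[0], "topic": "dogs"}, start=0)
    print(forked["log"])
```

Saving a copy of the state before every step is what makes both features possible: a pause is just an early return, and a fork is a rerun from an edited snapshot that leaves the original path untouched.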

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.