LangGraph Agents By LangChain

Have you noticed the market shift towards Graph Based Representations of AI applications and flows?

Introduction

Of late there has been a return to graph based data representations and flows for AI applications and agents. Some of the recent releases of graph based flow design and agent build tools include GALE from kore.ai, LangGraph from LangChain, Workflows from LlamaIndex and Deepset Studio.

In computer science, a graph is an abstract data type (ADT), which is defined by its behaviour (semantics) from the point of view of a user of the data.
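To make the ADT idea concrete, here is a minimal Python sketch. It is not taken from any of the tools mentioned above; the class name `Graph` and its methods are illustrative assumptions. The point is that callers rely only on the operations (add a node, add an edge, list neighbours) and never on how the data is stored.

```python
from collections import defaultdict


class Graph:
    """A tiny directed graph defined by its operations, not its storage."""

    def __init__(self):
        # The storage layout is an implementation detail hidden behind the methods.
        self._adjacency = defaultdict(list)

    def add_node(self, node):
        # Touching the key creates an empty neighbour list if the node is new.
        self._adjacency[node]

    def add_edge(self, source, target):
        self.add_node(source)
        self.add_node(target)
        self._adjacency[source].append(target)

    def neighbours(self, node):
        # Return a copy so callers cannot mutate the internal state.
        return list(self._adjacency.get(node, []))

    def nodes(self):
        return list(self._adjacency)


flow = Graph()
flow.add_edge("start", "step_1")
flow.add_edge("step_1", "step_2")
print(flow.neighbours("step_1"))
```

Swapping the dictionary for an adjacency matrix or a database table would not change how `flow` is used, which is what makes the graph an abstract data type.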

As I have mentioned before, graph representations have always been part and parcel of the traditional conversational / chatbot scene for building and maintaining flows via a GUI.

With the advent of agent AI and agentic applications, there has been a departure from this. And of late, there is a return to graph-based flows.

Two Approaches

There are two approaches to a graph representation of data…

The flow is defined via a pro-code approach with a graphical representation which can be generated from the code. In the case of LangGraph, there is a limited degree of interaction possible via the GUI. Hence the visual graph representation of the flow is generated via the code.

In other cases the actual flow is defined, built and maintained via the GUI, and hence can be fully manipulated via the GUI. On each node, users can drill down to create custom functions and configurations via code windows, which allows for a pro-code, granular configuration of the flow.
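The first, code-first approach can be illustrated with a small Python sketch: the flow is declared in code and a diagram description is derived from it. The edge list and the `to_mermaid` helper are hypothetical and only emit Mermaid flowchart text; this is not how LangGraph renders its graphs internally.

```python
def to_mermaid(edges):
    """Render a list of (source, target) pairs as Mermaid flowchart text."""
    lines = ["graph TD"]
    for source, target in edges:
        lines.append(f"    {source} --> {target}")
    return "\n".join(lines)


# The flow lives in code; the picture is a by-product of it.
edges = [("start", "step_1"), ("step_1", "step_2"), ("step_2", "finish")]
diagram = to_mermaid(edges)
print(diagram)
```

In the GUI-first approach the direction is reversed: the diagram is the source of truth and code is attached to individual nodes.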

Why Now?

Graph data, an abstract data type, is a theoretical model that defines a data type based on its behaviour (how it functions) rather than its implementation details.

Hence it is easier to interpret and manage application flow, as opposed to traditional data representations where data is viewed on the basis of how it is physically organised and managed, which is a concern of those who implement or work with the data at a technical level.

Many AI agents or Agentic applications, particularly those dealing with knowledge representation or natural language processing, involve entities that are interconnected.

Graphs naturally represent these complex interdependencies, making it easier to model and navigate relationships between data points.

Knowledge Graphs: For instance, in knowledge graphs, entities (like people, places, or concepts) are nodes, and relationships between them are edges. This allows for efficient querying and reasoning over data.
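As a rough illustration of nodes, edges and querying, the sketch below stores a knowledge graph as (subject, relation, object) triples in plain Python. The entities, relation names and the `query` helper are all made up for the example; production knowledge graphs typically use a dedicated store and query language.

```python
# Each fact is a (subject, relation, object) triple: two nodes joined by a labelled edge.
triples = [
    ("Alice", "works_at", "Acme"),
    ("Bob", "works_at", "Acme"),
    ("Acme", "located_in", "Cape Town"),
]


def query(triples, subject=None, relation=None, obj=None):
    """Return the triples that match every field that is not None."""
    return [
        (s, r, o)
        for s, r, o in triples
        if (subject is None or s == subject)
        and (relation is None or r == relation)
        and (obj is None or o == obj)
    ]


# Who works at Acme?
employees = [s for s, _, _ in query(triples, relation="works_at", obj="Acme")]
print(employees)
```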

Balancing Rigidity & Flexibility

The introduction of graph-based data representations need not detract from instances where flexibility is a necessity. Graph representations can be used to design, build and run an application with a pipeline or process automation approach.

Or they can be used in an agent approach, which demands more flexibility. Here the basic steps are defined, along with the flow through which the agent cycles until a final conclusion is reached.
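The contrast between the two styles can be sketched in a few lines of plain Python. The function names and the integer state are assumptions chosen purely for illustration: a pipeline runs each step once in a fixed order, while an agent-style loop repeats a step until a stop condition is met.

```python
def run_pipeline(steps, state):
    """Rigid flow: every step runs exactly once, in a fixed order."""
    for step in steps:
        state = step(state)
    return state


def run_agent_loop(act, is_done, state, max_turns=10):
    """Flexible flow: repeat one step until a stop condition holds."""
    turns = 0
    while not is_done(state) and turns < max_turns:
        state = act(state)
        turns += 1
    return state


# Hypothetical steps operating on a plain integer state.
def add_one(n):
    return n + 1


def double(n):
    return n * 2


def reached_goal(n):
    return n >= 20


pipeline_result = run_pipeline([add_one, double], 1)
loop_result = run_agent_loop(double, reached_goal, 1)
print(pipeline_result, loop_result)
```

The `max_turns` guard is a common safety measure for cyclic flows, since a loop that never reaches its stop condition would otherwise run forever.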

LangGraph By LangChain

We start this working notebook by quietly installing or upgrading the langgraph and langchain_anthropic Python packages within a Jupyter notebook environment. The cell's standard output is captured, while error messages remain visible…

%%capture --no-stderr
%pip install --quiet -U langgraph langchain_anthropic

Here the code is designed to securely set an environment variable for the current session.

import getpass
import os


def _set_env(var: str):
    # Prompt for the value only if it is not already set
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")


_set_env("ANTHROPIC_API_KEY")

This Python code snippet sets several environment variables that configure a LangChain LangSmith project for tracing and monitoring against a specific endpoint.

import os

from uuid import uuid4

unique_id = uuid4().hex[0:8]

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_PROJECT"] = f"LangGraph_HumanInTheLoop"

os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"

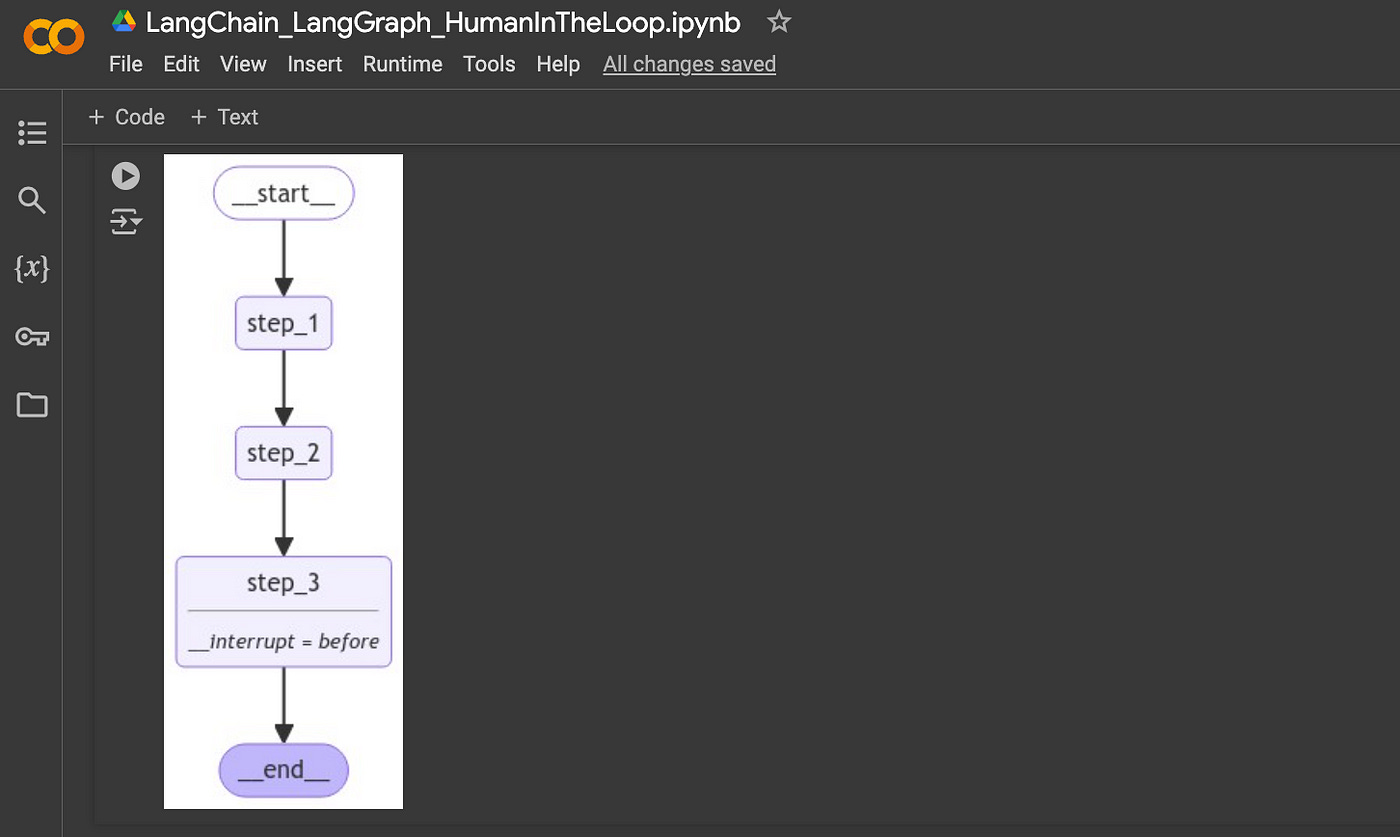

os.environ["LANGCHAIN_API_KEY"] = "<Your API Key>"Here the workflow and state management defined for a three step system using langgraph.

Each step in a process is represented as a node in a graph.

from typing import TypedDict

from langgraph.graph import StateGraph, START, END
from langgraph.checkpoint.memory import MemorySaver
from IPython.display import Image, display


class State(TypedDict):
    input: str


def step_1(state):
    print("---Step 1---")
    pass


def step_2(state):
    print("---Step 2---")
    pass


def step_3(state):
    print("---Step 3---")
    pass


builder = StateGraph(State)
builder.add_node("step_1", step_1)
builder.add_node("step_2", step_2)
builder.add_node("step_3", step_3)
builder.add_edge(START, "step_1")
builder.add_edge("step_1", "step_2")
builder.add_edge("step_2", "step_3")
builder.add_edge("step_3", END)

# Set up memory
memory = MemorySaver()

# Compile with the checkpointer and a breakpoint before step_3
graph = builder.compile(checkpointer=memory, interrupt_before=["step_3"])

# View
display(Image(graph.get_graph().draw_mermaid_png()))

A visual representation of the graph can be printed out.

This code snippet extends the state management process using langgraph: it interacts with the user to determine whether to proceed with a particular step in the graph.

# Input
initial_input = {"input": "hello world"}

# Thread
thread = {"configurable": {"thread_id": "1"}}

# Run the graph until the first interruption
for event in graph.stream(initial_input, thread, stream_mode="values"):
    print(event)

user_approval = input("Do you want to go to Step 3? (yes/no): ")

if user_approval.lower() == "yes":
    # If approved, continue the graph execution
    for event in graph.stream(None, thread, stream_mode="values"):
        print(event)
else:
    print("Operation cancelled by user.")

And the output when the code is run…

{'input': 'hello world'}
---Step 1---
---Step 2---
Do you want to go to Step 3? (yes/no): yes
---Step 3---

AI Agent

In the context of agents, breakpoints are valuable for manually approving specific agent actions.
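The approval idea can be sketched in plain Python before bringing in any framework. Everything here is hypothetical: the `run_with_approval` helper, the stand-in `search` tool and the reviewer rule are illustrations only, not LangGraph APIs. Each planned tool call is shown to an approver, and only approved calls are executed.

```python
def run_with_approval(planned_calls, tools, approve):
    """Execute each planned tool call only if `approve` returns True."""
    results = []
    for call in planned_calls:
        if not approve(call):
            results.append((call["name"], "skipped"))
            continue
        tool = tools[call["name"]]
        results.append((call["name"], tool(**call["args"])))
    return results


def fake_search(query):
    # Stand-in for a real tool; it only echoes the query.
    return f"results for {query!r}"


def reviewer(call):
    # Stand-in for a human reviewer: reject anything mentioning "delete".
    return "delete" not in call["args"]["query"]


tools = {"search": fake_search}
planned = [
    {"name": "search", "args": {"query": "weather in sf"}},
    {"name": "search", "args": {"query": "delete all files"}},
]

outcome = run_with_approval(planned, tools, reviewer)
print(outcome)
```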

To demonstrate this, below is a straightforward ReAct-style agent that performs tool calling.

A breakpoint is inserted just before the action node is executed.

# Set up the tool
from langchain_anthropic import ChatAnthropic
from langchain_core.tools import tool
from langgraph.graph import END, START, MessagesState, StateGraph
from langgraph.prebuilt import ToolNode
from langgraph.checkpoint.memory import MemorySaver


@tool
def search(query: str):
    """Call to surf the web."""
    # This is a placeholder for the actual implementation
    # Don't let the LLM know this though
    return [
        "It's sunny in San Francisco, but you better look sudden weater changes later afternoon!"
    ]


tools = [search]
tool_node = ToolNode(tools)

# Set up the model
model = ChatAnthropic(model="claude-3-5-sonnet-20240620")
model = model.bind_tools(tools)


# Define nodes and conditional edges

# Define the function that determines whether to continue or not
def should_continue(state):
    messages = state["messages"]
    last_message = messages[-1]
    # If there is no tool call, then we finish
    if not last_message.tool_calls:
        return "end"
    # Otherwise if there is, we continue
    else:
        return "continue"


# Define the function that calls the model
def call_model(state):
    messages = state["messages"]
    response = model.invoke(messages)
    # We return a list, because this will get added to the existing list
    return {"messages": [response]}


# Define a new graph
workflow = StateGraph(MessagesState)

# Define the two nodes we will cycle between
workflow.add_node("agent", call_model)
workflow.add_node("action", tool_node)

# Set the entrypoint as `agent`
# This means that this node is the first one called
workflow.add_edge(START, "agent")

# We now add a conditional edge
workflow.add_conditional_edges(
    # First, we define the start node. We use `agent`.
    # This means these are the edges taken after the `agent` node is called.
    "agent",
    # Next, we pass in the function that will determine which node is called next.
    should_continue,
    # Finally we pass in a mapping.
    # The keys are strings, and the values are other nodes.
    # END is a special node marking that the graph should finish.
    # What will happen is we will call `should_continue`, and then the output of that
    # will be matched against the keys in this mapping.
    # Based on which one it matches, that node will then be called.
    {
        # If `continue`, then we call the `action` (tool) node.
        "continue": "action",
        # Otherwise we finish.
        "end": END,
    },
)

# We now add a normal edge from `action` to `agent`.
# This means that after `action` is called, the `agent` node is called next.
workflow.add_edge("action", "agent")

# Set up memory
memory = MemorySaver()

# Finally, we compile it!
# This compiles it into a LangChain Runnable,
# meaning you can use it as you would any other runnable
# We add in `interrupt_before=["action"]`
# This will add a breakpoint before the `action` node is called
app = workflow.compile(checkpointer=memory, interrupt_before=["action"])

display(Image(app.get_graph().draw_mermaid_png()))

Below is a visual representation of the flow…

Now, we can interact with the agent…

You’ll notice that it pauses before invoking a tool, as interrupt_before is configured to trigger before the action node.

from langchain_core.messages import HumanMessage

thread = {"configurable": {"thread_id": "3"}}
inputs = [HumanMessage(content="search for the weather in sf now?")]

for event in app.stream({"messages": inputs}, thread, stream_mode="values"):
    event["messages"][-1].pretty_print()

And the output…

================================ Human Message =================================

search for the weather in sf now?
================================== Ai Message ==================================

[{'text': "Certainly! I can help you search for the current weather in San Francisco. To do this, I'll use the search function to look up the latest weather information. Let me do that for you right away.", 'type': 'text'}, {'id': 'toolu_0195ZVcpdHkUrcgtZWmxVTue', 'input': {'query': 'current weather in San Francisco'}, 'name': 'search', 'type': 'tool_use'}]
Tool Calls:
  search (toolu_0195ZVcpdHkUrcgtZWmxVTue)
  Call ID: toolu_0195ZVcpdHkUrcgtZWmxVTue
  Args:
    query: current weather in San Francisco

Now call the agent again without providing any inputs to continue…

This will execute the tool as originally requested.

When you run an interrupted graph with None as the input, it signals the graph to proceed as if the interruption hadn’t occurred.
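To picture what pausing and resuming involves, here is a toy model in plain Python. It is only a sketch under simplifying assumptions and not LangGraph's implementation; the `ToyGraph` class and its fields are invented for illustration. A checkpoint keyed by thread ID records where execution stopped, and passing `None` as the input picks up from that point.

```python
class ToyGraph:
    """Runs steps in order, pausing before any step named in `interrupt_before`."""

    def __init__(self, steps, interrupt_before=()):
        self.steps = steps  # list of (name, function) pairs
        self.interrupt_before = set(interrupt_before)
        self.checkpoints = {}  # thread_id -> (index of next step, state)

    def run(self, state, thread_id):
        if state is None:
            # Resume: load the saved position and step past the breakpoint.
            index, state = self.checkpoints[thread_id]
            resuming = True
        else:
            index, resuming = 0, False
        executed = []
        while index < len(self.steps):
            name, func = self.steps[index]
            if name in self.interrupt_before and not resuming:
                # Save progress and hand control back to the caller.
                self.checkpoints[thread_id] = (index, state)
                return executed, state
            resuming = False
            state = func(state)
            executed.append(name)
            index += 1
        self.checkpoints.pop(thread_id, None)
        return executed, state


steps = [
    ("step_1", lambda s: s + ["one"]),
    ("step_2", lambda s: s + ["two"]),
    ("step_3", lambda s: s + ["three"]),
]
toy = ToyGraph(steps, interrupt_before=["step_3"])

first_run, _ = toy.run([], thread_id="1")
second_run, final_state = toy.run(None, thread_id="1")
print(first_run, second_run, final_state)
```

In the real agent, the checkpointer and the thread configuration passed to the graph play a comparable role. The resume call looks like this: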

for event in app.stream(None, thread, stream_mode="values"):
    event["messages"][-1].pretty_print()

And the output…

================================= Tool Message =================================
Name: search

["It's sunny in San Francisco, but you better look sudden weater changes later afternoon!"]
================================== Ai Message ==================================

Based on the search results, here's the current weather information for San Francisco:

It's currently sunny in San Francisco. However, it's important to note that there might be sudden weather changes later in the afternoon.

This means that while the weather is pleasant right now, you should be prepared for potential changes as the day progresses. It might be a good idea to check the weather forecast again later or carry appropriate clothing if you plan to be out for an extended period.

Is there anything else you'd like to know about the weather in San Francisco or any other information I can help you with?

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.