LangGraph From LangChain Explained In Simple Terms

LangGraph is a method for creating state machines for conversational flow by defining them as graphs & it’s easier to understand than you might think.

Introduction

But why are we returning to state and state transition control? I thought that with autonomous AI agents we had moved past the notion of state control and transition?

Well, if you look at the output from autonomous agents, you will notice how the agent creates a sequence of actions on the fly, which it follows.

The aim of LangGraph is to provide a level of control over how autonomous AI agents are executed.

Apologies for the length of this article, but I wanted to really grasp the underlying principles of graph as a data type.

The Status Quo

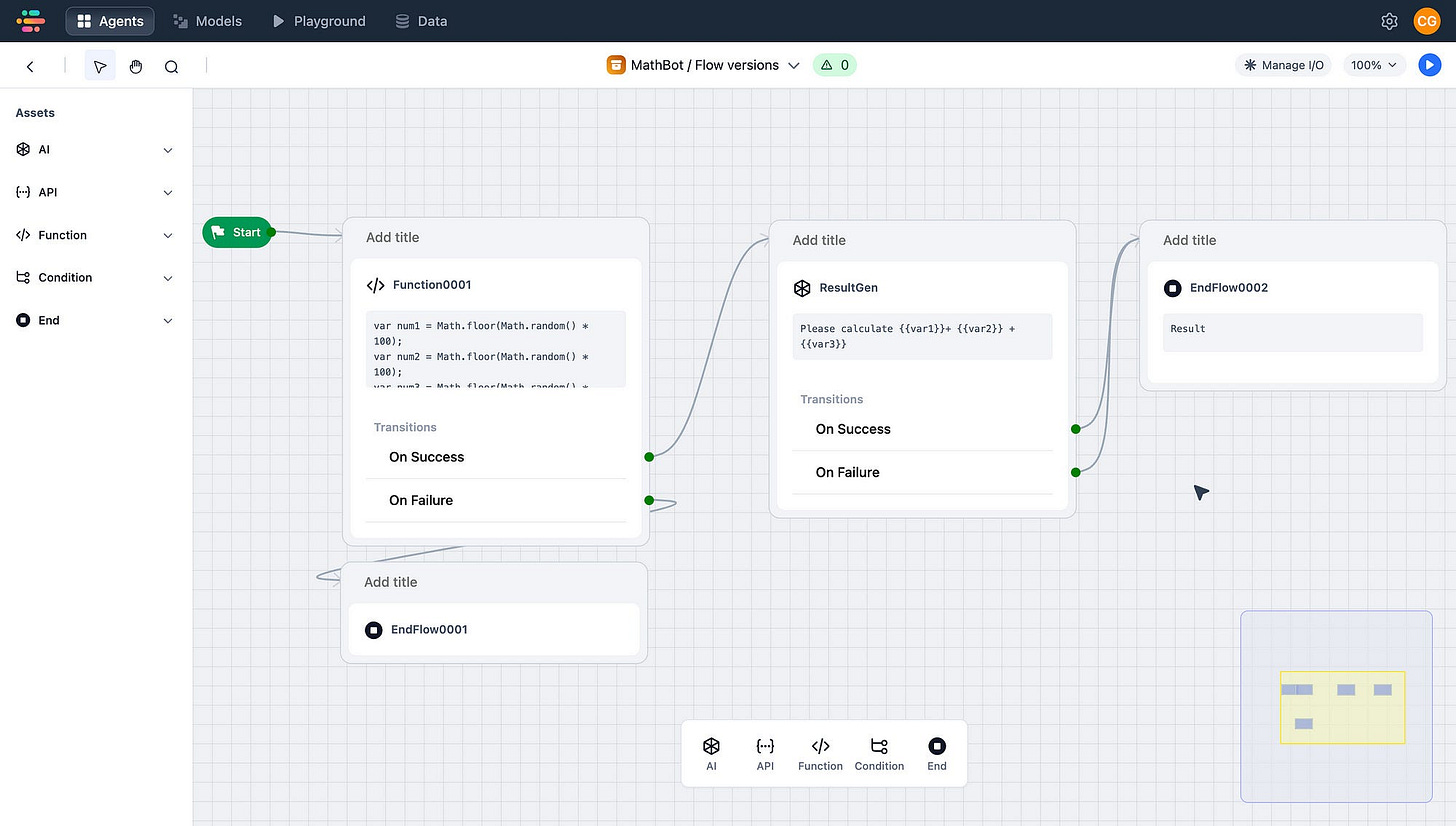

Considering the image above, this is how most of us have come to know the design & development user interface for creating dialog and process flows for Conversational UI.

The design affordances can be broken up into two main categories: nodes and edges.

The nodes are the blocks, sometimes referred to as assets. In the image above, there are five nodes on the design canvas. Between these nodes are links, called edges. Edges show the possible destination node or nodes.

Prompt Chaining

With the advent of Large Language Models, prompt chaining appeared on the scene…

Prompt chaining can be described as a technique used in working with language models, where multiple prompts (nodes) are sequentially linked (via edges) together to guide the generative app through a series of related tasks or steps.

This method is used to achieve more complex and nuanced outputs by breaking down a large task into smaller, manageable parts.

Here’s a simple explanation of how prompt chaining works:

Breaking Down the Task (nodes): A complex task is divided into smaller, sequential steps. Each step is designed to achieve a specific part of the overall goal.

Creating Prompts for Each Step (edges): For each step, a specific prompt is crafted to guide the language model in generating the required output. These prompts are designed to be clear and focused on their respective sub-tasks.

Sequential Execution: The language model processes the first prompt and generates an output. This output is then used as part of the input for the next prompt in the sequence.
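The three steps above can be sketched in a few lines of Python. This is a minimal sketch, assuming a hypothetical `fake_llm` function that stands in for a real model call so the example runs offline. Each prompt template is a node, and passing one step's output into the next template plays the role of the edge.

```python
from typing import Callable, List

# Hypothetical stand-in for a real LLM call: it wraps an upper-cased copy of
# the prompt so the example runs without any API key.
def fake_llm(prompt: str) -> str:
    return f"<answer to: {prompt.upper()}>"

# Each step (node) is a prompt template with a single {input} slot.
PROMPT_CHAIN: List[str] = [
    "Extract the key facts from: {input}",
    "Summarise these facts in one sentence: {input}",
    "Translate this summary into French: {input}",
]

def run_chain(user_input: str, templates: List[str],
              llm: Callable[[str], str] = fake_llm) -> List[str]:
    """Run the prompts in a fixed order, feeding each output into the next prompt."""
    outputs: List[str] = []
    current = user_input
    for template in templates:
        prompt = template.format(input=current)
        current = llm(prompt)      # output of this node ...
        outputs.append(current)    # ... becomes the input of the next node
    return outputs

steps = run_chain("LangGraph adds cycles to LangChain.", PROMPT_CHAIN)
for i, out in enumerate(steps, start=1):
    print(i, out)
```

The model can vary the content of each output, but the order of the steps is fixed by the list of templates.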

It should be noted, however, that prompt chaining is based on the same principles as chatbot flow building. Hence we are left with the same problem as before: a rigid state machine.

Indeed, there are aspects of prompt chaining that introduce some flexibility in the input of each node and dynamic variation in the output. But in general the sequence remains fixed and rigid.

Challenges

This approach requires the flow to be meticulously hand-crafted, with every decision point defined. In essence it is a state machine: the dialog tree is defined by different states (nodes) and by decision points (edges) that determine where the conversation moves next.

Edges can also be seen as the options available for the conversation state or dialog turn to move to.
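To make the state-machine view concrete, here is a minimal sketch of a hand-crafted dialog flow. The state names, intents and transitions are hypothetical, chosen only for illustration: each state is a node, and the dictionary of allowed intents for that state is its set of outgoing edges.

```python
from typing import Dict

# Hypothetical dialog flow: state -> {recognised intent -> next state}.
# Every transition has to be written out by hand.
FLOW: Dict[str, Dict[str, str]] = {
    "greet": {"book_flight": "ask_destination", "check_status": "ask_booking_ref"},
    "ask_destination": {"give_city": "ask_date"},
    "ask_date": {"give_date": "confirm"},
    "ask_booking_ref": {"give_ref": "show_status"},
    "confirm": {"yes": "done", "no": "ask_destination"},
    "show_status": {},
    "done": {},
}

def next_state(state: str, intent: str) -> str:
    """Follow an edge if one is defined; otherwise stay in the same state."""
    edges = FLOW[state]
    if intent not in edges:
        # The user said something the designer did not anticipate.
        return state
    return edges[intent]

state = "greet"
for intent in ["book_flight", "give_city", "what's the weather?", "give_date", "yes"]:
    state = next_state(state, intent)
    print(f"{intent!r:22} -> {state}")
```

The unanticipated input leaves the conversation stuck in `ask_date`; handling it means adding yet another hand-written edge.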

This brings us to one of the central challenges of conversation design: state and flow control.

The approach is too rigid, and in general there is a desire to shed some of that rigidity and introduce flexibility.

Traditional chatbots, as we know them, have a problem of too much structure & being too rigid.

Enter Agents

Autonomous agents were introduced quite recently, and their level of autonomy was astonishing. Agents can create a chain or sequence of events in real time and follow this temporary chain until a final answer to the question is reached.

You can think of this as disposable chains.
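A disposable chain can be sketched as a loop in which a planner decides the next action at run time. In this minimal sketch the planner is a hypothetical, hard-coded function standing in for the LLM, and the `search` and `calculator` tools are stubs; none of this is the LangChain `AgentExecutor` API. The sequence of steps is not defined up front: it is produced one step at a time and discarded when the loop ends.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple

# Stub tools; a real agent would call a search API and a math engine.
def search(query: str) -> str:
    return "Steve Jobs was born on February 24, 1955."

def calculator(expression: str) -> str:
    # Only supports "sqrt(<number>)" in this sketch (Python 3.9+ string methods).
    number = float(expression.removeprefix("sqrt(").removesuffix(")"))
    return str(math.sqrt(number))

TOOLS: Dict[str, Callable[[str], str]] = {"Search": search, "Calculator": calculator}

def fake_planner(question: str,
                 history: List[Tuple[str, str, str]]) -> Optional[Tuple[str, str]]:
    """Hypothetical stand-in for the LLM: returns (tool, input), or None when done.
    A real model would read the question and the observations so far."""
    if not history:
        return ("Search", "father of the iPhone year of birth")
    if len(history) == 1:
        return ("Calculator", "sqrt(1955)")
    return None  # enough observations to answer

def run_agent(question: str, max_steps: int = 5) -> List[Tuple[str, str, str]]:
    history: List[Tuple[str, str, str]] = []  # the disposable chain
    for _ in range(max_steps):
        step = fake_planner(question, history)
        if step is None:
            break
        tool, tool_input = step
        observation = TOOLS[tool](tool_input)  # action -> observation
        history.append((tool, tool_input, observation))
    return history

trace = run_agent("What is the square root of the birth year of the father of the iPhone?")
for tool, tool_input, observation in trace:
    print(f"Action: {tool} | Input: {tool_input} | Observation: {observation}")
```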

Considering the example below, agents can be asked complex, multi-step questions like: “Who is regarded as the father of the iPhone and what is the square root of his year of birth?”

or

“What is the square root of the year of birth of the man who is generally regarded as the father of the iPhone?”

And as seen below, the agent creates a chain in real-time, reflects on the question, and goes through a process of action, observation, thought, action, observation, thought…until the final answer is reached.

```
> Entering new AgentExecutor chain...
I need to find out who is regarded as the father of the iPhone and his year of birth. Then, I will calculate the square root of his year of birth.
Action: Search
Action Input: father of the iPhone year of birth
Observation: Family. Steven Paul Jobs was born in San Francisco, California, on February 24, 1955, to Joanne Carole Schieble and Abdulfattah "John" Jandali (Arabic: عبد الف ...
Thought:I found that Steve Jobs is regarded as the father of the iPhone and he was born in 1955. Now I will calculate the square root of his year of birth.
Action: Calculator
Action Input: sqrt(1955)
Observation: Answer: 44.21538193886829
Thought:I now know the final answer.
Final Answer: Steve Jobs is regarded as the father of the iPhone, and the square root of his year of birth (1955) is approximately 44.22.
> Finished chain.
'Steve Jobs is regarded as the father of the iPhone, and the square root of his year of birth (1955) is approximately 44.22.'
```

Challenges

The challenge with agents, and the criticism I have heard most often, is the high level of autonomy agents have.

Makers would like to have some level of control over agents.

So the introduction of agents has moved us from too much control and rigidity to greater flexibility, but with a lack of control.

Enter LangGraph From LangChain

But first, what is a graph as a data type?

What Is Graph (Abstract Data Type)

The idea of graph data may seem opaque at first, but here I try to break it down.

Indeed, a graph is an abstract data type…

An abstract data type is a mathematical model for data types, defined by its behaviour (semantics) from the point of view of a user of the data.

Abstract data types stand in stark contrast to data structures, which are concrete representations of data and reflect the point of view of an implementer, not a user. A data structure is therefore less opaque and easier to interpret.
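The distinction can be shown in Python with a `typing.Protocol` that only states what a graph can do (the abstract data type, the user's view) and a class that commits to one concrete representation, an adjacency list (the data structure, the implementer's view). The names `Graph`, `AdjacencyListGraph` and `reachable` are hypothetical, chosen for this sketch.

```python
from typing import Dict, Iterable, List, Protocol, Set

class Graph(Protocol):
    """Abstract data type: only the behaviour a user relies on."""
    def add_edge(self, source: str, target: str) -> None: ...
    def neighbours(self, node: str) -> Iterable[str]: ...

class AdjacencyListGraph:
    """Concrete data structure: a dict mapping each node to its successors."""
    def __init__(self) -> None:
        self._adj: Dict[str, List[str]] = {}

    def add_edge(self, source: str, target: str) -> None:
        self._adj.setdefault(source, []).append(target)
        self._adj.setdefault(target, [])  # make sure the target exists as a node

    def neighbours(self, node: str) -> Iterable[str]:
        return list(self._adj.get(node, []))

def reachable(graph: Graph, start: str) -> Set[str]:
    """Written against the ADT only, so any Graph implementation works."""
    seen: Set[str] = {start}
    stack = [start]
    while stack:
        for nxt in graph.neighbours(stack.pop()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

g = AdjacencyListGraph()
g.add_edge("a", "b")
g.add_edge("b", "c")
print(sorted(reachable(g, "a")))  # ['a', 'b', 'c']
```

Swapping the adjacency list for, say, an adjacency matrix would change the data structure but not `reachable`, because that function depends only on the abstract behaviour.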

Directed Graph

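A directed graph (or digraph) is a graph made up of a set of nodes connected by directed edges.

A graph data structure consists of a finite set of nodes together with a set of pairs of these nodes, the edges. For an undirected graph the pairs are unordered; for a directed graph they are ordered, so an edge from a to b is different from an edge from b to a.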
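The difference between ordered and unordered pairs is easy to see in code. In this minimal sketch, directed edges are stored as tuples, where order matters, and undirected edges as `frozenset`s, where it does not.

```python
from typing import FrozenSet, Set, Tuple

nodes: Set[str] = {"a", "b", "c"}

# Directed graph: edges are ordered pairs (source, target).
directed_edges: Set[Tuple[str, str]] = {("a", "b"), ("b", "c")}

# Undirected graph: edges are unordered pairs, so {a, b} == {b, a}.
undirected_edges: Set[FrozenSet[str]] = {frozenset({"a", "b"}), frozenset({"b", "c"})}

def has_directed_edge(u: str, v: str) -> bool:
    return (u, v) in directed_edges

def has_undirected_edge(u: str, v: str) -> bool:
    return frozenset({u, v}) in undirected_edges

print(has_directed_edge("a", "b"), has_directed_edge("b", "a"))      # True False
print(has_undirected_edge("a", "b"), has_undirected_edge("b", "a"))  # True True
```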

Considering the graph representation below, the nodes are shown, together with the edges and the edge options.

LangGraph

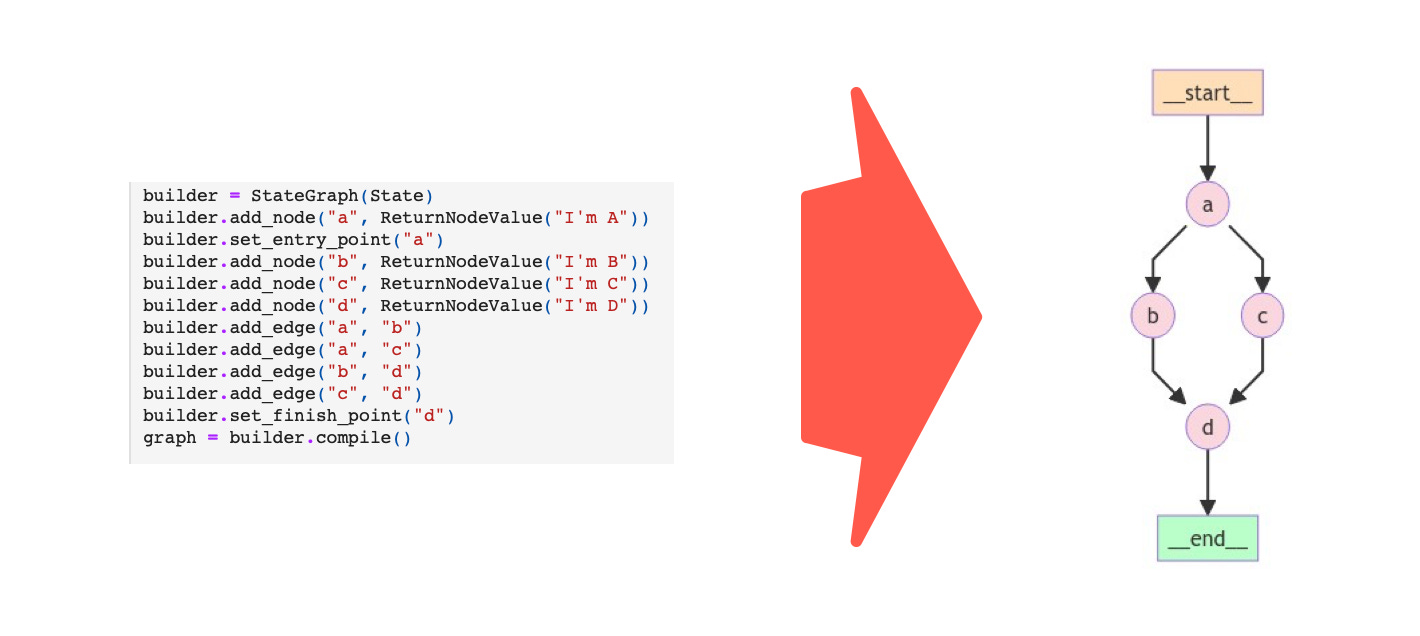

Again considering the image above, a snippet of LangGraph Python code is shown on the left, with the graph drawn out on the right. In the code you can see where each node is defined, using builder.add_node with a ReturnNodeValue, and where each edge between nodes is defined, using builder.add_edge.

You also see that a is set as the entry_point and d as the finish_point.
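To show what such a builder does when the graph runs, here is a pure-Python sketch that needs no LangGraph install. `MiniGraphBuilder` is hypothetical and is not how LangGraph is implemented; the behaviour of `ReturnNodeValue` (appending its value to a shared list) and the fan-out from `a` to `b` and `c` that joins again at `d` are assumptions for illustration, since the exact edges in the image may differ. Nodes reachable in the same step run together, and their updates are appended rather than overwritten.

```python
from typing import Callable, Dict, List

State = Dict[str, List[str]]

class ReturnNodeValue:
    """Hypothetical node: returns its own value as an update to 'aggregate'."""
    def __init__(self, value: str) -> None:
        self._value = value

    def __call__(self, state: State) -> State:
        return {"aggregate": [self._value]}

class MiniGraphBuilder:
    """Hypothetical, simplified graph runner; not the LangGraph implementation."""
    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, List[str]] = {}
        self.entry_point = ""
        self.finish_point = ""

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, source: str, target: str) -> None:
        self.edges.setdefault(source, []).append(target)

    def run(self, state: State) -> State:
        frontier = [self.entry_point]
        while frontier:
            # Every node in the current step sees the same input state.
            updates = [self.nodes[name](state) for name in frontier]
            for update in updates:
                # Reducer: append to the list instead of overwriting it.
                state = {"aggregate": state["aggregate"] + update["aggregate"]}
            if self.finish_point in frontier:
                break
            # Next step: all successors of the nodes just run, each only once.
            frontier = sorted({t for name in frontier for t in self.edges.get(name, [])})
        return state

builder = MiniGraphBuilder()
for node_name in ["a", "b", "c", "d"]:
    builder.add_node(node_name, ReturnNodeValue(f"I'm {node_name.upper()}"))
# Assumed topology: a fans out to b and c, which both join at d.
builder.add_edge("a", "b")
builder.add_edge("a", "c")
builder.add_edge("b", "d")
builder.add_edge("c", "d")
builder.entry_point = "a"
builder.finish_point = "d"

result = builder.run({"aggregate": []})
print(result["aggregate"])  # ["I'm A", "I'm B", "I'm C", "I'm D"]
```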

LangGraph is a module built on top of LangChain to better enable the creation of cyclical graphs, which are often needed for agent runtimes.

One of the big value propositions of LangChain is the ability to easily create custom chains, also known as flow engineering. By combining LangGraph with LangChain agents, agents can be both directed and cyclic.

A Directed Acyclic Graph (DAG) is a type of graph used in computer science and mathematics. Here’s a simple explanation:

Directed: Each connection (or edge) between nodes (or vertices) has a direction, like a one-way street. It shows which way you can go from one node to another.

Acyclic: It doesn’t have any cycles. This means if you start at one node and follow the directions, you can never return to the same node. There’s no way to get stuck in a loop.

Imagine it as a family tree or a flowchart where you can only move forward and never return to the same point you started from.
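One way to check whether a directed graph is acyclic is Kahn's algorithm, sketched below: repeatedly remove nodes that have no remaining incoming edges; if some nodes can never be removed, they sit on a cycle. The two example graphs are hypothetical.

```python
from collections import deque
from typing import Dict, List

def is_dag(edges: Dict[str, List[str]]) -> bool:
    """Kahn's algorithm: the graph is acyclic if and only if every node can be
    removed in an order where it has no remaining incoming edges."""
    nodes = set(edges) | {t for targets in edges.values() for t in targets}
    in_degree = {n: 0 for n in nodes}
    for targets in edges.values():
        for t in targets:
            in_degree[t] += 1
    queue = deque(n for n in nodes if in_degree[n] == 0)
    removed = 0
    while queue:
        node = queue.popleft()
        removed += 1
        for t in edges.get(node, []):
            in_degree[t] -= 1
            if in_degree[t] == 0:
                queue.append(t)
    return removed == len(nodes)

flowchart = {"start": ["ask"], "ask": ["answer"], "answer": []}
agent_loop = {"agent": ["tools"], "tools": ["agent"]}
print(is_dag(flowchart), is_dag(agent_loop))  # True False
```

The second graph, in which a tools step feeds back into the agent, is exactly the kind of cycle a DAG forbids.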

A common pattern observed in developing more complex LLM applications is the introduction of cycles into the runtime. These cycles frequently use the LLM to determine the next step in the process.

A significant advantage of LLMs is their capability to perform these reasoning tasks, essentially functioning like an LLM in a for-loop. Systems employing this approach are often referred to as agents.
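The cycle can be sketched as two nodes, `agent` and `tools`, with a fixed edge from `tools` back to `agent` and a conditional edge out of `agent`. In this minimal sketch the LLM is replaced by a hypothetical rule so the example runs offline; in a real application the routing decision would come from the model's output. The `max_steps` guard is an assumption added so that a misbehaving model cannot loop forever.

```python
from typing import Callable, Dict, List

State = Dict[str, List[str]]

def agent(state: State) -> State:
    """Hypothetical stand-in for an LLM call: asks for a tool until it has 2 results."""
    if len(state["observations"]) < 2:
        state["messages"].append("call_tool")
    else:
        state["messages"].append("final_answer")
    return state

def tools(state: State) -> State:
    state["observations"].append(f"result {len(state['observations']) + 1}")
    return state

def route(state: State) -> str:
    """Conditional edge: the latest model output decides the next node."""
    return "tools" if state["messages"][-1] == "call_tool" else "end"

NODES: Dict[str, Callable[[State], State]] = {"agent": agent, "tools": tools}

def run(state: State, max_steps: int = 10) -> State:
    node = "agent"
    for _ in range(max_steps):  # guard against infinite loops
        state = NODES[node](state)
        if node == "agent":
            node = route(state)
            if node == "end":
                break
        else:
            node = "agent"  # fixed edge: tools -> agent (this creates the cycle)
    return state

final = run({"messages": [], "observations": []})
print(final["messages"])  # ['call_tool', 'call_tool', 'final_answer']
```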

Agents & Control

However, looping agents often require granular control at various stages.

Makers might need to ensure that an agent always calls a specific tool first or seek more control over how tools are utilised.

Additionally, they may want to use different prompts for the agent depending on its current state.
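Both kinds of control can be expressed as ordinary code wrapped around the model. This is a minimal sketch with hypothetical function names and prompts: `choose_tool` overrides the model's choice on the first step, and `choose_prompt` selects a prompt from the agent's current state. In a LangGraph application, logic like this would typically live inside nodes and conditional edges rather than in free-standing helpers.

```python
from typing import Dict, List

# Hypothetical system prompts, keyed by the agent's current phase.
PROMPTS: Dict[str, str] = {
    "researching": "You are gathering facts. Call a tool if you need data.",
    "answering": "You have enough facts. Write a concise final answer.",
}

def choose_tool(tool_calls_so_far: List[str], model_choice: str,
                required_first_tool: str = "Search") -> str:
    """Override the model on the first step so that one tool is always called first."""
    if not tool_calls_so_far:
        return required_first_tool
    return model_choice

def choose_prompt(tool_calls_so_far: List[str]) -> str:
    """Select a different prompt depending on the agent's current state."""
    phase = "researching" if len(tool_calls_so_far) < 2 else "answering"
    return PROMPTS[phase]

calls: List[str] = []
calls.append(choose_tool(calls, model_choice="Calculator"))  # the model wanted Calculator first
calls.append(choose_tool(calls, model_choice="Calculator"))
print(calls)                 # ['Search', 'Calculator']
print(choose_prompt(calls))  # You have enough facts. Write a concise final answer.
```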

Exposing A Narrow Interface

At its core, LangGraph provides a streamlined interface built on top of LangChain.

Why LangGraph?

LangGraph is framework-agnostic, with each node functioning as a regular Python function.

It extends the core Runnable API (a shared interface for streaming, async, and batch calls) to facilitate:

Seamless state management across multiple conversation turns or tool usages.

Flexible routing between nodes based on dynamic criteria

Smooth transitions between LLMs and human intervention

Persistence for long-running, multi-session applications
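The state-management and persistence points can be illustrated without any library. This minimal sketch mirrors the idea behind the `Annotated[list, add_messages]` annotation used in the chatbot code below: a reducer appends updates instead of overwriting them, and a hypothetical in-memory checkpoint store keyed by a thread ID lets a conversation resume across turns. LangGraph ships its own reducers and checkpointers; the helpers here are illustrative stand-ins, not its API.

```python
import copy
from typing import Any, Callable, Dict, List

# A reducer decides how a node's update is merged into the existing state.
def append_reducer(current: List[Any], update: List[Any]) -> List[Any]:
    return current + update  # keep history instead of overwriting it

REDUCERS: Dict[str, Callable[[List[Any], List[Any]], List[Any]]] = {"messages": append_reducer}

def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    new_state = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        new_state[key] = reducer(state.get(key, []), value) if reducer else value
    return new_state

# Hypothetical in-memory checkpoint store: one saved state per conversation thread.
CHECKPOINTS: Dict[str, Dict[str, Any]] = {}

def run_turn(thread_id: str, user_message: str) -> Dict[str, Any]:
    state = copy.deepcopy(CHECKPOINTS.get(thread_id, {"messages": []}))
    state = apply_update(state, {"messages": [("user", user_message)]})
    reply = f"echo: {user_message}"  # stand-in for the chatbot node
    state = apply_update(state, {"messages": [("assistant", reply)]})
    CHECKPOINTS[thread_id] = state  # persist so the next turn resumes here
    return state

run_turn("thread-1", "Hi")
state = run_turn("thread-1", "Still there?")
run_turn("thread-2", "Hello")
print(len(state["messages"]), len(CHECKPOINTS["thread-2"]["messages"]))  # 4 2
```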

LangGraph Chatbot

Below is a working LangGraph chatbot, based on an Anthropic model (Claude 3 Haiku). The base code is copied from LangChain example code in their cookbook.

%%capture --no-stderr

%pip install -U langgraph langsmith

# Used for this tutorial; not a requirement for LangGraph

%pip install -U langchain_anthropic

#################################

import getpass

import os

def _set_env(var: str):

if not os.environ.get(var):

os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("ANTHROPIC_API_KEY")

#################################

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph

from langgraph.graph.message import add_messages

class State(TypedDict):

# Messages have the type "list". The `add_messages` function

# in the annotation defines how this state key should be updated

# (in this case, it appends messages to the list, rather than overwriting them)

messages: Annotated[list, add_messages]

graph_builder = StateGraph(State)

#################################

from langchain_anthropic import ChatAnthropic

llm = ChatAnthropic(model="claude-3-haiku-20240307")

def chatbot(state: State):

return {"messages": [llm.invoke(state["messages"])]}

# The first argument is the unique node name

# The second argument is the function or object that will be called whenever

# the node is used.

graph_builder.add_node("chatbot", chatbot)

#################################

graph_builder.set_entry_point("chatbot")

#################################

graph_builder.set_finish_point("chatbot")

#################################

graph = graph_builder.compile()

#################################

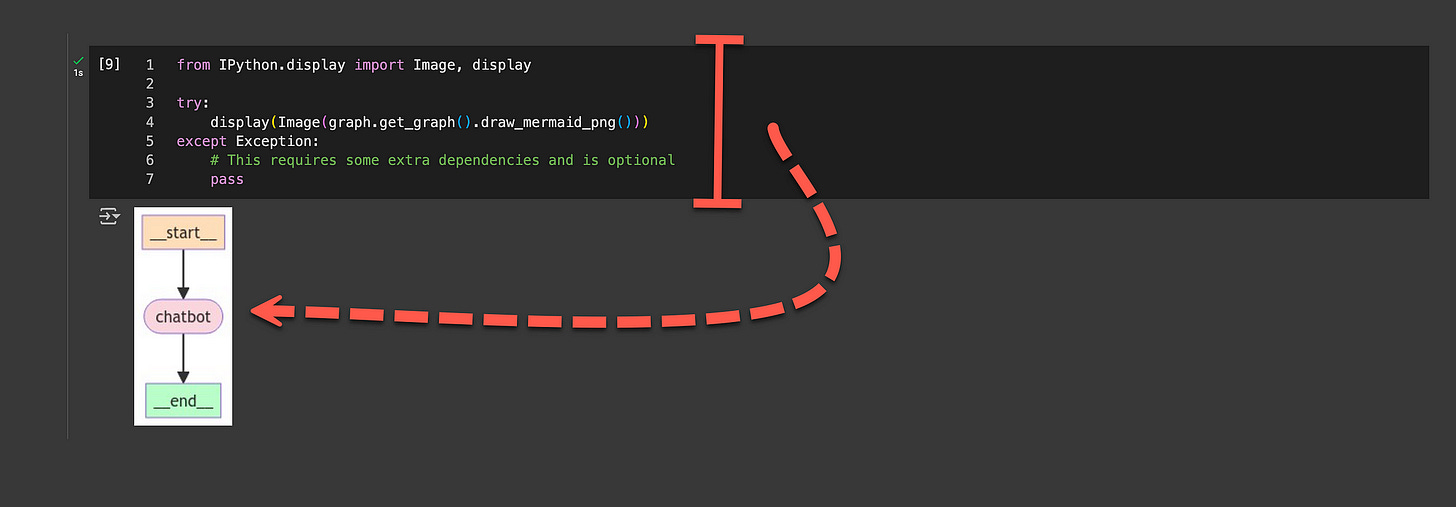

from IPython.display import Image, display

try:

display(Image(graph.get_graph().draw_mermaid_png()))

except Exception:

# This requires some extra dependencies and is optional

pass

#################################

while True:

user_input = input("User: ")

if user_input.lower() in ["quit", "exit", "q"]:

print("Goodbye!")

break

for event in graph.stream({"messages": ("user", user_input)}):

for value in event.values():

print("Assistant:", value["messages"][-1].content)

#################################Below the snipped showing how the graphic rendering the flow.

Finally

The graph data type is a powerful tool for representing data visually. Beyond the visual representation, the relationships between nodes make it ideal for creating a spatial representation of data.

Graph data types are also valuable for their semantic behaviour, defined from the point of view of a user of the data.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.