LangSmith, LangGraph Cloud & LangGraph Studio

Here I do an end-to-end walkthrough of an agent built with LangGraph, deployed to LangGraph Cloud and viewed in LangGraph Studio. I end with LangSmith, for managing applications and LLM performance.

Introduction

Developments at the intersection of language and AI have been taking place at a tremendous pace, and LangChain finds itself at the forefront of shaping how generative AI applications are developed and managed.

A few initial observations regarding generative AI and language:

A few months ago it was thought that OpenAI had captured the market with their highly capable LLMs.

Then a slew of open-sourced models, most notably from Meta, disrupted the perceived commercial model.

LLM providers realised that Language Models would become a mere utility, and started to focus on end-user applications: RAG-like functionality (referred to as grounding), agent-like functionality and personal assistants.

Hallucination had to be solved for, and it was discovered that LLMs do not have emergent capabilities, but rather do exceptionally well at in-context learning (ICL). An application structure developed around implementing, scaling and managing ICL implementations, which we now know as RAG.

RAG (non-gradient) started to be preferred over fine-tuning (gradient) approaches because it is transparent and not as opaque as fine-tuning. This adds to generative AI apps being observable, inspectable and easily modifiable.

Because we started using all aspects of LLMs (NLG, reasoning, planning, dialog state management, etc.) except their knowledge-intensive nature, Small Language Models (SLMs) became very much applicable.

This was due to very capable open-sourced SLMs, quantisation, local and offline inference, and advanced capability in reasoning through chain-of-thought training.

The focus is now shifting to two aspects. The first is a data-centric approach, where unstructured data can be discovered, designed and augmented for RAG and fine-tuning. Recent fine-tuning did not focus on augmenting the knowledge-intensive nature of Language Models, but rather on imbuing the LMs with specific behavioural capabilities.

This is evident in the recent acquisition by OpenAI to move closer to the data portion and to delivering RAG solutions.

The second aspect is the need for a no-code to low-code AI productivity suite providing access to models, hosting, flow-engineering, fine-tuning, a prompt studio and guardrails.

There is also a notable movement to add graph data. A graph is an abstract data type. An abstract data type is a mathematical model for data types, defined by its behaviour (semantics) from the point of view of a user of the data. Abstract data types are in stark contrast with data structures, which are concrete representations of data and reflect the point of view of an implementer, not a user. A graph representation is less opaque and easy to interpret.

Back To LangChain

LangChain introduced LangSmith as a tool for detailed tracing and management of generative AI applications. The offering included a prompt playground and a prompt hub.

LangChain also recently introduced LangGraph, which adds a degree of structure to agentic applications.

A directed graph (or digraph) is a graph that is made up of a set of nodes connected by directed edges.

A graph data structure consists of a finite set of nodes together with a set of unordered pairs of these nodes for an undirected graph, or a set of ordered pairs for a directed graph.
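To make the difference between the two definitions concrete, here is a minimal sketch in plain Python. It does not use LangGraph; the node names (`start`, `agent`, `action`, `end`) and the helper `build_adjacency` are my own assumptions, chosen only for illustration.

```python
def build_adjacency(edges, directed=True):
    """Return {node: sorted list of neighbours} for a list of (source, target) pairs."""
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, set()).add(target)
        adjacency.setdefault(target, set())  # make sure every node appears as a key
        if not directed:
            # An undirected edge is an unordered pair, so store it both ways.
            adjacency[target].add(source)
    return {node: sorted(neighbours) for node, neighbours in adjacency.items()}


# Hypothetical node names, loosely modelled on an agent that can call a tool.
edges = [("start", "agent"), ("agent", "action"), ("action", "agent"), ("agent", "end")]

digraph = build_adjacency(edges, directed=True)
undirected = build_adjacency(edges, directed=False)

print(digraph["agent"])     # only the nodes the agent points to
print(undirected["agent"])  # every node that shares an edge with the agent
```

In the directed version `start` is not a neighbour of `agent`, because the edge only runs from `start` to `agent`. That direction is what lets a graph describe the order in which an application moves from step to step.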

Considering the graph representation below, the nodes are shown, together with the edges and the edge options.
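The idea of nodes, edges and edge options can also be sketched in a few lines of plain Python. This is not the LangGraph API, only an illustration of the concept: the `agent` and `action` functions are stand-ins for an LLM call and a tool call, and every name here is an assumption of mine.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]


def agent(state: State) -> State:
    # Stand-in for an LLM call: ask for a tool once, then answer.
    if "observation" not in state:
        return {**state, "next_step": "use_tool"}
    return {**state, "next_step": "finish", "answer": f"Based on: {state['observation']}"}


def action(state: State) -> State:
    # Stand-in for a tool call such as a web search.
    return {**state, "observation": "stub search result"}


def route_after_agent(state: State) -> str:
    # The "edge options": one of several outgoing edges is chosen at run time.
    return "action" if state["next_step"] == "use_tool" else "END"


NODES: Dict[str, Callable[[State], State]] = {"agent": agent, "action": action}
EDGES: Dict[str, Callable[[State], str]] = {
    "agent": route_after_agent,       # conditional edge
    "action": lambda state: "agent",  # fixed edge back to the agent
}


def run(state: State, entry: str = "agent", max_steps: int = 10) -> Tuple[State, List[str]]:
    """Walk the graph from the entry node until END, recording the visited nodes."""
    visited: List[str] = []
    current = entry
    while current != "END" and len(visited) < max_steps:
        state = NODES[current](state)
        visited.append(current)
        current = EDGES[current](state)
    return state, visited


final_state, path = run({"question": "What is LangGraph?"})
print(path)
print(final_state["answer"])
```

The loop from `action` back to `agent` is what makes this a cyclic graph rather than a simple chain, and the `max_steps` guard is there so a misbehaving router cannot loop forever.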

LangSmith

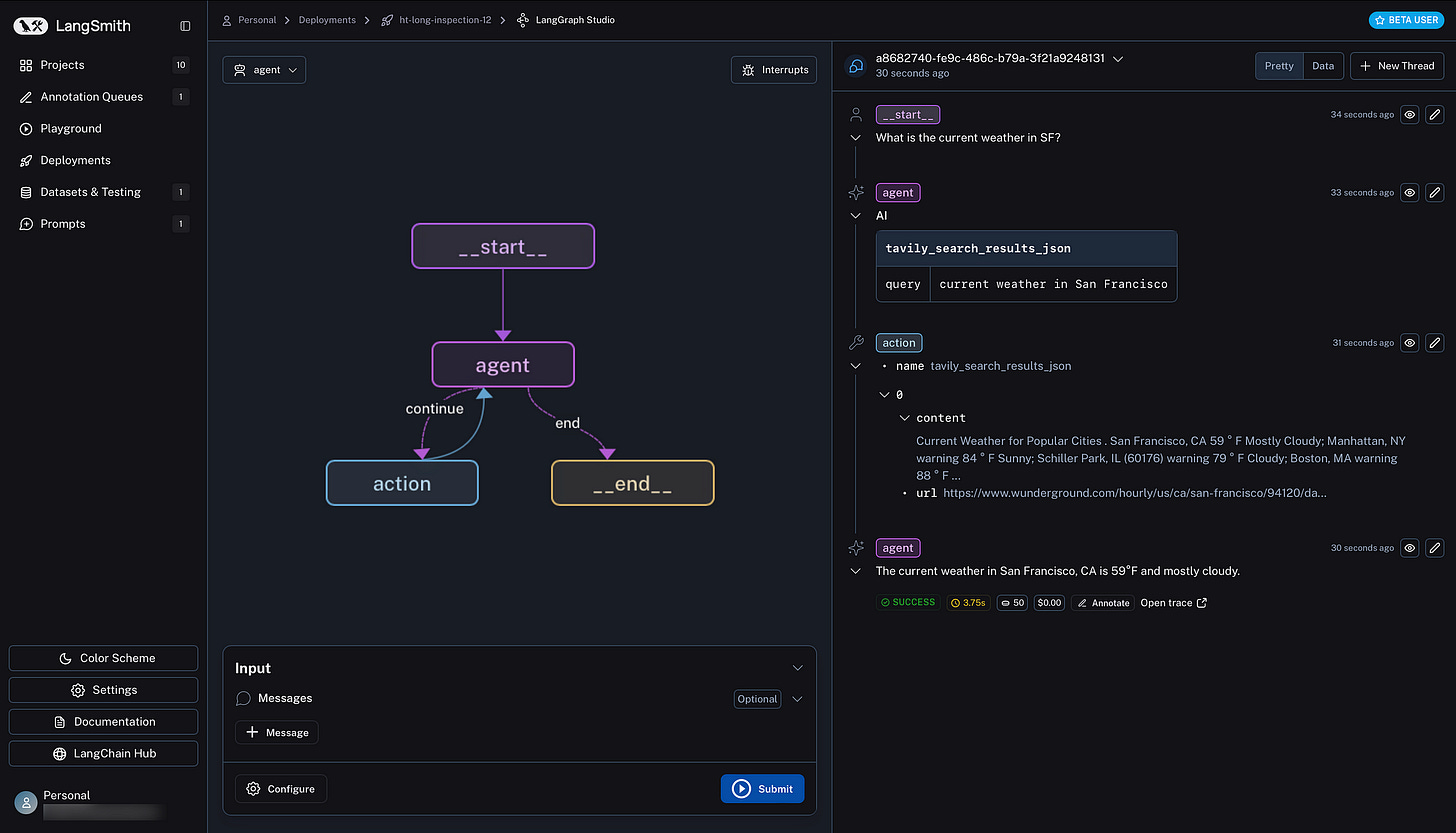

In the image below, the LangSmith console can be seen with six elements listed on the left: Projects, Annotation Queues, Playground, Deployments, Datasets & Testing and Prompts.

The addition for LangGraph is the Deployments section, which is used to manage LangGraph applications.

It needs to be stated that LangGraph Studio is a graphic representation of the code you have written. Studio is a way to visualise and gain insights into data flow.

Studio is not a flow creation or development tool: code can be visually represented in Studio, but it cannot be edited or changed there. Studio is therefore an observation, debugging and conversation flow tool.

Deploying A LangGraph App to Studio

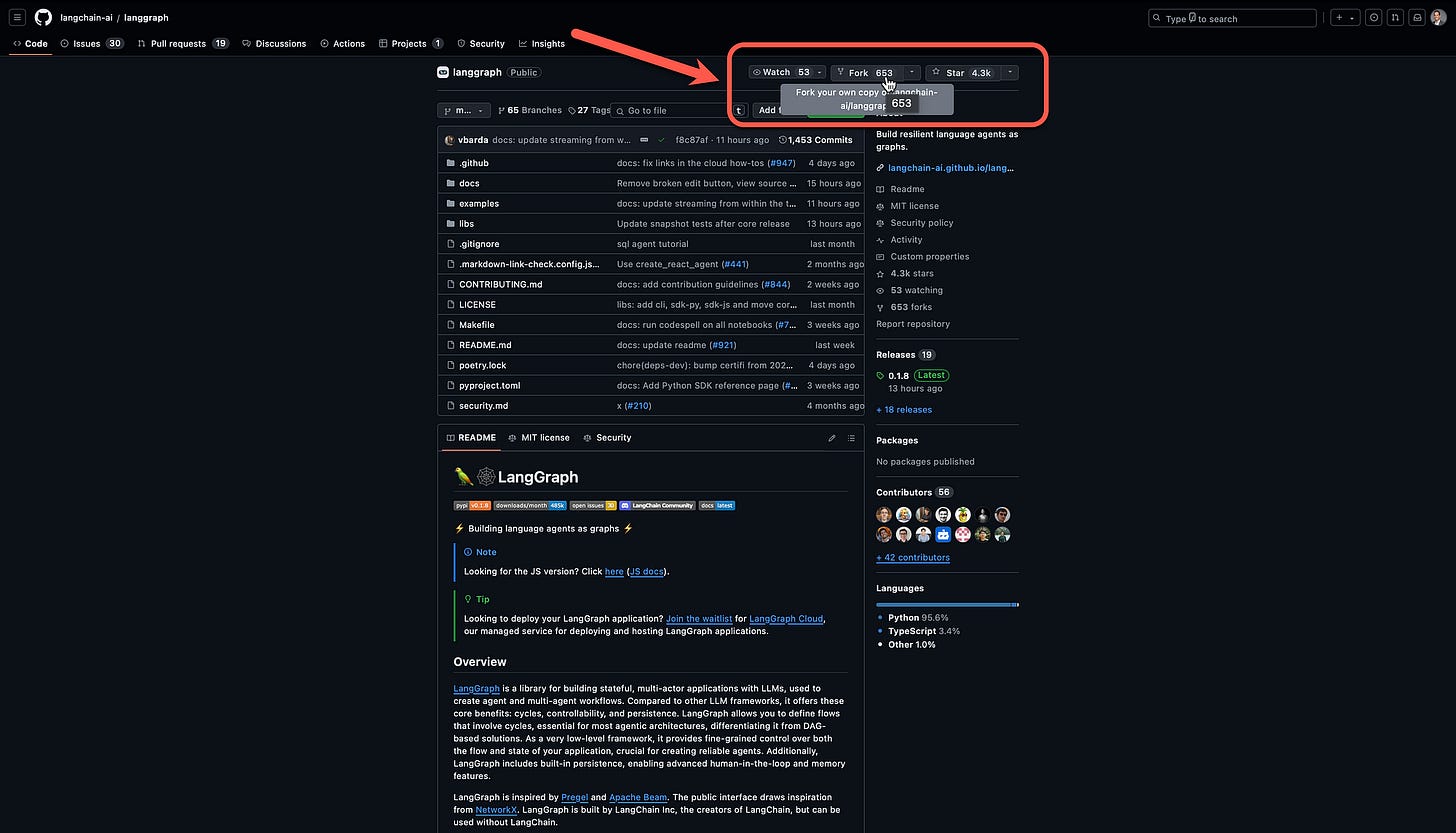

To create my first LangGraph application, I have to fork the GitHub example application by LangChain.

Below is the repository in my GitHub account, showing the forked application…

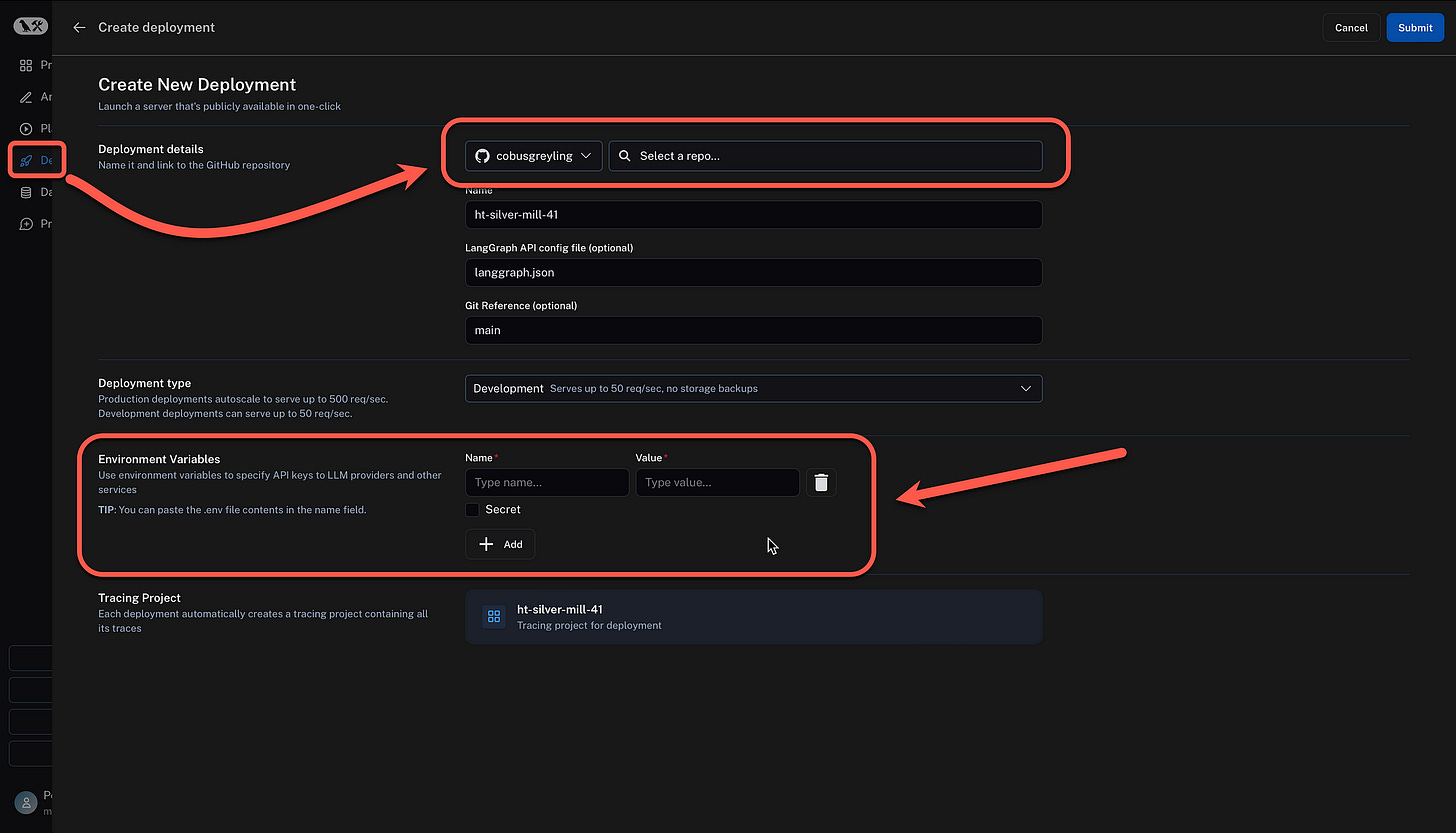

Back in LangSmith, I need to deploy the forked repository instance to LangGraph Cloud. Below I show the sequence of steps to deploy a LangGraph application to LangGraph Cloud.

Again, below you can see that I reference my GitHub account from LangSmith and select the repository where my LangGraph application resides. I can also set the environment variables; in this case these are my OpenAI API key, Anthropic API key, etc.
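Because a missing key usually only surfaces when the first model call fails, a small check at start-up can help. The sketch below is an assumption on my side, not part of the example repository: I assume the conventional variable names `OPENAI_API_KEY` and `ANTHROPIC_API_KEY`, and `missing_keys` is a hypothetical helper.

```python
from typing import Iterable, List, Mapping

# Assumed variable names; adjust to whatever your application actually reads.
REQUIRED_KEYS = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY")


def missing_keys(env: Mapping[str, str], required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """Return the required variables that are unset or blank in the given environment."""
    return [name for name in required if not env.get(name, "").strip()]


# Example with a stand-in environment (placeholder values, not real keys).
example_env = {"OPENAI_API_KEY": "sk-placeholder", "ANTHROPIC_API_KEY": ""}
print(missing_keys(example_env))
```

In a real application the same function could be called with `os.environ` when the process starts, so that a deployment with an unset variable fails early with a clear message.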

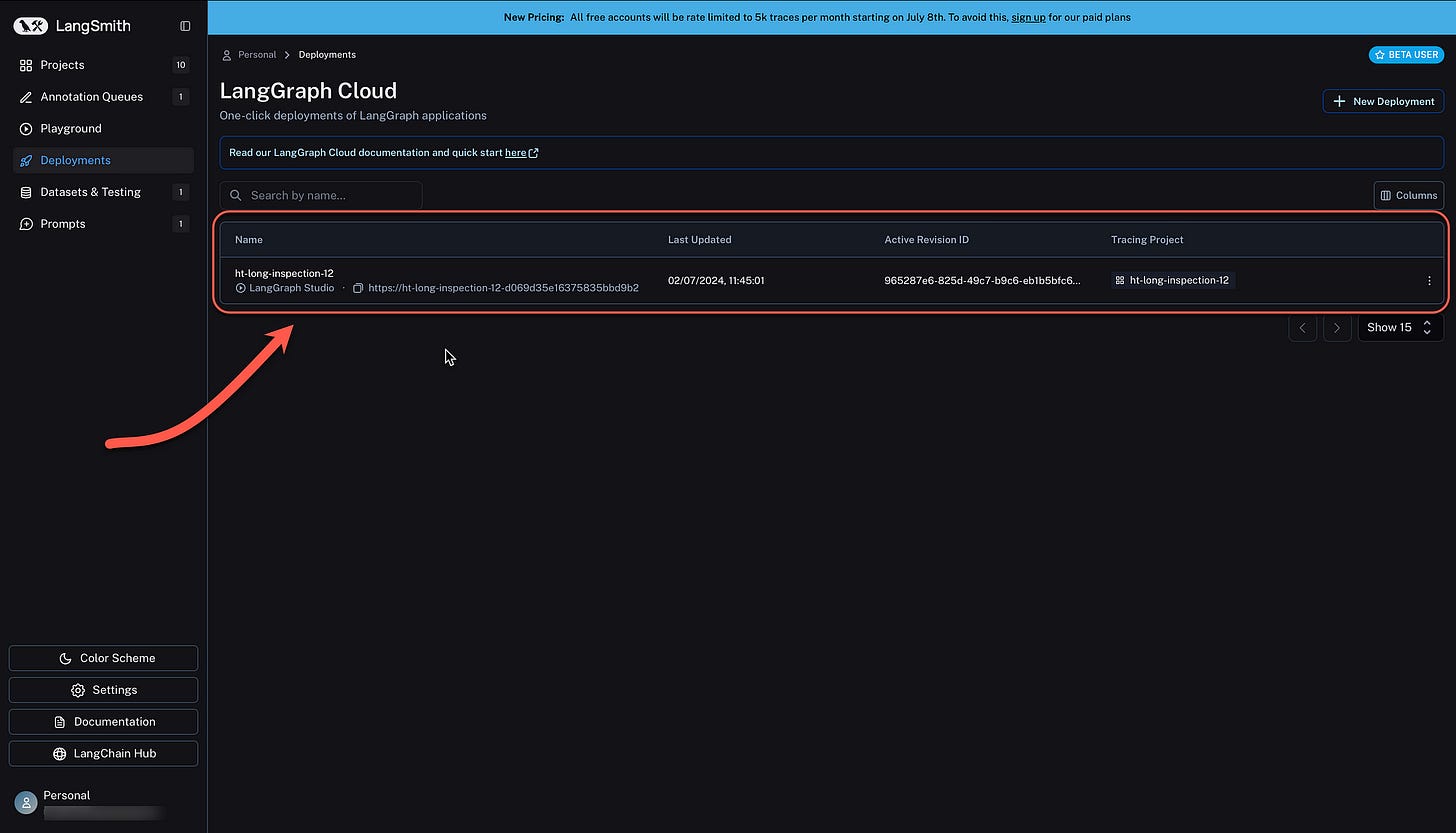

After successful deployment, the LangGraph Cloud instance is shown, with the tracing project, status, and more.

LangGraph Studio

When LangGraph Studio is opened, a visual graph representation of the application is shown, which can be interacted with. Pauses or breaks can be added to the application, and the nodes can be ordered in a way that is meaningful to the user.

There is a Configuration option, as well as message settings. Notice that on the right the status, tokens spent, latency and more are shown.

Consider below how an interrupt is added to the agent node: the flow of the application stops and waits for the maker to explicitly continue the execution of the flow.

The flow is shown on the right, with the action which was taken, the URL and content, and the agent response.
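The pause-and-resume behaviour can be illustrated with a plain Python generator. This is only a conceptual sketch and does not use LangGraph's own interrupt mechanism; the step names and the helper `run_with_interrupts` are assumptions of mine.

```python
from typing import Callable, Dict, Iterator, List, Set, Tuple

State = Dict[str, List[str]]
Step = Tuple[str, Callable[[State], State]]


def run_with_interrupts(steps: List[Step], state: State, interrupt_before: Set[str]) -> Iterator[str]:
    """Run steps in order, pausing (yielding the node name) before any interrupted node."""
    for name, fn in steps:
        if name in interrupt_before:
            yield name  # execution stops here until the caller resumes the generator
        state.update(fn(state))


def make_step(name: str) -> Callable[[State], State]:
    # Each stand-in node simply records that it ran.
    return lambda state: {"log": state["log"] + [name]}


steps = [(name, make_step(name)) for name in ("prepare", "agent", "action")]
state: State = {"log": []}
runner = run_with_interrupts(steps, state, interrupt_before={"agent"})

paused_at = next(runner)               # runs "prepare", then pauses before "agent"
log_while_paused = list(state["log"])  # the maker can inspect the state here

remaining_pauses = list(runner)        # explicitly continue; no further interrupts
print(paused_at, log_while_paused, state["log"])
```

Nothing after the interrupt runs until the caller asks for it, which mirrors how the flow in Studio waits for the maker to continue.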

Flow Trace

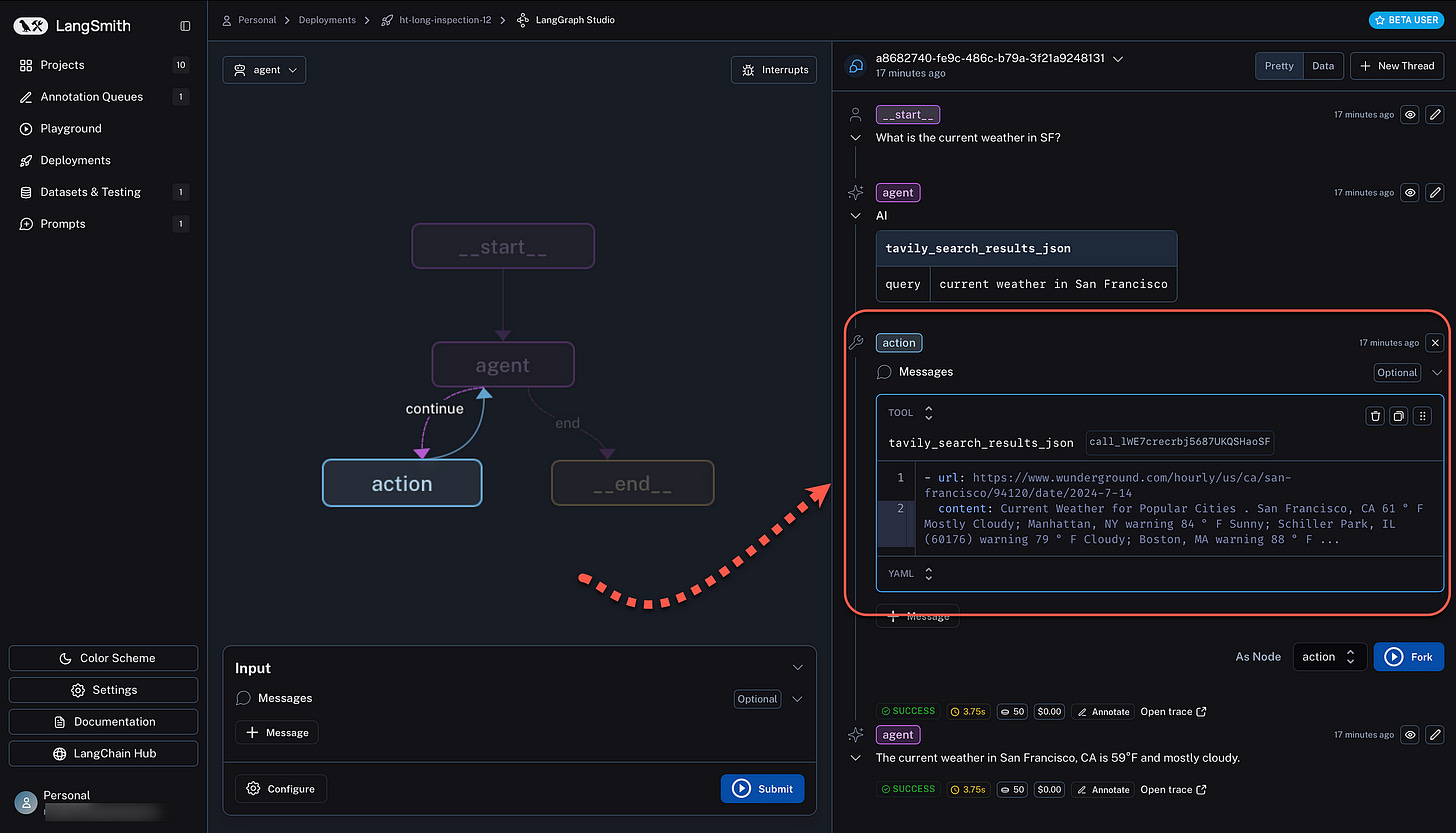

Below it is shown how, when the maker hovers over the graph nodes in the trace on the right, the specific node is highlighted on the left.

Flow Forking

LangChain refers to this as a time travel feature: a specific node in the flow can be forked. In the example below, I change the actual data of the action taken by the agent…

Then I can execute the flow again, and as seen below, there are two forked versions and the maker can toggle between the two results. This interface is ideal for testing different permutations along the flow to see what the end result will be.
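Conceptually, forking amounts to taking a snapshot of the state after a node, editing it, and replaying the rest of the flow from that point. The sketch below shows this in plain Python under my own assumptions; it is not how LangGraph stores checkpoints, and the node functions and query strings are hypothetical.

```python
import copy
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
Step = Tuple[str, Callable[[State], State]]


def run_from(steps: List[Step], state: State, start: int = 0) -> List[State]:
    """Run steps[start:] and return an independent snapshot of the state after each one."""
    snapshots: List[State] = []
    for _, fn in steps[start:]:
        state = fn(copy.deepcopy(state))  # copy so earlier snapshots are never mutated
        snapshots.append(state)
    return snapshots


def agent(state: State) -> State:
    state["action_input"] = "weather in SF"  # hypothetical tool input chosen by the agent
    return state


def action(state: State) -> State:
    state["observation"] = f"results for '{state['action_input']}'"
    return state


def respond(state: State) -> State:
    state["answer"] = f"Answer using {state['observation']}"
    return state


steps: List[Step] = [("agent", agent), ("action", action), ("respond", respond)]

original = run_from(steps, {"question": "What is the weather?"})

# Fork: edit the snapshot taken after the agent node, then replay from the next node.
forked_start = copy.deepcopy(original[0])
forked_start["action_input"] = "weather in LA"
fork = run_from(steps, forked_start, start=1)

print(original[-1]["answer"])
print(fork[-1]["answer"])
```

Both results exist side by side after the fork, which is what makes it possible to toggle between them and compare the end result of each permutation.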

Lastly, below is the trace of the LangGraph agent shown in LangSmith; notice the level of detail available. The trace views in LangSmith include options to view runs, threads, root runs, LLM calls, etc. Data retention, metadata, threads and more can be edited.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.