Language Agent Tree Search — LATS

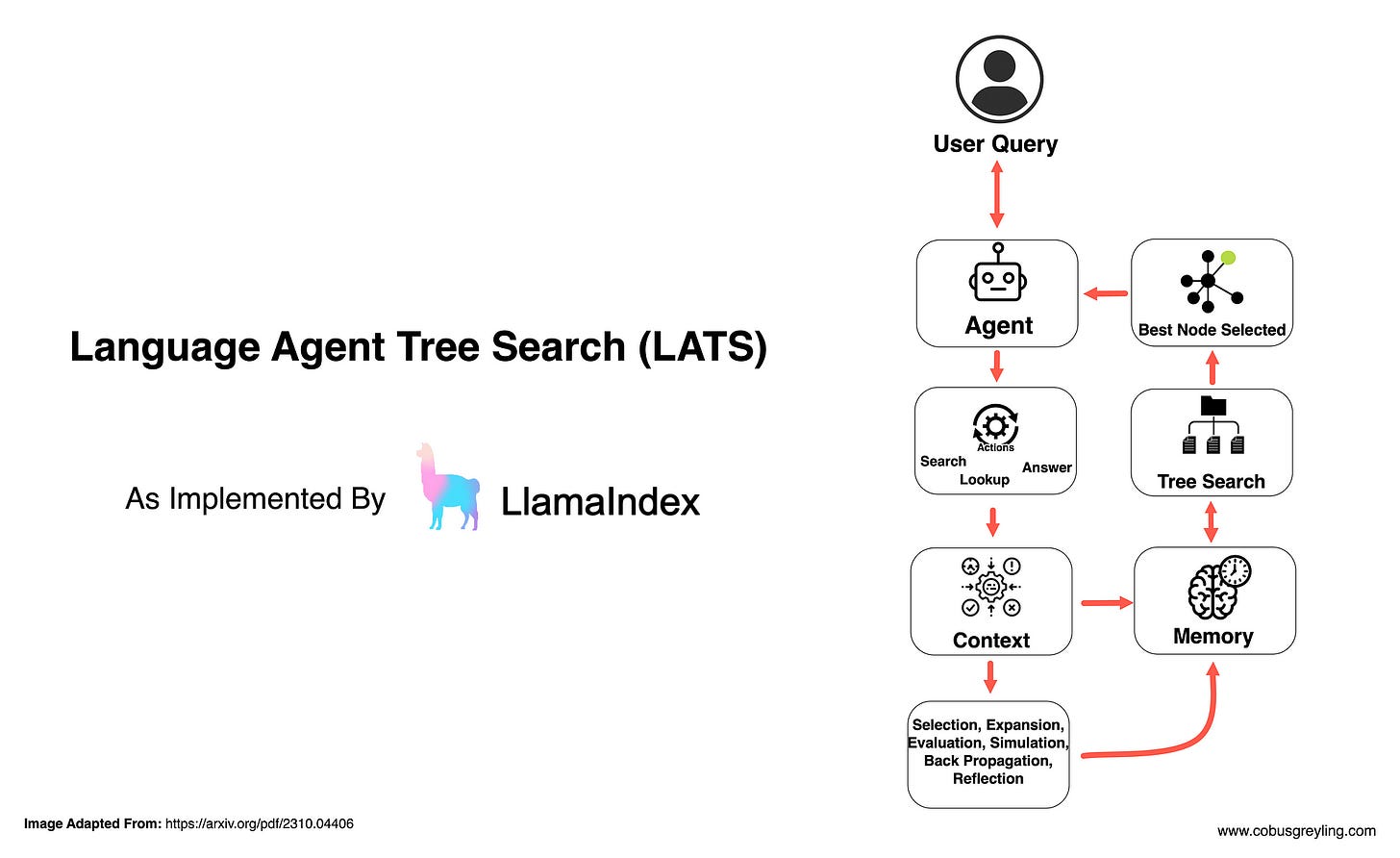

The LATS framework is a first-of-its-kind general framework that synergises the capabilities of LMs in reasoning, acting, and planning.

It moves us towards general autonomous agents capable of reasoning and decision making in a variety of environments.

Introduction

It has been known for a while that LLMs cannot be used in isolation for complex, fine-grained and contextually aware implementations.

LLMs need not only to be enhanced by external tools, but also to form the backbone of an agentic framework. This is achieved by making use of external tools and semantic feedback.

External tools can include web search, access to particular APIs, document search and more.

The study found that most conversational UIs fall short of human-level deliberate and thoughtful decision making. In addition, many methods fail to consider multiple reasoning paths or to plan ahead.

Often such methods operate in isolation, lacking the incorporation of external feedback that can improve reasoning.

Language Agent Tree Search Unifies Reasoning, Acting & Planning in Language Models.

Practical Working Example

A framework like LATS might seem opaque at first, but later in this article I’ll step through a LlamaIndex implementation of it. Seeing the framework in action goes a long way towards demystifying it.

Back To LATS

Initial Considerations

The framework incorporates the basic components of human conversation: memory, contextual awareness, and a human-like thought process underpinned by search.

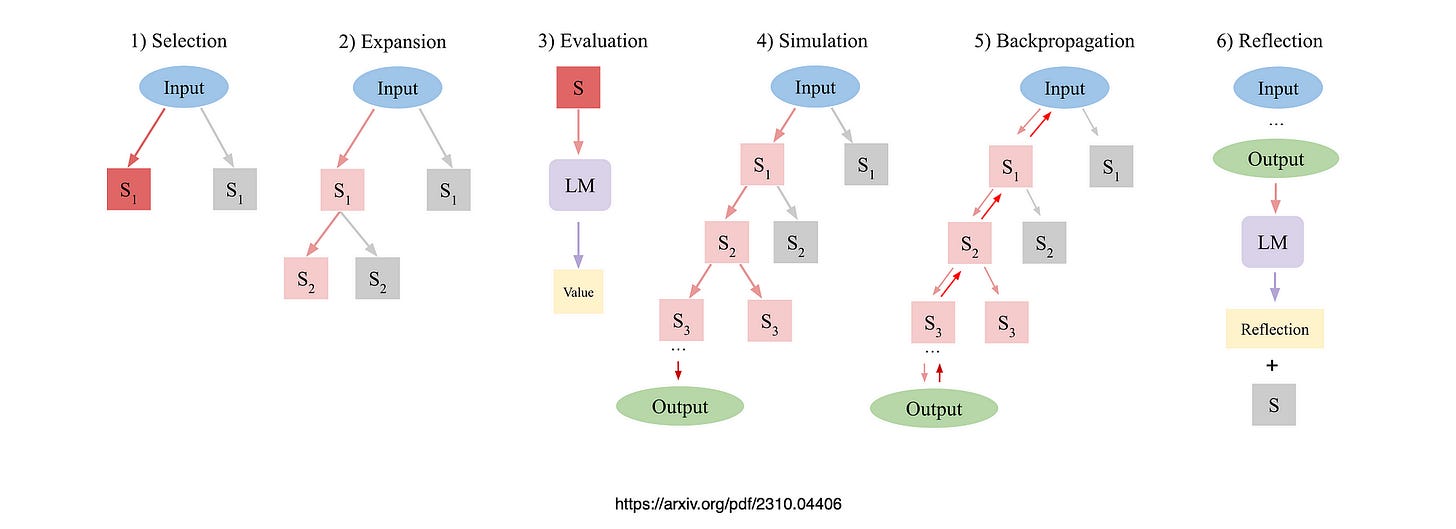

The LlamaIndex implementation of a LATS agent shows how the ambit of the agent needs to be set: the number of possible sub-actions to explore under each node, together with how deep each exploration of the search goes.
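To get a feel for how these two settings bound the work, here is a minimal sketch of the arithmetic. It assumes a fully expanded tree in which every node gets the same number of children down to the stated depth. The helper `max_tree_nodes` is hypothetical and not part of LlamaIndex, and a real run will usually stop well before this bound.

```python
def max_tree_nodes(num_expansions: int, max_depth: int) -> int:
    """Count the nodes below the root of a fully expanded search tree.

    Assumption: every node has exactly `num_expansions` children and the
    tree is explored down to `max_depth` levels.
    """
    if num_expansions < 1 or max_depth < 0:
        raise ValueError("num_expansions must be >= 1 and max_depth >= 0")
    # Level 1 has b nodes, level 2 has b**2 nodes, and so on.
    return sum(num_expansions ** level for level in range(1, max_depth + 1))


for depth in (3, 5):
    print(depth, max_tree_nodes(2, depth))
# prints:
# 3 14
# 5 62
```

With two expansions per node, going from depth 3 to depth 5 raises the bound from 14 to 62 nodes. Each node typically costs one or more LLM calls, which is why both values are worth keeping small.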

Latency always comes to mind when considering these agents, as does the cost of running the LLM backbone. However, there are solutions to these challenges.

Cost can be curtailed by setting a budget for the LLM, as mentioned earlier. In cases where the budget is reached with no conclusive answer, the conversation and context can be transferred to a human.
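The budget-then-handoff idea can be sketched in a few lines. This is an illustration under stated assumptions, not how LlamaIndex enforces its limits: `answer_or_escalate`, `step` and the returned dictionary keys are all hypothetical names, and `step` stands in for one LLM call that returns a thought plus an optional final answer.

```python
def answer_or_escalate(step, max_llm_calls):
    """Call `step` until it yields an answer or the call budget is spent.

    `step(transcript)` is a hypothetical stand-in for one LLM call; it
    returns a (thought, answer) pair where answer is None if inconclusive.
    """
    transcript = []  # context that is handed to a human if needed
    for call_number in range(1, max_llm_calls + 1):
        thought, answer = step(transcript)
        transcript.append(thought)
        if answer is not None:
            return {"status": "answered", "answer": answer, "llm_calls": call_number}
    # Budget reached without a conclusive answer: pass the context on.
    return {"status": "handoff_to_human", "context": transcript, "llm_calls": max_llm_calls}


def never_conclusive(transcript):
    """Toy step that never reaches an answer."""
    return f"attempt {len(transcript) + 1}: inconclusive", None


result = answer_or_escalate(never_conclusive, max_llm_calls=3)
print(result["status"], len(result["context"]))
# prints: handoff_to_human 3
```

Returning the transcript along with the status means the human agent picks up the conversation with everything the agent already tried.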

The LATS agent explores and finds the best trajectory based on sampled actions, and is more flexible and adaptive in problem-solving than reflexive prompting methods.

By integrating external feedback and self-reflection, LATS enhances model sensibility and enables agents to learn from experience, surpassing reasoning-based search methods.
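LATS adapts Monte Carlo Tree Search to language agents, and the select, expand, evaluate and backpropagate loop can be sketched on a toy problem. This is a simplified illustration and not the LlamaIndex or paper implementation. The functions `propose` and `score` are hypothetical stand-ins: in a real agent the LLM would sample the candidate actions, and the reward would come from LLM evaluation combined with external feedback.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    state: tuple                       # actions taken so far
    parent: Optional["Node"] = None
    children: list = field(default_factory=list)
    visits: int = 0
    total_reward: float = 0.0


def uct(node: Node, c: float = 1.0) -> float:
    """Mean reward plus an exploration bonus for rarely visited nodes."""
    if node.visits == 0:
        return math.inf  # always try an unvisited child first
    mean = node.total_reward / node.visits
    return mean + c * math.sqrt(math.log(node.parent.visits) / node.visits)


def tree_search(propose, score, num_expansions=2, max_rollouts=5, max_depth=2):
    root = Node(state=())
    best_state, best_reward = (), float("-inf")
    for _ in range(max_rollouts):
        # 1. Selection: follow the highest UCT score down to a leaf.
        node = root
        while node.children:
            node = max(node.children, key=uct)
        # 2. Expansion: add candidate next actions below the leaf.
        if len(node.state) < max_depth:
            for action in propose(node.state, num_expansions):
                node.children.append(Node(state=node.state + (action,), parent=node))
            if node.children:
                node = node.children[0]
        # 3. Evaluation: score the trajectory (stand-in for feedback).
        reward = score(node.state)
        if reward > best_reward:
            best_state, best_reward = node.state, reward
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.total_reward += reward
            node = node.parent
    return best_state, best_reward


TARGET = 4  # toy goal: pick actions whose sum equals TARGET


def propose(state, n):
    return [1, 2, 3][:n]


def score(state):
    return 1 - abs(TARGET - sum(state)) / TARGET


result = tree_search(propose, score, num_expansions=2, max_rollouts=5, max_depth=2)
print(result)
# prints: ((2, 2), 1.0)
```

The statistics written back in step 4 are what steer later rollouts towards the more promising branch, which is the sense in which the search learns from earlier attempts.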

Considering LlamaIndex for a moment, the combination of autonomous agents and RAG, referred to by LlamaIndex as Agentic RAG, is a natural culmination of best practice.

The documents ingested by the agent serve as an excellent contextual reference and make the agent specific to its domain of use.

Consider for a moment this agent being used in an organisation as an inward-facing bot, assisting employees in answering complex and often ambiguous questions.

Practical LATS Implementation

LlamaIndex Implementation

The complete LlamaIndex notebook (lats_agent.ipynb in the run-llama/llama_index repository) is referenced at the end of this article.

The notebook starts with the initial setup:

    %pip install llama-index-agent-lats
    %pip install llama-index-program-openai
    %pip install llama-index-llms-openai
    %pip install llama-index-embeddings-openai
    %pip install llama-index-core llama-index-readers-file

Then define your OpenAI API key:

    import os

    os.environ["OPENAI_API_KEY"] = "<Your API Key Goes Here>"

    import nest_asyncio

    nest_asyncio.apply()

Then the LLM and embedding models are defined:

    from llama_index.llms.openai import OpenAI
    from llama_index.embeddings.openai import OpenAIEmbedding
    from llama_index.core import Settings

    # NOTE: a higher temperature will help make the tree-expansion more diverse
    llm = OpenAI(model="gpt-4-turbo", temperature=0.6)
    embed_model = OpenAIEmbedding(model="text-embedding-3-small")

    Settings.llm = llm
    Settings.embed_model = embed_model

Download the relevant data:

    !mkdir -p 'data/10k/'
    !wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/10k/uber_2021.pdf' -O 'data/10k/uber_2021.pdf'
    !wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/10k/lyft_2021.pdf' -O 'data/10k/lyft_2021.pdf'

Index the documents:

    import os

    from llama_index.core import (
        SimpleDirectoryReader,
        VectorStoreIndex,
        load_index_from_storage,
    )
    from llama_index.core.storage import StorageContext

    if not os.path.exists("./storage/lyft"):
        # load data
        lyft_docs = SimpleDirectoryReader(
            input_files=["./data/10k/lyft_2021.pdf"]
        ).load_data()
        uber_docs = SimpleDirectoryReader(
            input_files=["./data/10k/uber_2021.pdf"]
        ).load_data()

        # build index
        lyft_index = VectorStoreIndex.from_documents(lyft_docs)
        uber_index = VectorStoreIndex.from_documents(uber_docs)

        # persist index
        lyft_index.storage_context.persist(persist_dir="./storage/lyft")
        uber_index.storage_context.persist(persist_dir="./storage/uber")
    else:
        # reload the previously persisted indices
        storage_context = StorageContext.from_defaults(
            persist_dir="./storage/lyft"
        )
        lyft_index = load_index_from_storage(storage_context)

        storage_context = StorageContext.from_defaults(
            persist_dir="./storage/uber"
        )
        uber_index = load_index_from_storage(storage_context)

Set up the two tools, or engines:

    lyft_engine = lyft_index.as_query_engine(similarity_top_k=3)
    uber_engine = uber_index.as_query_engine(similarity_top_k=3)

In typical agent fashion, each of the two agent tools is given a description. This description is important, as it helps the agent decide when and how to use the tool.

Agents can have access to multiple tools, and the more tools at the agent's disposal, the wider the range of questions it can handle.

    from llama_index.core.tools import QueryEngineTool, ToolMetadata

    query_engine_tools = [
        QueryEngineTool(
            query_engine=lyft_engine,
            metadata=ToolMetadata(
                name="lyft_10k",
                description=(
                    "Provides information about Lyft financials for year 2021. "
                    "Use a detailed plain text question as input to the tool. "
                    "The input is used to power a semantic search engine."
                ),
            ),
        ),
        QueryEngineTool(
            query_engine=uber_engine,
            metadata=ToolMetadata(
                name="uber_10k",
                description=(
                    "Provides information about Uber financials for year 2021. "
                    "Use a detailed plain text question as input to the tool. "
                    "The input is used to power a semantic search engine."
                ),
            ),
        ),
    ]

This is the setup of the LATS agent…

This is also where the budget of the agent is defined…

num_expansions refers to the number of possible sub-actions to explore under each node. num_expansions=2 means we will explore two possible next-actions for every parent action.

max_rollouts refers to how deep each exploration of the search space continues. max_rollouts=5 means a maximum depth of 5 is explored in the tree.

You can also see the reference to the LLM and the tools.

    from llama_index.agent.lats import LATSAgentWorker

    agent_worker = LATSAgentWorker.from_tools(
        query_engine_tools,
        llm=llm,
        num_expansions=2,
        max_rollouts=3,  # use -1 for unlimited rollouts
        verbose=True,
    )

    # wrap the worker in an agent that can create and run tasks
    agent = agent_worker.as_agent()

Below a question is posed to the agent. Notice how ambiguous the question is, with different conditions and nuances.

    task = agent.create_task(
        "Given the risk factors of Uber and Lyft described in their 10K files, "
        "which company is performing better? Please use concrete numbers to inform your decision."
    )

The notebook from LlamaIndex goes through a number of different permutations…
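One way to execute the task created above is to step the agent until it reports a final step, and only then ask for the response. The sketch below assumes the step-wise agent runner interface (`run_step`, `is_last` on the step output, and `finalize_response`) as it appears in the LlamaIndex agent examples; check these names against your installed version. The helper `run_to_completion` and its `max_steps` cap are hypothetical additions for illustration.

```python
def run_to_completion(agent, task, max_steps=10):
    """Step an agent through a task until it reports its last step.

    Assumes `agent.run_step(task_id)` returns an object with an `is_last`
    flag and that `agent.finalize_response(task_id)` returns the answer.
    Returns None if `max_steps` is reached first (hypothetical safeguard).
    """
    for _ in range(max_steps):
        step_output = agent.run_step(task.task_id)
        if step_output.is_last:
            # Only finalize once the agent signals that it is done.
            return agent.finalize_response(task.task_id)
    return None


# Usage with the objects defined earlier (requires an OpenAI API key):
# response = run_to_completion(agent, task)
# print(str(response))
```

Capping the number of steps is a second guard on cost, on top of num_expansions and max_rollouts, and a None result is a natural point at which to hand the conversation to a human.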

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

COBUS GREYLING

www.cobusgreyling.com

llama_index/docs/docs/examples/agent/lats_agent.ipynb at main · run-llama/llama_index

github.com

Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models

arxiv.org

https://llamahub.ai/l/agent/llama-index-agent-lats?from=agent