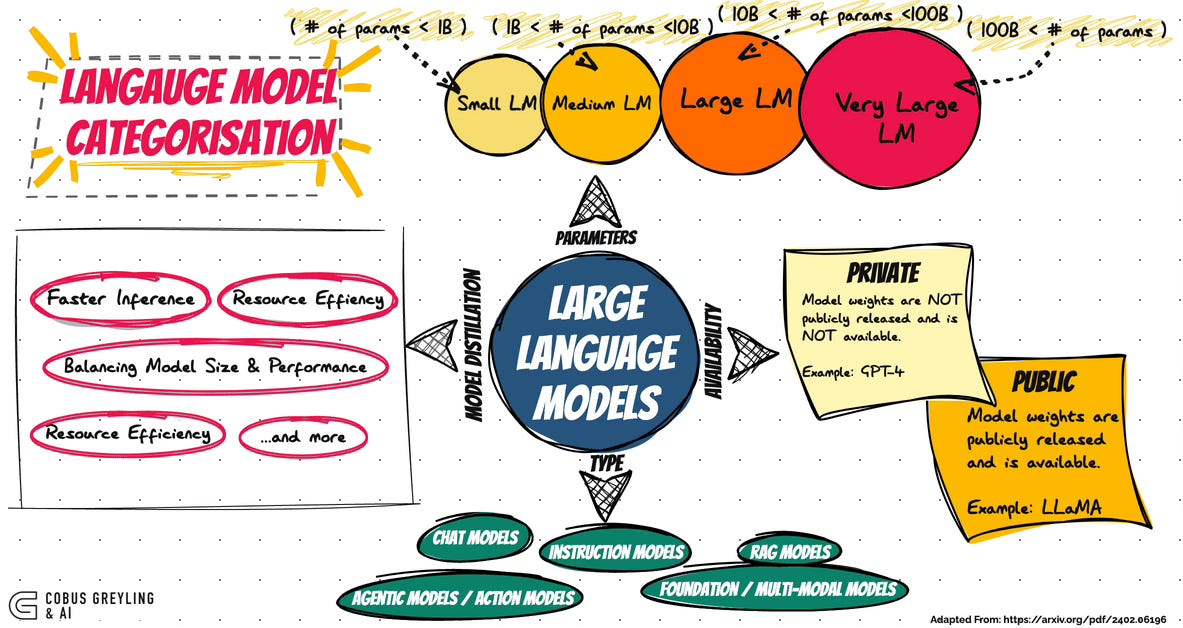

Language Model Categorisation

The landscape of artificial intelligence is evolving quickly, and the term Large Language Models (LLMs) is gradually being replaced by more nuanced terminology.

This shift is largely due to advances in fine-tuning approaches and optimisations that have led to a diverse range of model sizes and capabilities, as well as a wider range of applications that these models can address.

Developments include not only a range of model sizes, from small to extra-large, but also new types of models designed for specific functions, such as reasoning or multimodal processing.

The term Large Language Model has evolved beyond a specific technical concept to become part of the general vocabulary associated with generative AI.

It now loosely refers to any AI model focused on understanding and generating human language.

As AI capabilities have expanded, so has the term, which now encompasses a broad range of models — large, small, and even multimodal — that process language in creative and complex ways.

In essence, it has become shorthand for the wide array of language-centred AI technologies that power modern applications.

Understanding Model Size From Small to Extra-Large Models

Large Language Models

Large Language Models traditionally refer to models with vast numbers of parameters.

Parameters in this context are the components within an AI model that determine how it processes data; essentially, they’re like the knobs & levers that the model tunes to learn from and make sense of language.

For example, OpenAI’s GPT-3 has 175 billion parameters. OpenAI has not disclosed the parameter count of GPT-4, though it is widely assumed to be larger. However, advances have since produced a variety of model sizes that cater to different needs.
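To make the scale of these numbers concrete, here is a rough back-of-the-envelope sketch of how much memory is needed just to store a model's weights. The function name `weight_memory_gb`, the 7 billion parameter comparison model and the bytes-per-parameter table are illustrative assumptions, and the estimate ignores activations, caches and optimiser state.

```python
# Rough estimate of the memory needed just to store model weights.
# Assumption: storage depends only on parameter count and numeric precision;
# activations, caches and optimiser state are ignored.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = {
        "175B model": 175_000_000_000,  # parameter count quoted in the text
        "7B model": 7_000_000_000,      # hypothetical smaller model, for contrast
    }
    for name, num_params in models.items():
        for precision in BYTES_PER_PARAM:
            size = weight_memory_gb(num_params, precision)
            print(f"{name} @ {precision}: {size:.0f} GB")
```

Under these assumptions, a 175 billion parameter model at 16-bit precision needs roughly 350 GB for its weights alone, which is part of why smaller models are attractive for many deployments.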

Medium to Small Language Models

Small & Medium Models are typically more computationally efficient and suit specific tasks where large-scale computation power is not required.

In effect, the model is fitted to the use case and its implementation requirements.

Extra-large models, by contrast, continue to push the boundaries in terms of accuracy and capabilities, often requiring substantial computational resources but providing state-of-the-art performance.

This range of sizes lets organisations select models based on their unique requirements and constraints, enabling more tailored and efficient AI solutions.

Expanding Beyond Language with Multi-Modal Models

The term Language Model has also evolved as AI models increasingly incorporate multimodal capabilities, meaning they can process and generate outputs in more than one format.

Multi-modal models can handle text, image, audio, and video inputs, making them versatile in handling various data types.

Multi-modal models can perform tasks that would otherwise require several distinct systems working in tandem. For example, models can understand and generate associations between images and text, which is helpful for tasks like image captioning or content recommendation.

These capabilities highlight how language models are transforming to support increasingly complex tasks that extend far beyond language alone.

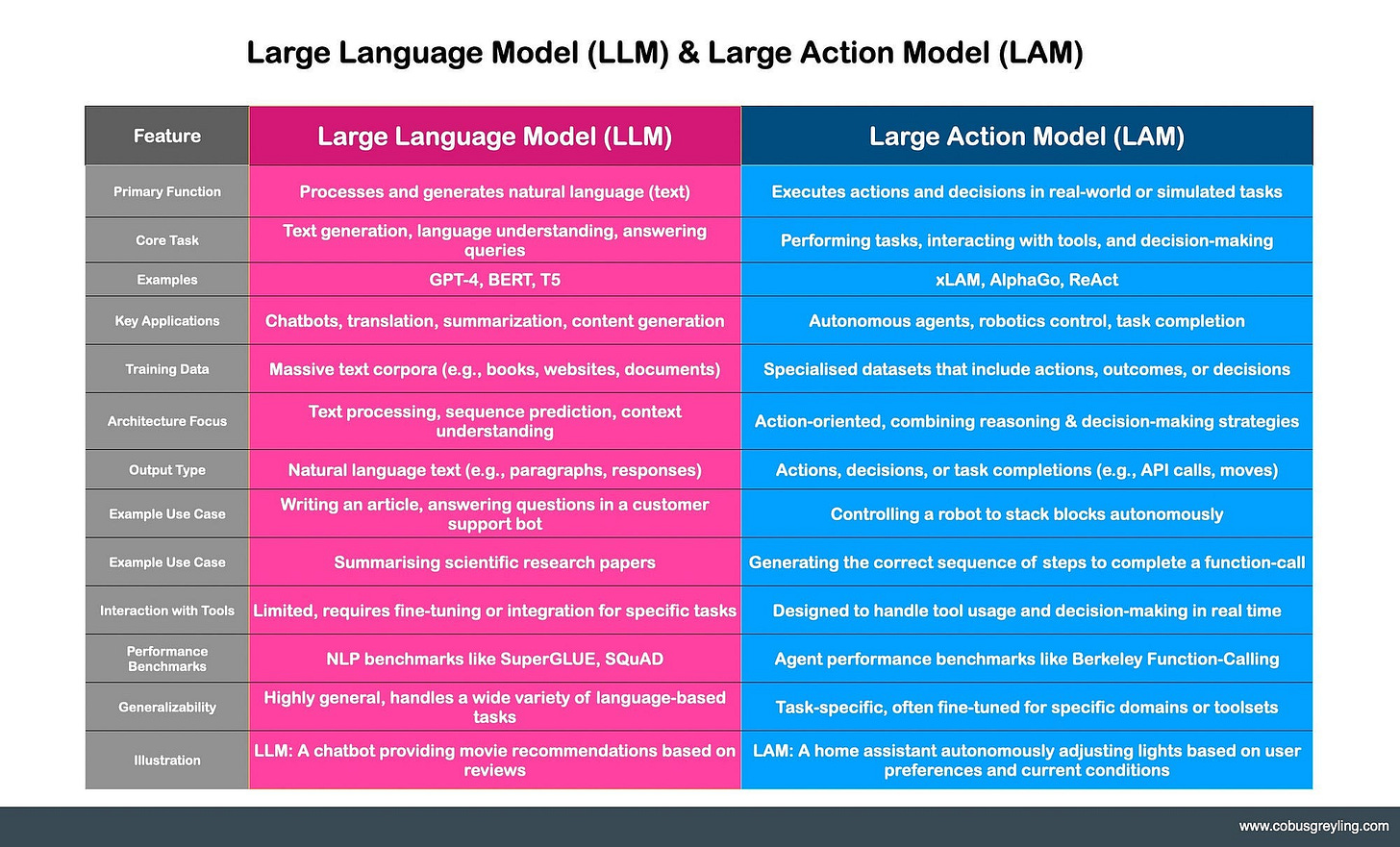

Action Models (also known as Large Action Models)

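Another notable development in the field is the introduction of action models, which are designed to carry out actions, such as calling tools or APIs, rather than only generating text. Salesforce has been among those pioneering this approach.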

Training Small Language Models (SLMs)

As the capabilities of LLMs have grown, an emerging approach is to create Small Language Models (SLMs) that build on the advances of their larger counterparts and are often trained with the help of LLMs.

This often involves techniques like partial masking, which focuses the model’s training on particular segments of data to optimise performance.
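The text does not spell out how partial masking is implemented. One common reading is loss masking, where only selected token positions contribute to the training loss. The sketch below illustrates that idea in plain Python; the function name `masked_cross_entropy`, the probabilities and the prompt/response split are all hypothetical.

```python
import math


def masked_cross_entropy(token_probs, loss_mask):
    """Average negative log-likelihood over positions where the mask is 1.

    token_probs: probability the model assigned to each correct token.
    loss_mask:   1 to train on that position, 0 to ignore it.
    """
    if len(token_probs) != len(loss_mask):
        raise ValueError("token_probs and loss_mask must have equal length")
    # Keep only the per-token losses at unmasked positions.
    selected = [-math.log(p) for p, m in zip(token_probs, loss_mask) if m]
    if not selected:
        return 0.0  # nothing to train on in this sequence
    return sum(selected) / len(selected)


# Hypothetical sequence: three prompt tokens followed by two response tokens.
probs = [0.9, 0.2, 0.5, 0.8, 0.4]
mask = [0, 0, 0, 1, 1]  # assumption: train on the response tokens only
loss = masked_cross_entropy(probs, mask)
print(f"masked loss: {loss:.4f}")
```

In practice, deep learning frameworks usually achieve the same effect by marking ignored positions in the label tensor rather than filtering a list, but the principle is the same.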

Microsoft researchers have explored this with TinyStories, a dataset of short, simple stories generated by larger models, which is used to train small models on coherent language and basic reasoning without needing to process vast amounts of data.

This training strategy allows SLMs to become exceptional at specific tasks, such as reasoning, by learning from LLMs through a distilled approach.

These smaller models are not only easier to deploy but also highly efficient, performing complex reasoning tasks with fewer computational resources.
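The article does not say which distillation method is meant by learning from LLMs through a distilled approach. As one illustration, the classic soft-label formulation trains the small model (the student) to match the temperature-softened output distribution of the large model (the teacher). The sketch below assumes that formulation; the function names, logits and temperature are hypothetical, and the usual scaling of the loss by the squared temperature is left out for brevity.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) computed on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token logits over a three-word vocabulary.
teacher = [3.0, 1.0, 0.2]
close_student = [2.8, 1.1, 0.3]
far_student = [0.2, 1.0, 3.0]

print(f"close student: {distillation_loss(teacher, close_student):.4f}")
print(f"far student:   {distillation_loss(teacher, far_student):.4f}")
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimising it pulls the smaller model towards the larger model's behaviour.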

Conclusion

The shift from the traditional concept of Large Language Models to a more diverse and purpose-specific set of models marks a significant evolution in language & AI.

With the advent of multimodal and action models, as well as smaller, efficient models trained for specialised tasks, the landscape of AI is becoming more adaptable and capable.

These developments highlight how AI continues to push the boundaries of what’s possible, moving beyond mere language processing to a comprehensive suite of tools capable of handling diverse inputs, performing symbolic reasoning and more.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.