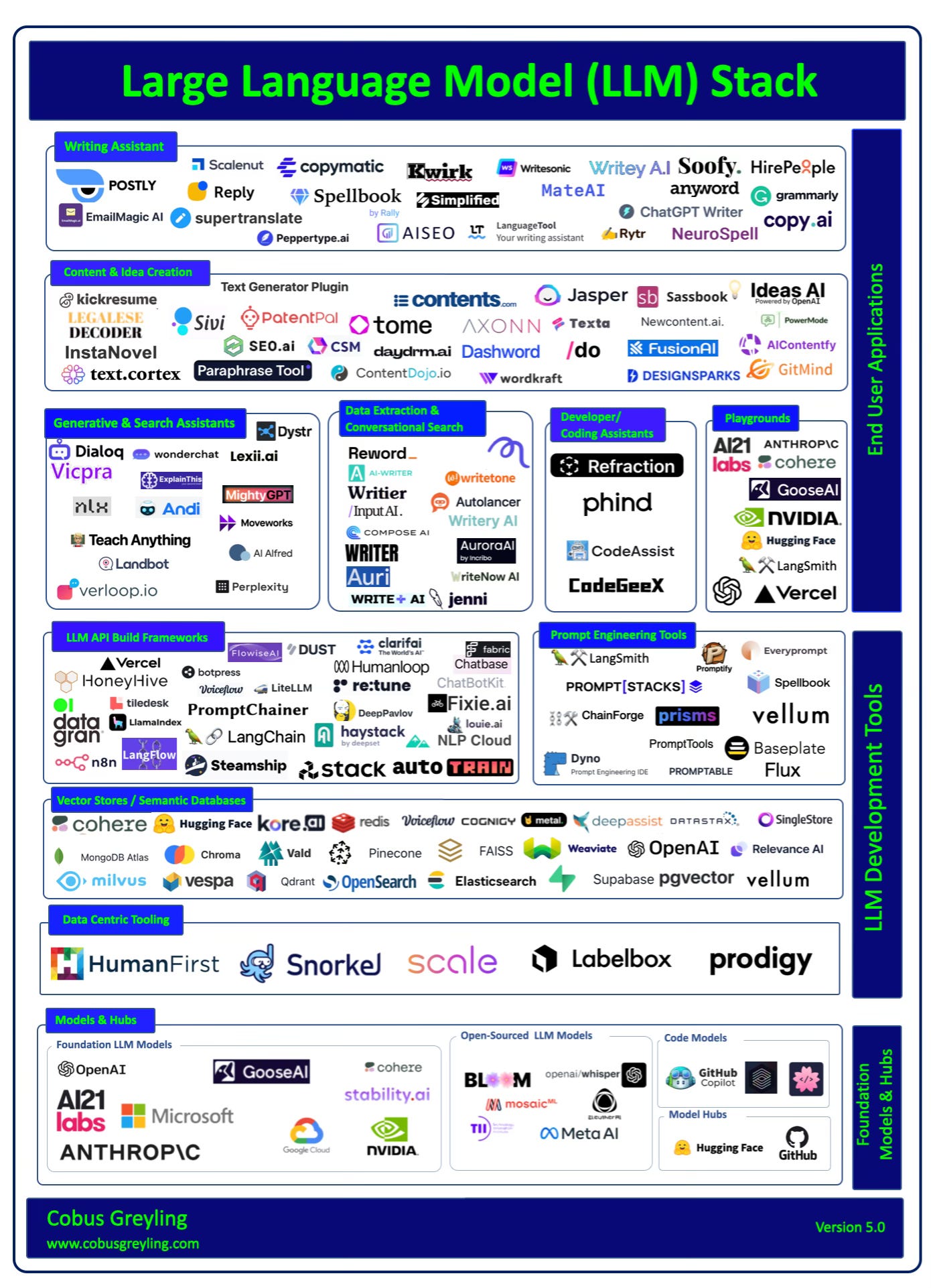

Large Language Model (LLM) Stack — Version 5

In my attempt to interpret what is taking place and where the market is moving, I created a product taxonomy defining the various LLM implementations and use cases.

There are sure to be overlaps between some products and categories listed above. I looked into the functionality of each and every product listed, hence the categories & segmentation of the landscape is a result of that research.

There are a few market shifts and trends taking place:

Diversification of LLM models are continuing more open-source options being available. I created a new category for LLM Playgrounds, and there is a significant opportunity to give makers access to models to experiment with.

Default LLM functionality is continuing to expand, with no-code fine-tuning dashboards and fine-tuning being available for more models.

Basic LLM offerings are superseding existing products. Consider here the growing LLM context windows, applications needing to reference multiple models and more.

Considering the image above, I would argue products higher up in the stack are more vulnerable.

LLMs are releasing end-user chat interfaces, like HuggingChat, ChatGPTand Coral from Cohere.

Prompt Engineering techniques are growing and the flexibility and utility of LLMs are really coming to the fore.

LLM fine-tuning is still relevant to create industry and market related models, for instance medical, legal, engineering, etc.

However, for general implementations a popular architecture is one of RAG, where accurate and contextually relevant reference data is included in the prompt to increase LLM response accuracy and negates hallucination.

⭐️ Follow me on LinkedIn for updates on Large Language Models ⭐️

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.