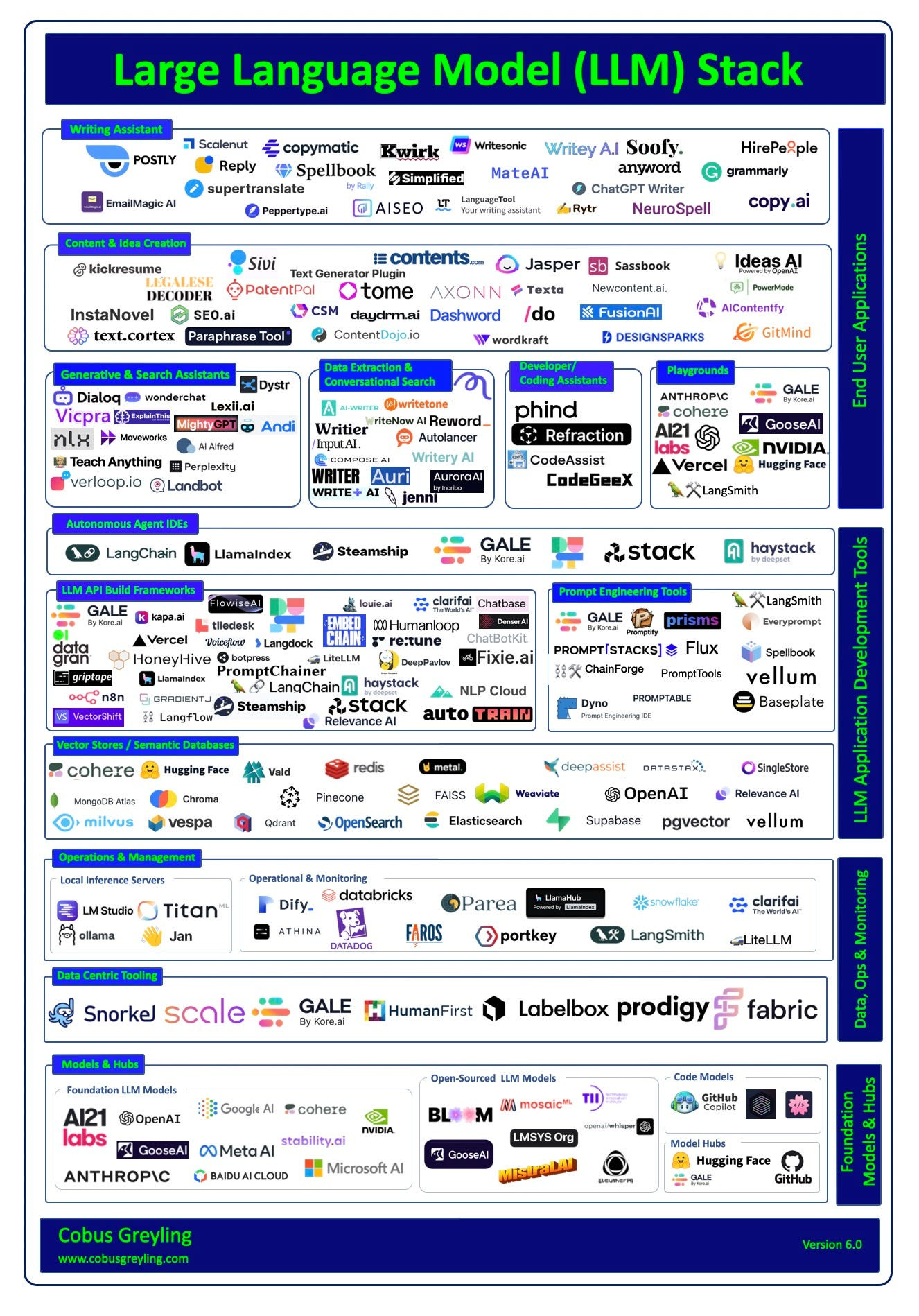

Large Language Model (LLM) Stack — Version 6

In trying to decipher current market trends and anticipate its direction, I again updated my product taxonomy outlining different LLM implementations and their respective use cases.

Some products and categories listed will for sure exhibit overlaps. I’m interested to learn what I have missed and what can be updated.

Some of the main points are…

⏺ In the market there has been immense interest in private / self hosting of models, small language models and local inference servers.

⏺ There has been an emergence of productivity hubs like GALE, LangSmith, LlamaHub and others.

⏺ RAG has continued to grow in functionality and general interest with RAG specific prompt engineering emerging.

⏺ Fine-Tuning LLMs and SLMs, not to imbue it with knowledge, but rather improve the behaviour of the model was the subject of a number of studies.

⏺ SLMs have featured of late, with models like TinyLlama, Orca-2 and Phi-3.

⏺ Considering the image, I would still argue products higher up in the stack are more vulnerable. LLM providers like OpenAI and Cohere are releasing more products and features which are baked into their offering. Like RAG-like functionality, memory, agents and more.

⏺ Default LLM functionality is continuously expanded, with no-code fine-tuning being available for more models.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.