Large Language Models & The Problem Of Abundance

Chatbot Development Frameworks have a problem of scarcity, while Large Language Models (LLMs) have the problem of abundance.

Chatbots

Chatbot development frameworks suffered from scarcity: developers had only a few basic building blocks, namely NLU (intents and entities), dialog flows and response messages.

The only component of a traditional chatbot that is based on a machine learning model is the NLU component, which has to predict intents and entities from the user input.

The dialog flow, together with the response messages, is really a state machine based on fixed, hardcoded logic. The user is navigated through the maze of the dialog flow based on their input.
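To make the state machine idea concrete, here is a minimal sketch in Python. The flow, the node names and the intent names (`check_balance`, `give_account`, `goodbye`) are all made up for illustration, and the intents are assumed to arrive already predicted by an NLU component; this is not how any particular framework implements it.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One dialog state: a fixed response plus hardcoded transitions."""
    message: str
    # Maps a predicted intent name to the name of the next node.
    transitions: dict = field(default_factory=dict)


# Hypothetical banking flow; every path is authored by hand.
FLOW = {
    "start": Node("Hi! How can I help?",
                  {"check_balance": "ask_account", "goodbye": "end"}),
    "ask_account": Node("Which account?", {"give_account": "show_balance"}),
    "show_balance": Node("Here is your balance.", {"goodbye": "end"}),
    "end": Node("Goodbye!"),
}


def step(state: str, intent: str) -> str:
    """Return the next state, staying put when the intent is not handled."""
    return FLOW[state].transitions.get(intent, state)


def run(intents: list, state: str = "start") -> list:
    """Walk the flow for a sequence of intents and collect the bot messages."""
    messages = [FLOW[state].message]
    for intent in intents:
        state = step(state, intent)
        messages.append(FLOW[state].message)
    return messages
```

Anything the flow author did not anticipate simply leaves the user in the same state, which is the rigidity described above.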

When chatbot developers start, they start with a blank canvas and need to develop the NLU, dialog flow and script (copy) from scratch. Hence the statement that chatbot development has a problem of scarcity.

There have been attempts to mitigate this scarcity and accelerate development from an existing base. One avenue was collaboration tools and rapid prototyping.

Another was vertical or industry-specific prebuilt bot frameworks for banking, IT support, HR, etc.

More astute development tools used existing customer conversations to detect intents and conversation classifications, accelerating the development of more accurate NLU.

But chatbots were still plagued by a rigid, predetermined dialog flow and a message abstraction layer of predefined response messages.

In an effort to make bot response messages more lifelike, each node had a few message variants that it cycled through. Conversation-specific information was injected into messages via placeholders.
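As a rough illustration of message variants and placeholders, the sketch below uses Python's standard `string.Template` and `itertools.cycle`. The `ResponseNode` class, the `$name` placeholder and the wording of the messages are assumptions made for this example only.

```python
from itertools import cycle
from string import Template


class ResponseNode:
    """A dialog node that rotates through a fixed set of message variants."""

    def __init__(self, variants):
        # cycle() repeats the predefined variants endlessly, in order.
        self._variants = cycle([Template(v) for v in variants])

    def render(self, **context):
        # safe_substitute leaves unknown placeholders untouched instead of failing.
        return next(self._variants).safe_substitute(**context)


greeting = ResponseNode([
    "Hello $name, how can I help?",
    "Hi $name! What can I do for you today?",
])
```

Each call to `greeting.render(name=...)` returns the next variant with the placeholder filled in. The output varies, but only within the handful of sentences someone wrote in advance.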

But chatbot developers yearned for a flexible and truly intelligent dialog management system.

There was also a dire need for flexible Natural Language Generation (NLG), where the response messages do not sit in a separate abstraction layer but are tightly coupled with the dialog, and the dialog is generated on the fly.

With chatbots, unstructured conversational input had to be structured on the way in, and structured data had to be turned back into unstructured natural language on the way out, all in a very manual fashion.

LLMs

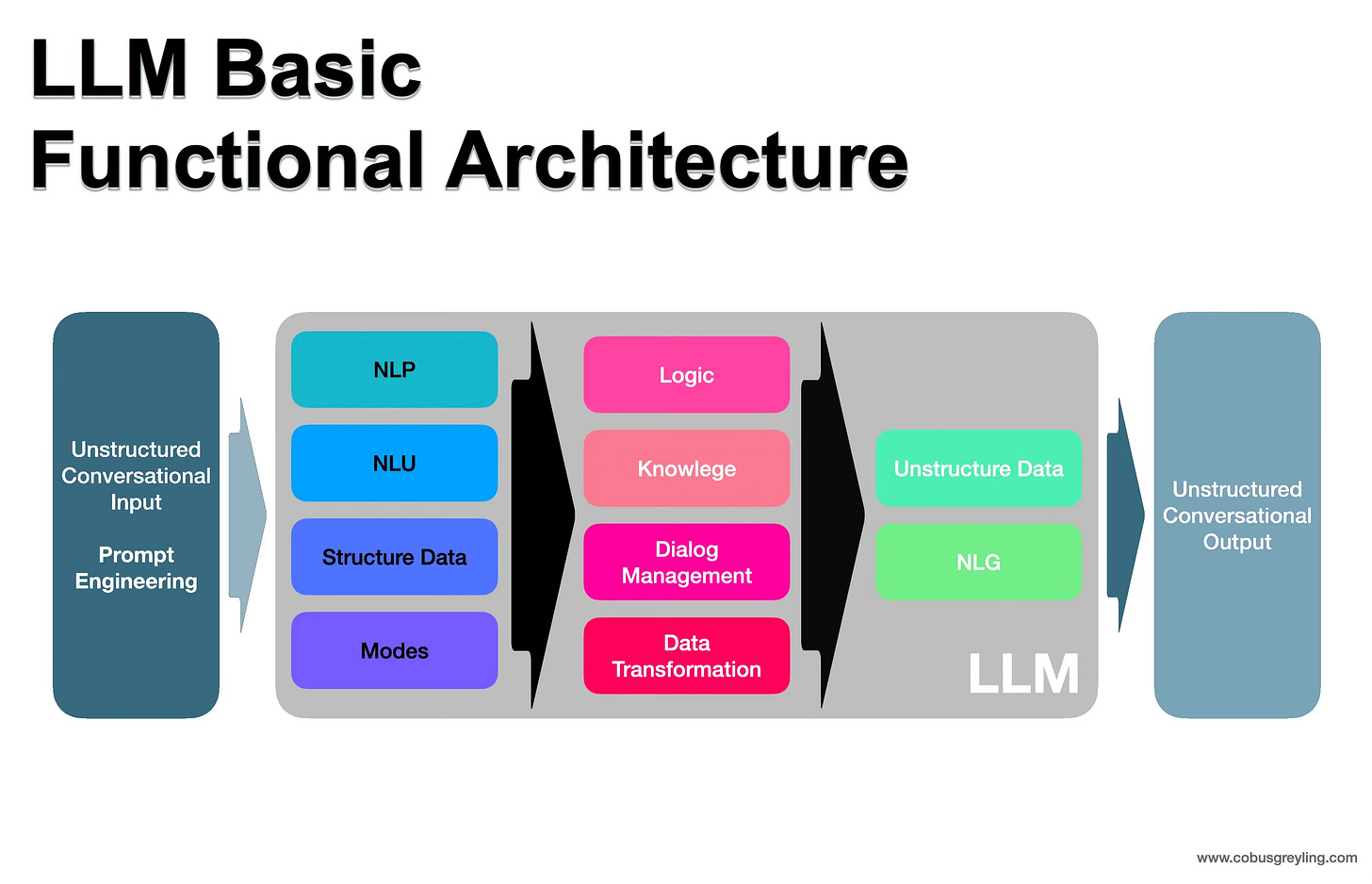

The introduction of LLMs took us to the other extreme, where elements such as:

Data Transformation

Structuring and Unstructuring Natural Language

Dialog Management

NLG

NLU

were all combined into one model. The challenge now is not to build a bot from a blank design canvas, but rather to cope with abundance. The problem is to harness and control LLMs to create reliable, repeatable and responsible conversational applications.

There are a number of interesting developments in the LLM market:

Use-case-specific LLMs for dialog, translation, code, etc. are falling away, and single models are becoming capable in virtually all of these domains.

LLM providers are making multiple models available.

LLM providers are creating two categories of use: one is personal assistants such as ChatGPT, HuggingChat, Coral and more; the other is developer use via APIs.

Huge strides are being made in developing new prompting techniques to harness LLMs and achieve more granular control over their output.

Dialog Management is performed via prompt chaining, autonomous agents, prompt pipelines, and creating LLM-enabled chatbot development frameworks where LLMs assist at development time, build time and run time.
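Of the approaches in the last point, prompt chaining is the easiest to sketch: the output of one prompt becomes the input of the next. The Python below is only an illustration under stated assumptions. `fake_llm` is a hypothetical stand-in that echoes its prompt so the example runs without any provider, and the two prompt templates are invented; in practice the `llm` argument would wrap a real model call.

```python
from typing import Callable, List

# Any function that takes a prompt and returns text can act as the model.
LLM = Callable[[str], str]


def chain(llm: LLM, templates: List[str], user_input: str) -> str:
    """Run the templates in order, feeding each output into the next prompt."""
    text = user_input
    for template in templates:
        text = llm(template.format(input=text))
    return text


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: wraps the prompt in brackets."""
    return f"[{prompt}]"


# Invented two-step chain: first structure the input, then generate a reply.
STEPS = [
    "Extract the intent from: {input}",
    "Write a reply for: {input}",
]

result = chain(fake_llm, STEPS, "hi")
```

With the echoing stub, `result` makes the nesting visible: the second prompt contains the complete output of the first. Each step can be inspected or validated on its own, which is one way to regain some of the control a fixed dialog flow used to provide.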

Credit goes to Maaike Groenewege for sparking this concept in a recent interview with her that I listened to.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.