LLM Symbolic Reasoning For Visual AI Agents

Large Language Models with visual capabilities can use symbolic reasoning by interpreting images and forming a mental map of the environment, similar to how humans process spatial information.

When viewing an image, these models identify key objects and their relationships (for example, distances, positions and sizes) & convert this information into symbolic representations, like a map made of abstract symbols.

These symbols can represent spatial realities (for example, chair next to table), allowing the model to reason logically about tasks & answer questions based on these spatial observations. This helps AI make decisions or plan actions in real-world contexts.
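To make the idea of a symbolic map concrete, here is a minimal sketch in Python. It assumes a vision model has already returned object names with 2D positions; the `DetectedObject` class, the `extract_relations` helper, the units and the `near_threshold` value are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class DetectedObject:
    """Hypothetical detection result: an object name and its centre point."""
    name: str
    x: float  # assumed to be in metres
    y: float


def extract_relations(objects, near_threshold=1.0):
    """Turn object positions into (relation, subject, object) triples."""
    facts = set()
    for a, b in permutations(objects, 2):
        distance = ((a.x - b.x) ** 2 + (a.y - b.y) ** 2) ** 0.5
        if distance <= near_threshold:
            facts.add(("next_to", a.name, b.name))
        if a.x < b.x:
            facts.add(("left_of", a.name, b.name))
    return facts


scene = [
    DetectedObject("chair", 1.0, 2.0),
    DetectedObject("table", 1.5, 2.0),
    DetectedObject("lamp", 5.0, 2.0),
]
facts = extract_relations(scene)

# A question such as "is the chair next to the table?" becomes a set lookup.
print(("next_to", "chair", "table") in facts)
```

Once the scene is a set of triples, questions about it no longer need the pixels; they can be answered by looking up or combining facts.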

Human reasoning can be understood as a cooperation between the intuitive & associative, and the deliberative & logical. ~ Source

System 1 & System 2

Considering the image below, conversational AI systems traditionally followed System 2 approaches, characterised by deliberate and logical reasoning.

These systems relied on intent detection and structured flows to determine action sequences. With the rise of Generative AI and Large Language Models (LLMs), there’s a shift toward System 1 solutions, which are more intuitive and associative.

A possible approach to activity reasoning is to build a symbolic system consisting of symbols and rules, connecting various elements to mimic human reasoning.

Previous attempts, though useful, faced challenges due to handcrafted symbols and limited rules derived from visual annotations. This limited their ability to generalise to complex activities.

To address these issues, a new symbolic system is proposed with two key properties: broad-coverage symbols and rational rules. Instead of relying on expensive manual annotations, LLMs are leveraged to approximate these properties.

Given an image, symbols are extracted from visual content, and fuzzy logic is applied to deduce activity semantics based on rules, enhancing reasoning capabilities.
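As a rough illustration of how fuzzy logic can combine symbols into an activity score, consider the sketch below. It is an assumption on my part, not the proposed system's actual formulation: it uses `min` as the fuzzy AND and `max` as the fuzzy OR, and the symbol names, confidence values and rules are invented for the example.

```python
def fuzzy_and(values):
    """Fuzzy conjunction: a rule is only as true as its weakest premise."""
    return min(values)


def fuzzy_or(values):
    """Fuzzy disjunction: an activity is as true as its best-supported rule."""
    return max(values)


def score_activities(symbol_scores, rules):
    """Score each activity from symbol confidences in the range [0, 1]."""
    results = {}
    for activity, premises in rules:
        truth = fuzzy_and([symbol_scores.get(s, 0.0) for s in premises])
        results[activity] = fuzzy_or([results.get(activity, 0.0), truth])
    return results


# Hypothetical confidences for symbols extracted from one image.
symbol_scores = {
    "person": 0.95,
    "horse": 0.9,
    "saddle": 0.7,
    "person_on_horse": 0.8,
    "bicycle": 0.05,
}

# Hypothetical rules: (activity, symbols that must hold).
rules = [
    ("ride_horse", ["person", "horse", "person_on_horse"]),
    ("ride_horse", ["person", "saddle", "person_on_horse"]),
    ("ride_bicycle", ["person", "bicycle"]),
]

activities = score_activities(symbol_scores, rules)
print(activities)
```

Unlike strict boolean rules, the output is a degree of support per activity, so an uncertain symbol lowers the score instead of switching the rule off entirely.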

This shift exemplifies how intuitive, associative reasoning enabled by LLMs is pushing the boundaries of AI agent systems in tasks like activity recognition.

Humanlike

With just a quick glance at an image, we as humans can naturally translate visual inputs into symbols or concepts.

This allows us to use common-sense reasoning to understand and imagine the broader context beyond the visible scene — similar to how we infer the existence of gravity without directly seeing it.

Considering the image below:

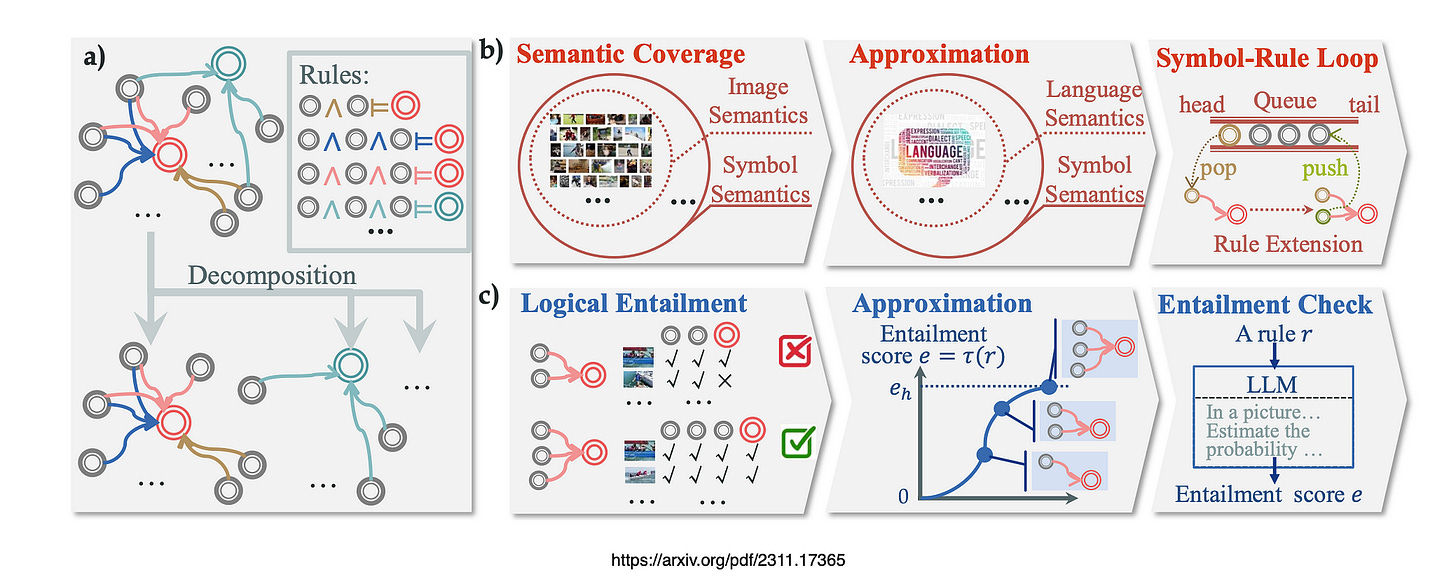

a) Structure and decomposition of the symbolic system

b) Semantic coverage. It can be approximated based on LLMs’ knowledge and achieved via the symbol-rule loop.

c) Logical entailment. It can be approximated based on an entailment scoring function and achieved by an entailment check.
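The entailment check in (c) can be pictured as a filter over candidate rules. The sketch below is only a guess at the general shape: the scoring function is not specified here, so `toy_score` is a lookup table standing in for whatever LLM-backed scorer is used, and the `threshold` of 0.5 is an arbitrary, hypothetical choice.

```python
def entailment_check(candidate_rules, score_fn, threshold=0.5):
    """Keep only rules whose premises plausibly entail their conclusion."""
    return [
        (premises, conclusion)
        for premises, conclusion in candidate_rules
        if score_fn(premises, conclusion) >= threshold
    ]


# Hypothetical scores standing in for an LLM-backed entailment scorer.
TOY_SCORES = {
    (frozenset({"person", "horse", "person_on_horse"}), "ride_horse"): 0.9,
    (frozenset({"person", "horse"}), "ride_horse"): 0.4,
    (frozenset({"person", "grass"}), "ride_horse"): 0.1,
}


def toy_score(premises, conclusion):
    """Return the stored score for a rule, or 0.0 if the rule is unknown."""
    return TOY_SCORES.get((premises, conclusion), 0.0)


candidate_rules = list(TOY_SCORES.keys())
kept_rules = entailment_check(candidate_rules, toy_score)

for premises, conclusion in kept_rules:
    print(sorted(premises), "->", conclusion)
```

In this toy run only the rule that includes `person_on_horse` survives, since a person and a horse appearing in the same image is not enough to conclude that someone is riding.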

The Link

Symbolic reasoning acts as a crucial bridge between applications requiring reasoning and language models with vision capabilities.

It enables models to move beyond mere perception of visual input and engage in complex cognitive tasks, such as understanding spatial relationships, object properties, or planning sequences.

By integrating symbolic reasoning, models can convert images into abstract symbols or concepts, allowing them to reason about the environment in a structured way.

This approach enhances both decision-making and problem-solving, linking visual understanding with more deliberate, logic-based reasoning typical in AI-driven applications.

Approaches I Could Find

There are a few basic approaches to symbolic reasoning with LLMs:

Rule-Based Systems

LLMs can integrate symbolic rules where predefined logical structures help them reason about tasks, like manipulating objects or planning.

Hybrid Models

Combining LLMs with symbolic reasoning frameworks helps the model perform logical inference while retaining the flexibility of language-based understanding.

Symbol Extraction

LLMs translate natural language descriptions into symbols or representations, which then allow for structured reasoning, similar to how humans create mental models from complex information.

Fuzzy Logic

LLMs may use approximations of logical structures to handle ambiguity in symbolic reasoning, merging soft reasoning with strict symbolic rules.
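To show how the rule-based and hybrid ideas above might fit together, here is a small forward-chaining sketch. It assumes the facts have already been extracted (in a hybrid setup an LLM would supply them; here they are hard-coded), and the fact names and rules are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


# Facts an LLM might extract from an image or description (hard-coded here).
facts = {"cup_on_table", "gripper_empty"}

# Hypothetical planning rules: (required facts, fact that follows).
rules = [
    (frozenset({"cup_on_table", "gripper_empty"}), "can_pick_cup"),
    (frozenset({"can_pick_cup"}), "can_move_cup"),
    (frozenset({"cup_in_sink"}), "can_wash_cup"),
]

result = forward_chain(facts, rules)
print(sorted(result))
```

The split of labour is the point: the language model handles the flexible part (turning messy input into symbols), while a small deterministic engine handles the inference, which keeps each derived conclusion traceable to the rules that produced it.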

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.