LLMs Excel At In-Context Learning (ICL), But What About Long In-context Learning?

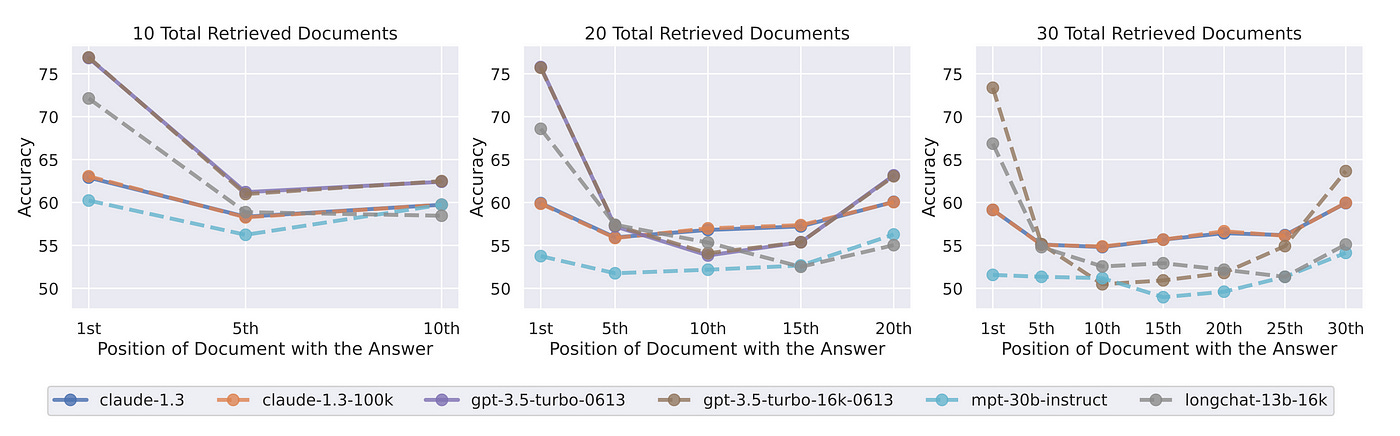

This study was inspired by the Lost in the Middle phenomenon which surfaced in 2023.

Context at inference remains of utmost importance and context matters for factually accurate generated responses. The two studies I reference in this article again illustrates that LLMs are not ready for context management to be offloaded to LLMs with large context windows.

Added to this, considering enterprise implementations, multiple users and a myriad of other considerations; RAG is still the most scaleable, manageable, observable and inspectable architecture. The allure of offloading functionality to LLMs, apart from scaleability and general architectural considerations, leads to an opaque black-box approach.

Introduction

The 2023 study found that LLMs perform better when the relevant information is located at the beginning or end of the input context.

However, when relevant context is in the middle of longer contexts, the retrieval performance is degraded considerably. This is also the case for models specifically designed for long contexts.

Back To The Recent Study

This recent investigation delves into whether the distribution of positions in instances impacts the performance of long in-context learning in tasks involving extreme-label classification.

Some Background

Large Language Models (LLMs) are really good at in-context learning (ICL). It has been proven that when LLMs are presented with a contextual reference at inference, LLMs lean more on the contextual reference than the knowledge imbedded in the base model.

Hence the popularity of RAG.

Because the primary focus of RAG is to inject a prompt with highly contextual information at inference.

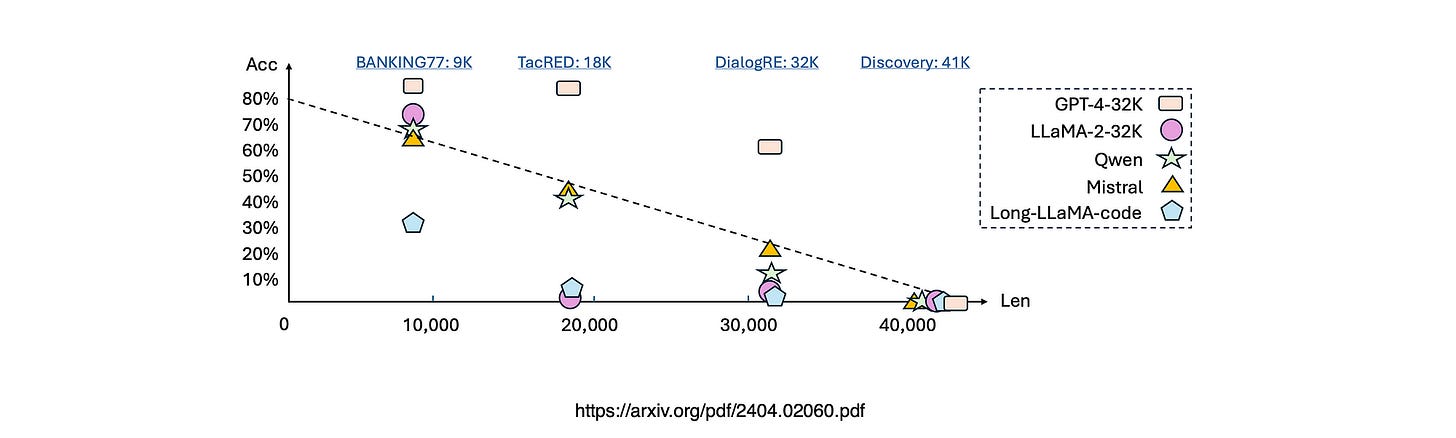

In this study, LLM performance was assessed across various lengths during along in-context benchmark process. Datasets were assembled with varying difficulty levels.

As the dataset difficulty escalates, LLMs encountered challenges in graspingthe task definition, resulting in notable performance degradation.

Most notably, on the most challenging Discovery dataset, none of the LLMs successfully comprehend the extensive demonstration, resulting in zero accuracy.

With extreme-label ICL, the model needs to scan through the entire demonstration to understand the whole label space to make the correct prediction.

Key Findings

Long-context LLMs perform relatively well on less challenging tasks with shorter demonstration lengths by effectively utilising the long context window.

With the most challenging tasks, all the LLMs struggle to understand the task definition, thus reaching a performance close to zero.

This suggests a notable gap in current LLM capabilities for processing and understanding long, context-rich sequences.

Further analysis revealed a tendency among models to favour predictions for labels presented toward the end of the sequence.

The study reveals that long context understanding and reasoning is still a challenging task for the existing LLMs.

LLM Long Context In General

A myriad of LLMs have been released to support long context windows from 32K to 2M tokens.

Considering the image below, the accuracy of long context comprehension and performance are very much dependant on the complexity of the task.

The study states that in-context learning requires LLMs to recognise the taskby scanning over the entire input to understand the label space. This task necessitates LLMs’ ability to comprehend the entire input to make predictions.

The study evaluates the performance of 13 long-context LLMs and find that the performance of the models uniformly dips as the task becomes more complex.

Some models like Qwen and Mistral even degrade linearly with regard to the input length.

As the input grows longer, it either hurts or makes the performance to fluctuate.

The study also developed LongICLBench, dedicated to assessing long in-context learning tasks for large language models.

The evaluation covers a line of recent long-context LLMs on LongICLBench and reveal their performances with gradually changed difficulty levels.

A body of research has established that increasing the number of example demonstrations can enhance ICL performance. Nonetheless, there are studies indicating that longer input prompts can actually diminish performance , with the effectiveness of prior large language models (LLMs) being constrained by the maximum sequence length encountered during their training.

It is also claimed in previous works that LLM+ICL falls short on specification-heavy tasks due to inadequate long-text understanding ability. To counter this issue, various works have introduced memory augmentation and extrapolation techniques to support ICL with an extensive set of demonstration.

The effectiveness of Transformer-based models is hindered by the quadratic increase in computational cost relative to sequence length, particularly in handling long context inputs.

In Closing

It’s clear that certain models, such as InternLM2–7B-base, are very sensitive to where the labels are placed in the prompt. They only work well when labels are at the end.

On the other hand, models like ChatGLM3–6B-32K handle changes in label positioning better, with only a small 3.3% drop in accuracy.

Even the powerful GPT4-turbo struggles with grouped distributions, experiencing a significant 20.3% decline in performance.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.