Matching Retrieved Context With Question Context Using LogProbs With OpenAI for RAG

To minimise hallucinations and improve the efficiency of RAG-powered question answering systems, OpenAI log probabilities can be used to assess the model’s confidence in its generated content, based on the contextual reference and the question.

Introduction

The term Logprobs is short for logarithm of probabilities.

Probability

Probability is a measure of how likely something is to happen. For example, if you flip a normal coin, the probability of getting heads is 0.5 because there are two possible outcomes (heads or tails), and they are equally likely to happen.

Logarithm

A logarithm is a mathematical function that makes very small and very large numbers easier to work with. Taking the logarithm of a number tells you what power a chosen base must be raised to in order to get that number. In effect, it compresses a wide range of values into a smaller, more manageable one.

For instance, take the number 100 and a base of 10. Taking the logarithm of 100 answers the question: to what power do I have to raise 10 to get 100? The answer is 2, because 10 raised to the power of 2 equals 100. So, the logarithm of 100 (with a base of 10) is 2.
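The worked example above can be checked in a few lines of Python. This is only an illustrative sketch using the standard `math` module; the variable name `power` is made up for the example. The last line uses the natural logarithm (base e), which is assumed here to be the base behind logprobs because the code later in this article converts them back with `np.exp`.

```python
import math

# log10 answers: "to what power must 10 be raised to get this number?"
power = math.log10(100)
print(power)  # 2.0

# Raising the base to that power recovers the original number.
print(10 ** power)  # 100.0

# The natural logarithm (base e) is undone by math.exp.
print(math.isclose(math.exp(math.log(100)), 100))  # True
```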

LogProbs

Logprobs are the logarithms of probabilities. Probabilities can be really tiny numbers, especially when many of them are multiplied together, as happens in mathematics and computer science.

Taking the logarithm makes these numbers easier to work with because it stretches out the scale, making small probabilities more distinguishable and easier to compare.

Logprobs help to handle probabilities in a more efficient way, especially when dealing with complex calculations or very small probabilities.
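A small sketch of why this matters in practice, assuming a made-up sequence of 400 tokens that each have a probability of 0.1 (the numbers are hypothetical and chosen only to force the problem). Multiplying the raw probabilities falls below what a 64-bit float can represent, while adding the log probabilities gives a perfectly ordinary number.

```python
import math

# Hypothetical sequence: 400 tokens, each generated with probability 0.1
token_probs = [0.1] * 400

# Multiplying raw probabilities underflows to 0.0 in 64-bit floating point
product = math.prod(token_probs)

# Summing the log probabilities stays comfortably within range
total_logprob = sum(math.log(p) for p in token_probs)

print(product)        # 0.0
print(total_logprob)  # roughly -921.03
```

Working in log space turns a long product into a sum, which is why sequence-level scores are usually reported as logprobs.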

Some Examples For RAG

The working code below is a RAG example in which the LLM reports how confident it is that the retrieved context is sufficient to answer the question. The API calls use the following parameters:

model="gpt-4", logprobs=True, top_logprobs=3

Log probabilities are used here to assess the model’s confidence that the retrieved context, together with the question, supports its answer, and to gauge how well the context covers the extent of the question.

pip install openai

#####################################################

from openai import OpenAI
from math import exp
import numpy as np
from IPython.display import display, HTML
import os

# Reads the key from the OPENAI_API_KEY environment variable, falling back to a placeholder
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY", "<Your OpenAI API Key Goes Here>"))

#####################################################

def get_completion(
    messages: list[dict[str, str]],
    model: str = "gpt-4",
    max_tokens=500,
    temperature=0,
    stop=None,
    seed=123,
    tools=None,
    logprobs=None,  # if True, return the log probability of each output token in the message content
    top_logprobs=None,  # number of most likely tokens to return at each position; requires logprobs=True
):
    """Call the Chat Completions API and return the full completion object (not just the text)."""
    params = {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "stop": stop,
        "seed": seed,
        "logprobs": logprobs,
        "top_logprobs": top_logprobs,
    }
    # Only send the tools key when tools are actually provided
    if tools:
        params["tools"] = tools

    completion = client.chat.completions.create(**params)
    return completion

#####################################################

# Article retrieved

ada_lovelace_article = """Augusta Ada King, Countess of Lovelace (née Byron; 10 December 1815 – 27 November 1852) was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognise that the machine had applications beyond pure calculation.

Ada Byron was the only legitimate child of poet Lord Byron and reformer Lady Byron. All Lovelace's half-siblings, Lord Byron's other children, were born out of wedlock to other women. Byron separated from his wife a month after Ada was born and left England forever. He died in Greece when Ada was eight. Her mother was anxious about her upbringing and promoted Ada's interest in mathematics and logic in an effort to prevent her from developing her father's perceived insanity. Despite this, Ada remained interested in him, naming her two sons Byron and Gordon. Upon her death, she was buried next to him at her request. Although often ill in her childhood, Ada pursued her studies assiduously. She married William King in 1835. King was made Earl of Lovelace in 1838, Ada thereby becoming Countess of Lovelace.

Her educational and social exploits brought her into contact with scientists such as Andrew Crosse, Charles Babbage, Sir David Brewster, Charles Wheatstone, Michael Faraday, and the author Charles Dickens, contacts which she used to further her education. Ada described her approach as "poetical science" and herself as an "Analyst (& Metaphysician)".

When she was eighteen, her mathematical talents led her to a long working relationship and friendship with fellow British mathematician Charles Babbage, who is known as "the father of computers". She was in particular interested in Babbage's work on the Analytical Engine. Lovelace first met him in June 1833, through their mutual friend, and her private tutor, Mary Somerville.

Between 1842 and 1843, Ada translated an article by the military engineer Luigi Menabrea (later Prime Minister of Italy) about the Analytical Engine, supplementing it with an elaborate set of seven notes, simply called "Notes".

Lovelace's notes are important in the early history of computers, especially since the seventh one contained what many consider to be the first computer program—that is, an algorithm designed to be carried out by a machine. Other historians reject this perspective and point out that Babbage's personal notes from the years 1836/1837 contain the first programs for the engine. She also developed a vision of the capability of computers to go beyond mere calculating or number-crunching, while many others, including Babbage himself, focused only on those capabilities. Her mindset of "poetical science" led her to ask questions about the Analytical Engine (as shown in her notes) examining how individuals and society relate to technology as a collaborative tool.

"""

# Questions that can be easily answered given the article
easy_questions = [
    "What nationality was Ada Lovelace?",
    "What was an important finding from Lovelace's seventh note?",
]

# Questions that are not fully covered in the article
medium_questions = [
    "Did Lovelace collaborate with Charles Dickens",
    "What concepts did Lovelace build with Charles Babbage",
]

#####################################################

PROMPT = """You retrieved this article: {article}. The question is: {question}.

Before even answering the question, consider whether you have sufficient information in the article to answer the question fully.

Your output should JUST be the boolean true or false, of if you have sufficient information in the article to answer the question.

Respond with just one word, the boolean true or false. You must output the word 'True', or the word 'False', nothing else.

"""

#####################################################

html_output = ""
html_output += "Questions clearly answered in article"

for question in easy_questions:
    API_RESPONSE = get_completion(
        [
            {
                "role": "user",
                "content": PROMPT.format(
                    article=ada_lovelace_article, question=question
                ),
            }
        ],
        model="gpt-4",
        logprobs=True,
    )
    html_output += f'<p style="color:green">Question: {question}</p>'
    # One line per output token: the token, its logprob, and the logprob converted to a percentage
    for logprob in API_RESPONSE.choices[0].logprobs.content:
        html_output += f'<p style="color:cyan">has_sufficient_context_for_answer: {logprob.token}, <span style="color:darkorange">logprobs: {logprob.logprob}</span>, <span style="color:magenta">linear probability: {np.round(np.exp(logprob.logprob)*100,2)}%</span></p>'

html_output += "Questions only partially covered in the article"

for question in medium_questions:
    API_RESPONSE = get_completion(
        [
            {
                "role": "user",
                "content": PROMPT.format(
                    article=ada_lovelace_article, question=question
                ),
            }
        ],
        model="gpt-4",
        logprobs=True,
        top_logprobs=3,
    )
    html_output += f'<p style="color:green">Question: {question}</p>'
    for logprob in API_RESPONSE.choices[0].logprobs.content:
        html_output += f'<p style="color:cyan">has_sufficient_context_for_answer: {logprob.token}, <span style="color:darkorange">logprobs: {logprob.logprob}</span>, <span style="color:magenta">linear probability: {np.round(np.exp(logprob.logprob)*100,2)}%</span></p>'

display(HTML(html_output))

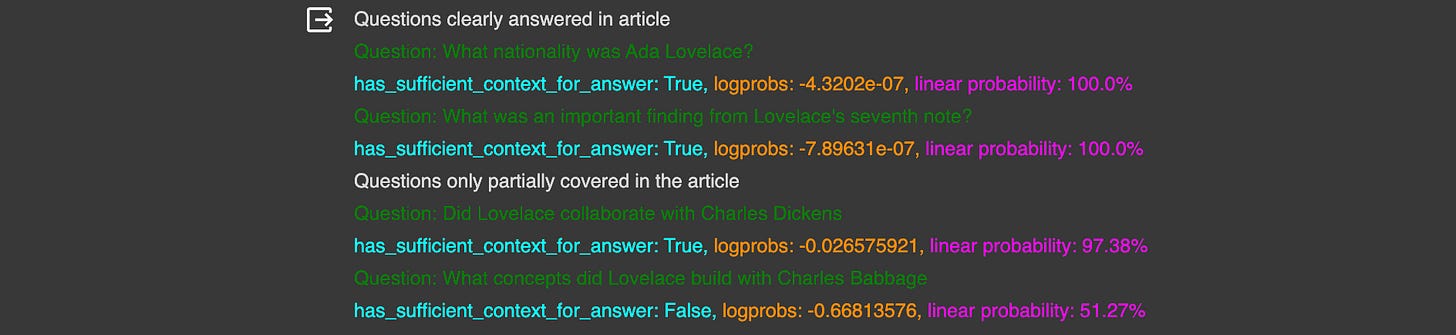

#####################################################

In the resulting output, a true or false value indicates whether sufficient context was available to answer the question.

For the initial two questions, the model confidently states, with almost 100% certainty, that the article provides enough context to answer them.

However, for the trickier questions that aren’t as straightforward in the article, the model is less certain about having enough context. This acts as a valuable guide to ensure that our retrieved content meets the mark.

This self-assessment can decrease hallucinations by either limiting answers or asking the user again when the certainty of having enough context falls below a certain level.
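One way to turn this self-assessment into a gate is sketched below. It is not taken from the article's code: the function names `probability_sufficient` and `should_answer` and the 0.9 threshold are hypothetical, and the sketch assumes the model replied with exactly `True` or `False`, as the prompt demands. Note that a confident `False` means low confidence in the context, so the token itself has to be checked and not only its logprob.

```python
import math


def probability_sufficient(token: str, logprob: float) -> float:
    """Estimate P(context is sufficient) from a single True/False output token."""
    p_token = math.exp(logprob)  # convert the logprob to a linear probability
    answer = token.strip().lower()
    if answer == "true":
        return p_token
    if answer == "false":
        # Simplifying assumption: all remaining probability mass belongs to 'True'
        return 1.0 - p_token
    raise ValueError(f"unexpected token: {token!r}")


def should_answer(token: str, logprob: float, threshold: float = 0.9) -> bool:
    """Answer only when the estimated probability reaches the (hypothetical) threshold."""
    return probability_sufficient(token, logprob) >= threshold


print(should_answer("True", -0.01))   # True  (about 99% confident)
print(should_answer("True", -0.5))    # False (about 61% confident)
print(should_answer("False", -0.05))  # False (model is fairly sure context is missing)
```

In the article's loop, `token` and `logprob` would come from `logprob.token` and `logprob.logprob` of the first entry in `API_RESPONSE.choices[0].logprobs.content`. A suitable threshold would need to be tuned on real questions.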

Practical Use-Case

Apart from self-assessment, the other valuable contribution of this feature is in-conversation disambiguation: the system can ask the user for clarity, requesting more context related to their input.

In addition, out-of-domain questions can be addressed. A user can be informed that the query is out of domain and then given a general answer based on a web search or the LLM’s base knowledge.
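The two behaviours described above could be combined into a simple router, sketched here under loudly stated assumptions. The `Action` enum, the `route` function and both thresholds (0.9 and 0.4) are invented for illustration, and treating a very low score as "out of domain" is an assumption of this sketch, not something the logprobs themselves establish. The input `p_sufficient` stands for the linear probability that the retrieved context is sufficient.

```python
from enum import Enum


class Action(Enum):
    ANSWER_FROM_CONTEXT = "answer_from_context"
    ASK_FOR_CLARIFICATION = "ask_for_clarification"
    OUT_OF_DOMAIN_FALLBACK = "out_of_domain_fallback"


def route(p_sufficient: float, answer_at: float = 0.9, clarify_at: float = 0.4) -> Action:
    """Pick a next step from the probability that the context is sufficient.

    Both thresholds are hypothetical and would need tuning on real traffic.
    """
    if not 0.0 <= p_sufficient <= 1.0:
        raise ValueError("p_sufficient must be a probability between 0 and 1")
    if p_sufficient >= answer_at:
        return Action.ANSWER_FROM_CONTEXT
    if p_sufficient >= clarify_at:
        # Partially covered: ask the user for more context before answering
        return Action.ASK_FOR_CLARIFICATION
    # Assumed out of domain: tell the user, then fall back to a general answer
    return Action.OUT_OF_DOMAIN_FALLBACK


print(route(0.97))  # Action.ANSWER_FROM_CONTEXT
print(route(0.60))  # Action.ASK_FOR_CLARIFICATION
print(route(0.05))  # Action.OUT_OF_DOMAIN_FALLBACK
```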

Additionally we can also output the linear probability of each output token, in order to convert the log probability to the more easily interpretable scale of 0–100%. ~ OpenAI

Finally, it’s worth noting that the linear probability is particularly straightforward to interpret. Its simplicity lends itself well to post-call analysis, where it can be instrumental in performing user intent detection.

By analysing conversations, we can gain valuable insights into the specific discussions users aim to engage in. This understanding not only enhances our ability to tailor responses effectively but also enables makers to refine and optimise conversational systems to better serve user needs and preferences.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.