Moving From Natural Language Understanding To Mobile UI Understanding

This study from Apple signals a paradigm shift from Natural Language Understanding to Mobile UI Understanding.

Introduction

The premise of natural language understanding (NLU) is for a system or machine to understand natural language input from a human in order to respond to it.

Hence the focus is on structuring conversational input, which is inherently unstructured, and extracting intents (verbs) and entities (nouns) from it to make sense of what the user means and wants.
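As a minimal illustration of what structuring conversational input means, the sketch below maps an utterance to one intent and a dictionary of entities. It is a toy that assumes hypothetical intents (`book_flight`, `check_weather`) and hand-written keyword and regex rules; real NLU engines use trained intent classifiers and entity extractors instead.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intents, each triggered by verb-like keyword cues.
INTENT_KEYWORDS = {
    "book_flight": ("book", "fly", "reserve"),
    "check_weather": ("weather", "forecast"),
}

# Hypothetical entity patterns: noun-like slots pulled out of the utterance.
ENTITY_PATTERNS = {
    "city": re.compile(r"\bto ([A-Z][a-z]+)"),
    "date": re.compile(r"\b(today|tomorrow|monday|friday)\b", re.IGNORECASE),
}

@dataclass
class ParsedUtterance:
    text: str
    intent: str
    entities: dict = field(default_factory=dict)

def parse(text: str) -> ParsedUtterance:
    """Turn free-form text into a structured (intent, entities) record."""
    lowered = text.lower()
    # Pick the first intent whose keyword appears; otherwise fall back.
    intent = next(
        (name for name, cues in INTENT_KEYWORDS.items()
         if any(cue in lowered for cue in cues)),
        "fallback",
    )
    # Collect every entity whose pattern matches the original text.
    entities = {}
    for name, pattern in ENTITY_PATTERNS.items():
        match = pattern.search(text)
        if match:
            entities[name] = match.group(1)
    return ParsedUtterance(text, intent, entities)
```

For example, `parse("I want to book a flight to Paris tomorrow")` yields the intent `book_flight` with the entities `city="Paris"` and `date="tomorrow"`.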

These conversational inputs can arrive via a whole host of mediums, and in each case the system has to make sense of the unstructured nature of human conversation.

Keep in mind that a Conversational UI needs to structure the unstructured conversational data.

Ferret-UI is a model designed to understand user interactions with a mobile screen.

Mobile UI Understanding

Hence the paradigm shift is from natural language understanding to mobile UI understanding.

In other words, the focus moves from understanding conversational context to understanding the current context on a mobile screen.

As with conversations, context is of paramount importance: it is very hard to derive meaning from any conversation without sufficient context. That is also the underlying principle of RAG (Retrieval-Augmented Generation): supplying the LLM with context at inference time.

Visual Understanding

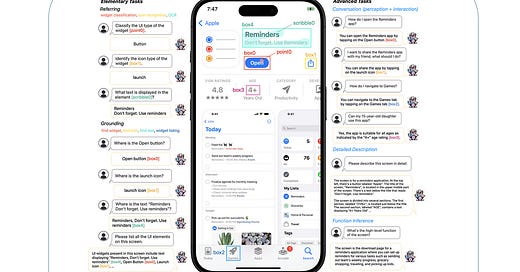

The image above is largely self-explanatory: it shows how a mobile screen can be interrogated in natural language. Numerous use-cases come to mind.

This solution can be seen as a conversational enablement of a mobile operating system. Alternatively, the information can be used to learn from user behaviour and offer users a customised experience.

This is sometimes referred to as ambient orchestration: the mobile OS learns from user behaviour and makes suggestions, so that the automation of user routines becomes intelligent and truly orchestrated.

Ferret-UI can perform referring tasks such as widget classification and icon recognition, and it accepts flexible input formats such as points, boxes, and scribbles.
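One way to picture the three referring input formats is as different ways of pointing at a region of the screenshot, each of which must end up as coordinates the model can read. The sketch below is hypothetical and does not reproduce Ferret-UI's actual encoding: it assumes coordinates are quantised to a 0 to 999 grid and written into the prompt as text, and it simplifies a scribble to the bounding box of its stroke.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Point = Tuple[float, float]  # (x, y) in screen pixels

@dataclass
class PointRef:
    point: Point

@dataclass
class BoxRef:
    top_left: Point
    bottom_right: Point

@dataclass
class ScribbleRef:
    stroke: List[Point]  # free-hand path drawn by the user

Reference = Union[PointRef, BoxRef, ScribbleRef]

GRID = 1000  # assumption: coordinates quantised to 0..999

def _quantise(value: float, extent: int) -> int:
    # Map a pixel coordinate onto the hypothetical 0..GRID-1 grid, clamped.
    q = int(value * GRID / extent)
    return max(0, min(GRID - 1, q))

def to_region_text(ref: Reference, width: int, height: int) -> str:
    """Render any referring input as a textual region description."""
    if isinstance(ref, PointRef):
        x, y = ref.point
        return f"point [{_quantise(x, width)}, {_quantise(y, height)}]"
    if isinstance(ref, BoxRef):
        (x1, y1), (x2, y2) = ref.top_left, ref.bottom_right
    else:
        # Simplification: a scribble is reduced to its bounding box.
        xs = [p[0] for p in ref.stroke]
        ys = [p[1] for p in ref.stroke]
        x1, y1, x2, y2 = min(xs), min(ys), max(xs), max(ys)
    return (f"box [{_quantise(x1, width)}, {_quantise(y1, height)}, "
            f"{_quantise(x2, width)}, {_quantise(y2, height)}]")
```

On a 500 × 1000 pixel screen, a scribble through (100, 200), (50, 400) and (150, 300) becomes `box [100, 200, 300, 400]`.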

These tasks equip the model with rich visual and spatial knowledge, enabling it to distinguish UI types at both coarse and fine levels, such as between various icons or text elements.

This foundational and contextual knowledge is crucial for performing more advanced tasks. Specifically, Ferret-UI can not only discuss visual elements through detailed description and perception conversation, as seen in the image above, but also propose goal-oriented actions in interaction conversation and deduce the overall function of the screen via function inference.

The image below is an overview of elementary task data generation. A UI detector identifies all elements, noting their type, text, and bounding boxes.

These detections form training samples for elementary tasks. For grounding tasks, all elements are used to create a widget listing sample, while other tasks focus on individual elements.

Elements are categorised into icons, text, and non-icon/text widgets, etc. For each type, there is one referring sample and one grounding sample.
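The generation step described above can be sketched as follows. The `Detection` schema, the three-way grouping and the task names (`icon_recognition`, `find_icon` and so on) are illustrative assumptions rather than the study's code; the sketch only shows the shape of the process: one widget-listing sample built from all detections, then one referring and one grounding sample per element category. It simply takes the first element of each category, where a real pipeline might sample instead.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels

@dataclass
class Detection:
    """One element reported by the UI detector (hypothetical schema)."""
    kind: str  # element type, e.g. "icon", "text", "button"
    text: str  # recognised text or label
    box: Box

# Illustrative (referring, grounding) task names per element category.
TASKS = {
    "icon": ("icon_recognition", "find_icon"),
    "text": ("ocr", "find_text"),
    "widget": ("widget_classification", "find_widget"),
}

def category(det: Detection) -> str:
    # Coarse grouping: icons, text, and every other (non-icon/text) widget.
    return det.kind if det.kind in ("icon", "text") else "widget"

def generate_samples(detections: List[Detection]) -> List[Dict[str, str]]:
    samples = []
    # Grounding sample built from all elements: list every widget on screen.
    listing = "; ".join(f"{d.kind} '{d.text}' {list(d.box)}" for d in detections)
    samples.append({"task": "widget_listing",
                    "question": "List all UI elements on the screen.",
                    "answer": listing})
    # Group the remaining tasks by element category.
    by_category: Dict[str, List[Detection]] = defaultdict(list)
    for d in detections:
        by_category[category(d)].append(d)
    for cat, items in by_category.items():
        target = items[0]  # assumption: first element; a real pipeline might sample
        referring, grounding = TASKS[cat]
        # Referring: the region is given, the model must say what is there.
        samples.append({"task": referring,
                        "question": f"What is the element at {list(target.box)}?",
                        "answer": f"{target.kind} '{target.text}'"})
        # Grounding: the element is described, the model must say where it is.
        samples.append({"task": grounding,
                        "question": f"Where is the {target.kind} '{target.text}'?",
                        "answer": str(list(target.box))})
    return samples
```

With detections covering all three categories, this produces seven samples: the widget listing plus a referring/grounding pair for icons, text and other widgets.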

Classification

The generated task data is shown below. Note the bounding box coordinates and the prompt labelled as the shared prompt, which is followed by the task prompt and a one-shot example.
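The prompt layout can be sketched as a simple concatenation. The article names the parts (shared prompt, task prompt, one-shot example) but does not reproduce their wording, so the strings below are placeholders, and the element-line format with `[x1, y1, x2, y2]` coordinates is an assumption.

```python
from typing import List, Tuple

Element = Tuple[str, str, Tuple[int, int, int, int]]  # (type, text, box)

# Placeholder texts: the article names these parts but does not quote them.
SHARED_PROMPT = "You are given the UI elements of a mobile screen with their bounding boxes."
TASK_PROMPT = "Classify the screen and explain your answer."
ONE_SHOT_EXAMPLE = ("Elements:\nbutton 'Sign in' [40, 600, 360, 660]\n"
                    "Answer: This is a login screen.")

def describe_elements(elements: List[Element]) -> str:
    # One line per element: type, text and (x1, y1, x2, y2) coordinates.
    return "\n".join(f"{kind} '{text}' {list(box)}" for kind, text, box in elements)

def build_prompt(elements: List[Element]) -> str:
    # Order follows the article: shared prompt, task prompt, one-shot example,
    # then the elements of the screen being annotated.
    parts = [
        SHARED_PROMPT,
        TASK_PROMPT,
        "Example:\n" + ONE_SHOT_EXAMPLE,
        "Elements:\n" + describe_elements(elements) + "\nAnswer:",
    ]
    return "\n\n".join(parts)
```

Keeping the shared prompt fixed and swapping only the task prompt and example is one plausible way to reuse a single template across tasks.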

In Conclusion

This study highlights a few interesting paradigm shifts:

NLU aims to enable machines to comprehend human language and respond appropriately. It focuses on structuring unstructured conversational input to understand the user’s meaning.

This structuring is essential for making sense of human conversation across various mediums, ensuring a Conversational UI can effectively process unstructured data.

The paradigm shift is from natural language understanding to mobile UI understanding, moving from comprehending conversational context to understanding the current context on a mobile screen.

Moving from understanding only conversations to understanding screens.

Gleaning context from screens and user interactions, as opposed to conversations only.

Ferret-UI can be considered a RAG implementation in which augmentation is performed not with retrieved documents but with retrieved screens.

Conversations are unstructured data, and part of a Conversational UI's job is to create structure around that data. In a similar fashion, Ferret-UI creates structure around what is displayed on the screen.
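To make the RAG analogy concrete, the toy sketch below treats screens, reduced to structured text, as the retrievable units: it picks the stored screen with the most word overlap with the user's query and prepends it as context. This illustrates the analogy only; Ferret-UI itself takes the screenshot as visual input, and the `Screen` type, the overlap scoring and the prompt layout are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set

@dataclass
class Screen:
    # Hypothetical: a screen reduced to structured text (name + element labels).
    name: str
    elements: List[str]

def _tokens(text: str) -> Set[str]:
    return set(text.lower().split())

def retrieve_screen(query: str, screens: List[Screen]) -> Screen:
    # Toy retriever: the screen sharing the most words with the query wins.
    q = _tokens(query)
    return max(screens, key=lambda s: len(q & _tokens(" ".join(s.elements))))

def augment(query: str, screens: List[Screen]) -> str:
    # Augmentation step: a retrieved screen, not a document, becomes the context.
    screen = retrieve_screen(query, screens)
    context = "\n".join(f"- {e}" for e in screen.elements)
    return f"Screen: {screen.name}\n{context}\n\nUser: {query}"
```

Swapping the word-overlap scorer for embedding similarity would be the usual next step in a document-based RAG system; the structure of retrieve-then-augment stays the same.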

Ferret-UI adds language to what is mapped on the screen, allowing context-rich, accurate, multi-turn conversations: a significant step up from the “single dialog-turn command and control” scenario.

Beyond adding a language layer to devices, Ferret-UI opens the way for other functionality, such as task orchestration based on user behaviour, anticipating the next interaction, user guidance, and more.

I’m currently the Chief Evangelist @ Kore.ai. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.